
Text data is everywhere: blogs, reviews, chat messages, emails, support tickets, meeting transcripts, and social media posts, just to name a few. But making sense of it at scale is tricky. Unlike the tidy spreadsheets and databases we often work with in data science, text data is messy, unstructured, and packed with human complexity that computers struggle to understand.

In this tutorial, we'll walk through a hands-on NLP task in PyTorch: classifying tweets according to whether they describe a real disaster or not. We'll learn how to tokenize text, load a pretrained model, fine-tune it, and evaluate its predictions, without heavy abstractions or advanced math.

By the end, you'll have built a complete NLP pipeline in PyTorch that can determine whether a tweet is describing a real disaster or not.

Why NLP Matters to Data Scientists

Natural Language Processing powers many common data science tasks:

- Sentiment analysis — Understanding customer opinions from reviews

- Content categorization — Automatically sorting support tickets or news articles

- Chatbots and virtual assistants — Powering conversational interfaces

- Information extraction — Pulling structured data from text documents

NLP is unique because text has a sequential structure and context; words build meaning based on their position and relationship to other words. Unlike tabular data, language must be interpreted in sequence, which requires specialized techniques.
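Here is a quick way to see why word order matters. A bag-of-words representation only counts words, so it cannot tell these two made-up sentences apart. A representation that keeps the sequence can.

```python
from collections import Counter

a = "dog bites man"
b = "man bites dog"

# Bag-of-words view: only word counts survive, so the sentences look identical
print(Counter(a.split()) == Counter(b.split()))  # True

# Sequence view: word order is preserved, and that is where the meaning differs
print(a.split() == b.split())  # False
```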

Our Disaster Tweets Dataset

We'll be working with a real-world dataset: classifying whether a tweet describes an actual disaster event or not. This dataset works well for learning NLP because:

- The texts are short and manageable

- The problem is binary classification (real disaster or not)

- The business relevance is clear (identifying actual emergency situations)

- The results are easy to interpret

For example, consider these tweets from the dataset:

- "Forest fire near La Ronge Sask. Canada" (Real disaster)

- "The sun is shining and I'm heading to the beach #disaster #notreally" (Not a real disaster)

Key NLP Concepts in PyTorch

Before we get into the code, let's quickly go over some key components of modern NLP systems.

Tokenization

Text needs to be converted into numbers before neural networks can process it. Tokenization breaks text into pieces (tokens) and assigns each piece a unique numeric ID. If you've experimented with large language models (LLMs) like ChatGPT, you've already encountered tokenization—these models use tokens internally to understand and generate text.

Modern tokenizers don't just split on spaces; they use subword tokenization methods that can handle:

- Rare words by breaking them into smaller fragments

- Misspellings by leveraging known subword pieces

- New or unknown words by combining familiar subwords

For example, the word "preprocessing" might be broken into tokens such as "pre", "process", and "ing", each receiving its own numeric token ID. Large language models like GPT-4 use similar techniques, breaking down input text into tokens to help the model efficiently handle vast vocabularies.
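BERT-family tokenizers use a subword method called WordPiece. Its splitting step can be sketched as a greedy longest-match-first search over a vocabulary. The pure-Python version below is a simplified illustration, not Hugging Face's implementation, and its tiny vocabulary and IDs are made up. It follows BERT's convention (visible later in tokens such as `##yt` and `##p`) of marking pieces that continue a word with a `##` prefix.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Greedy longest-match-first subword split in the style of WordPiece.
    Pieces after the first carry a '##' prefix, as in BERT vocabularies."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it one character at a time
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            # No vocabulary entry covers this position, so the whole word is unknown
            return [unk_token]
        tokens.append(piece)
        start = end
    return tokens


# A tiny, hypothetical vocabulary with made-up IDs (not DistilBERT's real vocab)
toy_vocab = {"[UNK]": 0, "pre": 1, "##process": 2, "##ing": 3,
             "process": 4, "##ed": 5, "flood": 6}

pieces = wordpiece_tokenize("preprocessing", toy_vocab)
ids = [toy_vocab[p] for p in pieces]
print(pieces)  # ['pre', '##process', '##ing']
print(ids)     # [1, 2, 3]
```

A word with no matching pieces falls back to the unknown token `[UNK]`. Real vocabularies include individual characters as pieces, which makes this fallback rare in practice.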

Embeddings and Vectorization

Once we have token IDs, we need a way to represent them that captures meaning. Embeddings are dense vector representations of tokens that place similar words close together in a high-dimensional space.

To understand embeddings, imagine a multi-dimensional space where each word has its own unique position. In this embedding space:

- Words with similar meanings appear close together

- Words with opposite meanings are far apart

- Relationships between words are preserved as directions

For example, in a well-trained embedding space:

- "King" - "Man" + "Woman" ≈ "Queen" (capturing gender relationships)

- "Paris" - "France" + "Italy" ≈ "Rome" (capturing capital-country relationships)

A word like "disaster" might be represented as a vector of 768 floating-point numbers, with similar concepts like "catastrophe" having a vector that's nearby in this embedding space. Words like "tornado", "earthquake", and "flood" would cluster in a nearby region, while unrelated words like "sunshine" or "birthday" would be further apart.

These rich numerical representations allow models to understand semantic relationships far better than simple one-hot encodings where every word is equally different from every other word.
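Closeness in an embedding space is usually measured with cosine similarity. The sketch below uses three hand-picked 3-dimensional vectors as hypothetical stand-ins for learned embeddings. Real DistilBERT vectors have 768 dimensions and are learned during pretraining. The sketch then compares these with one-hot vectors, where every pair of different words has a similarity of exactly 0.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 = same direction, 0.0 = unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical 3-d embeddings, hand-picked for illustration only
emb = {
    "disaster":    [0.90, 0.80, 0.10],
    "catastrophe": [0.85, 0.75, 0.20],
    "birthday":    [0.10, 0.20, 0.95],
}

# One-hot encodings over the same 3-word vocabulary
one_hot = {
    "disaster":    [1, 0, 0],
    "catastrophe": [0, 1, 0],
    "birthday":    [0, 0, 1],
}

for other in ("catastrophe", "birthday"):
    print(f"embedding: disaster vs {other}: {cosine_similarity(emb['disaster'], emb[other]):.3f}")
    print(f"one-hot:   disaster vs {other}: {cosine_similarity(one_hot['disaster'], one_hot[other]):.3f}")
```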

Transformers in NLP

Once tokens have been converted into meaningful embeddings, the next step is to use an architecture that can effectively leverage these embeddings. Transformers are the dominant architecture powering modern NLP and large language models (LLMs), including GPT models (the "T" in GPT stands for "Transformer"). Unlike older architectures such as recurrent neural networks, which read text one token at a time, transformers process text using a mechanism called self-attention, enabling them to:

- Analyze all words simultaneously, dramatically speeding up training and inference

- Capture complex relationships between words regardless of their distance in a sentence

- Understand subtle context and nuance more effectively
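The sketch below makes self-attention concrete with a minimal pure-Python version of single-head scaled dot-product attention. For simplicity it assumes the query, key, and value vectors are just the token vectors themselves. Real transformers such as DistilBERT differ in three ways:

- They compute queries, keys, and values with learned projection matrices.
- They use several attention heads.
- They run everything as batched matrix multiplications rather than Python loops.

The three 2-dimensional token vectors are made up.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Single-head scaled dot-product self-attention with Q = K = V = x."""
    d = len(x[0])
    outputs, weights = [], []
    for q in x:  # every token attends to every token, including itself
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # Each output vector is the attention-weighted average of the value vectors
        outputs.append([sum(wj * v[i] for wj, v in zip(w, x)) for i in range(d)])
    return outputs, weights

# Three hypothetical 2-d token vectors: the first two are similar, the third is not
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
out, attn = self_attention(tokens)
for row in attn:
    print([round(w, 3) for w in row])
```

Each row of weights sums to 1 and describes how much one token draws on every token in the sequence. The first token leans on the similar second token more than on the dissimilar third one. Position plays no part in that choice, which is why distance in the sentence is not a barrier.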

In this tutorial, we'll use a transformer model called DistilBERT, a compact, efficient version of the widely used BERT model. DistilBERT has about 40% fewer parameters than BERT while retaining most of its performance.

Transfer Learning in NLP

A significant advantage of transformer-based models is their compatibility with transfer learning, a technique central to modern NLP. Transfer learning involves taking a model already pre-trained on massive text datasets (the "P" in GPT stands for "Pre-trained") and fine-tuning it on specific NLP tasks like sentiment analysis, question answering, or disaster tweet classification.

This approach is powerful because it:

- Reduces the need for large task-specific datasets

- Dramatically shortens training times

- Leads to improved performance, especially with smaller datasets

For example, ChatGPT—a fine-tuned version of GPT-3.5 or GPT-4—leverages transfer learning to adapt a general-purpose language model to specific conversational tasks. Similarly, we'll leverage transfer learning with DistilBERT in this tutorial, fine-tuning its pretrained weights to classify tweets as disaster-related or not, dramatically simplifying the training process.

Pretrained Models and the Hugging Face Hub

Hugging Face has become the central hub for NLP models, providing a unified API for thousands of pretrained models. Their transformers library makes it easy to load, fine-tune, and deploy state-of-the-art models with just a few lines of code.

The model we'll be using comes with:

- A pretrained DistilBERT base that understands language patterns

- A classification head added on top, which we'll train (fine-tune) for our specific task

Okay, with that all out of the way, let's start building our disaster tweet classifier!

Preparing the Dataset

Let's begin by loading the necessary libraries and datasets. If you're using Google Colab to code along, the required libraries are already installed. If you're running this locally, you might need to install them first:

```python
# Uncomment and run if needed
# !pip install pandas numpy matplotlib seaborn torch scikit-learn
```

Now, let's import the necessary libraries and load our train and test datasets.

```python
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns
import re
import random
import torch
from torch.utils.data import DataLoader, TensorDataset
import torch.nn.functional as F
from sklearn.model_selection import train_test_split
from sklearn.metrics import classification_report, f1_score

# For reproducibility across both CPU and GPU
SEED = 42
torch.manual_seed(SEED)
np.random.seed(SEED)
random.seed(SEED)

# Additional seeds for CUDA operations
if torch.cuda.is_available():
    torch.cuda.manual_seed(SEED)
    torch.cuda.manual_seed_all(SEED)  # for multi-GPU setups
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False

# Set Pandas display options
pd.set_option('display.max_colwidth', 100)

# Load the datasets
train_df = pd.read_csv('train.csv')
test_df = pd.read_csv('test.csv')

# Take a first look at the data
print(f"Training set shape: {train_df.shape}")
print(f"Test set shape: {test_df.shape}")
train_df.head()
```

Expected output:

```
Training set shape: (7613, 5)
Test set shape: (3263, 4)

   id keyword location text target
0   1 NaN NaN Our Deeds are the Reason of this #earthquake M... 1
1   4 NaN NaN Forest fire near La Ronge Sask. Canada 1
2   5 NaN NaN All residents asked to 'shelter in place' are ... 1
3   6 NaN NaN 13,000 people receive #wildfires evacuation or... 1
4   7 NaN NaN Just got sent this photo from Ruby #Alaska as ... 1
```

Let's see what columns we have and what they contain:

```python
# Check for missing values
print("Missing values per column:")
print(train_df.isnull().sum())

# Target distribution
print("\nTarget distribution:")
print(train_df['target'].value_counts())
```

Expected output:

```
Missing values per column:
id 0
keyword 61
location 2533
text 0
target 0
dtype: int64

Target distribution:
0 4342
1 3271
Name: target, dtype: int64
```

We can see that:

- Our dataset has 7,613 tweets with labeled targets

- The target is binary (0 = not a disaster, 1 = real disaster)

- We have more non-disaster tweets (4,342) than disaster tweets (3,271)

- Many tweets are missing `location` and some are missing `keyword` information

- Since we're only interested in `text` and `target`, we don't need to worry about handling missing data

Exploring the Data with Word Clouds

Let's visualize our text data using word clouds to better understand the content of disaster and non-disaster tweets. If you're running this code locally, you'll likely need to install these required libraries before continuing:

```python
# Uncomment and run if needed
# !pip install nltk wordcloud
```

Now, let's create word clouds for each class:

```python
import nltk
from nltk.corpus import stopwords
from wordcloud import WordCloud

# Download stopwords if needed
nltk.download('stopwords')
stop_words = set(stopwords.words('english'))

def create_wordcloud(text_series, title):
    # Combine all text
    text = ' '.join(text_series)

    # Create and generate a word cloud image
    wordcloud = WordCloud(width=800, height=400,
                          background_color='white',
                          stopwords=stop_words,
                          max_words=150,
                          collocations=False).generate(text)

    # Display the word cloud
    plt.figure(figsize=(10, 5))
    plt.imshow(wordcloud, interpolation='bilinear')
    plt.axis("off")
    plt.title(title)
    plt.show()

# Create word clouds for each class
create_wordcloud(train_df[train_df['target'] == 1]['text'],
                 'Words in Disaster Tweets')
print()
create_wordcloud(train_df[train_df['target'] == 0]['text'],
                 'Words in Non-Disaster Tweets')
```

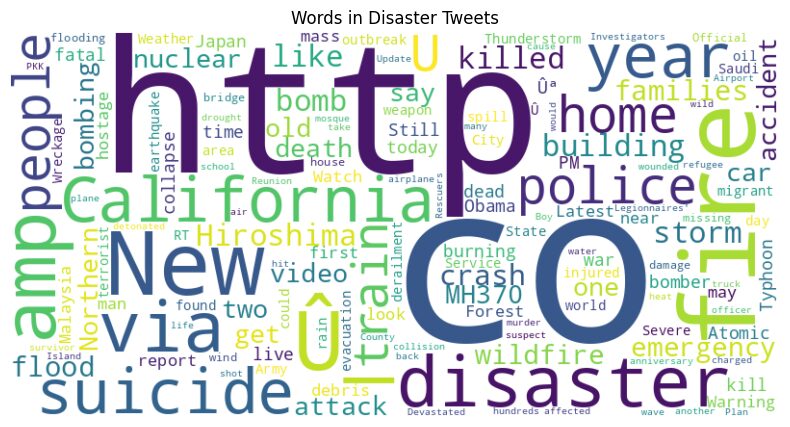

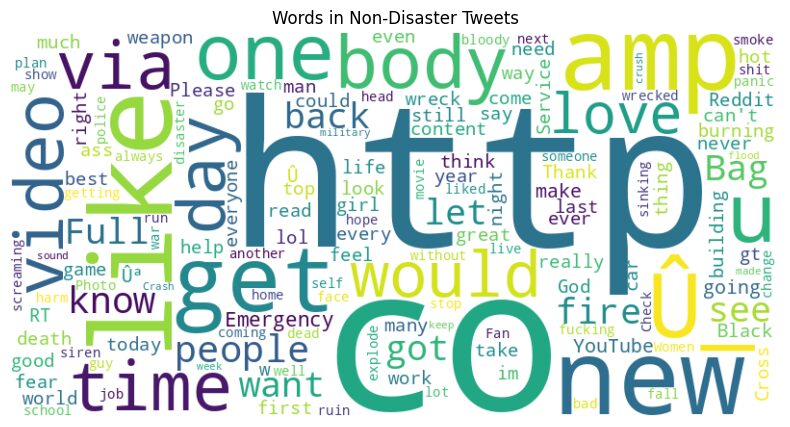

Looking at these word clouds, we can notice several interesting patterns:

- Disaster tweets (top image) prominently feature words like "disaster", "fire", "police", "killed", "flood", "emergency", and "bomb" — terms clearly associated with catastrophic events.

- Non-disaster tweets (bottom image) contain more everyday terms like "like", "get", "new", "day", "time", "go", and "people".

- Encoding issues: Both word clouds show "Û" characters, which are encoding errors that happen when special characters aren't properly decoded. This suggests we need to handle character encoding in our preprocessing.

- URLs and Twitter-specific content: Both clouds show "http" and "co" (from "http://t.co/..." links), suggesting we need to remove URLs during preprocessing.

- RT and amp: We see Twitter-specific terms like "RT" (retweet) and "amp" (from HTML encoded "&" symbols) that don't add value for classification.

These insights will inform our text preprocessing strategy.
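A word cloud is a visual summary of word frequencies, so the same observations can be checked numerically. The sketch below counts words with `collections.Counter` on three made-up tweets, using a tiny hypothetical stop-word set in place of the NLTK English list the tutorial uses. On the real data, you would pass `train_df[train_df['target'] == 1]['text']` and `stop_words` instead.

```python
import re
from collections import Counter

def top_words(texts, stop_words, n=5):
    """Return the n most frequent lowercase alphabetic tokens, ignoring stop words."""
    counts = Counter()
    for text in texts:
        for word in re.findall(r"[a-z]+", text.lower()):
            if word not in stop_words:
                counts[word] += 1
    return counts.most_common(n)

# Hypothetical sample tweets and a tiny stand-in stop-word set
sample = [
    "Forest fire near La Ronge Sask. Canada http://t.co/abc",
    "Fire crews battle wildfire http://t.co/xyz",
    "RT @user: fire drill at school today &amp; all fine",
]
tiny_stops = {"at", "near", "all", "la"}

print(top_words(sample, tiny_stops, n=5))
```

Even on three tweets, URL fragments ("http", "co") and HTML-entity leftovers ("amp") show up as "words". This is the same noise the word clouds revealed.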

Basic Text Preprocessing

Based on our word cloud analysis, we need to clean our text data before feeding it to the model. Even though modern transformers are quite robust, we should address several issues:

```python
def clean_text(text):
    """Basic text cleaning function"""
    # Handle encoding errors like 'Û' (before lowercasing, which would turn it into 'û')
    text = re.sub(r'Û', '', text)

    # Convert to lowercase
    text = text.lower()

    # Remove URLs
    text = re.sub(r'https?://\S+|www\.\S+', '', text)

    # Remove Twitter-specific content
    text = re.sub(r'@\w+', '', text)    # Remove mentions
    text = re.sub(r'#', '', text)       # Remove hashtag symbols but keep the words
    text = re.sub(r'\brt\b', '', text)  # Remove standalone RT (retweet) markers, not "rt" inside words like "airport"

    # Remove HTML entities (like &amp;)
    text = re.sub(r'&\w+;', '', text)

    # Remove HTML tags
    text = re.sub(r'<.*?>', '', text)

    # Remove special characters and digits
    text = re.sub(r'[^\w\s]', '', text)
    text = re.sub(r'\d+', '', text)

    # Remove extra whitespace
    text = re.sub(r'\s+', ' ', text).strip()
    return text

# Apply cleaning to the text column
train_df['cleaned_text'] = train_df['text'].apply(clean_text)
test_df['cleaned_text'] = test_df['text'].apply(clean_text)

# Display a few examples of cleaned text
print("Original vs Cleaned:")
for i in range(3):
    print(f"Original: {train_df['text'].iloc[i]}")
    print(f"Cleaned: {train_df['cleaned_text'].iloc[i]}")
    print()
```

Expected output:

```
Original vs Cleaned:
Original: Our Deeds are the Reason of this #earthquake May ALLAH Forgive us all
Cleaned: our deeds are the reason of this earthquake may allah forgive us all

Original: Forest fire near La Ronge Sask. Canada
Cleaned: forest fire near la ronge sask canada

Original: All residents asked to 'shelter in place' are being notified by officers. No other evacuation or shelter in place orders are expected
Cleaned: all residents asked to shelter in place are being notified by officers no other evacuation or shelter in place orders are expected
```

This preprocessing addresses the issues we identified in the word clouds:

- Removes URLs (including "http" and "t.co" links)

- Eliminates Twitter-specific content like mentions and RT indicators

- Handles encoding issues such as "Û" characters

- Removes special characters, numbers, and extra whitespace

- Converts all text to lowercase for consistency
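Cleaning rules like these are worth testing on handcrafted inputs. A retweet pattern such as `rt\s+` has no word boundaries, so it also fires inside ordinary words ending in "rt", such as "airport" or "report". The sketch below compares it with a `\b`-anchored version on a made-up, already-lowercased tweet.

```python
import re

# Made-up tweet, already lowercased as it would be at this point in cleaning
sample = "rt @user: airport report says fire is out"

# No word boundaries: "rt" followed by whitespace also matches inside "airport " and "report "
naive = re.sub(r'rt\s+', '', sample)

# \b requires "rt" to be a standalone word, so ordinary words are left intact
bounded = re.sub(r'\brt\b\s*', '', sample)

print(naive)    # @user: airporeposays fire is out
print(bounded)  # @user: airport report says fire is out
```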

Turning Text into Tensors

Now we'll convert our text data into a format that PyTorch can process. To do this, we'll use the Hugging Face transformers library, which has become the standard toolkit for working with transformer models in NLP.

If you're running this code locally, you'll need to install the transformers library:

```python
# Uncomment and run if needed
# !pip install transformers
```

Now let's set up our tokenizer:

```python
from transformers import DistilBertTokenizer

# Load pretrained tokenizer
tokenizer = DistilBertTokenizer.from_pretrained('distilbert-base-uncased')

# Example of tokenization
example_text = "PyTorch is great for NLP"
tokens = tokenizer.tokenize(example_text)
token_ids = tokenizer.encode(example_text)

print(f"Original text: {example_text}")
print(f"Tokens: {tokens}")
print(f"Token IDs: {token_ids}")
```

Expected output:

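```
Original text: PyTorch is great for NLP
Tokens: ['p', '##yt', '##or', '##ch', 'is', 'great', 'for', 'nl', '##p']
Token IDs: [101, 1052, 22123, 2953, 2818, 2003, 2307, 2005, 17953, 2361, 102]
```

There are two more IDs than tokens because `encode` wraps the sequence in the special tokens [CLS] (ID 101) and [SEP] (ID 102). The `##` prefix marks a piece that continues the previous word.

Let's create a function to tokenize our text data using the DistilBertTokenizer from above: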

```python
def tokenize_text(texts, tokenizer, max_length=128):
    """
    Tokenize a list of texts using the provided tokenizer
    Returns input IDs and attention masks
    """
    # Tokenize all texts at once
    encodings = tokenizer(
        list(texts),
        max_length=max_length,
        padding='max_length',
        truncation=True,
        return_tensors='pt'
    )
    return encodings['input_ids'], encodings['attention_mask']
```

Understanding Attention Masks

When we tokenize our texts using the function above, we get two important outputs:

- Input IDs: These are the numerical representations of our tokens. Each word or subword is converted to a unique number according to the tokenizer's vocabulary.

- Attention Masks: These are binary tensors (containing only 0s and 1s) that tell the model which tokens to "pay attention to" and which to ignore.

Why do we need attention masks? Because we process tweets in batches, and tweets have different lengths. To make a batch where all sequences have the same length, we add padding tokens to shorter sequences. The attention mask has 1’s for real tokens and 0’s for padding tokens, telling the model: "Focus on the real content, ignore the padding."

For example, if we have a tweet that's 10 tokens long but we're padding to 128 tokens, the attention mask would look like:

```
[1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, ..., 0]
```

This mechanism is crucial for transformer models as it ensures they don't try to extract meaning from the artificial padding tokens.
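The sketch below is a minimal pure-Python version of what `padding='max_length'` produces, using token IDs borrowed from the tokenizer example above. It assumes a padding ID of 0, which is what BERT-style uncased vocabularies use; in real code, read it from `tokenizer.pad_token_id`. The sketch is a simplification in one respect: the real tokenizer truncates the content and then appends [SEP], whereas this naive slice could cut [SEP] off.

```python
def pad_and_mask(token_id_lists, max_length, pad_id=0):
    """Pad (or naively truncate) each ID list to max_length and build its attention mask."""
    input_ids, attention_masks = [], []
    for ids in token_id_lists:
        # Naive truncation: unlike the real tokenizer, this can drop the final [SEP] (102)
        ids = ids[:max_length]
        n_pad = max_length - len(ids)
        input_ids.append(ids + [pad_id] * n_pad)
        # 1 = real token the model should attend to, 0 = padding it should ignore
        attention_masks.append([1] * len(ids) + [0] * n_pad)
    return input_ids, attention_masks

# "is great" and "great for nlp", each wrapped in [CLS] (101) ... [SEP] (102)
batch = [
    [101, 2003, 2307, 102],
    [101, 2307, 2005, 17953, 2361, 102],
]
ids, masks = pad_and_mask(batch, max_length=8)
for row_ids, row_mask in zip(ids, masks):
    print(row_ids)
    print(row_mask)
```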

Creating Datasets and DataLoaders

Next, we need to split our data into training and validation sets, and prepare them for training. This involves several steps:

- Splitting the data: We separate our dataset into training data (what the model learns from) and validation data (what we use to evaluate the model's performance)

- Tokenizing the text: We convert all text to token IDs and attention masks

- Creating DataLoaders: We set up efficient pipelines to feed data to our model during training

This process deserves our attention because it:

- Helps prevent overfitting by providing separate evaluation data

- Enables batch processing for faster training

- Standardizes input shapes for the model

Let's implement these steps:

```python
# Split data into train and validation sets
train_texts, val_texts, train_targets, val_targets = train_test_split(
    train_df['cleaned_text'].values,
    train_df['target'].values,
    test_size=0.1,
    random_state=42,
    stratify=train_df['target']  # Maintain class distribution
)

print(f"Training texts: {len(train_texts)}")
print(f"Validation texts: {len(val_texts)}")

# Set the batch size for efficient training
batch_size = 16

# Process training data
train_input_ids, train_attention_masks = tokenize_text(train_texts, tokenizer)
train_targets = torch.tensor(train_targets, dtype=torch.long)

# Process validation data
val_input_ids, val_attention_masks = tokenize_text(val_texts, tokenizer)
val_targets = torch.tensor(val_targets, dtype=torch.long)

# Create tensor datasets
train_dataset = TensorDataset(
    train_input_ids,
    train_attention_masks,
    train_targets
)
val_dataset = TensorDataset(
    val_input_ids,
    val_attention_masks,
    val_targets
)

# Create dataloaders
train_loader = DataLoader(
    train_dataset,
    batch_size=batch_size,
    shuffle=True
)
val_loader = DataLoader(
    val_dataset,
    batch_size=batch_size
)

# Look at a single batch
batch = next(iter(train_loader))
input_ids, attention_mask, targets = batch
print(f"Input IDs shape: {input_ids.shape}")
print(f"Attention mask shape: {attention_mask.shape}")
print(f"Targets shape: {targets.shape}")
```

Expected output:

```
Training texts: 6851
Validation texts: 762
Input IDs shape: torch.Size([16, 128])
Attention mask shape: torch.Size([16, 128])
Targets shape: torch.Size([16])
```

This output tells us:

- We have 6,851 tweets for training and 762 for validation

- Each batch contains 16 tweets (our chosen batch size)

- Each tweet is represented by 128 tokens (including padding)

- The attention masks match the input shape, with 1’s for real tokens and 0’s for padding

- Our targets are simply 0 or 1 for each tweet (non-disaster or disaster)
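The sketch below is a minimal standard-library stand-in for how `DataLoader` groups the training set into batches. It is not PyTorch's implementation, but it reproduces the default behavior that matters here. Indices are shuffled and then cut into consecutive chunks of `batch_size`. The final chunk is kept even when it is smaller, because `drop_last` defaults to `False`.

```python
import math
import random

def iterate_batches(n_examples, batch_size, shuffle=True, seed=42):
    """Yield lists of example indices, grouped the way a DataLoader groups a dataset."""
    indices = list(range(n_examples))
    if shuffle:
        # Fixed seed for this demo; DataLoader(shuffle=True) draws a new order every epoch
        random.Random(seed).shuffle(indices)
    # Consecutive chunks; the last one is kept even if smaller (like drop_last=False)
    for start in range(0, n_examples, batch_size):
        yield indices[start:start + batch_size]

batches = list(iterate_batches(6851, 16))
print(len(batches), math.ceil(6851 / 16))  # batches per epoch
print(len(batches[0]), len(batches[-1]))   # a full batch vs. the smaller final batch
```

With 6,851 training tweets and a batch size of 16, one epoch has 429 batches, and the last one holds only 3 tweets. That batch count is what `len(data_loader)` returns. Dividing the summed batch losses by it therefore gives a mean over batches, not over individual tweets.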

Fine-Tuning a Pretrained Transformer

Now we're ready to load a pretrained transformer model and fine-tune it for our disaster tweet classification task. When choosing a transformer model, you should consider factors like model size, speed of inference, task complexity, and the computational resources available. For classifying tweets—a relatively straightforward, short-text task—a smaller, efficient transformer like DistilBERT is ideal because it balances speed and accuracy without requiring extensive resources.

Let's start by setting up our model:

```python
from transformers import DistilBertForSequenceClassification

# Set device
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(f"Using device: {device}")

# Load pretrained model
model = DistilBertForSequenceClassification.from_pretrained(
    'distilbert-base-uncased',
    num_labels=2  # Binary classification
)

# Move model to device
model = model.to(device)

# Set up optimizer
optimizer = torch.optim.AdamW(model.parameters(), lr=2e-5)
```

Expected output:

```
Using device: cuda
model.safetensors: 100%
268M/268M [00:01<00:00, 257MB/s]
Some weights of DistilBertForSequenceClassification were not initialized from the model checkpoint at distilbert-base-uncased and are newly initialized: ['classifier.bias', 'classifier.weight', 'pre_classifier.bias', 'pre_classifier.weight']
You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.
```

This output tells us a few important things:

- We're using a GPU for training (Thanks, Google Colab!)

- The pretrained weights (the 268 MB `model.safetensors` file) downloaded and loaded successfully

- Some model weights are newly initialized - specifically the classification layers we added on top of the base model

- The model needs training before we can use it for predictions (which is exactly what we're about to do!)
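The two newly initialized layers named in the warning, `pre_classifier` and `classifier`, form the classification head. To our understanding of the Hugging Face implementation, the head works in four steps:

1. It takes the final hidden vector of the first ([CLS]) token.
2. It passes that vector through `pre_classifier` (768 → 768).
3. It applies a ReLU and then dropout.
4. It passes the result through `classifier` (768 → `num_labels`).

The pure-Python sketch below mirrors that flow with a toy hidden size of 8, random weights standing in for the untrained layers, and no dropout. It illustrates the shapes involved, not the library's code.

```python
import random

def linear(x, weights, bias):
    """y = W x + b for a single vector x (weights is a list of output rows)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(v):
    return [max(0.0, vi) for vi in v]

def random_layer(n_in, n_out, rng):
    # Stand-in for the newly initialized (untrained) weights reported in the warning
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    bias = [0.0] * n_out
    return weights, bias

hidden = 8       # DistilBERT's real hidden size is 768; 8 keeps the example readable
num_labels = 2   # not disaster / disaster
rng = random.Random(0)

pre_classifier = random_layer(hidden, hidden, rng)
classifier = random_layer(hidden, num_labels, rng)

# Hypothetical final-layer vector for the first ([CLS]) token of one tweet
cls_vector = [rng.uniform(-1, 1) for _ in range(hidden)]

# pre_classifier -> ReLU -> classifier (dropout omitted; it is inactive at inference anyway)
logits = linear(relu(linear(cls_vector, *pre_classifier)), *classifier)
print(logits)  # two untrained scores; fine-tuning adjusts these weights
```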

Understanding F1 Score

Before we begin training, let's understand how we'll evaluate our model. We'll use the F1 score, which is a popular metric for classification tasks, especially with imbalanced classes.

The F1 score is the harmonic mean of precision and recall:

- Precision: Of all the tweets we predicted as disasters, how many were actually disasters?

- Recall: Of all actual disaster tweets, how many did we correctly identify?

$$F_1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

We use F1 instead of just accuracy because:

- It balances precision and recall, which is important for disaster detection

- It works well with imbalanced datasets (where one class appears more than the other)

- It penalizes both false positives and false negatives
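The formula is easy to verify by hand. The sketch below counts true positives, false positives, and false negatives for ten hypothetical predictions, then computes the metrics directly. For 0/1 labels, it should agree with `sklearn.metrics.f1_score` (used later in this tutorial) at its default settings. The second example shows why F1 is preferred over accuracy. A lazy model that never predicts "disaster" still reaches 60% accuracy on these labels, but its F1 is 0.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for binary labels, computed from raw counts."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall; defined as 0 when both are 0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1

# Ten hypothetical tweets: 1 = disaster, 0 = not a disaster
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
model_preds = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]  # one missed disaster, one false alarm
lazy_preds = [0] * 10                         # always answers "not a disaster"

print(binary_metrics(y_true, model_preds))  # accuracy 0.8; precision, recall, F1 all 0.75
print(binary_metrics(y_true, lazy_preds))   # accuracy 0.6; F1 0.0
```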

Training the Model

Now, let's implement our training loop. The training process typically takes about 3-5 minutes on a GPU or a couple of hours on a CPU:

```python
# Training function
def train_epoch(model, data_loader, optimizer, device):
    model.train()
    total_loss = 0
    correct_predictions = 0
    total_predictions = 0

    for batch in data_loader:
        # Unpack and move batch to device
        input_ids, attention_mask, targets = batch
        input_ids = input_ids.to(device)
        attention_mask = attention_mask.to(device)
        targets = targets.to(device)

        # Forward pass
        optimizer.zero_grad()
        outputs = model(
            input_ids=input_ids,
            attention_mask=attention_mask,
            labels=targets
        )
        loss = outputs.loss
        logits = outputs.logits

        # Backward pass
        loss.backward()
        optimizer.step()

        total_loss += loss.item()

        # Calculate accuracy
        _, preds = torch.max(logits, dim=1)
        correct_predictions += torch.sum(preds == targets)
        total_predictions += len(targets)

    # Calculate average loss and accuracy
    avg_loss = total_loss / len(data_loader)
    accuracy = correct_predictions.double() / total_predictions
    return avg_loss, accuracy

# Evaluation function
def evaluate(model, data_loader, device):
    model.eval()
    total_loss = 0
    correct_predictions = 0
    total_predictions = 0
    all_targets = []
    all_preds = []

    with torch.no_grad():
        for batch in data_loader:
            # Unpack and move batch to device
            input_ids, attention_mask, targets = batch
            input_ids = input_ids.to(device)
            attention_mask = attention_mask.to(device)
            targets = targets.to(device)

            # Forward pass
            outputs = model(
                input_ids=input_ids,
                attention_mask=attention_mask,
                labels=targets
            )
            loss = outputs.loss
            logits = outputs.logits
            total_loss += loss.item()

            # Calculate accuracy
            _, preds = torch.max(logits, dim=1)
            correct_predictions += torch.sum(preds == targets)
            total_predictions += len(targets)

            # Store targets and predictions for F1 score
            all_targets.extend(targets.cpu().numpy())
            all_preds.extend(preds.cpu().numpy())

    # Calculate average loss, accuracy, and F1
    avg_loss = total_loss / len(data_loader)
    accuracy = correct_predictions.double() / total_predictions
    f1 = f1_score(all_targets, all_preds)
    return avg_loss, accuracy, f1

# Training loop
epochs = 3
best_f1 = 0

for epoch in range(epochs):
    print(f"Epoch {epoch + 1}/{epochs}")

    # Train
    train_loss, train_acc = train_epoch(model, train_loader, optimizer, device)
    print(f"Train Loss: {train_loss:.4f}, Train Accuracy: {train_acc:.4f}")

    # Evaluate
    val_loss, val_acc, val_f1 = evaluate(model, val_loader, device)
    print(f"Val Loss: {val_loss:.4f}, Val Accuracy: {val_acc:.4f}, Val F1: {val_f1:.4f}")

    if val_f1 > best_f1:
        best_f1 = val_f1
        # In a real scenario, we'd save the model here
        # torch.save(model.state_dict(), "best_model.pt")

    print()
```

Expected output:

```
Epoch 1/3
Train Loss: 0.4351, Train Accuracy: 0.8097
Val Loss: 0.3737, Val Accuracy: 0.8491, Val F1: 0.8130

Epoch 2/3
Train Loss: 0.3300, Train Accuracy: 0.8678
Val Loss: 0.3899, Val Accuracy: 0.8399, Val F1: 0.8045

Epoch 3/3
Train Loss: 0.2410, Train Accuracy: 0.9085
Val Loss: 0.4127, Val Accuracy: 0.8451, Val F1: 0.8072
```

Looking at these results, we can see:

- Training Loss and Accuracy: As expected, the training metrics steadily improve as the model learns. By epoch 3, the model achieves close to 91% accuracy on the training data.

- Validation Performance: The validation metrics tell a more complex story. While accuracy stays relatively stable (around 84-85%), we see some fluctuation in the F1 score, with the best value achieved after the first epoch.

- Potential Overfitting: The increasing gap between training and validation metrics (especially loss) suggests the model is starting to overfit to the training data. The fact that validation F1 slightly decreases after epoch 1 indicates that we may want to look into applying some regularization in a production scenario.
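Early stopping is one simple response to that overfitting. The rule is to keep the checkpoint with the best validation F1 and stop once F1 has failed to improve for a set number of epochs, called the patience. The class below is a hypothetical helper written for illustration; it is not part of PyTorch or Hugging Face `transformers`. The sketch replays it on the three validation F1 scores printed above.

```python
class EarlyStopping:
    """Hypothetical helper: signal a stop when a higher-is-better metric
    has not improved for `patience` consecutive epochs."""

    def __init__(self, patience=1, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("-inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, metric, epoch):
        """Record one epoch's metric; return True if training should stop."""
        if metric > self.best + self.min_delta:
            self.best = metric
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


stopper = EarlyStopping(patience=1)
for epoch, val_f1 in enumerate([0.8130, 0.8045, 0.8072], start=1):
    if stopper.step(val_f1, epoch):
        print(f"Stopping after epoch {epoch}; best F1 {stopper.best:.4f} at epoch {stopper.best_epoch}")
        break
```

With `patience=1`, training would have stopped after epoch 2 and kept the epoch-1 weights (F1 0.8130). In the tutorial's loop, you could call `stopper.step(val_f1, epoch + 1)` right after `evaluate(...)`, then save the model whenever `stopper.best_epoch` equals the current epoch. When `step` returns `True`, `break` out of the loop.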

Evaluating the Results

Now that we've trained our model, let's evaluate it more thoroughly on the validation set to understand its strengths and limitations.

```python
# Detailed evaluation
model.eval()
all_targets = []
all_preds = []
all_probs = []  # For prediction probabilities

with torch.no_grad():
    for batch in val_loader:
        # Unpack and move batch to device
        input_ids, attention_mask, targets = batch
        input_ids = input_ids.to(device)
        attention_mask = attention_mask.to(device)
        targets = targets.to(device)

        # Forward pass
        outputs = model(
            input_ids=input_ids,
            attention_mask=attention_mask
        )
        logits = outputs.logits
        probs = F.softmax(logits, dim=1)

        # Get predictions
        _, preds = torch.max(logits, dim=1)

        # Store targets, predictions, and probabilities
        all_targets.extend(targets.cpu().numpy())
        all_preds.extend(preds.cpu().numpy())
        all_probs.extend(probs[:, 1].cpu().numpy())  # For positive class

# Classification report
print(classification_report(all_targets, all_preds, target_names=['Not Disaster', 'Disaster']))

# Confusion matrix
cm = pd.crosstab(
    pd.Series(all_targets, name='Actual'),
    pd.Series(all_preds, name='Predicted')
)
plt.figure(figsize=(8, 6))
sns.heatmap(cm, annot=True, fmt='d', cmap='Blues')
plt.title('Confusion Matrix')
plt.show()
```

Expected output:

precision recall f1-score support

Not Disaster 0.83 0.91 0.87 435

Disaster 0.87 0.76 0.81 327

accuracy 0.85 762

macro avg 0.85 0.83 0.84 762

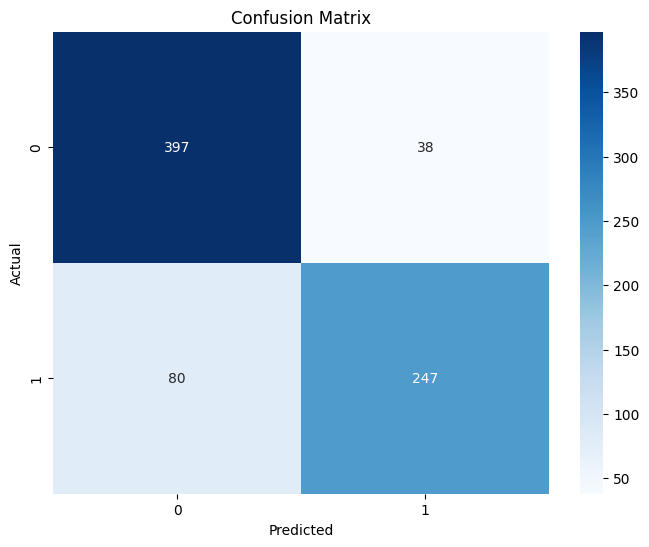

weighted avg 0.85 0.85 0.84 762The classification report and confusion matrix provide valuable insights:

- Overall Accuracy: Our model achieves 85% accuracy on the validation set, which is quite good for a complex NLP task with minimal preprocessing.

- Class Performance: The model performs slightly better on "Not Disaster" tweets (F1 score of 0.87) than on "Disaster" tweets (F1 score of 0.81).

- Confusion Matrix Analysis: Looking at the confusion matrix, we see:

  - 397 true negatives (correctly identified non-disaster tweets)

  - 247 true positives (correctly identified disaster tweets)

  - 38 false positives (non-disaster tweets incorrectly flagged as disasters)

  - 80 false negatives (missed disaster tweets)

- Error Type Implications: The model misses real disasters (80 false negatives) more than twice as often as it raises false alarms (38 false positives). In a real-world disaster monitoring system, this trade-off might need adjustment based on the relative costs of missing a real emergency versus investigating a false alarm.
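One common way to make that adjustment is to change the decision threshold on the predicted disaster probability. Taking the larger logit, as the code above does, amounts to a fixed 0.5 cut-off. The plain-Python sketch below works in two steps:

1. It recomputes precision and recall from the validation counts above.
2. It sweeps a few thresholds over eight hypothetical probabilities.

In the tutorial's code, the real probabilities are in the `all_probs` list collected during evaluation. Choose any threshold on validation data, never on the test set.

```python
def confusion_counts(y_true, probs, threshold=0.5):
    """Return (tp, fp, fn, tn) when 'disaster' is predicted for prob >= threshold."""
    tp = fp = fn = tn = 0
    for label, prob in zip(y_true, probs):
        pred = 1 if prob >= threshold else 0
        if pred == 1 and label == 1:
            tp += 1
        elif pred == 1 and label == 0:
            fp += 1
        elif pred == 0 and label == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn

def precision_recall(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Sanity check against the validation confusion matrix (default cut-off)
p, r = precision_recall(tp=247, fp=38, fn=80)
print(f"validation: precision={p:.2f}, recall={r:.2f}")  # the report's 0.87 / 0.76

# Hypothetical disaster probabilities for eight tweets, with their true labels
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
probs = [0.95, 0.70, 0.40, 0.30, 0.60, 0.28, 0.10, 0.05]

for threshold in (0.5, 0.35, 0.25):
    tp, fp, fn, tn = confusion_counts(y_true, probs, threshold)
    p, r = precision_recall(tp, fp, fn)
    print(f"threshold={threshold}: missed disasters={fn}, false alarms={fp}, recall={r:.2f}")
```

Lowering the threshold catches more real disasters at the cost of more false alarms. Where to stop depends on the relative costs described above.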

Making Predictions on New Data

Finally, let's use our model to make predictions on the test set:

```python
# Process test data
test_input_ids, test_attention_masks = tokenize_text(test_df['cleaned_text'].values, tokenizer)

# Create test dataloader
test_dataset = TensorDataset(test_input_ids, test_attention_masks)
test_loader = DataLoader(test_dataset, batch_size=batch_size)

# Generate predictions
model.eval()
test_preds = []
test_probs = []

with torch.no_grad():
    for batch in test_loader:
        # Unpack and move batch to device
        input_ids, attention_mask = batch
        input_ids = input_ids.to(device)
        attention_mask = attention_mask.to(device)

        # Forward pass
        outputs = model(
            input_ids=input_ids,
            attention_mask=attention_mask
        )
        logits = outputs.logits
        probs = F.softmax(logits, dim=1)

        # Get predictions
        _, preds = torch.max(logits, dim=1)

        # Store predictions and probabilities
        test_preds.extend(preds.cpu().numpy())
        test_probs.extend(probs[:, 1].cpu().numpy())

# Add predictions to test dataframe
test_df['predicted_target'] = test_preds
test_df['disaster_probability'] = test_probs

# Display a sample of predictions
print("Sample predictions on the test set:")
sample_results = test_df[['text', 'predicted_target', 'disaster_probability']].sample(10)
sample_results
```

Expected output:

```
Sample predictions on the test set:

text predicted_target disaster_probability
149 #MustRead: Vladimir #Putin Issues Major Warning But Is It Too Late To Escape Armageddon? by @PCr... 1 0.820633
2028 tarmineta3: Breaking news! Unconfirmed! I just heard a loud bang nearby. in what appears to be a... 0 0.079320
2559 @MeganRestivo I am literally screaming for you!! Congratulations! 0 0.011177
800 @PahandaBear @Nethaera Yup EU crashed too :P 0 0.039989
1237 Angry Woman Openly Accuses NEMA Of Stealing Relief Materials Meant For IDPs: An angry Internally... 0 0.078757
2448 Photo: theonion: Rescuers Heroically Help Beached Garbage Back Into OceanåÊ http://t.co/YcSmt7ovoc 1 0.739298
1566 ...@jeremycorbyn must be willing to fight and 2 call a spade a spade. Other wise very savvy piec... 0 0.032905
691 Emergency services called to Bacup after 'strong' chemical smells http://t.co/hJJ7EFTJ7O 1 0.934740
3103 T Shirts $10 male or female get wit me. 2 days until its game changin time. War Zone single will... 0 0.015829
2325 #Np love police @PhilCollinsFeed On #LateNiteMix Uganda Broadcasting Corporation. UBC 98FM #Radi... 0 0.340198
```
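The two prediction columns come from the same logits. The `disaster_probability` column is the second entry of a softmax over the model's two logits. The `predicted_target` column is the index `torch.max` picks over those logits. With two classes, these always agree with a 0.5 cut-off on the probability, as the table shows: 0.34 is labeled 0 and 0.74 is labeled 1. A pure-Python sketch with made-up logits:

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical [not_disaster, disaster] logits for three tweets
examples = [[2.0, -1.0], [0.3, 0.1], [-1.5, 1.2]]

for logits in examples:
    probs = softmax(logits)
    # Index of the larger logit, which is what torch.max(logits, dim=1) returns as preds
    argmax_label = max(range(len(logits)), key=lambda i: logits[i])
    # The same decision expressed as a cut-off on the disaster probability
    threshold_label = int(probs[1] > 0.5)
    print(f"logits={logits}  disaster_probability={probs[1]:.3f}  "
          f"argmax={argmax_label}  threshold={threshold_label}")
```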

Results

Looking at the test predictions above, we can see that the model assigns high disaster probabilities to tweets with clear emergency-related language, such as those involving chemical smells or urgent warnings. Conversely, it assigns very low probabilities to clearly non-disaster tweets, including personal celebrations, casual conversation, and promotional content.

One interesting case is a satirical tweet from The Onion involving rescuers and garbage. While the model predicts it as a disaster (0.74), this highlights how it's strongly influenced by literal disaster-related vocabulary, even when used in a humorous context. On the other hand, a tweet mentioning the word “police” gets a middling score (~0.34) but is still correctly classified as non-disaster. This suggests the model has learned that certain keywords can appear in both disaster and non-disaster settings.

Overall, the predictions reflect a model that’s learned to balance literal cues with broader context, showing solid performance even without extensive preprocessing or long training.

Next Steps and Resources

Ready to take your NLP skills further? Here are some natural next steps:

Experiment with Other Tasks

- Multiclass classification: Try categorizing news articles by topic

- Sentiment analysis: Classify movie reviews as positive or negative

- Named entity recognition: Extract people, places, and organizations from text

Explore More Advanced Techniques

- Try other transformer models (BERT, RoBERTa, T5)

- Experiment with data augmentation for text

- Implement attention visualization to interpret model decisions

- Add early stopping to prevent overfitting (as we saw in our training results)

- Apply cross-validation for more robust evaluation

- Explore hyperparameter tuning to optimize learning rate, batch size, etc.

Suggested Resources

- Hugging Face Documentation

- PyTorch NLP Tutorials

- Getting Started with PyTorch

- Sequence Models in PyTorch

Text data can seem messy and complex, but modern tools like PyTorch and pretrained transformers make it surprisingly approachable. Starting small and building from there is often the best way to gain confidence and skill in NLP.

Key Terms Recap

- Tokenization: The process of converting text into tokens (words, subwords, or characters)

- Embeddings: Dense vector representations of tokens that capture semantic relationships

- Transfer Learning: Using knowledge from a pretrained model on a new task

- Transformer: A neural network architecture that uses self-attention to process sequences

- Fine-tuning: Adapting a pretrained model to a specific task by updating its parameters

- Attention: A mechanism that allows models to focus on relevant parts of the input

- Attention Masks: Binary tensors that tell the model which tokens are real content and which are padding

- F1 Score: A metric that balances precision and recall, useful for imbalanced datasets

- Hugging Face: An organization that provides tools and pretrained models for NLP

from Dataquest https://ift.tt/njtWyaf

via RiYo Analytics
