https://ift.tt/5rwE3UG How would GPT -3 solve the “Baseball bat and a ball” quiz compared to humans? Image generated by the author with t...

How would GPT -3 solve the “Baseball bat and a ball” quiz compared to humans?

I’ve decided to test how GPT-3 would solve a classic “A bat and a ball” puzzle. It is usually used to showcase the difference between System 1 and System 2 of thinking. Will it make the same mistake as humans do when using System 1, and will it be able to solve it eventually?

TL;DR: yes, and yes.

Two modes of thinking

“System 1 and System 2” is a popular model that describes two modes of human decision-making and reasoning. It was proposed by psychologists Keith Stanovich and Richard West in 2000. Recently it was popularized by Daniel Kahneman in Thinking, Fast and Slow. The framework is new, but the ideas behind it are old. People realized the difference between instinctive thinking and conscious reasoning a long time ago. You can track it in the works of William James, Sigmund Freud, and even in the texts of ancient Greek philosophers.

The main idea of the model is that there are two ways humans think:

System 1 is fast, instinctive, and emotional. It operates automatically and unconsciously, with little or no effort and no sense of voluntary control. System 1 is essentially a powerful, quick, and energy-efficient pattern-matching machine.

System 2 is slower, more deliberative, and more logical. It consumes much more energy. It allocates attention to the effortful mental activities that demand it, including complex computations. Its operations are often associated with the subjective experience of agency, choice, and concentration. And it is expensive.

System 1 is automatic and fast, but can be error-prone. System 2 is more deliberate, conscious, and slow, but is more accurate.

Large Language Models are good approximations of System 1 mode

Large Language models like GPT-3 and its siblings have shown excellent performance on System 1 tasks. For example, they can generate text that is indistinguishable from human-written text, generate natural-looking responses to questions, do simple arithmetic, summarization and rephrasing. In many ways, these models are like very powerful pattern-matching machines. And pattern matching is what System 1 essentially is. Yet, large langue models don’t fare so well on System 2 tasks, such as planning, reasoning, and decision-making.

There are many explanations for that fact.

One possible reason for that comes from their autoregressive operational mode. That is, they predict the next token in a sequence based on the previous tokens in that sequence. When humans engage their System 2 thinking for any problem solving, they essentially perform multiple steps of computations in their working memory or using some external medium. But GPT-3 lacks such working memory. It is constrained only by a single forward pass of a signal through its layers. It is forced by design to provide a quick, single-step answer. There are prompt programming techniques like meta-prompts and chain-of-thought prompting to mitigate this to some extent. They help a language model to “slow down” and start using its outputs as a kind of working memory for multi-step System 2 tasks.

“We need to be patient with GPT-3, and give it time to think. GPT-3 does best when writing its own answers, as this forces it to think out loud: that is, to write out its thoughts in a slow and sequential manner.”© Methods of prompt programming.

But there is a deeper reason also. Large language models and generally all modern (Self-)Supervised Machine Learning models are essentially pattern recognition systems. Yoshua Bengio touched on this topic in his 2019 NeurIPS speech. They might not be suitable at all for System 2 tasks, and we might need another revolution in AI. But that is still a big open question.

Enhancing System 2 capabilities of Large Language Models is an active research area.

A ball and a bat quiz

This quiz was proposed by Daniel Kahneman, a Nobel Prize-winning economist, and psychologist.

The quiz statement is the following:

A bat and ball together cost $1.10. The bat costs one dollar more than the ball. How much does the ball cost?

The immediate answer to it is usually 0.10$. Which is incorrect. When you give a person a bit more time to think through, it’s easy to find the correct answer. Which is 0.5$.

This quiz demonstrates how humans often rely on System 1 rather than their System 2.

I’ve decided to check whether the GPT-3 way of reasoning resembles ours by checking its answers to this quiz.

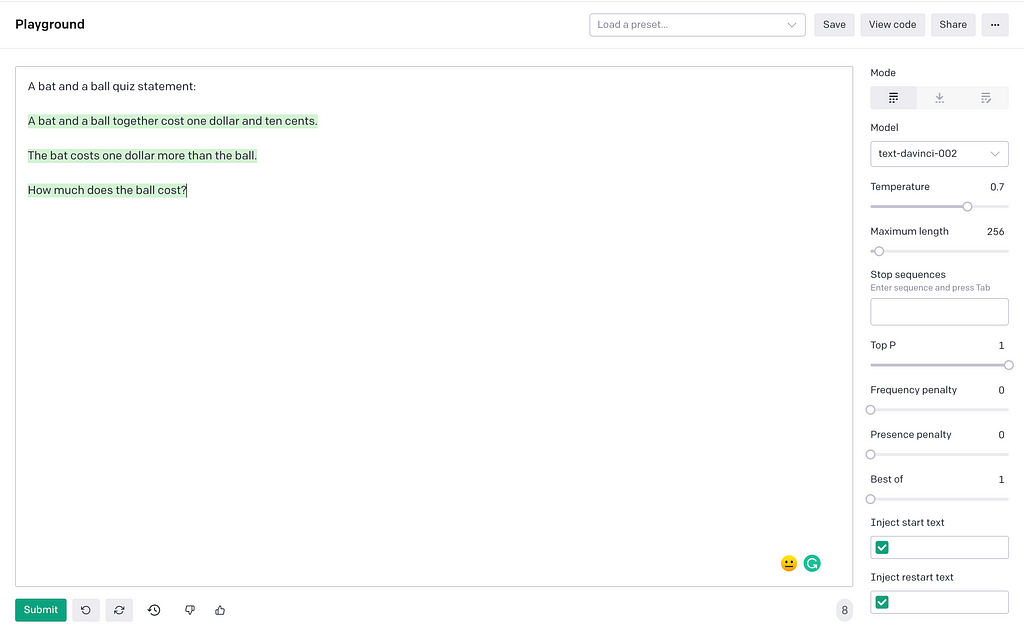

Now, the first problem is that GPT-3 has seen this puzzle in its training set. You can easily check it by letting it predict its statement and different contexts around it:

A bat and a ball quiz statement:

A bat and a ball together cost one dollar and ten cents.

The bat costs one dollar more than the ball.

How much does the ball cost?

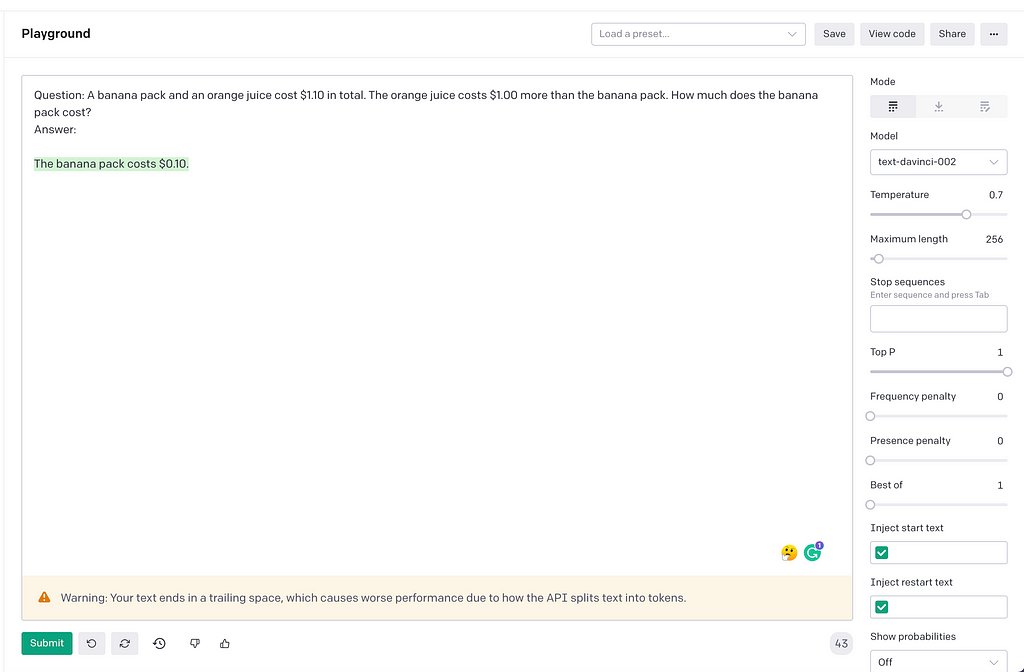

Indeed, it is aware of it. So to make it harder for the model to reproduce what it has simply seen before, let’s change the names of the items. I’ve decided to use a banana pack and orange juice. A healthy choice. Let’s see how it will answer:

Question: A banana pack and an orange juice cost $1.10 in total. The orange juice costs $1.00 more than the banana pack. How much does the banana pack cost?

Answer:

The banana pack costs $0.10.

Great, it provides the same answer. Now let’s make the experiment cleaner and change the numbers as well:

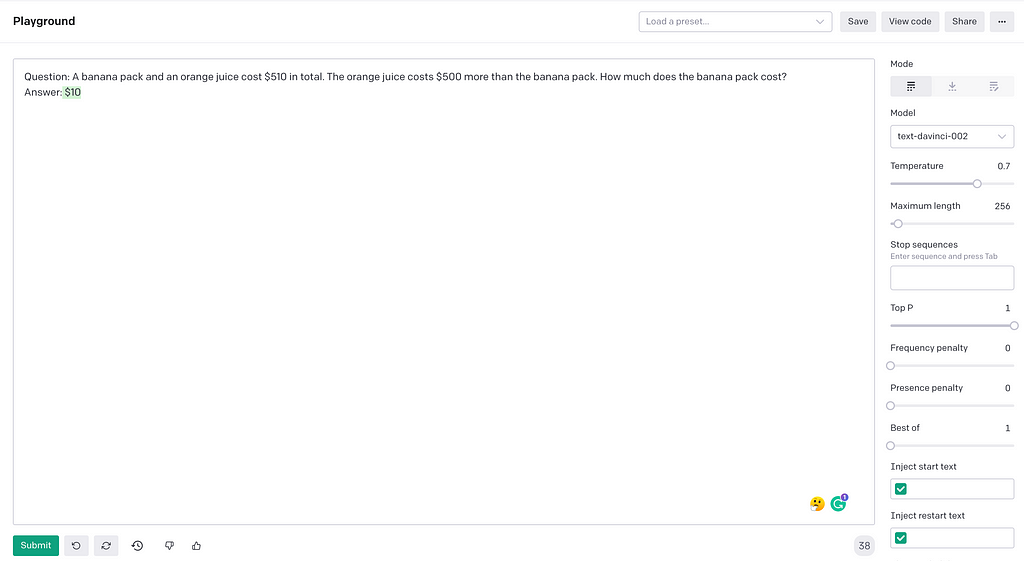

Question: A banana pack and an orange juice cost $510 in total. The orange juice costs $500 more than the banana pack. How much does the banana pack cost?

Answer: $10

So, GPT-3 indeed made the same mistake most people do when using System 1 mode.

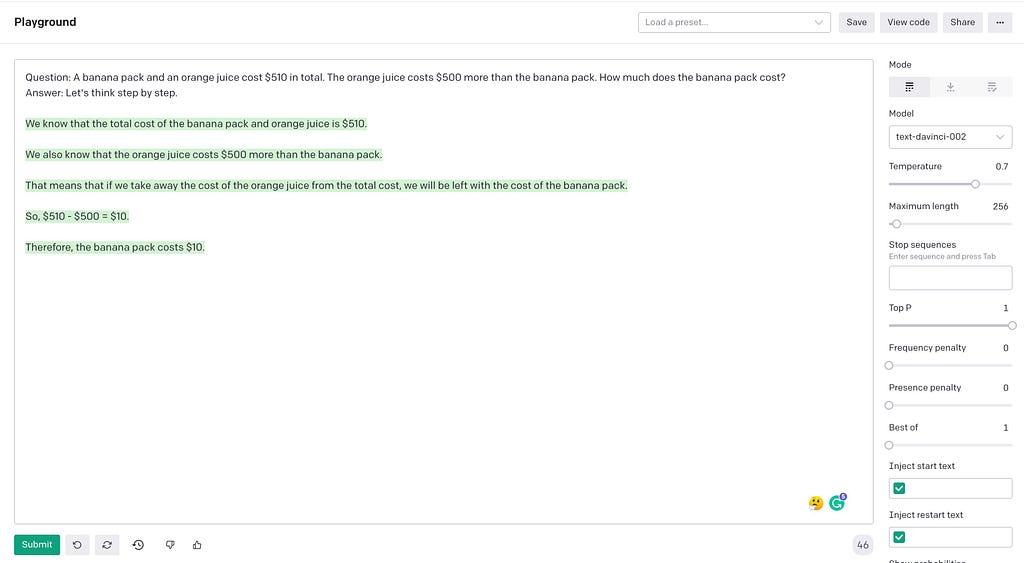

Let’s try to guide it into thinking through the problem slower without giving premature answers. Let’s try a meta-prompt technique proposed in the recent paper from Tokyo University and the Google Brain team. The idea is to “slow down” the model in its conclusions by adding the phrase “Let’s think step by step.” in your prompt:

Question: A banana pack and an orange juice cost $510 in total. The orange juice costs $500 more than the banana pack. How much does the banana pack cost?

Answer: Let’s think step by step.

We know that the total cost of the banana pack and orange juice is $510.

We also know that the orange juice costs $500 more than the banana pack.

That means that if we take away the cost of the orange juice from the total cost, we will be left with the cost of the banana pack.

So, $510 — $500 = $10.

Therefore, the banana pack costs $10.

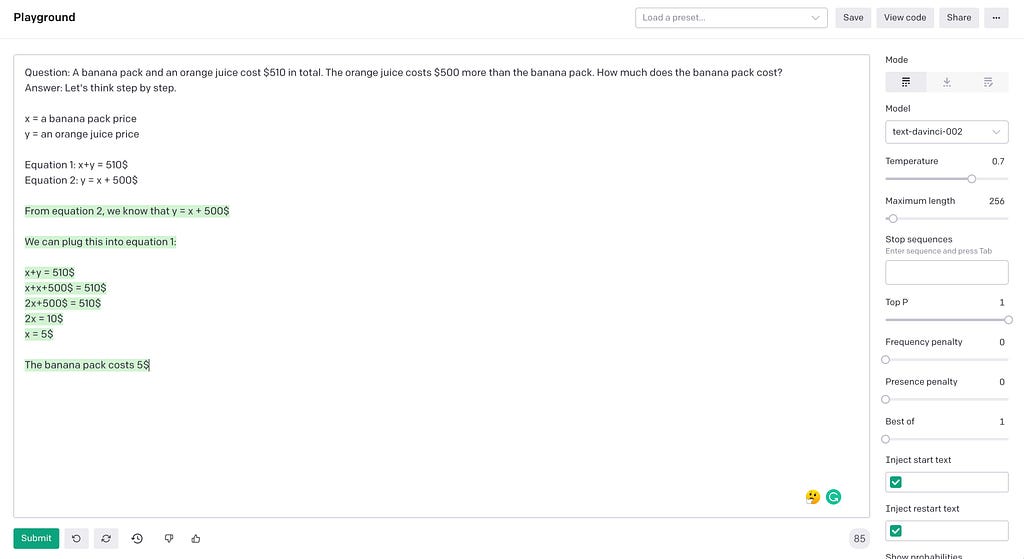

Didn’t help much. The model still fails to produce a correct line of reasoning. Let’s give it some guidance by following the first steps of the solution ourselves for the model:

Question: A banana pack and an orange juice cost $510 in total. The orange juice costs $500 more than the banana pack. How much does the banana pack cost?

Answer: Let’s think step by step.

x = a banana pack price

y = an orange juice price

Equation 1: x+y = 510$

Equation 2: y = x + 500$

From the equation 2, we know that y = x + 500$

We can plug this into equation 1:

x+y =510$

x+x+500$ = 510$

2x+500$ = 510$

2x = 10$

x = 5$

The banana pack costs 5$

It found the correct answer!

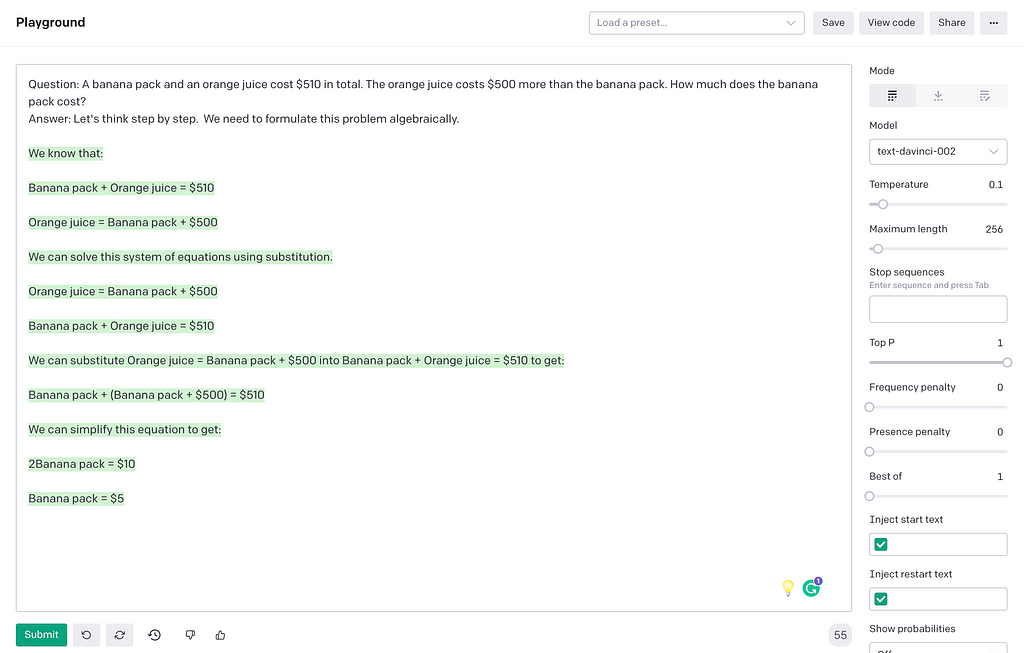

Okay, but maybe it was too much supervision. We can do better. Let’s decrease the model’s temperature to 0.1 to make it less variable but more coherent. And let’s simply give it a hint that it should use algebra to solve this puzzle. I actually used the same hint to guide my human friends in the correct direction for this quiz.

Question: A banana pack and an orange juice cost $510 in total. The orange juice costs $500 more than the banana pack. How much does the banana pack cost?

Answer: Let’s think step by step. We need to formulate this problem algebraically.

We know that:

Banana pack + Orange juice = $510

Orange juice = Banana pack + $500

We can solve this system of equations using substitution.

Orange juice = Banana pack + $500

Banana pack + Orange juice = $510

We can substitute Orange juice = Banana pack + $500 into Banana pack + Orange juice = $510 to get:

Banana pack + (Banana pack + $500) = $510

We can simplify this equation to get:

2Banana pack = $10

Banana pack = $5

Success!

Conclusion

Large Language models surprised us with their level of intelligence. The Scaling Hypothesis clearly works for them so far. The leap from GPT-2 to GPT-3 is not merely a difference in quantity, but it’s an emergence of a new quality. It’s easy to make mistakes on the opposite sides of the spectrum: anthropomorphize them too much as well as underestimate their intelligence capabilities. They have apparent limitations. They are not agents and don’t have any active motives. They know only text and nothing outside of it. Their reasoning is rudimentary and different from ours. But at the same time, it is fascinating how similar, in some cases, it can be.

And GPT-4 is coming soon! Quoting the author of “2 Minute Papers” YouTube channel, “What a time to be alive!”.

References

- GPT-3 playground

- Thinking, Fast and Slow

- Methods of prompt programming

- Yoshua Bengio: From System 1 Deep Learning to System 2 Deep Learning (NeurIPS 2019)

- Kojima et al., Large Language Models are Zero-Shot Reasoners, 2022

- Valmeekam et al., Large Language Models Still can’t plan, 2022

Follow Me

If you find the ideas I share with you interesting, please don’t hesitate to connect here on Medium, Twitter, Instagram, or LinkedIn.

Large Language Models and Two Modes of Human Thinking was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

from Towards Data Science - Medium https://ift.tt/Ot1aGfn

via RiYo Analytics

No comments