Achieve state-of-the-art results using a LightGBM model with Prophet features

Photo by Isaac Smith on Unsplash

In my last post, I wrote about time series forecasting with machine learning, focusing on how to get good results with a machine-learning-based approach. This post uses more advanced techniques for predicting time series and tries to outperform the model created in that article. If you haven't read my previous post, I recommend reading it first, because this post iterates on that model. As always, you will find the code for this post at this link.

Previous Post

Time Series Forecasting with Supervised Machine Learning

New Approach

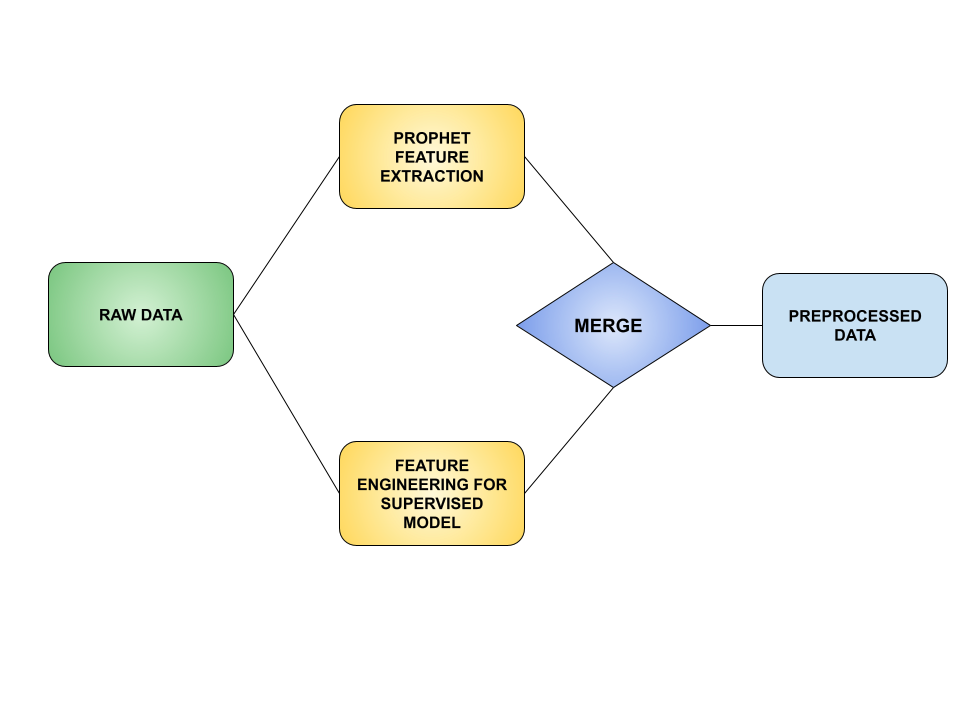

In addition to all the feature engineering in the previous post, we are going to use Prophet to extract new meaningful features such as seasonalities, confidence intervals, trend, etc.

Feature Engineering

Time Series Forecasting

In my previous article, I used a LightGBM model with some lagged features to forecast hourly bike-sharing demand for a given city over the next 7 days, and it worked pretty well. However, the results can be improved by using Prophet to extract new features from our time series. These features include the Prophet model's own prediction, the upper and lower bounds of its confidence interval, the daily and weekly seasonalities, and the trend. For other kinds of problems, Prophet can also help us extract features that describe holiday effects.

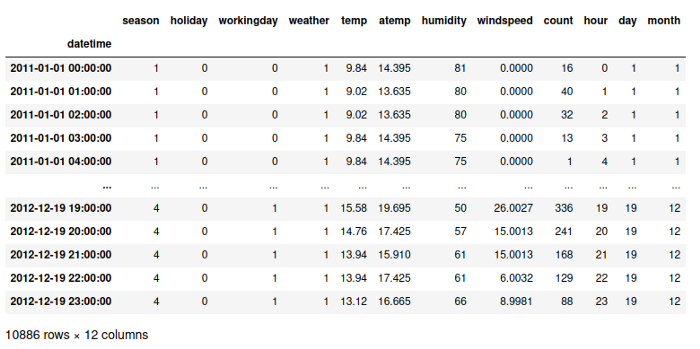
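Each feature listed above corresponds to a column in the dataframe returned by Prophet's `predict()`: `yhat`, `yhat_lower`, `yhat_upper`, `trend`, `daily`, `weekly` and, when holidays are added to the model, `holidays`. The following is a minimal pandas sketch of selecting those columns. The helper name `select_prophet_features` and the `prophet_` prefix are illustrative assumptions, not the author's code.

```python
import pandas as pd

# Columns of Prophet's predict() output that match the features described above.
# "daily"/"weekly" exist only if those seasonalities are enabled, and "holidays"
# only if holidays were added, so we keep whichever ones are present.
PROPHET_FEATURE_COLUMNS = [
    "yhat",        # Prophet's point prediction
    "yhat_lower",  # lower bound of the interval
    "yhat_upper",  # upper bound of the interval
    "trend",       # trend component
    "daily",       # daily seasonality component
    "weekly",      # weekly seasonality component
    "holidays",    # combined holiday effects
]


def select_prophet_features(forecast: pd.DataFrame) -> pd.DataFrame:
    """Keep the timestamp plus the Prophet components used as model features."""
    cols = [c for c in PROPHET_FEATURE_COLUMNS if c in forecast.columns]
    features = forecast[["ds"] + cols].copy()
    # Assumption: prefix the names so they cannot clash with existing feature columns.
    return features.rename(columns={c: f"prophet_{c}" for c in cols})
```

The helper ignores Prophet's extra `*_lower`/`*_upper` columns for individual components. Drop the filter if you want to experiment with those columns as well.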

Raw Data

In this post, we will reuse the data from our previous LightGBM forecasting post. Our data looked like this:

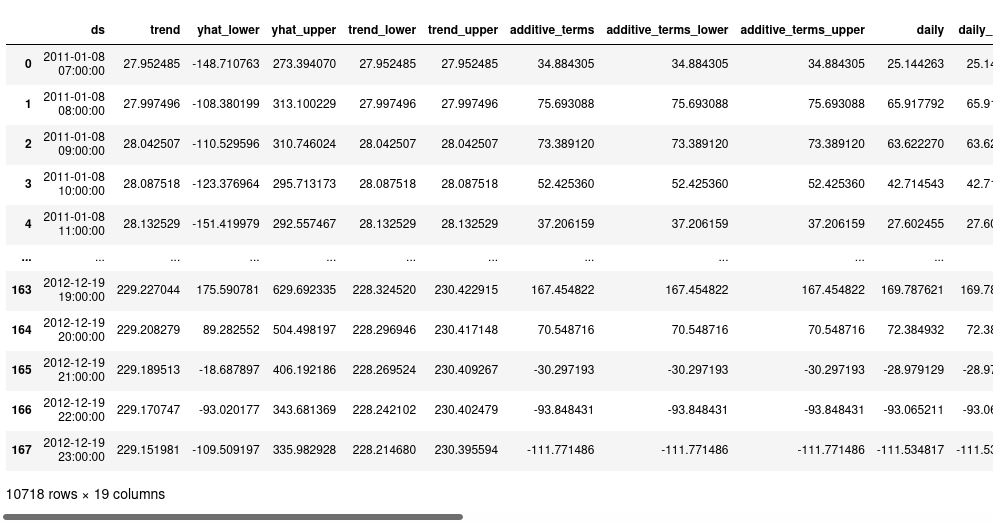

Extracting Features with Prophet

The first feature engineering step is pretty simple: we just have to make predictions with a Prophet model, like this:

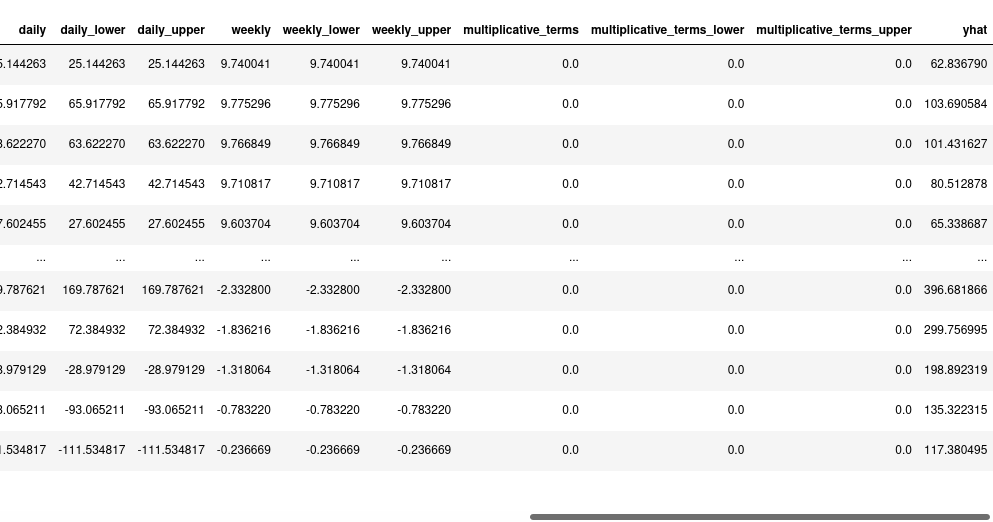

This function returns a dataframe with many new features for our LightGBM model.
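Here is a rough sketch of this step. It rests on three assumptions. First, the data already uses Prophet's `ds`/`y` column names. Second, the last `horizon` rows form the test period (for example `7 * 24` for a 7-day hourly forecast). Third, Prophet is fitted only on the rows before that period, so test targets do not leak into the features. The function name `prophet_features` and the `model` parameter are hypothetical. The `model` parameter lets any object with `fit`/`predict` stand in for Prophet.

```python
import pandas as pd


def prophet_features(df: pd.DataFrame, horizon: int, model=None) -> pd.DataFrame:
    """Fit Prophet on the training rows and return its forecast for every row.

    `df` needs Prophet's column names: `ds` (timestamps) and `y` (target).
    The last `horizon` rows (horizon > 0) are the test period and are not used for fitting.
    """
    if model is None:
        # Lazy import: Prophet is only required when no model is passed in.
        from prophet import Prophet
        model = Prophet(daily_seasonality=True, weekly_seasonality=True)
    train = df.iloc[:-horizon]
    model.fit(train[["ds", "y"]])
    # predict() returns yhat, yhat_lower, yhat_upper, trend and one column per
    # seasonality (plus *_lower/*_upper variants); rows in the training period
    # get in-sample fitted values, rows in the test period get forecasts.
    return model.predict(df[["ds"]])
```

With hourly data, a call such as `prophet_features(bikes, horizon=7 * 24)` (where `bikes` is a hypothetical dataframe) would produce one row of Prophet features per timestamp.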

Train Autoregressive LightGBM with Prophet Features

Once we have extracted the new features using Prophet, it's time to merge our original dataframe with the new Prophet dataframe and make some predictions using LightGBM with some lags. For this purpose, I have implemented a very simple function that takes care of the whole process:

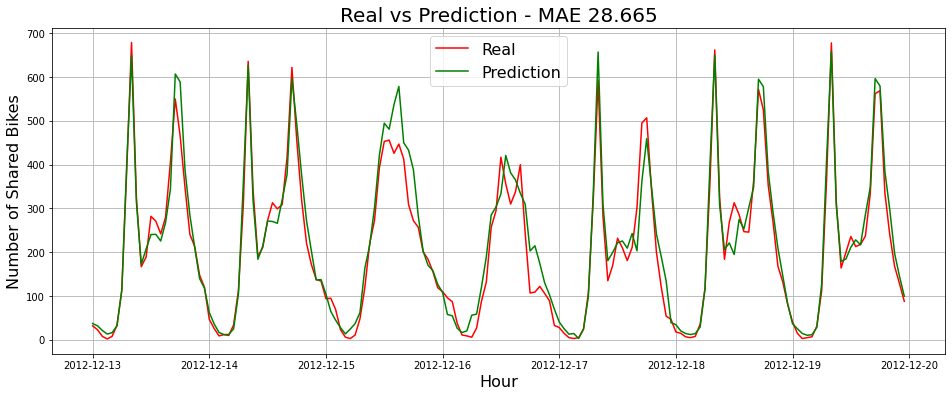
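The function above handles several steps at once. Here is a rough, hypothetical sketch of only the merge and lag steps; it is not the author's implementation. It assumes both dataframes share a `ds` timestamp column and that the target is called `y`. The default lags of 168 and 336 hours (one and two weeks) are also an assumption. They are chosen so that every lag value is already known throughout a 7-day hourly forecast window without feeding predictions back into the model. A recursive setup could use shorter lags.

```python
import pandas as pd


def merge_and_lag(df: pd.DataFrame, prophet_df: pd.DataFrame, target: str = "y",
                  lags: tuple = (168, 336)) -> pd.DataFrame:
    """Join Prophet features onto the original data and add lagged target columns."""
    # Left join on the timestamp so every original row keeps its Prophet features.
    merged = df.merge(prophet_df, on="ds", how="left")
    merged = merged.sort_values("ds").reset_index(drop=True)
    for lag in lags:
        # shift() moves values down, so row t receives the target from row t - lag.
        merged[f"{target}_lag_{lag}"] = merged[target].shift(lag)
    lag_cols = [f"{target}_lag_{lag}" for lag in lags]
    # The first max(lags) rows have no complete lag history, so drop them.
    return merged.dropna(subset=lag_cols).reset_index(drop=True)
```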

Once we execute the code above, we will merge the feature dataframes, create lagged values, create and train a LightGBM model, make predictions with our trained model and display a plot comparing our predictions to the ground truth. The output will look like this:

Wow! If we look closely at the result, we have reduced the MAE of our previous post's best model by about 12%. In our previous post, the best LightGBM model with the best feature engineering got an MAE of 32.736, and now we get an MAE of 28.665 (put the other way round, the old MAE was about 14% higher than the new one). That's a big improvement, considering that the model and feature engineering from the previous post were already on point.
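The next sketch covers the training/evaluation step and the arithmetic behind the improvement figure. It assumes a `data` frame built by merging the Prophet features and adding lags, as described above, with a `ds` timestamp column and a `y` target. It holds out the last `horizon` rows and scores them with MAE. The names `train_and_evaluate` and `relative_reduction`, and the LightGBM hyperparameters, are illustrative choices rather than the author's settings. Plotting is omitted.

```python
import numpy as np
import pandas as pd


def train_and_evaluate(data: pd.DataFrame, horizon: int, target: str = "y", model=None):
    """Train on all but the last `horizon` rows; return (predictions, MAE) on the rest."""
    if model is None:
        # Lazy import; any scikit-learn-style regressor can be passed instead.
        from lightgbm import LGBMRegressor
        model = LGBMRegressor(n_estimators=500, learning_rate=0.05)  # assumed settings
    features = [c for c in data.columns if c not in ("ds", target)]
    train, test = data.iloc[:-horizon], data.iloc[-horizon:]
    model.fit(train[features], train[target])
    preds = np.asarray(model.predict(test[features]))
    # Mean absolute error over the held-out period.
    mae = float(np.mean(np.abs(test[target].to_numpy() - preds)))
    return preds, mae


def relative_reduction(old: float, new: float) -> float:
    """Fraction by which an error metric dropped, relative to the old (baseline) value."""
    return (old - new) / old


# MAE values reported in this post and in the previous one.
print(f"{relative_reduction(32.736, 28.665):.1%}")  # 12.4%
print(f"{32.736 / 28.665 - 1:.1%}")                 # 14.2%
```

Measured against the old (baseline) MAE, the reduction is about 12.4%. The 14.2% figure is what you get by dividing the difference by the new MAE instead.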

Conclusion

As we saw in this post, combining supervised machine learning methods with statistical methods such as Prophet can noticeably reduce forecast error: here the MAE dropped from 32.736 to 28.665. Based on my experience in real-world projects, it is very difficult to get better results than these in demand forecasting problems.


Boost Your Time Series Forecasts Combining Gradient Boosting Models with Prophet Features was originally published in Towards Data Science on Medium.
