Starting a side project in a field you’ve only recently learned can be rather intimidating. Where to start? How to find what has already been done on the topic that interests you? How to search for and pick a reasonable goal for your project? When we started working on our Deep Learning project, we didn’t have answers to any of these questions. This is how we found them.

Written by Liron Soffer, Dafna Mordechai and Lior Dagan Leib

Where to Start?

Since we had all taken Deep Learning courses and were familiar with the basic concepts of neural networks, we knew that the technical challenge that interested us most was building a GAN (Generative Adversarial Network). We soon realized that we were also all excited about art-related projects. We decided to choose a paper that combines both, understand its architecture, and then implement it from scratch. That said, a paper with published code was preferable.

We knew we were looking for something similar to Neural Style Transfer, in which you take two images, A and B, and create a third image with the content of A “painted” in the artistic style of B. When we searched for it online, we came across A Neural Algorithm of Artistic Style and this great blog post by Raymond Yuan: Neural Style Transfer: Creating Art with Deep Learning using tf.keras and eager execution.

This was a good starting point for us, as it demonstrated the artistic functionality we were looking for. However, it wasn’t a GAN architecture, and it was published back in 2015.
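The core idea of Neural Style Transfer can be written down as a loss. In A Neural Algorithm of Artistic Style, content is compared through raw CNN feature maps and style through their Gram matrices (channel-to-channel correlations), and the pixels of the generated image are optimized to minimize a weighted sum of the two. The NumPy sketch below only evaluates that loss for a single layer, whereas the paper combines several layers of a pretrained VGG-19 for style. It assumes the feature maps have already been extracted; the helper names, the weights `alpha` and `beta`, and the random arrays are illustrative placeholders, not values from the paper or from Raymond Yuan’s post.

```python
import numpy as np


def gram_matrix(features):
    """Gram matrix of a (channels, height, width) feature map.

    Entry (i, j) is the inner product of channels i and j over all spatial
    positions: it records which features co-occur, but not where they occur.
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T


def content_loss(gen_feats, content_feats):
    # Squared error between raw feature maps: preserves the layout of image A.
    return 0.5 * float(np.sum((gen_feats - content_feats) ** 2))


def style_loss(gen_feats, style_feats):
    # Squared error between Gram matrices, with the single-layer normalization
    # used by Gatys et al.: matches the texture of image B, not its layout.
    c, h, w = gen_feats.shape
    diff = gram_matrix(gen_feats) - gram_matrix(style_feats)
    return float(np.sum(diff ** 2)) / (4.0 * c ** 2 * (h * w) ** 2)


def total_loss(gen_feats, content_feats, style_feats, alpha=1.0, beta=1e3):
    # alpha/beta trade content fidelity against style strength (illustrative values).
    return alpha * content_loss(gen_feats, content_feats) + beta * style_loss(gen_feats, style_feats)


# Demo with random arrays standing in for CNN feature maps (hypothetical data).
rng = np.random.default_rng(0)
style = rng.standard_normal((4, 8, 8))
perm = rng.permutation(8 * 8)
# Shuffle spatial positions: the layout changes, the channel correlations do not.
shuffled = style.reshape(4, 64)[:, perm].reshape(4, 8, 8)
print(style_loss(shuffled, style))    # close to 0: same "style"
print(content_loss(shuffled, style))  # clearly positive: different "content"
```

The demo shows why Gram matrices capture style: scrambling where features appear leaves the style loss essentially unchanged, while the content loss notices the difference.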

What Else Is Out There?

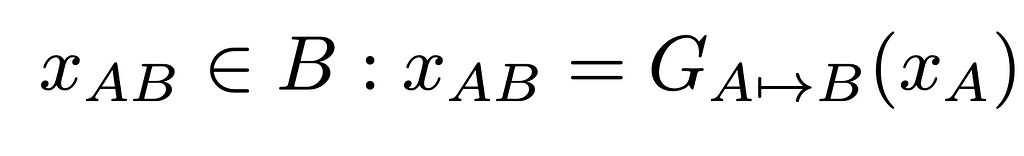

As we explored the subject further, we discovered that Style Transfer is just one type of Image-to-Image Translation. As defined in “Image-to-Image Translation: Methods and Applications”, “The goal of Image-to-Image Translation is to convert an input image from a source domain A to a target domain B with the intrinsic source content preserved and the extrinsic target style transferred.” To achieve that goal, we need to train a mapping G that takes an input image from source domain A and generates an image in target domain B, such that the resulting image is indistinguishable from other images in the target domain.
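One common way to make “indistinguishable from other target domain images” precise is the original GAN objective, applied to the mapping $G: A \to B$. In this sketch (our own notation, not the survey’s), a discriminator $D_B$ outputs the probability that an image is a real sample from domain $B$; $D_B$ is trained to maximize the objective, while $G$ is trained to minimize it:

$$
\min_{G}\,\max_{D_B}\;\; \mathbb{E}_{b \sim p_{B}}\!\left[\log D_B(b)\right] \;+\; \mathbb{E}_{a \sim p_{A}}\!\left[\log\!\left(1 - D_B\big(G(a)\big)\right)\right]
$$

If $G$ succeeds, the translated images $G(a)$ follow the same distribution as real images from $B$, and the best $D_B$ can do is output $1/2$ everywhere.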

One notable work on using GANs for image-to-image translation is Image-to-Image Translation with Conditional Adversarial Networks (aka pix2pix). The paper demonstrates many types of image-to-image translation, including synthesizing photos from label maps, reconstructing objects from edge maps, and colorizing images (from black and white to full color).

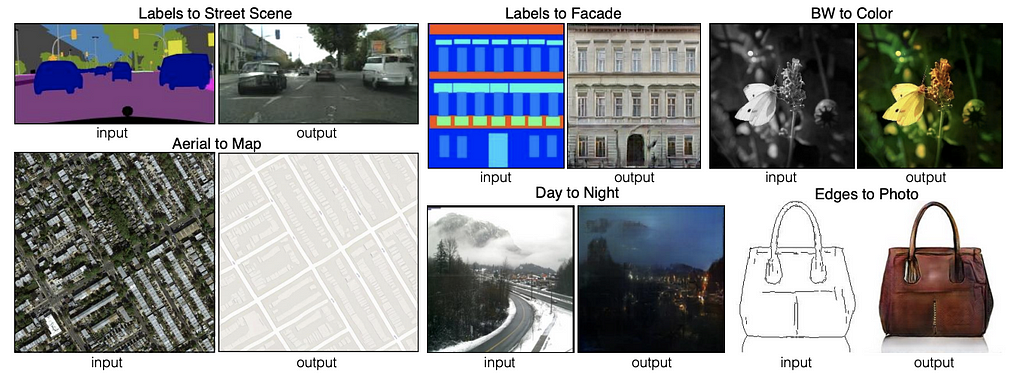
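pix2pix trains a conditional GAN on paired data: the discriminator sees the input image together with either the real or the generated output, and the generator is additionally pulled towards the ground truth with an L1 term. The NumPy sketch below computes both losses from discriminator probabilities that are assumed to come from some network; the helper names are hypothetical, `lam=100` follows the weight reported in the pix2pix paper, and scaling conventions (mean vs. sum, halving the discriminator loss) vary between implementations.

```python
import numpy as np


def bce(pred, target, eps=1e-7):
    # Binary cross-entropy, averaged over every discriminator output
    # (pix2pix's PatchGAN discriminator scores a grid of patches, not one number).
    pred = np.clip(pred, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred)))


def discriminator_loss(d_real, d_fake):
    # D should output 1 for real pairs (a, b) and 0 for fake pairs (a, G(a)).
    # In a conditional GAN, D sees the input image a alongside each candidate output.
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))


def generator_loss(d_fake, fake_b, real_b, lam=100.0):
    # Adversarial part (non-saturating form): push D(a, G(a)) towards 1.
    adversarial = bce(d_fake, np.ones_like(d_fake))
    # L1 part: needs the paired ground truth b for the same input a.
    l1 = float(np.mean(np.abs(real_b - fake_b)))
    return adversarial + lam * l1


# Demo with hand-picked numbers (hypothetical; no networks involved).
real_b = np.full((3, 4, 4), 0.5)   # ground-truth target image b
d_fake = np.full((2, 2), 0.5)      # D is unsure about every patch of (a, G(a))
exact = generator_loss(d_fake, real_b, real_b)        # G(a) == b
off = generator_loss(d_fake, real_b + 0.1, real_b)    # every pixel off by 0.1
print(exact, off)  # the L1 term adds lam * 0.1 = 10 to the second value
```

The L1 term is what ties pix2pix to paired datasets: without a ground-truth `real_b` for each input there is nothing to compare against.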

A second notable work is Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks (aka CycleGAN), which translates photographs into artwork in the style of famous artists such as Monet, Van Gogh, Cezanne and others. In addition, CycleGAN brilliantly demonstrated translations of specific objects within an image, such as transforming horses into zebras or apples into oranges.
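Because CycleGAN works without paired examples, it cannot use a pix2pix-style L1 term against a ground-truth target. Instead it trains two generators, G: X → Y and F: Y → X (the CycleGAN paper’s notation), and adds a cycle-consistency loss requiring that translating an image to the other domain and back reproduces it. The NumPy sketch below computes only that term, using a per-pixel mean as the L1 distance; the toy affine “generators” are hypothetical stand-ins for trained networks. In the paper, this term is added to two adversarial losses with weight λ = 10.

```python
import numpy as np


def cycle_consistency_loss(G, F, real_x, real_y):
    """L1 cycle loss: mean |F(G(x)) - x| + mean |G(F(y)) - y|.

    G translates domain X -> Y (e.g. horse -> zebra) and F translates Y -> X.
    No (x, y) pairs are needed: each image only has to survive a round trip.
    """
    forward = np.mean(np.abs(F(G(real_x)) - real_x))   # x -> G(x) -> F(G(x))
    backward = np.mean(np.abs(G(F(real_y)) - real_y))  # y -> F(y) -> G(F(y))
    return float(forward + backward)


# Toy affine "generators" (hypothetical stand-ins for trained networks).
def g_xy(x):
    return 2.0 * x + 1.0


def f_inverse(y):
    return (y - 1.0) / 2.0   # exact inverse of g_xy


def f_lossy(y):
    return y / 2.0           # forgets the +1 offset, so round trips drift


rng = np.random.default_rng(0)
x = rng.uniform(size=(3, 8, 8))   # unpaired samples from domain X
y = rng.uniform(size=(3, 8, 8))   # unpaired samples from domain Y
print(cycle_consistency_loss(g_xy, f_inverse, x, y))  # close to 0
print(cycle_consistency_loss(g_xy, f_lossy, x, y))    # about 1.5 (0.5 + 1.0)
```

A pair of mappings that undo each other scores near zero, while a pair that loses information on the way back is penalized, which discourages the generators from discarding the source content.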

How to Search? How to Pick?

Next, we searched for articles that cited pix2pix and CycleGAN. However, this approach returned far too many results. We were looking for a way to quickly review up-to-date works, and we found Károly Zsolnai-Fehér’s YouTube channel Two Minute Papers very useful for this.

We went through dozens of papers that seemed relevant to the artistic domain and narrowed them down to about 20. Among the works we considered for the project were Nvidia’s paint, AI-Based Motion Transfer, and This Neural Network Restores Old Videos. However, we were all taken with GANILLA, which showed impressive results in applying an artistic style while preserving the original image content. In the end, GANILLA was the chosen one.

Conclusions

We learned a lot from this search process. We ended up finding exactly what we were looking for, even though at the beginning we didn’t have the terminology to define it. We also gained knowledge about previous and current works that use GANs for various purposes.

Now that we had a winning paper in our hands, the actual work began. In this blog post we go over GANILLA’s architecture and give you a taste of the results we got from implementing it.

How Deep Is Your Love? Or, How to Choose Your First Deep-Learning Side-Project was originally published in Towards Data Science on Medium.
