Part 2 of the series on NumPy for Data Science

In the previous article, we looked at the basics of using NumPy. We discussed

- The need for NumPy and comparison of NumPy array with Python lists and Pandas Series.

- Array attributes like dimensions, shape, etc.

- Special Arrays in NumPy

- Reshaping NumPy arrays

- Indexing and Slicing NumPy arrays

- Boolean Masks in NumPy arrays

- Basic Functions in NumPy

- Vectorized Operations

In the second part of the series, we move to advanced topics in NumPy and their application in Data Science. We will be looking at

- Random Number Generation

- Advanced Array operations

- Handling Missing Values

- Sorting and Searching functions in NumPy

- Broadcasting

- Matrix Operations and Linear Algebra

- Polynomials

- Curve Fitting

- Importing Data into and Exporting Data out of NumPy

If you have not worked with NumPy earlier, we recommend reading the first part of the NumPy series to get started with NumPy concepts.

Advanced Usage of NumPy

Random Number Operations with NumPy

Random number generation forms a critical basis for scientific libraries. NumPy supports the generation of random numbers through its random module. One of the simplest random number generating methods is rand(). The rand method returns a sample from a uniform distribution over the half-open interval [0, 1), that is, zero is included and one is excluded.

import numpy as np

np.random.rand()

0.008305751553221774

We can specify the number of random numbers that we need to generate.

np.random.rand(10)

array([0.03246139, 0.41410126, 0.96956026, 0.43218461, 0.3212331 ,

0.98749094, 0.83184371, 0.33608471, 0.98037176, 0.03072824])

Or specify the shape of the resulting array. For example, in this case, we get fifteen uniformly distributed random numbers in the shape 3 x 5

np.random.rand(3,5)

array([[0.65233864, 0.00659963, 0.60412613, 0.85469298, 0.95456249],

[0.25876255, 0.12360838, 0.20899371, 0.9162027 , 0.74732087],

[0.97992517, 0.1444538 , 0.47195618, 0.38424683, 0.93320447]])

We can also generate integers in a specified range. For this, we use the randint() method. When called with a single argument n, it returns a random integer from 0 to n - 1.

np.random.randint(4)

0

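We can also specify both bounds of the range. The lower bound is inclusive and the upper bound is exclusive, so the following call returns an integer from 1 to 5.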

np.random.randint(1,6)

4

As with the rand method, we can specify the shape of the resulting array in the randint method too.

np.random.randint(1,6, (5,3))

array([[1, 1, 2],

[4, 4, 3],

[3, 4, 5],

[5, 3, 4],

[4, 1, 5]])

Sampling

We can also use random number generators to draw a sample from a given population. For example, if we want to choose five colors out of ten different colors, we can use the choice method.

color_list = ['Red', 'Blue', 'Green', 'Orange', 'Violet', 'Pink', 'Indigo', 'White', 'Cyan', 'Black' ]

np.random.choice(color_list, 5)

array(['Black', 'Black', 'Cyan', 'Black', 'Cyan'], dtype='<U6')

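We can also specify whether the same item may be selected more than once. By default, choice samples with replacement, which is why 'Black' and 'Cyan' repeat in the output above.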

# Using replace = False to avoid repetitions

np.random.choice(color_list, 5, replace = False)

array(['Black', 'Pink', 'Red', 'Indigo', 'Blue'], dtype='<U6')

As you would expect, if the number of selections is more than the number of choices available and replace = False, the function raises a ValueError.

# Error when sample is more than the population

np.random.choice(color_list, 15, replace = False)

--------------------------------------------------------------------

ValueError Traceback (most recent call last)

~\AppData\Local\Temp/ipykernel_9040/2963984956.py in <module>

1 # Error when sample is more than the population

----> 2 np.random.choice(color_list, 15, replace = False)

mtrand.pyx in numpy.random.mtrand.RandomState.choice()

ValueError: Cannot take a larger sample than population when 'replace=False'

“Freezing” a Random State

A critical requirement in the Data Science world is the repeatability of results. While we choose random numbers to compute results, to be able to reproduce identical results, we need the same sequence of random numbers. To do this, we need to understand how random numbers are generated.

Most random numbers generated by computer algorithms are pseudo-random numbers. In simple terms, these are sequences of numbers that have the statistical properties of random numbers but are produced by a deterministic algorithm, so the sequence eventually repeats. The algorithm uses a start value called the seed to generate the sequence, and a given seed always produces the same sequence of random numbers. Think of the seed as the registration number of a vehicle or a social security number: it identifies the sequence.

To reproduce the same set of random numbers, we need to specify the seed to the sequence. In NumPy, this can be achieved by setting the RandomState of the generator. Let us illustrate this with the following example. We had earlier chosen five colors from ten choices. Each time we execute the code, we may get different results. However, if we fix the RandomState before invoking the choice method, we will get the same result no matter how many times we execute the cell.

Freezing the Random State

# without freezing : each time you execute this cell, you might get a different result

np.random.choice(color_list, 5, replace = False)

array(['White', 'Blue', 'Black', 'Cyan', 'Indigo'], dtype='<U6')

np.random.choice(color_list, 5, replace = False)

array(['Orange', 'Cyan', 'Pink', 'Violet', 'Black'], dtype='<U6')

np.random.choice(color_list, 5, replace = False)

array(['Green', 'Blue', 'Orange', 'Pink', 'White'], dtype='<U6')

# If we fix the random state, we will get the same sequence over and over again

np.random.RandomState(42).choice(color_list, 5, replace = False)

array(['Cyan', 'Blue', 'Pink', 'Red', 'White'], dtype='<U6')

np.random.RandomState(42).choice(color_list, 5, replace = False)

array(['Cyan', 'Blue', 'Pink', 'Red', 'White'], dtype='<U6')

np.random.RandomState(42).choice(color_list, 5, replace = False)

array(['Cyan', 'Blue', 'Pink', 'Red', 'White'], dtype='<U6')

This is very helpful in data science operations like splitting a data set into training and testing data sets. You will find these seeding options in almost all sampling methods, for example the sample method in Pandas and the shuffle and train_test_split functions in scikit-learn.
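
The same idea can be sketched with NumPy's newer Generator interface, which is available from NumPy 1.17 onwards (the article itself uses RandomState). The variable names below are chosen for illustration only. Two generators created with the same seed yield the same sample, and seeding the legacy global generator has the same effect.

```python
import numpy as np

color_list = ['Red', 'Blue', 'Green', 'Orange', 'Violet',
              'Pink', 'Indigo', 'White', 'Cyan', 'Black']

# Two generators created with the same seed produce the same sequence.
rng_a = np.random.default_rng(42)
rng_b = np.random.default_rng(42)

pick_a = rng_a.choice(color_list, 5, replace=False)
pick_b = rng_b.choice(color_list, 5, replace=False)
print(np.array_equal(pick_a, pick_b))   # True

# The legacy global generator can be seeded as well.
np.random.seed(42)
first = np.random.rand(3)
np.random.seed(42)
second = np.random.rand(3)
print(np.array_equal(first, second))    # True
```

Creating a separate generator object keeps the seeding local to the code that needs it, whereas np.random.seed changes the state shared by every np.random call in the session.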

Another useful random number operation is shuffle. As the name suggests, the shuffle method randomly reorders an array’s elements. The reordering happens in place, so the original array is modified.

my_ar = np.arange(1,11)

my_ar

array([ 1, 2, 3, 4, 5, 6, 7, 8, 9, 10])

np.random.shuffle(my_ar)

my_ar

array([ 6, 3, 8, 10, 4, 2, 1, 9, 5, 7])

my_ar = np.arange(1,11)

np.random.shuffle(my_ar)

my_ar

array([ 6, 7, 10, 8, 1, 9, 3, 4, 2, 5])

my_ar = np.arange(1,11)

np.random.shuffle(my_ar)

my_ar

array([ 7, 6, 2, 4, 5, 8, 10, 9, 1, 3])

The shuffling results can also be “fixed” by specifying a RandomState().

# Using random state to fix the shuffling

my_ar = np.arange(1,11)

my_ar

array([ 1, 2, 3, 4, 5, 6, 7, 8, 9, 10])

np.random.RandomState(123).shuffle(my_ar)

my_ar

array([ 5, 1, 8, 6, 9, 4, 2, 7, 10, 3])

my_ar = np.arange(1,11)

np.random.RandomState(123).shuffle(my_ar)

my_ar

array([ 5, 1, 8, 6, 9, 4, 2, 7, 10, 3])

my_ar = np.arange(1,11)

np.random.RandomState(123).shuffle(my_ar)

my_ar

array([ 5, 1, 8, 6, 9, 4, 2, 7, 10, 3])
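
Since shuffle modifies the array in place, the examples above recreate my_ar before every call. If we want to keep the original order, a possible alternative is the permutation method, which returns a shuffled copy. The following is a minimal sketch; the name shuffled is used for illustration only.

```python
import numpy as np

my_ar = np.arange(1, 11)

# permutation returns a shuffled copy; the input array is left unchanged.
shuffled = np.random.RandomState(123).permutation(my_ar)

print(my_ar)      # still 1 to 10 in order
print(shuffled)   # a reordering of the same ten values
```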

Array Operations

NumPy supports a range of array manipulation operations. We had seen some basic ones in the first part of the NumPy series. Let us look at some advanced operations.

Splitting an Array.

We have a wide range of options to break an array into smaller arrays.

split()

The split method offers two major ways of splitting an array.

If we pass an integer, the array is split into that many equal-sized sub-arrays.

my_ar = np.arange(31,61)

my_ar

array([31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47,

48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60])

np.split(my_ar, 5)

[array([31, 32, 33, 34, 35, 36]),

array([37, 38, 39, 40, 41, 42]),

array([43, 44, 45, 46, 47, 48]),

array([49, 50, 51, 52, 53, 54]),

array([55, 56, 57, 58, 59, 60])]

The split method raises a ValueError if the array cannot be divided into equal-sized sub-arrays.

# This will result in an error since the array cannot be split into equal parts

np.split(my_ar, 8)

--------------------------------------------------------------------

TypeError Traceback (most recent call last)

c:\users\asus\appdata\local\programs\python\python39\lib\site-packages\numpy\lib\shape_base.py in split(ary, indices_or_sections, axis)

866 try:

--> 867 len(indices_or_sections)

868 except TypeError:

TypeError: object of type 'int' has no len()

During handling of the above exception, another exception occurred:

ValueError Traceback (most recent call last)

~\AppData\Local\Temp/ipykernel_9040/3446337543.py in <module>

1 # This will result in an error since the array cannot be split into equal parts

----> 2 np.split(my_ar, 8)

<__array_function__ internals> in split(*args, **kwargs)

c:\users\asus\appdata\local\programs\python\python39\lib\site-packages\numpy\lib\shape_base.py in split(ary, indices_or_sections, axis)

870 N = ary.shape[axis]

871 if N % sections:

--> 872 raise ValueError(

873 'array split does not result in an equal division')

874 return array_split(ary, indices_or_sections, axis)

ValueError: array split does not result in an equal division

To avoid this error, we can use the array_split() method, which allows the sub-arrays to be of unequal size.

np.array_split(my_ar, 8)

[array([31, 32, 33, 34]),

array([35, 36, 37, 38]),

array([39, 40, 41, 42]),

array([43, 44, 45, 46]),

array([47, 48, 49, 50]),

array([51, 52, 53, 54]),

array([55, 56, 57]),

array([58, 59, 60])]
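
To see how array_split distributes the elements, we can look at the sizes of the pieces. The short sketch below reuses the 30-element array from above; the names parts and sizes are ours.

```python
import numpy as np

my_ar = np.arange(31, 61)          # 30 elements

parts = np.array_split(my_ar, 8)
sizes = [len(p) for p in parts]
print(sizes)                        # [4, 4, 4, 4, 4, 4, 3, 3]

# Joining the pieces back together recovers the original array.
print(np.array_equal(np.concatenate(parts), my_ar))   # True
```

The larger pieces come first, and the sizes differ by at most one.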

Instead of specifying the number of sub-arrays, we can pass the indices at which to split the array.

np.split(my_ar, [5,12,15])

[array([31, 32, 33, 34, 35]),

array([36, 37, 38, 39, 40, 41, 42]),

array([43, 44, 45]),

array([46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60])]

We can also use two additional methods:

hsplit: to divide the array horizontally (column-wise) at the specified indexes.

ar2d = my_ar.reshape(5,6)

ar2d

array([[31, 32, 33, 34, 35, 36],

[37, 38, 39, 40, 41, 42],

[43, 44, 45, 46, 47, 48],

[49, 50, 51, 52, 53, 54],

[55, 56, 57, 58, 59, 60]])

np.hsplit(ar2d, [2,4])

[array([[31, 32],

[37, 38],

[43, 44],

[49, 50],

[55, 56]]),

array([[33, 34],

[39, 40],

[45, 46],

[51, 52],

[57, 58]]),

array([[35, 36],

[41, 42],

[47, 48],

[53, 54],

[59, 60]])]

vsplit: to divide the array vertically (row-wise) at the specified indexes.

np.vsplit(ar2d, [1,4])

[array([[31, 32, 33, 34, 35, 36]]),

array([[37, 38, 39, 40, 41, 42],

[43, 44, 45, 46, 47, 48],

[49, 50, 51, 52, 53, 54]]),

array([[55, 56, 57, 58, 59, 60]])]

Stacking arrays.

Like the split method, we can stack (or combine) arrays. The three commonly used methods are:

Stack: as the name suggests, this method stacks arrays along a new axis. For multi-dimensional arrays, we can specify the axis along which to stack.

ar01 = np.ones((2,4)) * 5

ar01

array([[5., 5., 5., 5.],

[5., 5., 5., 5.]])

ar02 = np.ones((2,4))

ar02

array([[1., 1., 1., 1.],

[1., 1., 1., 1.]])

ar03 = np.ones((2,4))*3

ar03

array([[3., 3., 3., 3.],

[3., 3., 3., 3.]])

np.stack([ar01,ar02,ar03], axis = 0)

array([[[5., 5., 5., 5.],

[5., 5., 5., 5.]],

[[1., 1., 1., 1.],

[1., 1., 1., 1.]],

[[3., 3., 3., 3.],

[3., 3., 3., 3.]]])

np.stack([ar01,ar02,ar03], axis = 1)

array([[[5., 5., 5., 5.],

[1., 1., 1., 1.],

[3., 3., 3., 3.]],

[[5., 5., 5., 5.],

[1., 1., 1., 1.],

[3., 3., 3., 3.]]])

np.stack([ar01,ar02,ar03], axis = 2)

array([[[5., 1., 3.],

[5., 1., 3.],

[5., 1., 3.],

[5., 1., 3.]],

[[5., 1., 3.],

[5., 1., 3.],

[5., 1., 3.],

[5., 1., 3.]]])

hstack: this stacks the arrays horizontally and is analogous to the hsplit() method for splitting.

ar01 = np.arange(1,6)

ar01

array([1, 2, 3, 4, 5])

ar02 = np.arange(11,16)

ar02

array([11, 12, 13, 14, 15])

np.hstack([ar01,ar02])

array([ 1, 2, 3, 4, 5, 11, 12, 13, 14, 15])

np.hstack([ar01.reshape(-1,1), ar02.reshape(-1,1)])

array([[ 1, 11],

[ 2, 12],

[ 3, 13],

[ 4, 14],

[ 5, 15]])

vstack: this stacks the arrays vertically and is analogous to the vsplit method for splitting.

np.vstack([ar01,ar02])

array([[ 1, 2, 3, 4, 5],

[11, 12, 13, 14, 15]])

np.vstack([ar01.reshape(-1,1), ar02.reshape(-1,1)])

array([[ 1],

[ 2],

[ 3],

[ 4],

[ 5],

[11],

[12],

[13],

[14],

[15]])
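
The three stacking methods differ mainly in the shape of the result. The sketch below, using three (2, 4) arrays like the ones in the stack example, prints only the shapes so the difference is easy to compare. The names a, b and c are ours.

```python
import numpy as np

a = np.ones((2, 4)) * 5
b = np.ones((2, 4))
c = np.ones((2, 4)) * 3

# stack adds a new axis, so three (2, 4) arrays give a 3-D result.
print(np.stack([a, b, c], axis=0).shape)   # (3, 2, 4)
print(np.stack([a, b, c], axis=1).shape)   # (2, 3, 4)
print(np.stack([a, b, c], axis=2).shape)   # (2, 4, 3)

# hstack and vstack join along an existing axis of the 2-D inputs.
print(np.hstack([a, b, c]).shape)          # (2, 12)
print(np.vstack([a, b, c]).shape)          # (6, 4)
```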

Handling Missing Values

Handling missing values is a vital task in Data Science. Ideally, our data would have no missing values, but real-life data often does. Unlike the Pandas aggregation functions, which skip missing values by default, the NumPy aggregation functions propagate them: if one or more missing values (NaN) are encountered during an aggregation, the result is also NaN.

my_ar = np.array([1, np.nan, 3])

my_ar

array([ 1., nan, 3.])

my_ar.sum()

nan

my2dar = np.array([[1, np.nan, 3],[4,5,6]])

my2dar

array([[ 1., nan, 3.],

[ 4., 5., 6.]])

my2dar.sum(axis = 0)

array([ 5., nan, 9.])

my2dar.sum(axis = 1)

array([nan, 15.])

To calculate these aggregate values ignoring the missing values, we need to use the “NaN-Safe” functions. For example, the NaN-Safe version of sum — nansum() will calculate the sum of an array ignoring the missing values, nanmax() will calculate the maximum in an array ignoring the missing values, and so on.

np.nansum(my_ar)

4.0

np.nansum(my2dar, axis = 0)

array([5., 5., 9.])

np.nansum(my2dar, axis = 1)

array([ 4., 15.])
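
Other NaN-safe functions follow the same pattern. As a small sketch, we can count the missing values with a boolean mask from isnan and then compute the NaN-safe mean and maximum of the same two-dimensional array.

```python
import numpy as np

my2dar = np.array([[1, np.nan, 3], [4, 5, 6]])

# Count the missing values per column using a boolean mask.
missing_per_col = np.isnan(my2dar).sum(axis=0)
print(missing_per_col)      # one missing value, in the middle column

# NaN-safe aggregations skip the missing entries.
col_means = np.nanmean(my2dar, axis=0)
row_max = np.nanmax(my2dar, axis=1)
print(col_means)            # 2.5, 5.0 and 4.5
print(row_max)              # 3.0 and 6.0
```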

Sorting

Another common operation encountered in Data Science is sorting. NumPy has numerous sorting methods.

The basic sort method allows sorting in ascending order.

my_ar = np.random.randint(1,51, 10)

my_ar

array([48, 37, 45, 48, 31, 22, 5, 43, 2, 21])

np.sort(my_ar)

array([ 2, 5, 21, 22, 31, 37, 43, 45, 48, 48])

For multi-dimensional arrays, one can specify the axis to perform the sorting operation.

my2dar = my_ar.reshape(2,-1)

my2dar

array([[48, 37, 45, 48, 31],

[22, 5, 43, 2, 21]])

np.sort(my2dar, axis = 0)

array([[22, 5, 43, 2, 21],

[48, 37, 45, 48, 31]])

np.sort(my2dar, axis = 1)

array([[31, 37, 45, 48, 48],

[ 2, 5, 21, 22, 43]])

Note: the sort method has no option for sorting in descending order. To get a descending sort, one can reverse the sorted array, either with the flip method or with a slice that uses a negative step.

np.sort(my_ar)[::-1]

array([48, 48, 45, 43, 37, 31, 22, 21, 5, 2])

np.flip(np.sort(my_ar))

array([48, 48, 45, 43, 37, 31, 22, 21, 5, 2])

np.sort(my2dar, axis = 0)[::-1, :]

array([[48, 37, 45, 48, 31],

[22, 5, 43, 2, 21]])

np.sort(my2dar, axis = 1)[:, ::-1]

array([[48, 48, 45, 37, 31],

[43, 22, 21, 5, 2]])

NumPy also has indirect sorting methods. Instead of returning the sorted array, these methods return the indexes that would sort the array. Indexing the original array with these indexes gives the sorted array.

my_ar

array([48, 37, 45, 48, 31, 22, 5, 43, 2, 21])

np.argsort(my_ar)

array([8, 6, 9, 5, 4, 1, 7, 2, 0, 3], dtype=int64)

my_ar[np.argsort(my_ar)]

array([ 2, 5, 21, 22, 31, 37, 43, 45, 48, 48])

np.argsort(my2dar, axis = 0)

array([[1, 1, 1, 1, 1],

[0, 0, 0, 0, 0]], dtype=int64)

np.argsort(my2dar, axis = 1)

array([[4, 1, 2, 0, 3],

[3, 1, 4, 0, 2]], dtype=int64)

Another indirect sorting option is lexsort. The lexsort method sorts on multiple keys held in different arrays. Suppose there are two arrays: the first containing the ages of five persons and the second containing their heights. Note that lexsort treats the last key passed as the primary sort key. The call below therefore sorts on height first and uses age only to break ties. To sort on age and then height, we would pass the keys in the reverse order, as (height_ar, age_ar). The result is an array of indexes that reflects both sorting orders.

age_ar = np.random.randint(20,45,5)

age_ar

array([26, 31, 39, 33, 25])

height_ar = np.random.randint(160,185,5)

height_ar

array([180, 176, 174, 172, 177])

np.lexsort((age_ar, height_ar))

array([3, 2, 1, 4, 0], dtype=int64)
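
The key order in lexsort is easy to get wrong, so here is a small sketch with made-up data in which ages repeat and the tie-breaker actually matters. The arrays age and height are hypothetical and are not the ones generated above.

```python
import numpy as np

# Hypothetical data with repeated ages so that the tie-breaker matters.
age = np.array([30, 25, 30, 25])
height = np.array([170, 180, 165, 175])

# The last key (age) is the primary key; height breaks ties.
order = np.lexsort((height, age))
print(order)            # [3 1 2 0]
print(age[order])       # [25 25 30 30]
print(height[order])    # [175 180 165 170]
```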

Searching

Like sorting methods, NumPy provides multiple searching methods too. The most frequently used ones are:

argmax (and argmin): these return the index of the maximum value (or the minimum value). If the value occurs more than once, the index of its first occurrence is returned.

my_ar

array([48, 37, 45, 48, 31, 22, 5, 43, 2, 21])

np.argmax(my_ar)

0

np.argmin(my_ar)

8

my2dar

array([[48, 37, 45, 48, 31],

[22, 5, 43, 2, 21]])

np.argmax(my2dar, axis = 0)

array([0, 0, 0, 0, 0], dtype=int64)

np.argmax(my2dar, axis = 1)

array([0, 2], dtype=int64)

NaN-Safe versions of these methods, nanargmax and nanargmin, are also available. They disregard the missing values.

where: this returns the indexes of an array that satisfy the specified condition.

np.where(my_ar > 30)

(array([0, 1, 2, 3, 4, 7], dtype=int64),)

In addition, it also has the option of manipulating the output based on whether the element satisfies the condition or not. For example, if we want to return all the elements greater than 30 as positive numbers and the rest as negative numbers, we can achieve it in the following manner.

np.where(my_ar > 30, my_ar, -my_ar)

array([ 48, 37, 45, 48, 31, -22, -5, 43, -2, -21])

One can also perform these operations on multi-dimensional arrays.

np.where(my2dar > 30, my2dar, -my2dar)

array([[ 48, 37, 45, 48, 31],

[-22, -5, 43, -2, -21]])

argwhere: this returns the indices of the elements that satisfy the condition, with one row of indices per matching element.

np.argwhere(my_ar > 30)

array([[0],

[1],

[2],

[3],

[4],

[7]], dtype=int64)

np.argwhere(my2dar > 30)

array([[0, 0],

[0, 1],

[0, 2],

[0, 3],

[0, 4],

[1, 2]], dtype=int64)
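
The where and argwhere methods return the same indices in two different layouts. The sketch below, which rebuilds the two-dimensional array used above, shows how the output of where can be used directly to pick out the matching values. The names rows, cols and pairs are ours.

```python
import numpy as np

my2dar = np.array([[48, 37, 45, 48, 31],
                   [22,  5, 43,  2, 21]])

# where returns one index array per axis; together they can index the array.
rows, cols = np.where(my2dar > 30)
selected = my2dar[rows, cols]
print(selected)        # [48 37 45 48 31 43]

# argwhere holds the same indices, arranged as one (row, col) pair per match.
pairs = np.argwhere(my2dar > 30)
print(np.array_equal(pairs, np.column_stack((rows, cols))))   # True
```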

Broadcasting

One of the most powerful concepts in NumPy is broadcasting. The broadcasting feature in NumPy allows us to perform arithmetic operations on arrays of differing shapes under certain circumstances. In the previous part of this series, we saw how to perform arithmetic operations on two arrays of identical shapes, or on a scalar and an array. Broadcasting extends this concept to two arrays that are not of identical shape.

However, not all arrays are compatible with broadcasting. To check if two arrays are suited to broadcasting, NumPy compares their shapes dimension by dimension, starting from the trailing (rightmost) dimension and working towards the leading (leftmost) one. If one array has fewer dimensions, its missing dimensions are treated as having size one. The arrays are considered suitable for broadcasting if each pair of corresponding dimensions is

- Identical

- Or at least one of them is equal to one.
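
The compatibility check can be sketched in a few lines of Python. The helper can_broadcast below is hypothetical and written only for illustration; it is not part of NumPy. It compares two shapes from the rightmost dimension and treats a missing dimension as one.

```python
import numpy as np
from itertools import zip_longest

def can_broadcast(shape_a, shape_b):
    """Hypothetical helper: check two shapes for broadcasting compatibility."""
    # Walk both shapes from the rightmost dimension; a missing dimension counts as 1.
    for dim_a, dim_b in zip_longest(reversed(shape_a), reversed(shape_b), fillvalue=1):
        if dim_a != dim_b and dim_a != 1 and dim_b != 1:
            return False
    return True

print(can_broadcast((2, 4), (2, 1)))   # True
print(can_broadcast((7,), (4, 1)))     # True
print(can_broadcast((7, 1), (4, 1)))   # False

# Cross-check against NumPy itself for a compatible pair.
print(np.broadcast(np.empty((7,)), np.empty((4, 1))).shape)   # (4, 7)
```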

To illustrate the process, let us take a few examples.

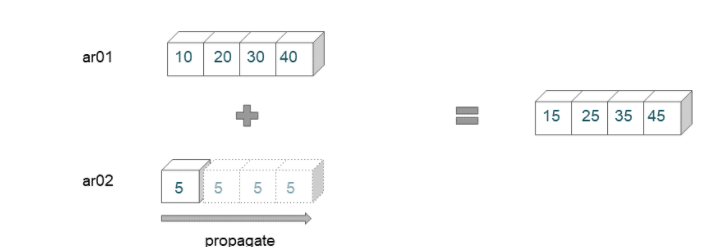

Broadcasting Example 01

ar01 = np.arange(10,50,10)

ar01

array([10, 20, 30, 40])

ar02 = 5

ar01 + ar02

array([15, 25, 35, 45])

Here we are trying to broadcast an array of shape (4,) with a scalar. The scalar value is propagated across the shape of the first array.

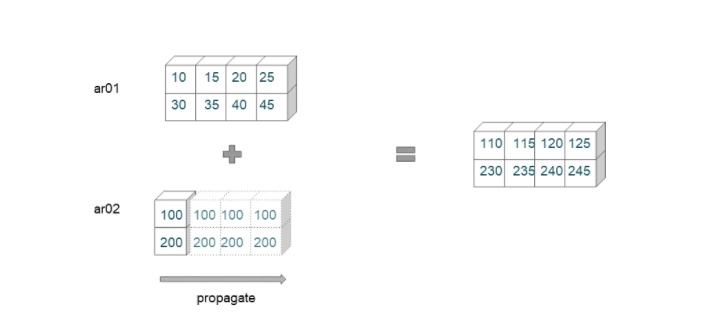

Broadcasting Example 02

ar01 = np.arange(10,50,5).reshape(2,-1)

ar01

array([[10, 15, 20, 25],

[30, 35, 40, 45]])

ar01.shape

(2, 4)

ar02 = np.array([[100],[200]])

ar02

array([[100],

[200]])

ar02.shape

(2, 1)

ar01 + ar02

array([[110, 115, 120, 125],

[230, 235, 240, 245]])

This example has two arrays of shape (2,4) and (2,1), respectively. The broadcasting rules are applied from the rightmost dimension.

- First, to match dimension 4 with dimension 1, the second array is extended.

- Then the program checks for the next dimension. Since these two dimensions are identical, no propagation is required.

- The first array and the propagated second arrays now have the same dimensions, and they can be added elementwise.

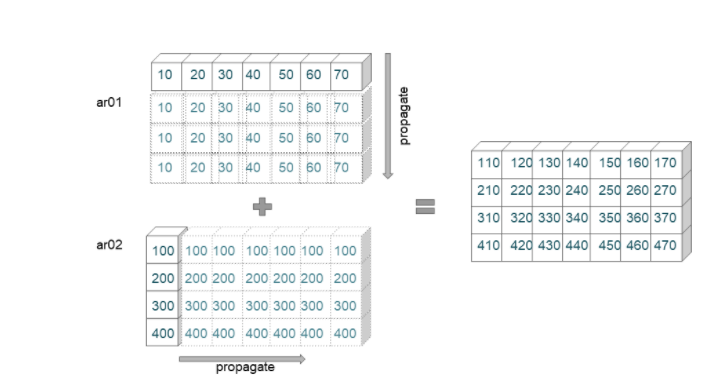

Broadcasting Example 03

ar01 = np.arange(10,80,10)

ar01

array([10, 20, 30, 40, 50, 60, 70])

ar01.shape

(7,)

ar02 = np.arange(100,500,100).reshape(-1,1)

ar02

array([[100],

[200],

[300],

[400]])

ar02.shape

(4, 1)

ar01 + ar02

array([[110, 120, 130, 140, 150, 160, 170],

[210, 220, 230, 240, 250, 260, 270],

[310, 320, 330, 340, 350, 360, 370],

[410, 420, 430, 440, 450, 460, 470]])

In this example, we are trying to add an array of shape (7,) with an array of shape (4,1). The broadcasting proceeds in the following manner.

- We first check the rightmost dimensions (7) and (1). Since the second array dimension is 1, it is extended to fit the size of the first array (7).

- Next, the remaining dimension of the second array (4) is checked. The first array has no corresponding dimension, so it is treated as 1, and the first array is extended to fit the size of the second array (4).

- Now the extended arrays are both of shape (4,7) and are added elementwise.

To finish off, we look at two arrays that are not suited to be broadcast together.

ar01 = np.arange(10,80,10).reshape(-1,1)

ar01

array([[10],

[20],

[30],

[40],

[50],

[60],

[70]])

ar01.shape

(7, 1)

ar02

array([[100],

[200],

[300],

[400]])

ar02.shape

(4, 1)

Here we are trying to add an array of shape (7,1) with an array of shape (4,1). We start with the rightmost dimension — both the arrays have 1 element each along this dimension. However, when we check the next dimensions (7) and (4), the dimensions are not compatible for broadcasting. Therefore, the program throws a ValueError.

ar01 + ar02

--------------------------------------------------------------------

ValueError Traceback (most recent call last)

~\AppData\Local\Temp/ipykernel_9040/1595926737.py in <module>

----> 1 ar01 + ar02

ValueError: operands could not be broadcast together with shapes (7,1) (4,1)
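
If the intention was to add every element of the first array to every element of the second, one possible fix is to transpose one of the arrays so that each pair of dimensions contains a one. This is a sketch under that assumption about the intent; the name result is ours.

```python
import numpy as np

ar01 = np.arange(10, 80, 10).reshape(-1, 1)      # shape (7, 1)
ar02 = np.arange(100, 500, 100).reshape(-1, 1)   # shape (4, 1)

# (7, 1) and (1, 4) are compatible: each pair of dimensions contains a 1.
result = ar01 + ar02.T
print(result.shape)    # (7, 4)
print(result[0])       # [110 210 310 410]
```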

Matrix Operations and Linear Algebra

NumPy provides numerous methods for performing matrix and linear algebra operations. We look at some of these.

Transposing a Matrix:

For a two-dimensional array, transposing refers to interchanging rows and columns. One can simply invoke the .T attribute of an ndarray to get the transposed matrix.

my2dar

array([[48, 37, 45, 48, 31],

[22, 5, 43, 2, 21]])

my2dar.T

array([[48, 22],

[37, 5],

[45, 43],

[48, 2],

[31, 21]])

For multi-dimensional arrays, we can use the transpose() method and specify the order in which the axes should appear in the result.
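
As a short sketch of this, consider a three-dimensional array. The array ar3d is made up for illustration. The .T attribute reverses all the axes, while transpose with an explicit tuple rearranges only the axes we choose.

```python
import numpy as np

ar3d = np.arange(24).reshape(2, 3, 4)

# .T reverses the order of all axes.
print(ar3d.T.shape)                               # (4, 3, 2)

# transpose with an explicit axes tuple swaps only the first two axes here.
swapped = np.transpose(ar3d, axes=(1, 0, 2))
print(swapped.shape)                              # (3, 2, 4)
```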

Determinant of a matrix:

For square matrices, we can calculate the determinant. Determinants have numerous applications in higher mathematics and Data Science. They are used in solving a system of linear equations using Cramer’s Rule and in the calculation of eigenvalues, which are used in Principal Component Analysis, among others. To find the determinant of a square matrix, we can invoke the det() method in the linalg sub-module of NumPy. The result is computed in floating-point arithmetic, so it may differ very slightly from the exact value.

sq_ar = np.random.randint(10,50,9).reshape(3,3)

sq_ar

array([[34, 42, 34],

[12, 16, 33],

[22, 33, 12]])

np.linalg.det(sq_ar)

-4558.000000000001

sq_ar2 = np.array([[12,15], [18,10]])

sq_ar2

array([[12, 15],

[18, 10]])

np.linalg.det(sq_ar2)

-149.99999999999997

Matrix Multiplication:

Multiplying two matrices forms the basis of numerous applications in higher mathematics and Data Science. The matmul() function implements the matrix multiplication feature in NumPy. To illustrate matrix multiplication, we multiply a matrix with the identity matrix created using the eye() method. We should get the original matrix as the resultant product.

sq_ar

array([[34, 42, 34],

[12, 16, 33],

[22, 33, 12]])

np.matmul(sq_ar, np.eye(3,3))

array([[34., 42., 34.],

[12., 16., 33.],

[22., 33., 12.]])

sq_ar2

array([[12, 15],

[18, 10]])

np.matmul(sq_ar2, np.eye(2,2))

array([[12., 15.],

[18., 10.]])
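
Multiplying by the identity matrix does not show much of the arithmetic, so here is a small sketch with two made-up matrices a and b. It also shows the @ operator, which performs the same matrix product as matmul, and contrasts it with the element-wise * operator.

```python
import numpy as np

a = np.array([[1, 2], [3, 4]])
b = np.array([[5, 6], [7, 8]])

# Matrix product: rows of a combined with columns of b.
product = np.matmul(a, b)
print(product)           # rows [19, 22] and [43, 50]
print(np.array_equal(a @ b, product))   # True

# The * operator multiplies element by element instead.
elementwise = a * b
print(elementwise)       # rows [5, 12] and [21, 32]
```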

Inverse of a matrix:

For a square matrix M, the inverse M⁻¹ is defined as the matrix such that M M⁻¹ = Iₙ, where Iₙ denotes the n-by-n identity matrix. The inverse exists only when the determinant of M is non-zero. We can find the inverse of a matrix by using the inv() method of the linalg sub-module in NumPy.

inv2 = np.linalg.inv(sq_ar2)

inv2

array([[-0.06666667, 0.1 ],

[ 0.12 , -0.08 ]])

We can verify that the product of a matrix and its inverse equals the identity matrix, up to floating-point rounding.

np.matmul(sq_ar2, inv2)

array([[ 1.00000000e+00, -8.32667268e-17],

[ 0.00000000e+00, 1.00000000e+00]])

Equality of NumPy arrays.

Because of floating-point rounding, computed results are rarely exactly equal to the expected values. Instead of testing for exact equality, we can check whether two NumPy arrays are element-wise equal within a given tolerance. This can be done using the allclose method.

np.allclose(np.eye(2,2), np.matmul(sq_ar2, inv2))

True

Solver.

The linalg module of NumPy has the solve method, which computes the solution of a well-determined system of linear equations, that is, one with a square coefficient matrix of full rank.

Solving Linear Equations

x1 + x2 = 10

2x1 + 5x2 = 41

This should give x1 = 3 and x2 = 7

coeff_ar = np.array([[1,1],[2,5]])

ord_ar = np.array([[10],[41]])

np.linalg.solve(coeff_ar, ord_ar)

array([[3.],

[7.]])
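
A quick way to check a solution is to substitute it back into the equations. The following sketch does this with the same coefficients; the name solution is ours.

```python
import numpy as np

coeff_ar = np.array([[1, 1], [2, 5]])
ord_ar = np.array([[10], [41]])

solution = np.linalg.solve(coeff_ar, ord_ar)

# Substituting the solution back should reproduce the right-hand side.
print(np.allclose(coeff_ar @ solution, ord_ar))   # True
```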

Polynomials

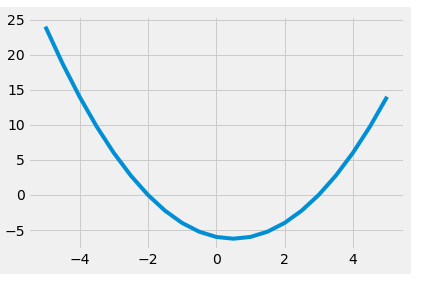

Like the linear algebra sub-module, NumPy also has a sub-module for polynomial algebra. It offers several functions as well as the Polynomial class, which supports the usual arithmetic operations. Let us look at some of the functionality of the polynomial sub-module. To illustrate this, we use the polynomial f(x) defined as

f(x) = x² - x - 6

We can create the polynomial from the coefficients. Note that the coefficients must be listed in increasing order of the degree. So the coefficient of the constant term (-6) is listed first, then the coefficient of x (-1), and finally the coefficient of x² (1).

from numpy.polynomial.polynomial import Polynomial

poly01 = Polynomial([-6,-1,1])

poly01

x → -6.0-1.0x+1.0x²

The expression can also be factorized as

f(x) = (x-3)(x+2)

Here 3 and -2 are the roots of equation f(x) = 0. We can find the roots of a polynomial by invoking the roots() method.

poly01.roots()

array([-2., 3.])

We can form the equation from the roots using the fromroots() method.

poly02 = Polynomial.fromroots([-2,3])

poly02

x → -6.0-1.0x+1.0x²
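
A Polynomial object can also be evaluated and combined with other polynomials. The sketch below evaluates f(x) at a root, multiplies the two factors (x-3) and (x+2) to recover the original coefficients, and takes the derivative. The names product and derivative are ours.

```python
import numpy as np
from numpy.polynomial.polynomial import Polynomial

poly01 = Polynomial([-6, -1, 1])        # x² - x - 6

# Calling the object evaluates the polynomial.
print(poly01(3))                         # 0.0, since 3 is a root
print(poly01(0))                         # -6.0, the constant term

# Multiplying the two factors reproduces the original coefficients.
product = Polynomial([-3, 1]) * Polynomial([2, 1])   # (x - 3)(x + 2)
print(product.coef)                      # -6, -1 and 1

# deriv() returns the derivative, here 2x - 1.
derivative = poly01.deriv()
print(derivative.coef)                   # -1 and 2
```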

The polynomial module also contains the linspace() method that can be used to create equally spaced pairs of x and f(x) across the domain. This can be used to plot the graph conveniently.

import matplotlib.pyplot as plt

polyx, polyy = poly01.linspace(n = 21, domain = [-5,5])

plt.plot(polyx, polyy)

[<matplotlib.lines.Line2D at 0x26006fd2e80>]

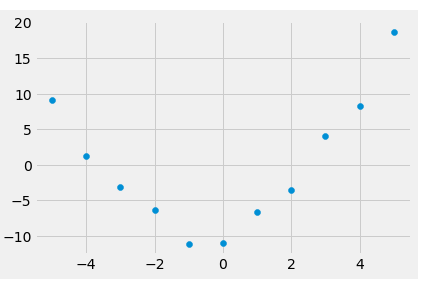

Curve Fitting

The NumPy polynomial sub-module also provides a least-squares fit of a polynomial to data. To illustrate this, let us create a set of data points by adding some random noise to the values of a polynomial expression.

numpoints = 11

x_vals = np.linspace(-5,5,numpoints)

y_vals = x_vals**2 + x_vals - 12 + np.random.rand(numpoints)*4

plt.scatter(x_vals, y_vals)

<matplotlib.collections.PathCollection at 0x26008086940>

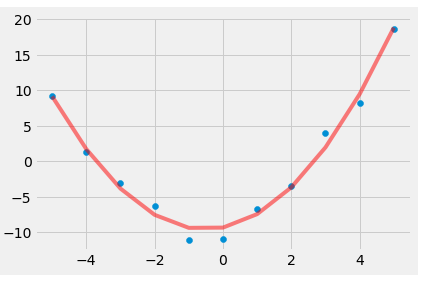

We then invoke the polyfit() method on these points and find the coefficients of the fitted polynomial.

from numpy.polynomial import polynomial

fit_pol = polynomial.polyfit(x_vals, y_vals, 2)

fit_pol

array([-9.35761397, 0.96843091, 0.93303665])

We can also visually verify the fit by plotting the values. To do this we use the polyval method to evaluate the polynomial function at these points.

fit_y = polynomial.polyval(x_vals, fit_pol)

plt.scatter(x_vals, y_vals)

plt.plot(x_vals, fit_y, c = 'Red', alpha = 0.5 )

[<matplotlib.lines.Line2D at 0x26008102c10>]
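
To check that the fitting routine recovers known coefficients, we can repeat the fit on noise-free data. This is a sketch and not part of the original notebook. It also shows the Polynomial.fit class method, which fits on a scaled domain, so we call convert() to get the coefficients in terms of plain x.

```python
import numpy as np
from numpy.polynomial import polynomial
from numpy.polynomial.polynomial import Polynomial

x_vals = np.linspace(-5, 5, 11)
y_vals = x_vals**2 + x_vals - 12        # noise-free, so the fit should be exact

# polyfit returns coefficients in increasing order of degree.
coefs = polynomial.polyfit(x_vals, y_vals, 2)
print(np.allclose(coefs, [-12, 1, 1]))              # True

# Polynomial.fit works on a scaled domain; convert() maps back to plain x.
fitted = Polynomial.fit(x_vals, y_vals, 2).convert()
print(np.allclose(fitted.coef, [-12, 1, 1]))        # True
```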

Importing and Exporting Data in NumPy

Till now, we have created arrays on the fly. In real-life Data Science scenarios, we usually have the data available to us. NumPy supports importing data from a file and exporting NumPy arrays to an external file. We can use the save and load methods to export and import data in the native NumPy formats (.npy and .npz).

save

with open("numpyfile.npy", "wb") as f:

    np.save(f, np.arange(1,11).reshape(2,-1))

load

with open("numpyfile.npy", "rb") as f:

    out_ar = np.load(f)

out_ar

array([[ 1, 2, 3, 4, 5],

[ 6, 7, 8, 9, 10]])
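
The save method writes a single array to a .npy file. To store several arrays in one .npz file, we can use the savez method. The following is a minimal sketch; the file name and the array names features and labels are hypothetical, and a temporary directory is used so that no files are left behind.

```python
import os
import tempfile
import numpy as np

features = np.arange(1, 11).reshape(2, -1)
labels = np.array([0, 1])

# Write to a temporary directory so the example leaves no files behind.
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "arrays.npz")   # hypothetical file name
    np.savez(path, features=features, labels=labels)

    # load returns a dictionary-like object keyed by the names used in savez.
    with np.load(path) as data:
        loaded_features = data["features"]
        loaded_labels = data["labels"]

print(np.array_equal(loaded_features, features))   # True
print(np.array_equal(loaded_labels, labels))       # True
```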

We can also read from and write data to text files using the loadtxt and savetxt methods.

savetxt

np.savetxt("nptxt.csv", np.arange(101,121).reshape(4,-1))

loadtxt

np.loadtxt("nptxt.csv")

array([[101., 102., 103., 104., 105.],

[106., 107., 108., 109., 110.],

[111., 112., 113., 114., 115.],

[116., 117., 118., 119., 120.]])
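
By default, savetxt separates the values with spaces and writes them in floating-point notation, which is why the integers come back as floats above. To write a comma-separated file of integers, we can pass the delimiter and fmt arguments. This is a sketch with a hypothetical file name, again written to a temporary directory.

```python
import os
import tempfile
import numpy as np

table = np.arange(101, 121).reshape(4, -1)

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "table.csv")    # hypothetical file name
    # Write integers separated by commas instead of the default spaces.
    np.savetxt(path, table, delimiter=",", fmt="%d")
    # The same delimiter must be given when reading the file back.
    restored = np.loadtxt(path, delimiter=",", dtype=int)

print(np.array_equal(restored, table))   # True
```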

Conclusion

In this series, we looked at NumPy which is the fundamental library in the Python Data Science ecosystem for scientific computing. If you are familiar with Pandas, then moving to NumPy is very easy. Comfort with NumPy is expected from an aspiring Data Scientist or Data Analyst proclaiming proficiency in Python.

As with any skill, all one needs to master NumPy is patience, persistence, and practice. You can try these and many other data science interview problems from actual data science interviews on StrataScratch. Join a community of over 40,000 like-minded data science aspirants. You can practice over 1,000 coding and non-coding problems of various difficulty levels. Join StrataScratch today and make your dream of working at top tech companies like Apple, Netflix, Microsoft, or the hottest start-ups like Noom, Doordash, et al. a reality. All the code examples are available on Github here.

Originally published at https://www.stratascratch.com.

NumPy for Data Science Interviews: Part 02 was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
