Leveraging zero-shot learning for text summarisation with Hugging Face’s Pipeline API

What is this about?

This is the second part of a tutorial on setting up a text summarisation project. For more context and an overview of this tutorial, please refer back to the introduction as well as part 1 in which we created a baseline for our project.

In this blog post we will leverage zero-shot learning (ZSL), which means we will use a model that has been trained to summarise text but hasn’t seen any examples from the arXiv dataset. It’s a bit like trying to paint a portrait when all you have been doing in your life is landscape painting. You know how to paint, but you might not be too familiar with the intricacies of portrait painting.

The code for the entire tutorial can be found in this GitHub repo. For today’s part, we will use this notebook in particular.

Why Zero-Shot Learning (ZSL)?

ZSL has become popular in recent years because it lets us use state-of-the-art NLP models without any training. Their performance is sometimes quite astonishing: the BigScience Research Workshop has recently released its T0pp (pronounced “T Zero Plus Plus”) model, which has been trained specifically for research on zero-shot multitask learning. It can often outperform models 6x larger on the BIG-bench benchmark, and can outperform the 16x larger GPT-3 on several other NLP benchmarks.

Another benefit of ZSL is that it takes literally two lines of code to use. Simply by trying it out, we can create a second baseline, which we can later use to quantify the gain in model performance once we fine-tune the model on our dataset.

Setting up a zero-shot learning pipeline

To leverage ZSL models we can use Hugging Face’s Pipeline API. This API enables us to use a text summarisation model with just two lines of code while it takes care of the main processing steps in an NLP model:

- The text is preprocessed into a format the model can understand.

- The preprocessed inputs are passed to the model.

- The predictions of the model are post-processed, so you can make sense of them.
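To make these three steps concrete, here is a minimal sketch of roughly what the pipeline does for a single text, written by hand with the transformers `AutoTokenizer` and `AutoModelForSeq2SeqLM` classes. It assumes transformers and PyTorch are installed; the function name `summarise_step_by_step` is hypothetical, and the real pipeline handles more details (batching, devices, model-specific defaults) than shown here.

```python
def summarise_step_by_step(text, model_name="sshleifer/distilbart-cnn-12-6",
                           min_length=5, max_length=20):
    """Hypothetical helper: spell out the pipeline's three steps by hand."""
    # Imported inside the function so this file can be loaded without transformers.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)

    # 1. Preprocess: turn raw text into token IDs (truncated to the model's input limit).
    inputs = tokenizer(text, truncation=True, return_tensors="pt")

    # 2. Pass the preprocessed inputs to the model; generate() produces summary token IDs.
    output_ids = model.generate(**inputs, min_length=min_length, max_length=max_length)

    # 3. Post-process: decode the token IDs back into a readable string.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

The pipeline bundles exactly this kind of boilerplate, which is why using it directly is so much shorter.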

The pipeline uses summarisation models that are already available on the Hugging Face model hub.

So, here’s how to use it:

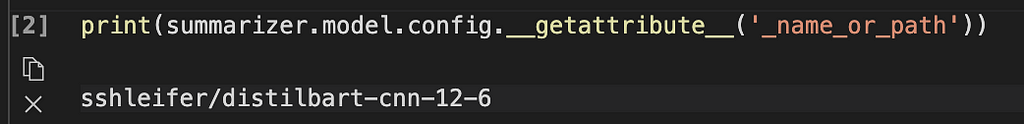
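In case the image does not render, a minimal text version of this kind of usage looks like the sketch below. It assumes the transformers library is installed; the wrapper name `summarise` is hypothetical, and the exact code in the image may differ.

```python
def summarise(text):
    """Hypothetical wrapper around the two lines that create and call the pipeline."""
    from transformers import pipeline  # lazy import: the module loads even without transformers

    summarizer = pipeline("summarization")  # downloads the default summarisation model on first use
    return summarizer(text)[0]["summary_text"]  # the pipeline returns a list of dicts
```

In practice you would create `summarizer` once and reuse it for many texts rather than rebuilding it on every call.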

That’s it, believe it or not. This code will download a summarisation model and create summaries locally on your machine. In case you’re wondering which model it uses, you can either look it up in the source code or use this command:
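One possible way to do this, assuming a recent version of transformers, is to read the model name from the configuration of the model behind the pipeline (`config.name_or_path`). The helper name `model_name_of` is hypothetical:

```python
def model_name_of(summarizer):
    """Return the Hub identifier of the model behind a Hugging Face pipeline."""
    # A pipeline exposes its model; the model's config stores the name it was loaded from.
    return summarizer.model.config.name_or_path
```

For example, after `from transformers import pipeline`, calling `model_name_of(pipeline("summarization"))` prints the name of the default model.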

When we run this command, we see that the default model for text summarisation is called sshleifer/distilbart-cnn-12-6.

We can find the model card for this model on the Hugging Face website, where we can also see that the model has been trained on two datasets: the CNN/DailyMail dataset and the Extreme Summarization (XSum) dataset. It is worth noting that this model has never seen the arXiv dataset and is intended for summarising texts similar to the ones it has been trained on (mostly news articles). The numbers 12 and 6 in the model name refer to the number of encoder layers and decoder layers, respectively. Explaining what these are is outside the scope of this tutorial, but you can read more about it in this blog post by Sam Shleifer, who created the model.
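If you want to check the layer counts without reading the model card, they are stored in the model’s configuration. The sketch below assumes a BART-style config, which uses the attribute names `encoder_layers` and `decoder_layers` (other architectures may name them differently); both helper names are hypothetical.

```python
def layer_counts(config):
    """Return (encoder_layers, decoder_layers) from a BART-style model config."""
    return config.encoder_layers, config.decoder_layers


def layer_counts_from_hub(model_name="sshleifer/distilbart-cnn-12-6"):
    """Load only the model's config from the Hub and report its layer counts."""
    from transformers import AutoConfig  # lazy import; fetches the small config file, not the weights

    return layer_counts(AutoConfig.from_pretrained(model_name))
```

Given the naming convention described above, `layer_counts_from_hub()` should report 12 encoder layers and 6 decoder layers.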

We will use the default model going forward, but I encourage you to try out different pre-trained models. All the models that are suitable for summarisation can be found here. To use a different model you can specify the model name when calling the Pipeline API:
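As an illustration, the sketch below swaps in `facebook/bart-large-cnn`, a larger BART model on the Hub fine-tuned on CNN/DailyMail. The choice of model and the helper name `build_summarizer` are purely illustrative.

```python
def build_summarizer(model_name="facebook/bart-large-cnn"):
    """Hypothetical helper: create a summarisation pipeline for a specific Hub model."""
    from transformers import pipeline  # lazy import so the file loads without transformers

    # Passing `model=` overrides the default summarisation model.
    return pipeline("summarization", model=model_name)
```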

Sidebar: Extractive vs abstractive summarisation

We haven’t yet discussed two different approaches to text summarisation: extractive and abstractive. Extractive summarisation is the strategy of concatenating extracts taken from a text into a summary, while abstractive summarisation involves paraphrasing the source text in novel sentences. Most summarisation models are built on models that generate novel text (Natural Language Generation models, such as GPT-3). As a result, these summarisation models also generate novel text, which makes them abstractive summarisation models.
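To make the distinction concrete, here is a deliberately simple extractive summariser in plain Python: it scores each sentence by the average frequency of its words across the whole text and returns the top-scoring sentences verbatim, in their original order. It is a toy for illustration (naive sentence splitting, no stop-word handling) and not a method used in this tutorial; an abstractive model would instead write new sentences.

```python
import re
from collections import Counter


def extractive_summary(text, num_sentences=1):
    """Toy extractive summariser: return the highest-scoring sentences verbatim."""
    # Naive sentence split on ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(re.findall(r"\w+", text.lower()))

    def score(sentence):
        tokens = re.findall(r"\w+", sentence.lower())
        # Average word frequency, so long sentences are not automatically favoured.
        return sum(freq[t] for t in tokens) / max(len(tokens), 1)

    # Pick the top sentences, then restore their original order.
    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:num_sentences])
    return " ".join(sentences[i] for i in chosen)
```

Every sentence in the output appears word for word in the input, which is the defining property of extractive summarisation.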

Generating zero-shot summaries

Now that we know how to use the pipeline, we want to apply it to our test dataset, the same dataset we used in part 1 to create the baseline. We can do that with this loop:
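A sketch of such a loop (not necessarily identical to the notebook’s code) could look like this, assuming `summarizer` is a summarisation pipeline and `abstracts` is a list of abstract strings from the test set. The function name is hypothetical; the pipeline returns a list with one dictionary per input, whose `summary_text` key holds the generated text.

```python
def generate_summaries(summarizer, abstracts, min_length=5, max_length=20):
    """Run a summarisation pipeline over each abstract and collect the generated titles."""
    candidates = []
    for abstract in abstracts:
        # Length limits are forwarded to the model's text generation.
        result = summarizer(abstract, min_length=min_length, max_length=max_length)
        candidates.append(result[0]["summary_text"])
    return candidates
```

Because the summariser is passed in as an argument, the same loop works with any model, which makes it easy to swap models later.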

Note that we use the min_length and max_length parameters to control the length of the summary the model generates. Both are measured in tokens rather than words, so they only approximate word counts. In this example we set min_length to 5 because we want the title to be at least about 5 words long. By eye-balling the reference summaries (i.e. the actual titles of the research papers), 20 looks like a reasonable value for max_length. But again, this is just a first attempt: once the project is in the experimentation phase, these two parameters can and should be varied to see how they affect model performance.
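One way to make the eye-balling a little more systematic is to look at the length distribution of the reference titles. The sketch below counts whitespace-separated words, which only approximates the token counts that min_length and max_length use; the function name and the 95th-percentile default are illustrative assumptions.

```python
def length_percentile(titles, percentile=95):
    """Return the word count that covers roughly `percentile`% of the titles."""
    lengths = sorted(len(title.split()) for title in titles)
    # Nearest-rank percentile: ceil(percentile * n / 100), at least 1.
    rank = max(1, -(-percentile * len(lengths) // 100))
    return lengths[rank - 1]
```

To work in tokens instead, you could count `len(tokenizer(title)["input_ids"])` with the model’s own tokenizer.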

Sidebar: Beam search, sampling, etc.

If you’re already familiar with text generation, you might know there are many more parameters that influence the text a model generates, such as beam search, sampling, and temperature. These parameters give you more control over the generated text, for example to make it more fluent or less repetitive. In this tutorial we only set min_length and max_length in the pipeline calls; note, however, that recent versions of the transformers library forward additional keyword arguments from the summarisation pipeline to the model’s generate method, so check the documentation for the version you have installed. Once we train and deploy our own model, we will have full control over those parameters. More on that in part 4 of this series.
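To give a feel for one of these knobs, the toy sketch below (plain Python, with made-up scores) shows how temperature reshapes the probability distribution a model samples its next token from: a low temperature concentrates probability on the most likely token, approaching greedy decoding, while a high temperature flattens the distribution. It illustrates the idea only and is not the transformers implementation.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores into probabilities, sharpened (T < 1) or flattened (T > 1)."""
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - largest) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(tokens, logits, temperature=1.0, rng=random):
    """Sampling: draw one token according to the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


def greedy_next_token(tokens, logits):
    """Greedy decoding: always pick the highest-scoring token."""
    return tokens[max(range(len(tokens)), key=lambda i: logits[i])]
```

Beam search takes a different route: instead of sampling, it keeps several of the highest-scoring partial sequences at each step and picks the best complete one at the end.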

Model evaluation

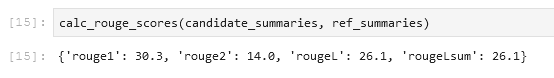

Once we have generated the zero-shot summaries, we can use our ROUGE function again to compare the candidate summaries with the reference summaries:

Running this calculation on the summaries that were generated with the ZSL model, we get the following results:

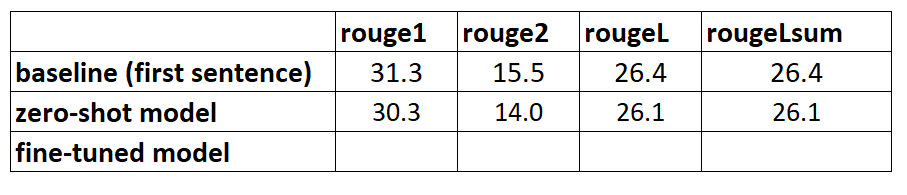

When we compare those with our baseline from part 1, we see that this ZSL model actually performs worse than our simple heuristic of just taking the first sentence. Again, this is not unexpected: while this model knows how to summarise news articles, it has never seen an example of summarising the abstract of an academic research paper.
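For reference, a heuristic of this kind can be sketched in a few lines: take the first sentence of each abstract as its predicted title. The naive sentence detection below (the first ., ! or ? followed by whitespace or the end of the text) is an assumption for illustration and may differ from the part 1 implementation; it will, for example, stop early at abbreviations such as "e.g.".

```python
import re


def first_sentence(text):
    """Return the text up to and including the first sentence-ending punctuation mark."""
    match = re.search(r"^.*?[.!?](?=\s|$)", text.strip(), flags=re.DOTALL)
    return match.group(0) if match else text.strip()


def first_sentence_baseline(abstracts):
    """Predict each title as the first sentence of its abstract."""
    return [first_sentence(abstract) for abstract in abstracts]
```

Its output can be scored with the same ROUGE computation as the model’s summaries, which is what makes the two baselines directly comparable.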

Conclusion

We have now created two baselines, one using a simple heuristic and one using a ZSL model. By comparing the ROUGE scores, we see that the simple heuristic currently outperforms the deep learning model.

In the next part we will take this very same deep learning model and try to improve its performance. We will do so by training it on the arXiv dataset (a step called fine-tuning): we leverage the fact that it already knows how to summarise text in general and then show it lots of examples from our arXiv dataset. Deep learning models are very good at identifying patterns in the data they are trained on, so we expect the model to get better at this particular task.

Setting up a Text Summarisation Project (Part 2) was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
