
A platform built on top of Knative, ideal for both serving and eventing architectures

Serverless has been under discussion for quite some time now, and it is here to stay. Moving infrastructure management away from developers frees up their time and effort for building and improving the product, and leads to faster delivery to market.

Cloud Run lets you run containerised applications on a fully managed serverless platform. In this post, I will walk you through the following:

a. Background

b. Steps for deploying your application

c. Overview of a sample app to showcase serving and eventing

Let’s dive in then!

a. Background

Cloud Run is built on top of Knative, a serverless framework that aims to provide the building blocks for running serverless applications on Kubernetes, rather than being a full-blown solution by itself. If you have used k8s, or even a managed version of it, you know it takes some (read: a lot of!) time to get the hang of it. As you will see with the sample application in this post, Cloud Run takes most of this management off your hands.

Interested in a more holistic understanding of when you should use Cloud Run? Head to this blog.

P.S. If you are a student, or someone planning to host a portfolio somewhere, I would really recommend giving Cloud Run a try. 👩🏻‍💻

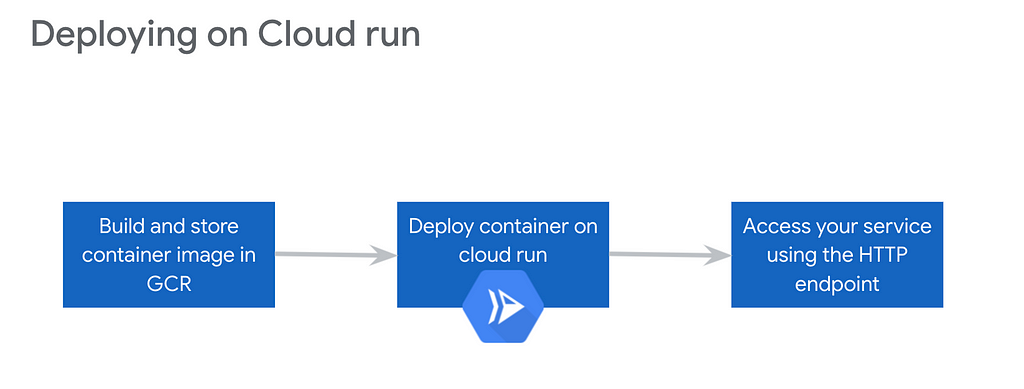

b. The 3 simple (magical?) steps

- Build — Package your application

The sample app I am using here is available on GitHub. You can clone the repo to get started and experiment with the source code.

Now, assuming you have your application code ready, note that it contains a Dockerfile. We will use this file to build a container image, which will be stored in Container Registry. Cloud Run deploys your service from this image and starts new instances from it whenever it needs to scale out. Under the hood this is a lot of work, but for you this step only means changing into the source code folder that contains your Dockerfile and running this command:

gcloud builds submit --tag gcr.io/project-id/image-name .

When you deploy the service in the next step, you will be asked whether you want to allow public (unauthenticated) invocation of its HTTP endpoint. Answer according to your needs; you can change this later through the Cloud Run console.

Recommended: this command and its parameters are explained here, and the same documentation gives a thorough walkthrough of what happens during the build step.

- Deploy

To deploy your app, simply run:

gcloud run deploy

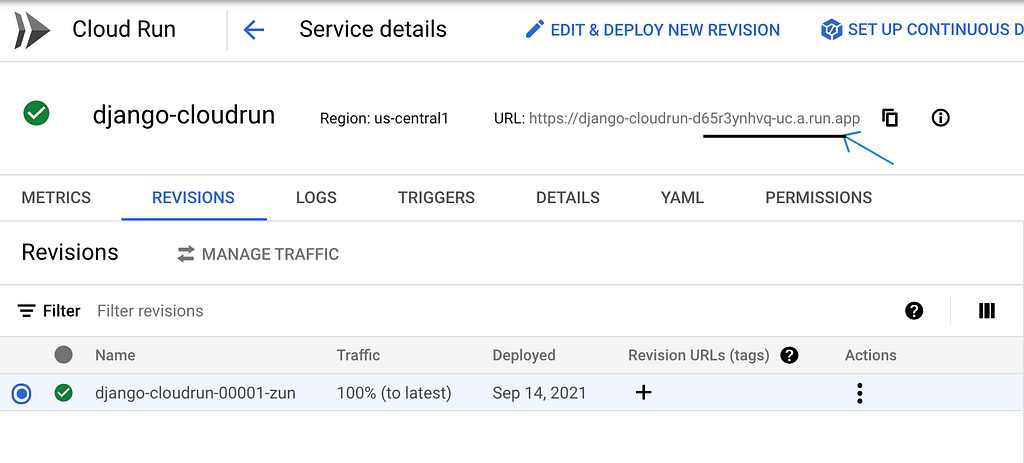

This step takes some time, and you can follow the progress as your service is deployed from the image and the new revision starts serving traffic. If the deployment succeeds, the final output shows what percentage of traffic the deployed revision is serving and the URL you can use to access the service. A failed deployment returns an error with a URL to the logs, which you can use to debug the problem.
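The build and deploy steps can also be chained in a small script, for example in a CI job. This is a minimal sketch, assuming Python 3.8+ and an installed, authenticated gcloud CLI. The helper names `build_and_deploy_commands` and `run`, and the default region, are hypothetical choices of this sketch; the gcloud commands are the ones used in this post, plus the real `--region` flag so that the deploy does not prompt for a region.

```python
import shlex
import subprocess


def build_and_deploy_commands(project_id, image_name, service_name, region="us-central1"):
    """Return the build and deploy gcloud commands as argument lists.

    All arguments are placeholders you supply; the default region is only an example.
    """
    image = f"gcr.io/{project_id}/{image_name}"
    # Build the image from the Dockerfile in the current folder and push it.
    build = ["gcloud", "builds", "submit", "--tag", image, "."]
    # Deploy exactly the image that was just built.
    deploy = ["gcloud", "run", "deploy", service_name,
              "--image", image, "--region", region]
    return build, deploy


def run(commands, dry_run=True):
    """Print the commands (dry run) or execute them in order."""
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))
        else:
            # check=True raises CalledProcessError, so a failed build stops the deploy.
            subprocess.run(cmd, check=True)
```

Calling `run(build_and_deploy_commands("my-project", "my-app", "my-service"), dry_run=False)` from the folder containing the Dockerfile would build and then deploy; the default dry run only prints the commands so you can check them first.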

If you want to deploy from an already existing container image instead, use this command:

gcloud run deploy <service-name> --image <image-url>

- Accessing service

Once the deployment succeeds, you can access your service at the service URL printed by the last command (you can also find it in the console):

curl <service_url> -H "Authorization: Bearer $(gcloud auth print-identity-token)"

The identity token carries information about you, the signed-in gcloud user, and is required only when you have not allowed public invocation of your service.
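The same authenticated call can be made from Python. This is a minimal sketch using only the standard library, assuming the gcloud CLI is installed and signed in; `identity_token` and `authenticated_request` are hypothetical helper names. It fetches the token with the same `gcloud auth print-identity-token` command as the curl example and sends it as a Bearer credential.

```python
import subprocess
import urllib.request


def identity_token():
    """Fetch an identity token for the signed-in gcloud user."""
    out = subprocess.run(
        ["gcloud", "auth", "print-identity-token"],
        check=True, capture_output=True, text=True,
    )
    return out.stdout.strip()


def authenticated_request(service_url, token):
    """Build a GET request that carries the token in the Authorization header."""
    return urllib.request.Request(
        service_url, headers={"Authorization": f"Bearer {token}"}
    )
```

For example, `urllib.request.urlopen(authenticated_request(service_url, identity_token()))` returns the service's response, where `service_url` is the URL printed by the deploy command. For a service that allows public invocation, the header is simply not needed.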

c. Overview — Serving and Eventing

For many people, serverless means Functions-as-a-Service (FaaS). So the question sometimes is: when we can use ‘Cloud Functions’, GCP’s FaaS offering, directly for event-driven architectures, why Cloud Run?

For me, the reasons are:

- Containers as the abstraction, which can be moved across platforms with little to no code change

- The ability to use any language, runtime, or binary

- The option to try out advanced capabilities of Kubernetes deployments, such as traffic splitting and canary deployments, without having to add a service mesh like Istio

You can do all of this right from the console UI, or with the gcloud command line if you prefer the terminal.
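As an example of the command-line route, a canary release that sends 10% of traffic to a new revision can be expressed with `gcloud run services update-traffic` and its `--to-revisions` flag, which takes `REVISION=PERCENT` pairs. This is a minimal sketch that only builds the command; the function name and the revision names used in the usage line are hypothetical, and requiring the percentages to add up to 100 is this sketch's own sanity check.

```python
def update_traffic_command(service_name, split):
    """Build a gcloud command that splits traffic between revisions.

    split maps revision names to integer percentages, e.g. {"rev-a": 90, "rev-b": 10}.
    """
    for revision, pct in split.items():
        if not isinstance(pct, int) or not 0 <= pct <= 100:
            raise ValueError(f"bad percentage for {revision}: {pct!r}")
    # Sketch-level check: the listed revisions should account for all traffic.
    if sum(split.values()) != 100:
        raise ValueError("percentages must add up to 100")
    spec = ",".join(f"{rev}={pct}" for rev, pct in split.items())
    return ["gcloud", "run", "services", "update-traffic", service_name,
            f"--to-revisions={spec}"]
```

For example, `update_traffic_command("my-service", {"my-service-00001-abc": 90, "my-service-00002-def": 10})` produces a command that keeps 90% of requests on the old revision and routes 10% to the new one; the result can be passed to `subprocess.run`.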

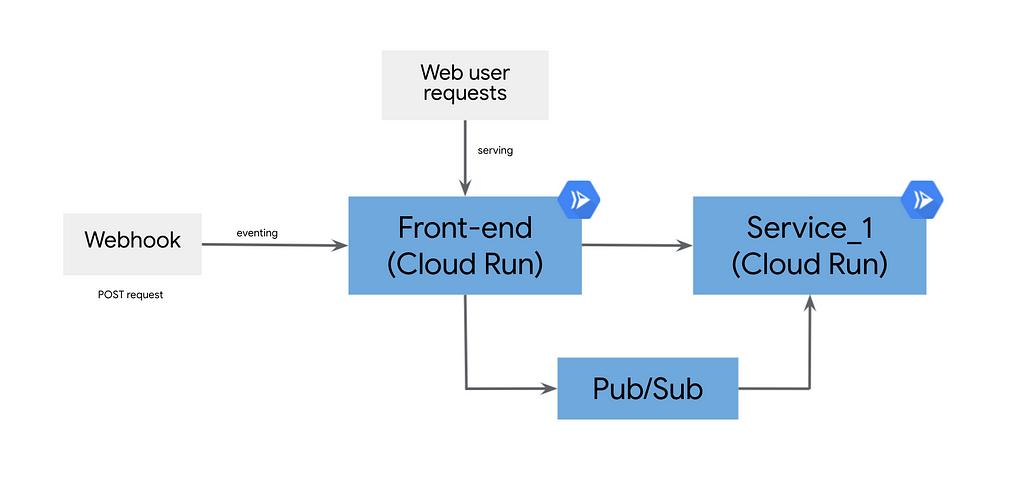

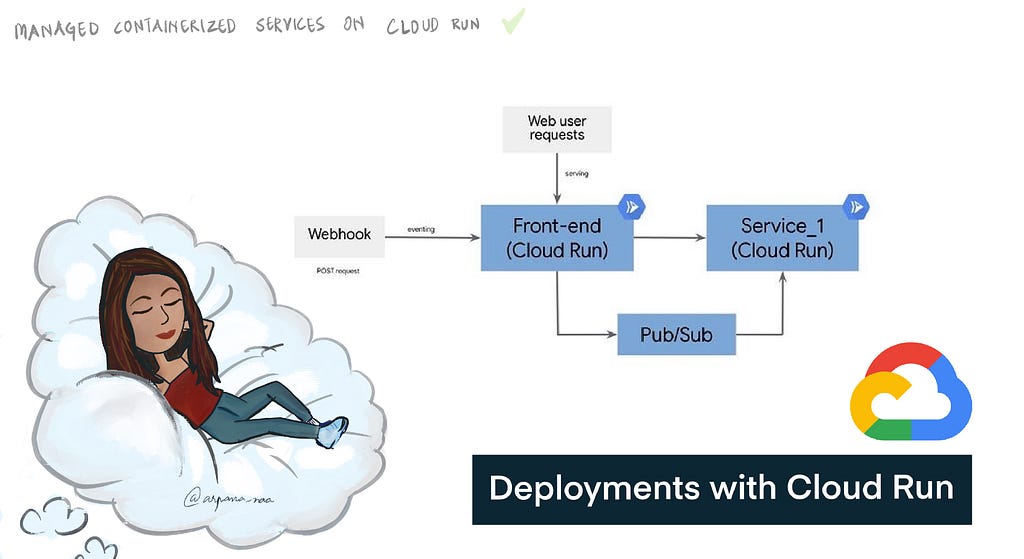

By ‘serving’ on Cloud Run, I mean that your service listens for HTTP requests on the port that Cloud Run passes to the container in the PORT environment variable (8080 unless you configure another). ‘Eventing’, on the other hand, means that a Cloud Run service is triggered only when an action (an event) happens. We will use Pub/Sub as the connecting medium between our ‘event’ and our ‘service’: Pub/Sub is where we define ‘when X action happens, trigger Y service’.
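To make the serving side concrete, here is a minimal sketch of a container process that follows this contract, using only Python's standard library. It is not the sample app's code; the handler name and response text are hypothetical. It reads the port from PORT (falling back to 8080 for local runs) and listens on all interfaces so that requests routed into the container reach it.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answer every GET request with a short plain-text page."""

    def do_GET(self):
        body = b"Hello from Cloud Run!\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port=None):
    """Create a server bound to PORT (default 8080) on all interfaces."""
    if port is None:
        port = int(os.environ.get("PORT", "8080"))
    return HTTPServer(("0.0.0.0", port), HelloHandler)


def main():
    make_server().serve_forever()
```

To use this as the container's entrypoint, add `if __name__ == "__main__": main()` at the bottom of the file and run it from the Dockerfile's CMD. Production services usually sit behind a proper web framework or WSGI server, but the PORT contract is the same.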

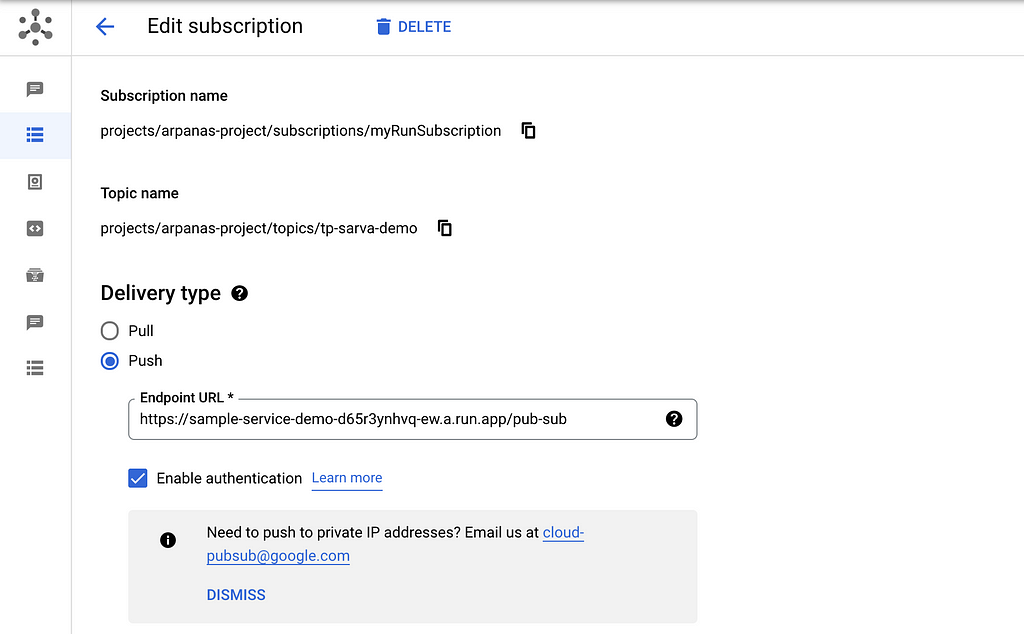

Now, coming to our sample app: if you are interested in getting hands-on with Cloud Run, you can fork this repo and follow the steps in the README. The authentication, IAM roles and permissions needed to allow Pub/Sub to trigger an internal (non-public) service are well documented in the GCP docs. Please have a look at them.

Serving — Process web traffic

In our sample application, serving is done via a GET request to the endpoint of the service you deployed. When you hit the endpoint and no instance is running, Cloud Run starts one, and it serves the webpage. Requests that arrive while that instance is still alive are served by the same instance rather than a new one. By default, each instance can handle up to 80 concurrent requests (this limit is configurable), and Cloud Run starts additional instances when traffic exceeds that. If the service receives no requests for a while, idle instances are shut down, so the service can scale down to zero.
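The concurrency limit gives a quick lower bound on how many instances a burst of traffic needs. This is a simplified sketch, assuming the only constraint is the per-instance concurrency limit; Cloud Run's real autoscaler also takes CPU utilization and any configured maximum number of instances into account. The function name is hypothetical.

```python
import math


def min_instances(concurrent_requests, concurrency=80):
    """Fewest instances that can hold `concurrent_requests` in-flight requests
    if each instance accepts at most `concurrency` of them at once."""
    if concurrent_requests < 0 or concurrency < 1:
        raise ValueError("need concurrent_requests >= 0 and concurrency >= 1")
    return math.ceil(concurrent_requests / concurrency)
```

With the default limit, 200 simultaneous requests need at least `min_instances(200) == 3` instances, while with one request per instance (as in many FaaS platforms) the same burst needs 200. Zero requests need zero instances, which is what scaling to zero means.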

Eventing — Process asynchronous requests

A common use case in many organisations is a web-hook that triggers a service when a user performs some action. I have created a simple web-hook in our sample itself (as we all know, web-hooks are basically POST requests!) and also deployed a separate service (let’s call it service_1).
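On the web-hook side, the job is to accept the POST body and hand it to Pub/Sub. This is a minimal sketch, not the sample app's code: `handle_webhook` is a hypothetical name, the required `action` field is an assumed payload schema, and `publish` is an injected callable so the logic can be tested without GCP. In a real service it could wrap the google-cloud-pubsub library, e.g. `lambda data: publisher.publish(topic_path, data)` with a `PublisherClient`.

```python
import json


def handle_webhook(body, publish):
    """Validate a web-hook POST body and publish it as a Pub/Sub message.

    Returns an (HTTP status, response text) pair for the caller to send back.
    """
    try:
        event = json.loads(body)
    except ValueError:  # covers malformed JSON and undecodable bytes
        return 400, "body must be JSON"
    if not isinstance(event, dict) or "action" not in event:
        return 400, "missing 'action' field"
    # Pub/Sub message data is raw bytes, so re-serialise the event.
    publish(json.dumps(event).encode("utf-8"))
    return 202, "queued"
```

Returning 202 reflects the asynchronous design: the web-hook only confirms that the event was queued, and the actual work happens later in the subscribed service.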

We will trigger this web-hook manually, just as a user on a website would by performing some action. This event sends a message to the Pub/Sub topic. The message is received by the topic's subscription, which is configured in push mode with the URL of the service_1 deployment as its endpoint.
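On the receiving side, a push subscription delivers each message to service_1 as an HTTP POST whose JSON body wraps the message, with the payload base64-encoded in `message.data`. This is a minimal sketch of decoding that envelope with the standard library; `decode_push` and `handle_push` are hypothetical names. A success status such as 204 acknowledges the message, while an error status makes Pub/Sub redeliver it later.

```python
import base64
import json


def decode_push(body):
    """Extract (payload text, attributes) from a Pub/Sub push request body.

    Expected shape: {"message": {"data": <base64>, "attributes": {...},
    "messageId": ...}, "subscription": ...}
    """
    envelope = json.loads(body)
    message = envelope["message"]
    data = base64.b64decode(message.get("data", ""))
    return data.decode("utf-8"), message.get("attributes", {})


def handle_push(body):
    """Return the HTTP status service_1 should answer with."""
    try:
        payload, attributes = decode_push(body)
    except (ValueError, KeyError, TypeError, AttributeError):
        # Malformed request; Pub/Sub treats this as a failed delivery.
        return 400
    print("service_1 received:", payload, attributes)
    return 204
```

Because a failed delivery is retried, a handler like this should be quick and idempotent: the same message may arrive more than once.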

The sample application we are deploying in this post includes the Pub/Sub integration, so you will need to enable those APIs and follow the steps to set up Pub/Sub. If you are instead looking for ready-to-deploy stateless applications, you can pick any service from this GitHub repository, deploy it, and use its URL as the endpoint to be triggered.

Steps — build, deploy, access endpoint!

I hope you learnt something about the possibilities with Cloud Run. Please feel free to comment with any questions you might have about this deployment procedure, or open an issue in the repo if you run into problems with the application code.

Serverless on GCP with Cloud Run was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
