The Importance of Dimensional Modeling

When I was in high school, I had a superb chemistry teacher, something I unfortunately failed to appreciate until long after I went to college. For the first year of AP chemistry, we spent a huge amount of time working on what was at the time called unit analysis, though from a modeling perspective this is now known as dimensional analysis. It is, sadly, something of a lost art, and it trips people up far more often than it should.

Dimensional analysis, in its purest form, can be summarized as the statement "You can't compare apples to oranges." Put another way, if you add three apples to two oranges, you do not have five apples. You have five pieces of fruit. The operation "add" in this case forces a categorical change: apples are not oranges, but both can be classified as a type of fruit. If I add three oranges to two oranges, I get five oranges. Ditto with apples. It is only when you attempt to add disjoint types of entities that you are forced to go up the stack to a more general category.

The more disparate two things are, the more strained and generalized the units have to be. For instance, if I add two sailors to an aircraft carrier, it is no longer meaningful to talk about having three things. Instead, the statement is usually interpreted as "I have added two sailors to the complement of sailors aboard the aircraft carrier". If I add a destroyer and three frigates to an aircraft carrier, however, I can talk about five ships in a fleet. The single operator "add" performs a lot of semantic work behind the scenes, which is one of the reasons that ontologies are so important - they help to navigate the complexities of dimensional analysis.

This kind of confusion carries over to other forms of data analysis. For instance, consider a vector in three dimensions.
From a programming standpoint, such a vector can be seen simply as an ordered list of three numbers. However, this can get you into serious trouble if you're not paying attention to units. In areas such as 3D graphics (critical to the metaverse) a vector is not just that list - every one of those numbers has to be of the same unit type. This has definite implications. First, the vector v = (3, 4, 5) may actually be shorthand for v = (3 m, 4 m, 5 m), where each component is a length (an extent in one dimension) along one of the orthogonal axes. Second, the length of this vector, relative to its origin, shares the same unit. For instance, |v| = sqrt((3 m)^2 + (4 m)^2 + (5 m)^2) = 7.071 m. Moreover, if the first value is in meters, the second in centimeters and the third in kilometers, you have to convert each of these to meters before you calculate the length, or your answer will be nonsense.

One technique that people working with machine learning frequently use is to normalize data: convert it into a unitless number between zero and one by (typically) subtracting the smallest value from the current value, then dividing the result by the difference between the maximum and the minimum. This makes the number unitless, because the numerator and denominator have the same units and so cancel out. The problem with such dimensionless numbers, however, is that they don't necessarily have any meaning. If I have one dimensionless number that represents the normalized value of aircraft carriers relative to the fleet and another dimensionless number that represents the normalized value of people on an aircraft carrier, mathematically there's NOTHING stopping me from adding those two values together, but the resulting number is gibberish. Indeed, one of the biggest reasons that such models fail is that the dimensional analysis wasn't performed, and the significance of the semantics was ignored.
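
The vector and normalization points above can be made concrete with a short Python sketch. It is only an illustration, not a reference implementation: the conversion table `TO_METERS` and the helper names `to_meters`, `vector_length_m`, and `min_max_normalize` are assumptions introduced here, and the min-max formula shown is the common textbook variant.

```python
import math

# Conversion factors to meters (an illustrative, deliberately tiny table).
TO_METERS = {"m": 1.0, "cm": 0.01, "km": 1000.0}


def to_meters(value, unit):
    """Convert a (value, unit) pair to meters, rejecting unknown units."""
    if unit not in TO_METERS:
        raise ValueError(f"unknown length unit: {unit!r}")
    return value * TO_METERS[unit]


def vector_length_m(components):
    """Length in meters of a vector given as (value, unit) pairs."""
    return math.sqrt(sum(to_meters(v, u) ** 2 for v, u in components))


def min_max_normalize(values):
    """Rescale values to the range [0, 1] using (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot normalize a constant series")
    return [(x - lo) / (hi - lo) for x in values]


same_units = [(3, "m"), (4, "m"), (5, "m")]
mixed_units = [(3, "m"), (4, "cm"), (5, "km")]

# With consistent units, the length matches the hand calculation (about 7.071 m).
print(round(vector_length_m(same_units), 3))

# With mixed units, converting first gives roughly 5 km, not 7.071 of anything.
print(vector_length_m(mixed_units))

# Normalized values carry no unit, so nothing in the numbers themselves
# prevents adding two unrelated normalized series together.
print(min_max_normalize([2, 4, 6]))
```

Keeping the unit next to each value until the moment of conversion is the design choice that matters here: a bare list `[3, 4, 5]` would compute without complaint for both vectors and return the same answer for each.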
This is also one of the reasons that one can't simply pull data from a spreadsheet or database into a knowledge graph and be ready to go from day one. Extracting data values from such a data source is trivial. Making sure that the dimensional analysis is correct (and correcting it if it isn't) can be far more time-consuming. This is part of the reason why, when teaching data modeling to my students, I stress the importance of dimensional analysis, and also recommend that students create unit types such as "35"^^Units:_Degrees_Celsius rather than just "35"^^xsd:float. The former lets me recognize when I am comparing apples to oranges and convert between units when necessary; the latter does not.

The lesson to take from this is simple: Don't be lazy. Tracking metadata such as units is a fundamental part of ensuring the integrity of the data that you work with. Unit mismatches are a common (and expensive) problem in data engineering, and they almost invariably come down to poor data design and a lack of metadata management percolating through the data pipeline, until what comes out the other end is sludge. And nobody wants sludge.
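
The difference between a unit-typed value and a bare float can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the `Temperature` class, its unit labels ("degC", "degF", "K"), and the `is_warmer` helper are hypothetical names invented for this example and do not correspond to any particular RDF or units library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Temperature:
    """A value that carries its unit, loosely analogous to a typed literal."""
    value: float
    unit: str  # one of "degC", "degF", "K" (hypothetical labels)

    def to_celsius(self) -> float:
        """Convert to degrees Celsius, rejecting units we do not know."""
        if self.unit == "degC":
            return self.value
        if self.unit == "degF":
            return (self.value - 32.0) * 5.0 / 9.0
        if self.unit == "K":
            return self.value - 273.15
        raise ValueError(f"unknown temperature unit: {self.unit!r}")


def is_warmer(a: Temperature, b: Temperature) -> bool:
    """Compare two temperatures after converting both to a common unit."""
    return a.to_celsius() > b.to_celsius()


reading_a = Temperature(35.0, "degC")
reading_b = Temperature(50.0, "degF")  # 50 degF is 10 degC

# Bare floats compare the numbers only, and conclude that 35 is less than 50.
print(35.0 > 50.0)

# Unit-typed values convert first, and conclude that 35 degC is warmer.
print(is_warmer(reading_a, reading_b))
```

The bare comparison and the unit-aware comparison disagree, which is exactly the kind of silent error that untyped values let through.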

DSC Featured Articles

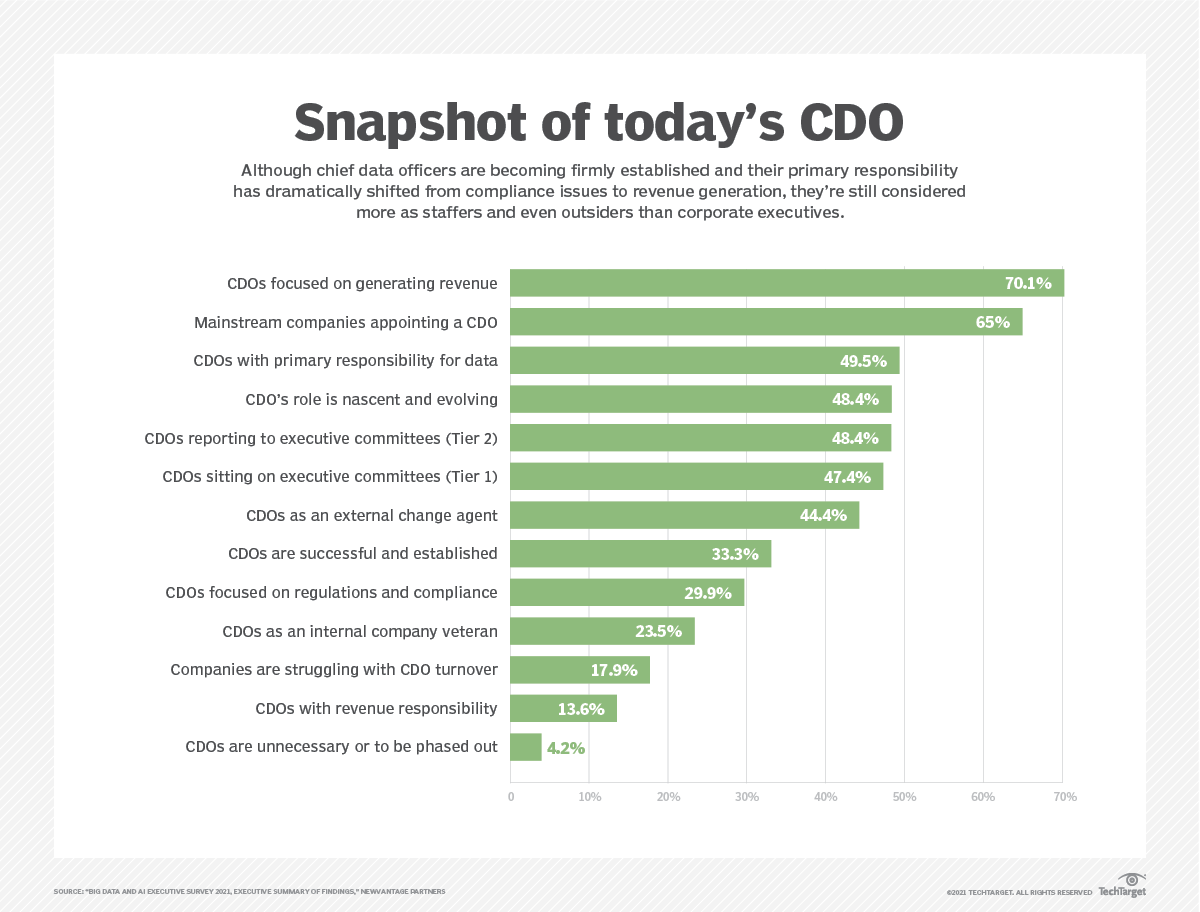

Picture of the Week

|

from Featured Blog Posts - Data Science Central https://ift.tt/3oHeS7P
