https://ift.tt/32CbxA7 What if behind every modern deep neural network a “lottery ticket” was hidden? A much smaller sub-network that when ...

What if behind every modern deep neural network a “lottery ticket” was hidden? A much smaller sub-network that when trained it would achieve the same or even better performance that the entire trained network

In 2019 a paper by Frankle and Carbin[1] appeared with a very intriguing conjecture, based on experimental observation of current large neural networks it seemed that one could grab a small portion of the same network and train it to achieve results not only with the same accuracy but sometimes even better than the original neural network.

“The Lottery Ticket Hypothesis: A randomly-initialized, dense neural network contains a sub-network that is initialized such that — when trained in isolation — it can match the test accuracy of the original network after training for at most the same number of iterations.”-J. Frankle and M. Carbin

As incredible as this conjecture appeared to be, this was only the beginning…. that same year a new paper emerged from Ramanujan et al.[2] Defining a much stronger conjecture and actually showing an algorithm to find this hidden sub-network. The team of researchers started to realize that some of the modern oversized networks they were working with, not only contained a “lottery ticket” but actually the sub-network itself was already having the same accuracy as other trained networks with nothing more than a random initialization, no training involved.

“Hidden in a randomly weighted Wide ResNet-50 [32] we find a subnetwork (with random weights) that is smaller than, but matches the performance of a ResNet-34 [9] trained on ImageNet [4]. Not only do these “untrained subnetworks” exist, but we provide an algorithm to effectively find them.” -Ramanujan et al.

So then the stronger conjecture says that given a large enough neural network, it must contains a sub-network that even without training has comparable accuracy to the original trained neural network.

Now let us stop here for a second and marvel at the sheer combinatorial power that this implies. Imagine we were trying to train a neural network for example as a simple classifier, to distinguish between images of dogs and cats, then the conjecture says, given a large enough neural network that is initialized with random weights your desired neural network must be with high probability somewhere inside there and already trained just by the initialization alone.

Now there are two main questions one may ask…

- what does ”large enough” actually mean?

- how does one find this new and more powerful sub-network?

For the first question we have to go to the beginning of 2020 when a paper by Malach et al.[3] gave a complete proof of the stronger conjecture, they have shown that with high probability if you have a network you wanted to approximate, you can get as close as you want by creating a new network whose size is polynomial in the size of the target network (which means that the size of the new network is defined by some polynomial equation that is dependent on the amount of layers and neurons your target network has and how well you want your approximation to be) and then finding the appropriate sub network.

To be able to prove this polynomial bound, strong restrictions on the norms of input and weights had to be assumed, this restrictions seemed to be a problem and if taken out then the bound would grow exponentially on the number of layers…. Lucky for us the story is not over, near the end of 2020 a new paper emerged, this time by L.Orseau et al.[4] from DeepMind.

In this paper , they generalized the ending of the proof that was done by Malach et al.[3] in order to get rid of the aforementioned assumptions and using new insights that take advantage of the composability of neural networks they were able to improve the bound significantly:

“the overparameterized network only needs a logarithmic factor (in all variables but depth) number of neurons per weight of the target subnetwork.”-L.Orseau et al.

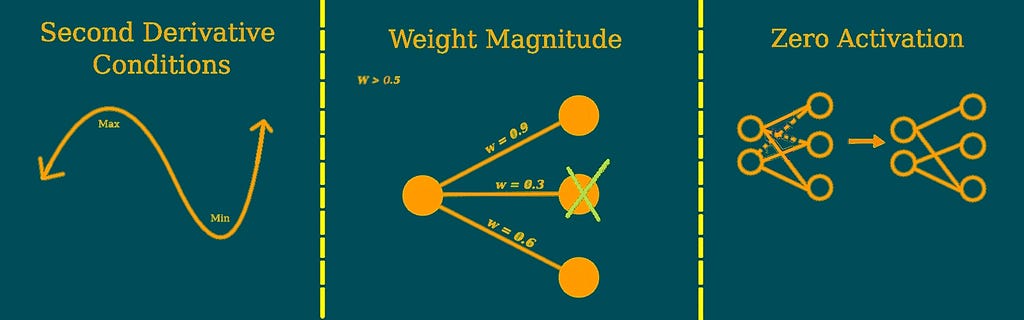

Now we are ready to try to attack the second question…. This time we have to go back to 1990 when a paper from Le Cun et al.[8] called “Optimal Brain Damage”(OBD) appeared. The main idea behind it was being able to adapt the size of a neural network making it smaller by removing unimportant weight of a network, this allowed for greater generalizations, fewer training examples required and a speed improvement(This kind of techniques live under the name of “Pruning”)

“The basic idea of OBD is that it is possible to take a perfectly reasonable network, delete half (or more) of the weights and wind up with a network that works just as well, or better.”-Le Cun et al.

Lets try to grasp the idea behind OBD. Lets say you would want to erase parameters in order to make the network smaller, one would want to erase specific parameters such that it will have the least effect on training error and so maintaining current network accuracy. One way to do this is with the objective function (the function that it is desired to maximize or minimize) so one could in principle delete a parameter and see the amount of change of the objective function…..yet this technique would be too complex to actually perform since it would require to temporary delete each parameter and then reevaluate the objective function (we might be dealing with very large networks with millions of parameters!!)

So the solution to this problem was achieve by approximating the objective function and then calculating the change that was being made by each parameter via second order derivatives and an iterative method(for more information on second derivative pruning methods go to [6])

Since then this idea of eliminating unnecessary weights from neural networks or “pruning” has been greatly improved, although missing theoretical guaranties ,experimentation has showed that one can prune more than 90% of the network without loosing accuracy.

Now one may ask, if all we are doing is trying to reduce the size of the network… then why not just use a smaller network?

Modern experimentation seems to point out that bigger over-parametrized networks tend to have better advantages over small networks, for example Belkin et al[7]. defined the “double descent” risk curve in order to explain why modern machine learning methods were obtaining very accurate predictions on new data while at the same time having very low or zero training risk, so what would normally return a highly over-fitted system, was now giving very good predictions

“Although the learned predictors obtained at the interpolation threshold typically have high risk, we show that increasing the function class capacity beyond this point leads to decreasing risk, typically going below the risk achieved at the sweet spot in the “classical” regime.”-Belkin et al.

Another interesting example was given by Ma et al.[5] were they used convex analysis to explain the phenomenon of fast convergence of Stochastic Gradient Decent (SGD) in modern large scales machine learning architectures producing the previously mention empirical loss go to zero (zero training risk) .

SGD being the backbone of most modern neural networks algorithms gives high importance to understanding this phenomenon and it was also this work that gave rise to Belkin et al. work on the double descent risk curve.

Conclusion

“Then it is natural to ask: could the most important task of gradient descent be pruning?”- L.Orseau et al.

Is Stochastic Gradient Decent just a pruning mechanism via weight actualization? The proofs on this conjecture and bounds seem to indicate that networks must contain many more “lottery tickets” than previously shown and questions are still open on the theory and connection between learning and pruning, work of Malach et al.[3] showed that surprisingly given a random network, pruning achieves competitive results with respect to weight optimization algorithms.

“One could even conjecture that the effect of

pruning is to reach a vicinity of the global optimum, after which gradient descent can perform local quasi-convex optimization.”- L.Orseau et al.

The lottery ticket hypothesis has been proven and many tools are yet to be built in this vibrant field of understanding the theoretical power and limitations of neural networks, there are still plenty of open questions and the future of pruning might be yet to be seen.

References

[1]J. Frankle and M. Carbin. The lottery ticket hypothesis: Finding sparse, trainable neural networks. (2019)In ICLR .

[2]V. Ramanujan, M. Wortsman, A. Kembhavi, A. Farhadi, and M. Rastegari. What’s hidden in a randomly weighted neural network? (2019) arXiv preprint arXiv:1911.13299.

[3]E. Malach, G. Yehudai, S. Shalev-Shwartz, and O. Shamir. Proving the lottery ticket hypothesis: Pruning is all you need.(2020) arXiv preprint arXiv:2002.00585. To appear in ICML-2020.

[4]L. Orseau, M.Hutter, O. Rivasplata.Logarithmic Pruning is All You Need.(2020)arXiv preprint arXiv:2006.12156

[5]S. Ma, R. Bassily, and M. Belkin. The power of interpolation: Understanding the effectiveness of sgd in modern over-parametrized learning. (2018)In International Conference on Machine Learning, pages 3325–3334.

[6] B. Hassibi and D. G. Stork. Second order derivatives for network pruning: Optimal brain surgeon. In Advances in neural information processing systems.(1993) pages 164–171.

[7]M. Belkin, D. Hsu, S. Ma, and S. Mandal.Reconciling modern machine learning practice and the bias-variance trade-off.(2019)arXiv preprint arXiv:1812.11118

[8] Yann LeCun, John S Denker, and Sara A Solla. Optimal brain damage. In Advances in neural information processing systems.(1990) pp. 598–605.

Searching for lottery tickets inside large neural networks was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

from Towards Data Science - Medium https://ift.tt/3G0ivgo

via RiYo Analytics

ليست هناك تعليقات