https://ift.tt/3nlEMi5 Over 2 TBs of labeled audio datasets publicly available and parseable on DagsHub October is over and so is the Dags...

Over 2 TBs of labeled audio datasets publicly available and parseable on DagsHub

October is over and so is the DagsHub’s Hacktoberfest challenge. When announcing the challenge, we didn’t imagine we’d reach the finish line with almost 40 new audio datasets, publicly available and parseable on DagsHub! Big kudos to our community for doing wonders and pulling off such a fantastic effort in so little time. Also, to Digital Ocean, GitHub, and GitLab for organizing the event.

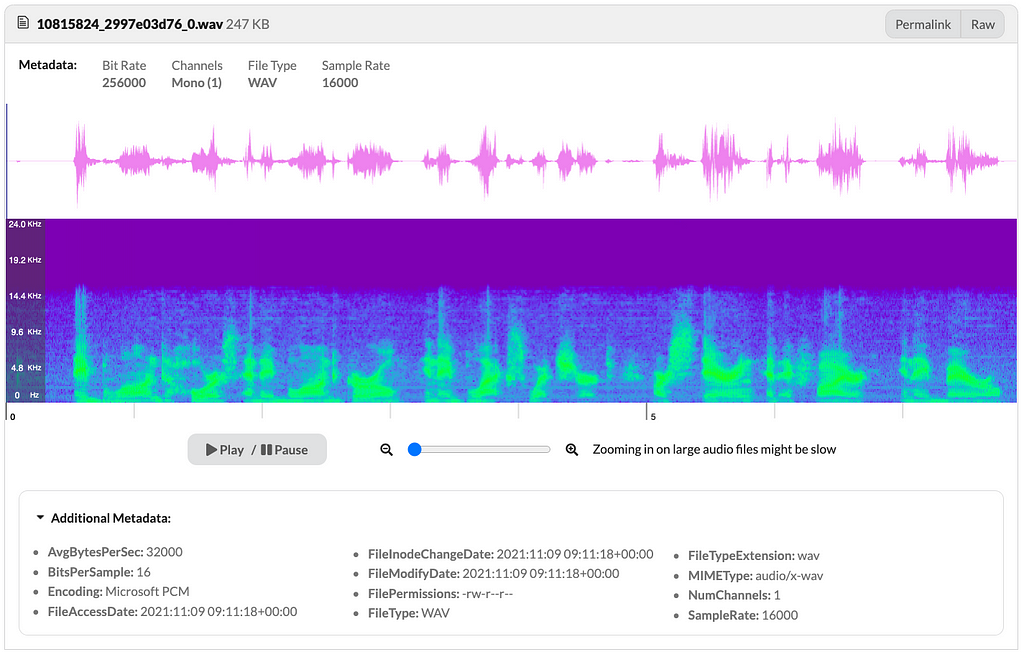

This year we focused our contribution on the audio domain. For that, we improved DagsHub’s audio catalog capabilities. Now, you can listen to samples hosted on DagsHub without having to download anything locally. For each sample, you get additional information like waveforms, spectrograms, and file metadata. Last but not least, the dataset is versioned by DVC, making it easy to improve and ready to use.

To make it easier for audio practitioners to find the dataset they’re looking for, we gathered all Hacktoberfest’s contributions to this post. We have datasets from seven(!) different languages, various domains, and sources. If you’re interested in a dataset that is missing here, please let us know, and we’ll make sure to add it.

Although the month-long virtual festival is over, we’re still welcoming contributions to open source data science. If you’d like to enrich the audio datasets hosted on DagsHub, we’d be happy to support you in the process! Please reach out on our Discord channel for more details.

See you on Hacktoberfest 2022 🍻

Acted Emotional Speech Dynamic Database

The Acted Emotional Speech Dynamic Database (AESDD) is a publicly available speech emotion recognition dataset. It contains utterances of acted emotional speech in the Greek language. It is divided into two main categories, one containing utterances of acted emotional speech and the other controlling spontaneous emotional speech. You can contribute to this dataset by submitting recordings of emotional speech to the site. They will be validated and be provided publicly for non-commercial research purposes.

- Contributed by: Abid Ali Awan

- Original dataset

Arabic Speech Corpus

The Arabic Speech Corpus has been developed as part of Ph.D. work by Nawar Halabi at the University of Southampton. The corpus was recorded in south Levantine Arabic (Damascian accent) using a professional studio. Synthesized speech as an output using this corpus has produced a high-quality, natural voice.

- Contributed by: Mert Bozkır

- Original dataset

Att-hack: French Expressive Speech

This data is acted expressive speech in French, 100 phrases with multiple versions/repetitions (3 to 5) in four social attitudes: friendly, distant, dominant, and seductive. This research has been supported by the French Ph2D/IDF MoVE project on modeling of speech attitudes and application to an expressive conversational agent and funded by the Ile-de-France region. This database has led to a publication for the 2020 Speech Prosody conference in Tokyo. For a more detailed account, see the research article.

- Contributed by: Filipp Levikov

- Original dataset

Audio MNIST

This repository contains code and data used in Interpreting and Explaining Deep Neural Networks for Classifying Audio Signals. The dataset consists of 30,000 audio samples of spoken digits (0–9) from 60 different speakers. Additionally, it holds the audioMNIST_meta.txt, which provides meta information such as the gender or age of each speaker.

- Contributed by: Mert Bozkır

- Original dataset

BAVED: Basic Arabic Vocal Emotions

The Basic Arabic Vocal Emotions Dataset (BAVED) contains 7 Arabic words spelled in different levels of emotions recorded in an audio/ wav format. Each word is recorded in three levels of emotions, as follows:

- Level 0 — The speaker is expressing a low level of emotion. This is similar to feeling tired or down.

- Level 1 — The “standard” level where the speaker expresses neutral emotions.

- Level 2 — The speaker is expressing a high level of positive or negative emotions.

- Contributed by: Kinkusuma

- Original dataset

Bird Audio Detection

This data set is part of a challenge hosted by the Machine Listening Lab from the Queen Mary University of London In collaboration with the IEEE Signal Processing Society. It contains datasets collected in real live bio-acoustics monitoring projects and an objective, standardized evaluation framework. The freefield1010 hosted on DagsHub has a collection of over 7,000 excerpts from field recordings worldwide, gathered by the FreeSound project and then standardized for research. This collection is very diverse in location and environment.

- Contributed by: Abid Ali Awan

- Original dataset

CHiME-Home

The CHiME-Home dataset is a collection of annotated domestic environment audio recordings. The audio recordings were originally made for the CHiME project. In the CHiME-Home dataset, 4-second audio chunks are each associated with multiple labels, based on a set of 7 labels associated with sound sources in the acoustic environment.

- Contributed by: Abid Ali Awan

- Original dataset

CMU-Multimodal SDK

CMU-MOSI is a standard benchmark for multimodal sentiment analysis. It is especially suited to train and test multimodal models since most of the newest works in multimodal temporal data use this dataset in their papers. It holds 65 hours of annotated video from more than 1000 speakers, 250 topics, and 6 Emotions (happiness, sadness, anger, fear, disgust, surprise).

- Contributed by: Michael Zhou

- Original dataset

CREMA-D: Crowd-sourced Emotional Multimodal Actors

CREMA-D is a dataset of 7,442 original clips from 91 actors. These clips were from 48 male and 43 female actors between the ages of 20 and 74 coming from various races and ethnicities (African America, Asian, Caucasian, Hispanic, and Unspecified). Actors spoke from a selection of 12 sentences. The sentences were presented using six different emotions (Anger, Disgust, Fear, Happy, Neutral, and Sad) and four different emotion levels (Low, Medium, High and Unspecified). Participants rated the emotion and emotion levels based on the combined audiovisual presentation, the video alone, and the audio alone. Due to the large number of ratings needed, this effort was crowd-sourced, and a total of 2443 participants each rated 90 unique clips, 30 audio, 30 visual, and 30 audio-visual.

- Contributed by: Mert Bozkır

- Original dataset

Children’s Song

Children’s Song Dataset is an open-source dataset for singing voice research. This dataset contains 50 Korean and 50 English songs sung by one Korean female professional pop singer. Each song is recorded in two separate keys resulting in a total of 200 audio recordings. Each audio recording is paired with a MIDI transcription and lyrics annotations in both grapheme-level and phoneme-level.

- Contributed by: Kinkusuma

- Original dataset

Device and Produced Speech

The DAPS (Device and Produced Speech) dataset is a collection of aligned versions of professionally produced studio speech recordings and recordings of the same speech on common consumer devices (tablet and smartphone) in real-world environments. It has 15 versions of audio (3 professional versions and 12 consumer device/real-world environment combinations). Each version consists of about 4 1/2 hours of data (about 14 minutes from each of 20 speakers).

- Contributed by: Kinkusuma

- Original dataset

Deeply Vocal Characterizer

The latter is a human nonverbal vocal sound dataset consisting of 56.7 hours of short clips from 1419 speakers, crowdsourced by the general public in South Korea. Also, the dataset includes metadata such as age, sex, noise level, and quality of utterance. This repo holds only 723 utterances (ca. 1% of the whole corpus) and is free to use under CC BY-NC-ND 4.0. For accessing the complete dataset under a more restrictive license, please contact deeplyinc.

- Contributed by: Filipp Levikov

- Original dataset

EMODB

The EMODB database is the freely available German emotional database. The database was created by the Institute of Communication Science, Technical University, Berlin. Ten professional speakers (five males and five females) participated in data recording. The database contains a total of 535 utterances. The EMODB database comprises seven emotions: anger, boredom, anxiety, happiness, sadness, disgust, and neutral. The data was recorded at a 48-kHz sampling rate and then down-sampled to 16-kHz.

- Contributed by: Kinkusuma

- Original dataset

EMOVO Corpus

EMOVO Corpus database built from the voices of 6 actors who played 14 sentences simulating six emotional states (disgust, fear, anger, joy, surprise, sadness) plus the neutral state. These emotions are well-known found in most of the literature related to emotional speech. The recordings were made with professional equipment in the Fondazione Ugo Bordoni laboratories.

- Contributed by: Abid Ali Awan

- Original dataset

ESC-50: Environmental Sound Classification

The ESC-50 dataset is a labeled collection of 2000 environmental audio recordings suitable for benchmarking methods of environmental sound classification. The dataset consists of 5-second-long recordings organized into 50 semantical classes (with 40 examples per class) loosely arranged into 5 major categories:

- Animals.

- Natural soundscapes & water sounds.

- Human, non-speech sounds.

- Interior/domestic sounds.

- Exterior/urban noises.

Clips in this dataset have been manually extracted from public field recordings gathered by the Freesound.org project. The dataset has been prearranged into five folds for comparable cross-validation, ensuring that fragments from the same original source file are contained in a single fold.

- Contributed by: Kinkusuma

- Original dataset

EmoSynth: Emotional Synthetic Audio

EmoSynth is a dataset of 144 audio files, approximately 5 seconds long and 430 KB in size, which 40 listeners have labeled for their perceived emotion regarding the dimensions of Valence and Arousal. It has metadata about the classification of the audio based on the dimensions of Valence and Arousal.

- Contributed by: Abid Ali Awan

- Original dataset

Estonian Emotional Speech Corpus

The Estonian Emotional Speech Corps (EEKK) is a corps created at the Estonian Language Institute within the framework of the state program “Estonian Language Technological Support 2006–2010”. The corpus contains 1,234 Estonian sentences that express anger, joy, and sadness or are neutral.

- Contributed by: Abid Ali Awan

- Original dataset

Flickr 8k Audio Caption Corpus

The Flickr 8k Audio Caption Corpus contains 40,000 spoken audio captions in .wav audio format, one for each caption included in the train, dev, and test splits in the original corpus. The audio is sampled at 16000 Hz with 16-bit depth and stored in Microsoft WAVE audio format.

- Contributed by: Michael Zhou

- Original dataset

Golos: Russian ASR

Golos is a Russian corpus suitable for speech research. The dataset mainly consists of recorded audio files manually annotated on the crowd-sourcing platform. The total duration of the audio is about 1240 hours.

- Contributed by: Filipp Levikov

- Original dataset

JL Corpus

Emotional speech in New Zealand English. This corpus was constructed by maintaining an equal distribution of 4 long vowels. The corpus has five secondary emotions along with five primary emotions. Secondary emotions are important in Human-Robot Interaction (HRI), where the aim is to model natural conversations among humans and robots.

- Contributed by: Hazalkl

- Original dataset

LJ Speech

a public domain speech dataset consisting of 13,100 short audio clips of a single speaker reading passages from 7 non-fiction books. A transcription is provided for each clip. Clips vary in length from 1 to 10 seconds and have a total length of approximately 24 hours. The texts were published between 1884 and 1964 and are in the public domain. The audio was recorded in 2016–17 by the LibriVox project and is also in the public domain.

- Contributed by: Kinkusuma

- Original dataset

MS SNSD

This dataset contains a large collection of clean speech files and various environmental noise files in .wav format sampled at 16 kHz. It provides the recipe to mix clean speech and noise at various signal-to-noise ratio (SNR) conditions to generate a large, noisy speech dataset. The SNR conditions and the number of hours of data required can be configured depending on the application requirements.

- Contributed by: Hazalkl

- Original dataset

Public Domain Sounds

A wide array of sounds can be used for object detection research. The dataset is small (543MB) and divided into subdirectories by its format. The audio files vary from 5 seconds to 5 minutes.

- Contributed by: Abid Ali Awan

- Original dataset

RSC: sounds from RuneScape Classic

Extract RuneScape classic sounds from cache to wav (and vice versa). Jagex used Sun's original .au sound format, which is headerless, 8-bit, u-law encoded, 8000 Hz pcm samples. This module can decompress original sounds from sound archives as headered WAVs, and recompress (+ resample) new WAVs into archives.

- Contributed by: Hazalkl

- Original dataset

Speech Accent Archive

This dataset contains 2140 speech samples, each from a different talker reading the same reading passage. Talkers come from 177 countries and have 214 different native languages. Each talker is speaking in English.

- Contributed by: Kinkusuma

- Original dataset

Speech Commands Dataset

The dataset (1.4 GB) has 65,000 one-second long utterances of 30 short words by thousands of different people, contributed by public members through the AIY website. This is a set of one-second .wav audio files, each containing a single spoken English word.

- Contributed by: Abid Ali Awan

- Original dataset

TESS: Toronto Emotional Speech Set

The Northwestern University Auditory Test №6 was used to create these stimuli. Two actresses (aged 26 and 64 years) recited a set of 200 target words in the carrier phrase “Say the word _____,” and recordings were produced of the set depicting each of seven emotions (anger, disgust, fear, happiness, pleasant surprise, sadness, and neutral). There are a total of 2800 stimuli.

- Contributed by: Hazalkl

- Original dataset

URDU

The URDU dataset contains emotional utterances of Urdu speech gathered from Urdu talk shows. There are 400 utterances of four basic emotions in the book: Angry, Happy, Neutral, and Emotion. There are 38 speakers (27 male and 11 female). This data is created from YouTube.

- Contributed by: Abid Ali Awan

- Original dataset

VIVAE: Variably Intense Vocalizations of Affect and Emotion

The Variably Intense Vocalizations of Affect and Emotion Corpus (VIVAE) consists of a set of human non-speech emotion vocalizations. The full set, comprising 1085 audio files, features eleven speakers expressing three positive (achievement/ triumph, sexual pleasure, and surprise) and three negatives (anger, fear, physical pain) affective states. Each parametrically varied from low to peak emotion intensity.

- Contributed by: Mert Bozkır

- Original dataset

FSDD: Free Spoken Digit Dataset

A simple audio/speech dataset consisting of recordings of spoken digits in wav files at 8kHz. The recordings are trimmed so that they have near minimal silence at the beginning and ends.

- Contributed by: Kinkusuma

- Original dataset

LEGOv2 Corpus

This spoken dialogue corpus contains interactions captured from the CMU Let’s Go (LG) System by Carnegie Mellon University in 2006 and 2007. It is based on raw log files from the LG system. 347 dialogs with 9,083 system-user exchanges; emotions classified as garbage, non-angry, slightly angry, and very angry.

- Contributed by: Kinkusuma

- Original dataset

MUSDB18

Multi-track music dataset for music source separation. There are two versions of MUSDB18, the compressed and the uncompressed(HQ).

- MUSDB18 — consists of a total of 150 full-track songs of different styles and includes both the stereo mixtures and the original sources, divided between a training subset and a test subset.

- MUSDB18-HQ — the uncompressed version of the MUSDB18 dataset. It consists of a total of 150 full-track songs of different styles and includes both the stereo mixtures and the original sources, divided between a training subset and a test subset.

- Contributed by: Kinkusuma

- Original dataset

Voice Gender

The VoxCeleb dataset (7000+ unique speakers and utterances, 3683 males / 2312 females). The VoxCeleb is an audio-visual dataset consisting of short clips of human speech, extracted from interview videos uploaded to YouTube. VoxCeleb contains speech from speakers spanning a wide range of different ethnicities, accents, professions, and ages.

- Contributed by: Abid Ali Awan

- Original dataset

40 Open-Source Audio Datasets for ML was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

from Towards Data Science - Medium https://ift.tt/3DmiNNC

via RiYo Analytics

No comments