https://ift.tt/3Gwox9f Locate the reasoning by detecting the conclusions Image by Vern R. Walker, CC BY 4.0 . One challenging problem i...

Locate the reasoning by detecting the conclusions

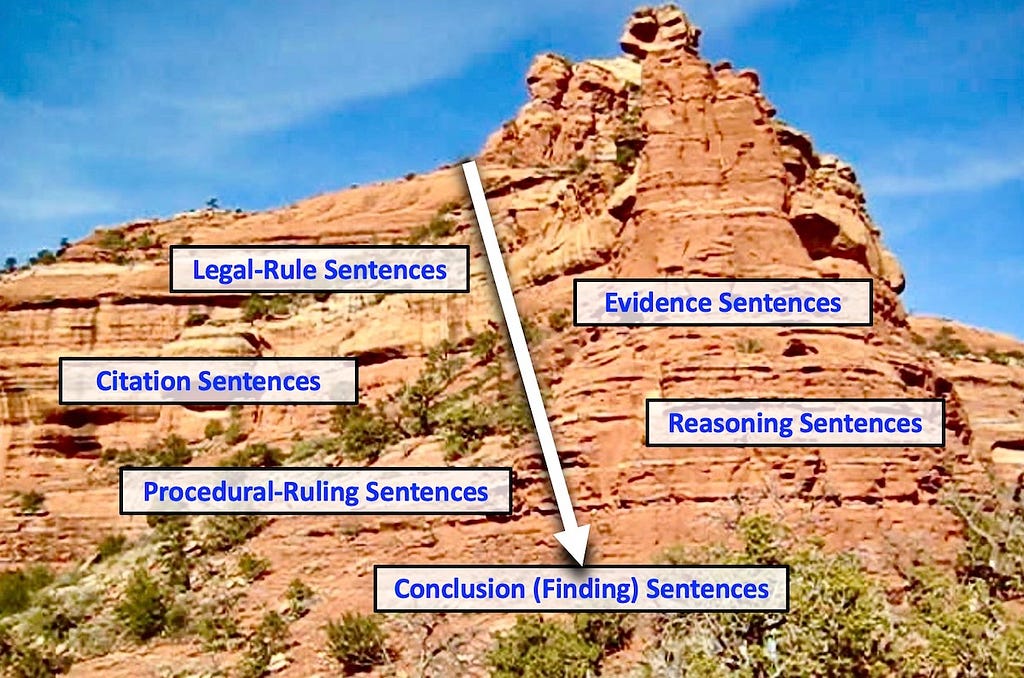

One challenging problem in data science is mining the reasoning from a legal decision document. To locate that reasoning within the entire document, we can use the decision’s conclusions on the issues to lead us to relevant evidence, specific reasoning, and legal rules. Research indicates that machine-learning (ML) algorithms can automatically classify the sentences that state the factual conclusions of a tribunal, with a precision that is adequate for many use cases. Therefore, training classifiers to automatically identify the legal conclusions is an important first step.

In general, a first stage in mining any reasoning or arguments from documents is to detect which sentences function as components of a chain of reasoning (often called “argumentative sentences”) and which do not (“non-argumentative sentences”). An argumentative sentence is then classified either as a “conclusion” or as a “premise” (a sentence supporting the conclusion) of a unit of reasoning. In the influential model by Stephen Toulmin (Stephen E. Toulmin, The Uses of Argument: Updated Edition; Cambridge University Press, 2003), premises can then be classified as either “data” or “warrant.” The “data” are the facts on which the reasoning rests, while “warrants” are conditionals or generalizations that authorize the inference from the facts to the conclusion. To classify reasoning patterns, we will ultimately need to determine which sentences are parts of the same unit of reasoning, the nature of the inferential relations within the reasoning unit, which type of reasoning the unit is, and so forth. To make headway on all these tasks, we can begin by identifying the conclusion of the reasoning, and we can use that conclusion to anchor our further search and analysis.

This same general workflow applies to mining arguments and reasoning specifically from legal documents. The types of conclusions, types of premises, and types of argument vary depending on the type of legal document. For example, the reasoning types found in regulatory decisions are frequently different than the types found in appellate court decisions, and different again are the types found in trial-court decisions. But what these examples have in common is the need for the decision maker to use language that signals which sentences state the legal conclusions. Parties, lawyers, and reviewing courts need to be able to determine whether all the legal issues have been addressed. In this article, I use examples from fact-finding decisions in trial-level courts or agencies, but the same analysis has parallels for other types of governmental decisions. My examples come from decisions of the Board of Veterans’ Appeals (BVA) announcing conclusions about claims by U.S. military veterans for benefits due to service-related disabilities.

Definitions and Identifying Features

A “legal finding” (also called a “finding of fact,” or simply a “finding”) is an official determination by the trier of fact about a factual issue, based on the evidence presented in the case. The trier of fact might be a jury or a judge in a judicial proceeding, or an administrative body or officer in an administrative proceeding. A “finding sentence,” therefore, is a sentence that primarily states one or more findings of fact. Because the substantive legal rules specify the factual issues to be decided, the findings in a case are the tribunal’s decisions on those issues. Put another way, the findings state whether one or more conditions of the substantive legal rules have been satisfied in the legal case at hand.

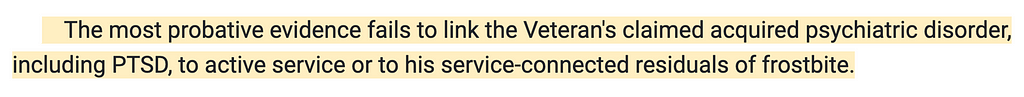

The following is an example of a finding sentence (as displayed in a web application developed by Apprentice Systems, Inc., with the yellow background color used to encode finding sentences):

How do we lawyers know which sentences state findings of fact? There are some typical linguistic features that help signal a finding sentence. Examples are:

· An appropriate linguistic cue for attributing a proposition to the trier of fact (e.g., “the Board finds that,” or “the Court is persuaded that”); or

· Words or phrases expressing satisfaction of, or failure to satisfy, a proof obligation (e.g., “did not satisfy the requirement that,” “succeeded in proving,” or “failed to prove”); or

· Wording that signals the completion of the tribunal’s legal tasks (e.g., “after a review of all the evidence”); or

· Definite noun phrases that refer to particular persons, places, things, or events involved in the case, instead of merely the indefinite types used to state the rules themselves (e.g., “the Veteran” instead of “a veteran”).

While judges might not write finding sentences using a specific format, there are generally enough linguistic features to enable attorneys to identify such sentences in a decision.

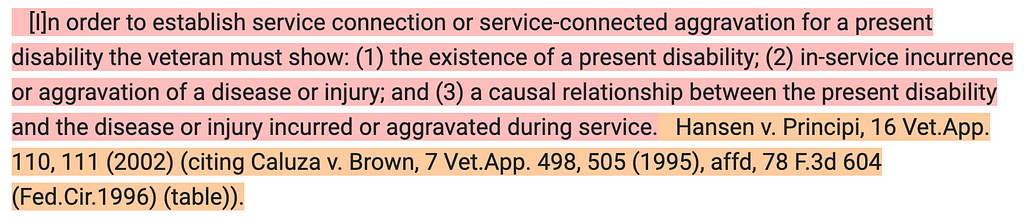

In addition, because finding sentences necessarily announce decisions on the contested legal issues, judges often write finding sentences using the key terminology used to define the legal issue. For example, consider this legal-rule sentence from Shedden v. Principi, 381 F.3d 1163, 1166–67 (Fed. Cir. 2004) (as shown in the Apprentice Systems web application, with the appropriate background colors):

The first sentence (highlighted in light red) states a legal rule, and the second sentence (highlighted in light orange) provides the appropriate citation to legal authority. In announcing decisions on each of these three numbered conditions, judges might use:

· The phrase “present disability” or similar wording in stating a finding on Condition (1);

· The phrases “in-service incurrence” or “in-service aggravation” in a finding on Condition (2); and

· The phrase “causal relationship” in a finding on Condition (3).

The judge writing the decision has a strong incentive to clearly signal which sentences state the findings on which legal issues.

Moreover, logic helps us identify the set of finding sentences. For example, for the claiming veteran to win the decision, there must be positive findings (for the veteran) on all the required rule conditions. On the other hand, if the veteran loses the decision, then there must be at least one negative finding (against the veteran). And in such a case, because a single negative finding disposes of the claim, the judge might not even reach definitive findings on the other conditions. Logic therefore helps us decide how many findings to look for.

A Machine-Learning (ML) Dataset

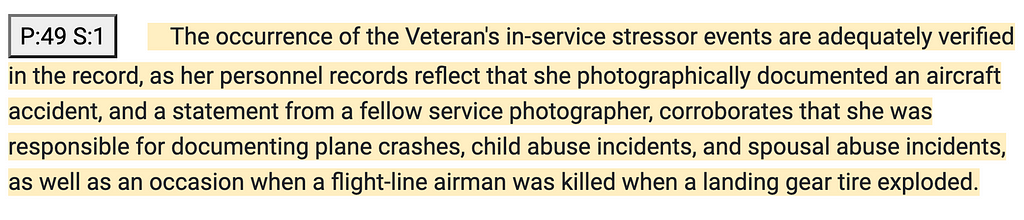

But are there enough linguistic features to allow ML models to identify and label finding sentences correctly? To answer this question, Hofstra Law’s Law, Logic & Technology Research Laboratory (LLT Lab) manually labeled the finding sentences in 50 decisions by the Board of Veterans’ Affairs (the labeled data are publicly available on GitHub). The LLT Lab classified as a finding sentence any sentence that contained a finding of fact. These decisions contained 5,797 manually labeled sentences after preprocessing, only 490 of which were finding sentences. An example is this complex finding sentence (highlighted in yellow):

As indicated in the icon preceding the sentence text, this sentence is from paragraph 49 of a decision, the first sentence. The LLT Lab labeled this as a finding sentence because the Lab placed a priority on not overlooking any findings. But this complex sentence also states some of the evidence and reasoning relevant to that finding. If a more granular classification is needed, we could parse complex finding sentences down to at least the clause level, and then classify clause roles rather than sentence roles.

Machine-Learning Results

Machine-learning models can perform reasonably well in classifying these BVA finding sentences. We trained a Logistic Regression model on the LLT Lab’s dataset of 50 BVA decisions. The model classified finding sentences with precision = 0.81 and recall = 0.78. We later trained a neural network (NN) model on the same BVA dataset. The NN model’s precision for finding sentences was 0.75, and the recall was 0.79.

Error Analysis

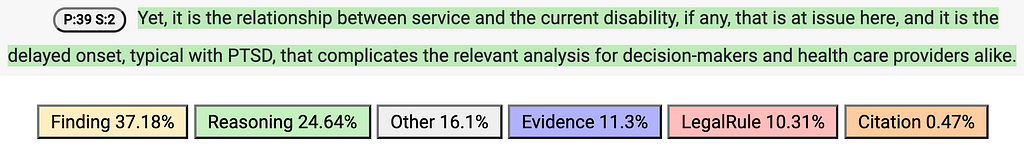

Of the 173 sentences predicted by the NN model to be finding sentences, 44 were misclassifications (precision = 0.75). Understandably, the most frequent confusion was with reasoning sentences, which accounted for almost half the errors (21). Reasoning sentences often contain inferential wording similar to that found in finding sentences, but the conclusions are intermediary in nature. An example of a reasoning sentence (highlighted in green) that the trained NN model misclassified as a finding sentence is:

The image above is a screenshot from the Legal Apprentice web application (this example is the second sentence from paragraph 39 of a decision). The prediction scores of the NN model are arrayed below the sentence text. As you can see, the model predicted this to be a finding sentence (score = 37.18%), although the prediction score for it being a reasoning sentence was second (24.64%).

This sentence primarily states reasoning rather than a finding. The reasoning is that the delayed onset complicates any decision on the causal relationship between what happened to the veteran in active service and the veteran’s current disability. This sentence does not state a finding because it does not tell us the tribunal’s final decision on that issue. However, in explaining the complication, the tribunal uses terminology from the relevant legal rule (see the Shedden quotation above). The predicted classification as a finding sentence is therefore hardly surprising. Sentences such as this help explain the somewhat low precision of 0.75.

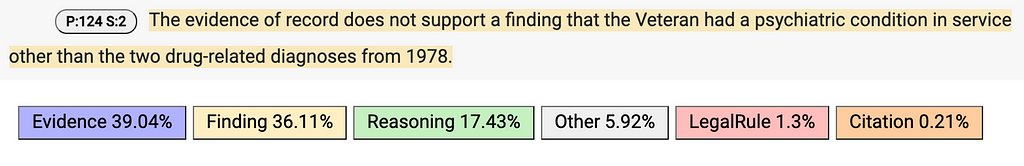

Because we lawyers do not want to overlook any actual finding sentences, recall is also extremely important. In the test set for the NN model, there were 163 manually labeled finding sentences. While 129 of those were correctly identified (recall = 0.79), the model failed to identify 34 of them. Not surprisingly, the model erroneously predicted 15 of those 34 actual finding sentences to be reasoning sentences. The model predicted another 13 actual finding sentences to be evidence sentences. An example of the model’s misclassifying a finding sentence (highlighted in yellow) as an evidence sentence is the following sentence (from paragraph 124 of a decision, the second sentence):

Despite the clear statement of a negative finding (“does not support a finding that”), the rest of the wording is very consistent with a statement of evidence. Note that the prediction scores for this being an evidence sentence (39.04%) and a finding sentence (36.11%) are very close in value, which reflects the nearness of the confusion.

Importance of the Use Case

The practical cost of a classification error depends upon the use case and the type of error. If the use case is extracting and presenting examples of legal reasoning, then the precision and recall noted above might be acceptable to a user. This might be especially true if many errors consist of confusing a reasoning sentence with a finding sentence. A sentence classified either as reasoning or finding might still be part of an illustrative argument, if the user is interested in viewing different examples of argument or reasoning.

By contrast with such a use case, if the objective is to generate statistics about how often arguments are successful or unsuccessful, then precision of only 0.75 and recall of 0.79 would probably be unacceptable. The only way to determine whether an argument on an issue was successful is to identify the relevant finding of fact, map it to the appropriate legal issue, and determine whether that finding was positive or negative (its “polarity”). (You can read our paper on the automatic detection of the polarity of finding sentences.) To generate accurate statistics, we would need a high level of confidence that we have automatically and accurately performed these three tasks.

Summary

In sum, finding sentences are very important components of legal decisions. They state the conclusions of the tribunal on the legal issues, and they inform the parties about the success or failure of their individual arguments. Finding sentences provide the bridge between the legal rules, on the one hand, and the appropriate legal arguments and reasoning in the decision, on the other hand. Such sentences also provide an anchor position for extracting from the decision the related evidence, reasoning, and legal rules. In other words, finding sentences can lead us to the evidence and reasoning sentences that are bound together as a single unit of argument or reasoning.

Conclusions as Anchors for Mining Legal Reasoning was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

from Towards Data Science - Medium https://ift.tt/3k6eyOH

via RiYo Analytics

No comments