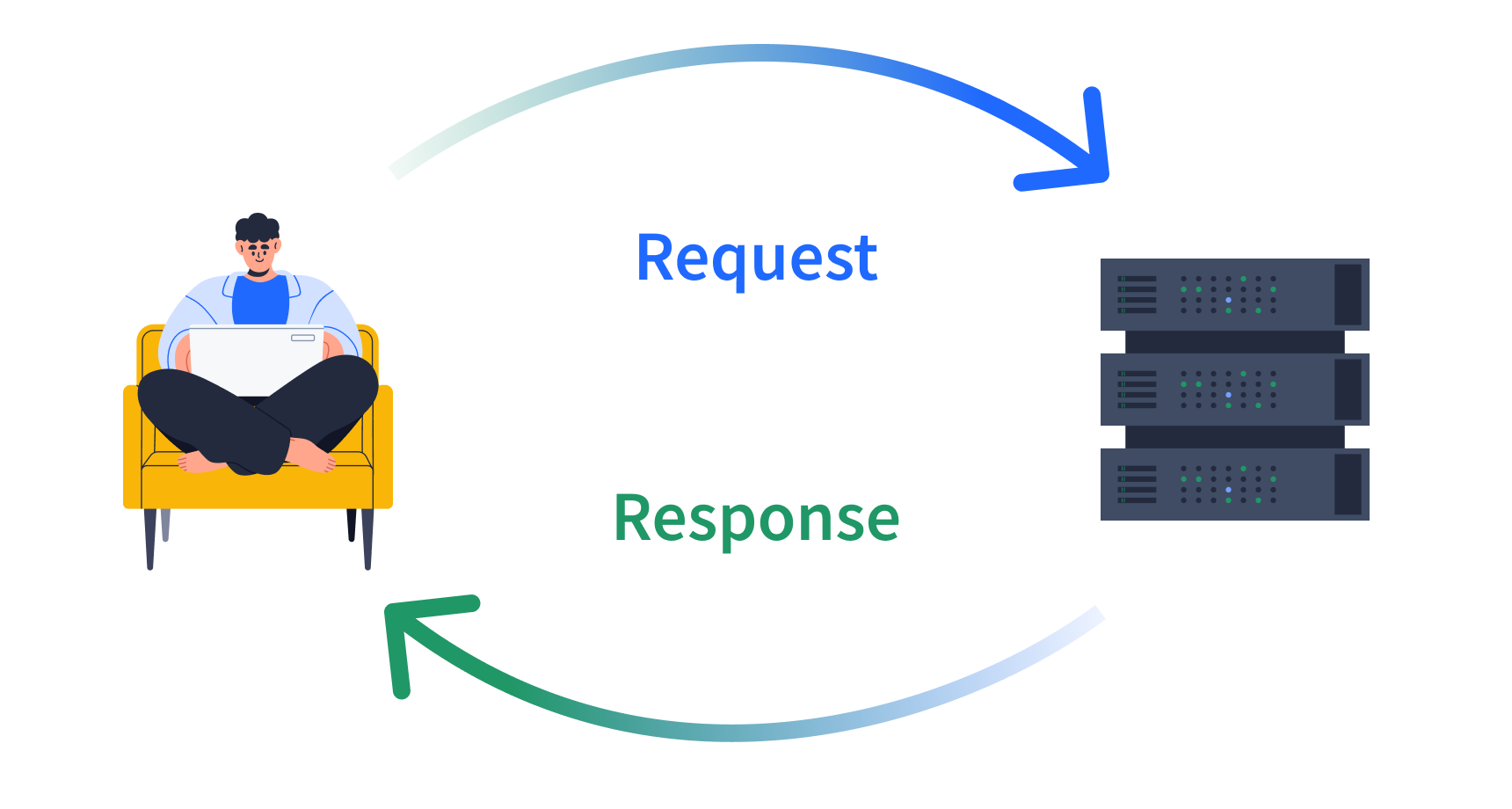

Application programming interfaces (APIs) and web scraping are powerful tools for gathering data in Python, and they can significantly expand your data science capabilities. When I first learned these techniques, I was amazed at how they allowed me to gather data beyond static datasets. Every data scientist should be comfortable interacting with APIs, handling JSON data, and extracting information from web pages. With these skills, you can collect and analyze data from a wide range of online sources, opening up new possibilities for your projects.

I first realized the usefulness of APIs during a machine learning project focused on predicting weather events. Instead of manually copying and pasting data from meteorological websites, I accessed vast amounts of structured weather data through APIs with just a few lines of code. They drastically reduced my data collection time and helped standardize my datasets.

But APIs are just one piece of the puzzle. Web scraping offers access to data sources that don't have official APIs. Suddenly, I could gather data from many different websites, building custom datasets when the information I needed wasn't readily available through a formal API.
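To make the idea concrete, here is a minimal sketch of pulling table rows out of HTML using only Python's standard library. The HTML snippet, the table contents, and the `TableCellParser` class name are all made up for illustration; a real page would be downloaded first and would likely need more careful handling.

```python
from html.parser import HTMLParser

# Hypothetical HTML standing in for a page fetched from a site with no API.
SAMPLE_HTML = """
<table id="observations">
  <tr><th>Date</th><th>High (C)</th></tr>
  <tr><td>2024-01-01</td><td>4.5</td></tr>
  <tr><td>2024-01-02</td><td>6.0</td></tr>
</table>
"""


class TableCellParser(HTMLParser):
    """Collect the text of every <td> cell, grouped by table row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._current_row = None
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._current_row = []
        elif tag == "td":
            self._in_cell = True

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False
        elif tag == "tr":
            # Rows with no <td> cells (such as the header row) are skipped.
            if self._current_row:
                self.rows.append(self._current_row)
            self._current_row = None

    def handle_data(self, data):
        # Only keep text that appears inside a <td> cell.
        if self._in_cell and self._current_row is not None:
            self._current_row.append(data.strip())


parser = TableCellParser()
parser.feed(SAMPLE_HTML)
parser.close()
rows = parser.rows
print(rows)
```

Each inner list in `rows` holds the cell text for one data row, which is a convenient shape for loading into a table later.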

As I got deeper into these techniques, I quickly realized the importance of understanding API documentation. Once I could find the right endpoints and parameters in the docs, I could request exactly the data I needed instead of downloading everything and filtering it afterward, which saved both time and computational resources.

One key lesson I learned during my weather project was the importance of balancing data freshness with API rate limits. Effective data retrieval strategies are crucial to getting the most up-to-date information without exceeding the limits set by API providers.
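One common way to strike that balance is to cache each response and only call the API again once the cached copy is older than some threshold. The sketch below assumes that approach; `CachedFetcher`, `fake_fetch`, and the 600-second threshold are hypothetical names and values chosen for illustration, and the fetch function is a stand-in rather than a real API call.

```python
import time


class CachedFetcher:
    """Reuse a cached value until it is older than max_age_seconds."""

    def __init__(self, fetch, max_age_seconds, clock=time.monotonic):
        self._fetch = fetch            # function that actually calls the API
        self._max_age = max_age_seconds
        self._clock = clock            # injectable so the logic can be tested
        self._cache = {}               # key -> (time fetched, value)

    def get(self, key):
        now = self._clock()
        cached = self._cache.get(key)
        if cached is not None and now - cached[0] < self._max_age:
            return cached[1]           # still fresh: no API call is made
        value = self._fetch(key)       # stale or missing: spend one request
        self._cache[key] = (now, value)
        return value


# Stand-in for a real API call; it records how often it is invoked.
api_calls = []


def fake_fetch(city):
    api_calls.append(city)
    return {"city": city, "request_number": len(api_calls)}


fetcher = CachedFetcher(fake_fetch, max_age_seconds=600)
first = fetcher.get("Oslo")
second = fetcher.get("Oslo")           # served from the cache
print(len(api_calls))
```

A shorter `max_age_seconds` gives fresher data at the cost of more requests, so the right value depends on the limits the provider documents.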

The real magic happened when I started combining data from both APIs and web scraping for my weather prediction project. By integrating real-time weather data from an API with historical weather patterns scraped from various climate research websites, I created richer, more diverse datasets that improved the accuracy of my predictions.
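As a rough illustration of that kind of integration, the sketch below joins two small record sets on a shared date field. The records, field names, and `merge_by_date` helper are invented for the example, and plain dictionaries stand in for what would more typically be a pandas merge.

```python
# Hypothetical records: one list as it might come from an API,
# the other as it might come from a scraped table of historical normals.
api_readings = [
    {"date": "2024-01-01", "temp_c": 4.5},
    {"date": "2024-01-02", "temp_c": 6.0},
    {"date": "2024-01-03", "temp_c": 1.0},
]
scraped_normals = [
    {"date": "2024-01-01", "normal_c": 2.0},
    {"date": "2024-01-02", "normal_c": 2.5},
]


def merge_by_date(api_rows, scraped_rows):
    """Inner-join the two record lists on their 'date' field."""
    normals = {row["date"]: row for row in scraped_rows}
    merged = []
    for row in api_rows:
        match = normals.get(row["date"])
        if match is None:
            continue  # no scraped record for this date, so skip it
        combined = {**row, **match}
        # Derived feature: how far the reading is from the historical normal.
        combined["anomaly_c"] = combined["temp_c"] - combined["normal_c"]
        merged.append(combined)
    return merged


merged = merge_by_date(api_readings, scraped_normals)
print(merged)
```

Dates that appear in only one source are dropped here; whether to keep them with missing values instead is a design choice that depends on the analysis.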

Handling JSON data is another essential skill when working with APIs. Python's built-in `json` module became invaluable, allowing me to parse complex JSON responses into ordinary dictionaries and lists that are easy to manipulate.
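Here is a small example of what that looks like in practice. The JSON string is a made-up response body, not the output of any particular weather API.

```python
import json

# Hypothetical response body, as text, the way an API would return it.
raw = (
    '{"city": "Oslo", "forecast": ['
    '{"date": "2024-01-01", "temp_c": -3.5}, '
    '{"date": "2024-01-02", "temp_c": -1.0}]}'
)

# json.loads turns the text into nested dictionaries and lists.
data = json.loads(raw)

# Pull one field out of each nested record.
temps = [day["temp_c"] for day in data["forecast"]]
average = sum(temps) / len(temps)

# json.dumps goes the other way; indent makes the output easier to read.
pretty = json.dumps(data, indent=2)
print(pretty)
print(average)
```

Once the response is a regular Python structure, indexing and list comprehensions are usually all that is needed to reach the values of interest.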

If you're ready to learn these powerful techniques, our APIs and Web Scraping in Python for Data Science course can guide you through the process. You'll learn to:

- Use Python's requests library to interact with APIs

- Process and manipulate JSON data structures

- Implement API authentication and handle rate limits

- Utilize optional query parameters for refined data retrieval

- Apply web scraping techniques to extract data from HTML

- Integrate API and web-scraped data with pandas for analysis

By the end of the course, you'll be well-equipped to gather and analyze data from various online sources, opening up new opportunities for your data science projects.

As you explore APIs and web scraping, consider how these techniques could enhance your current work. Are there public APIs that could provide valuable data for your projects? What websites contain information that could complement your existing datasets? With these skills in your toolkit, you'll be ready to explore and make the most of the wealth of information available online.

We also invite you to join our Dataquest Community to connect with fellow learners and share your experiences. It's a great place to find support, exchange ideas, and continue growing as a data scientist.

from Dataquest https://ift.tt/BvoxV5p

via RiYo Analytics
