Image source: Adobe Stock.

Assessing where we are on the journey to deep, meaningful communication between people and their AI

As people interact with conversational artificial intelligence (AI) systems, clear communication is a key factor in getting the intended outcome that will best serve and augment our lives. In the broader sense, what language should be used to control and converse with machines? In this blog post, we evaluate the progression of methods to guide and converse with machines based on recent technology innovations, such as OpenAI’s ChatGPT and GPT-4, and explore the next steps conversational AI needs to take toward mastering natural conversation like a human confidant. Machines have made a categorical leap from prompt engineering to “human speak”; however, other aspects of intelligence are still awaiting discovery.

Until late 2022, getting an AI to respond properly and play to its strengths required specialized knowledge, such as sophisticated prompt engineering. The introduction of ChatGPT was a major advance in the conversational ability of machines: even high school students can now chat with a high-powered AI and get impressive results. This is a significant milestone. However, we also need to assess where we are on the journey of human-machine communication and what is still required to achieve meaningful conversations with AI.

The interaction between people and machines has two broad objectives: first, to instruct the machine on the tasks to be done, and second, to exchange information and guidance while those tasks are performed. The first objective has traditionally been met by programming, but it is evolving to the point where dialog with the user can define a new task, such as asking an AI to create a Python script. The exchange within a task has been addressed through natural language processing (NLP) or natural language understanding (NLU), coupled with generation of the machine’s response. Let us assume that the central characteristic, if not the endpoint, of this progression in human-to-machine interaction is that people can communicate with machines just as they do with a long-time friend, including all the free-form syntactic, semantic, metaphoric, and cultural aspects that such an interaction assumes. What must be created for AI systems to partake fully in this natural communication?

Past Conversational AI: Transformer Architecture Redefines NLP Performance

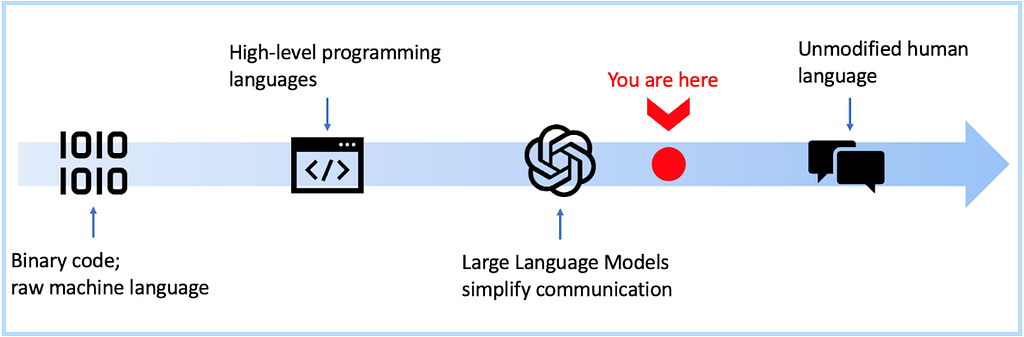

In the early days of computing, humans and machines could communicate only through machine code, a low-level computer language of binary digits: strings of 0s and 1s that bear little resemblance to human communication. Over the last century, communication with machines has gradually moved closer to human language. Today, the fact that we can tell machines to generate a picture of a cat playing chess is evidence of great progress. This communication has improved step by step with the evolution of programming languages from low-level to high-level code, from assembler to C to Python, and with the introduction of human speech-like constructs such as the if-then statement. The final step now is to eliminate prompt engineering and other sensitivities to tweaks in input phrasing so that machines and humans can interact in a natural way. Human-machine dialogue should also allow for incremental references that continue the conversation from a past “save point.”

NLP deals with the interactions between computers and human languages, processing and analyzing large amounts of natural language data, while NLU undertakes the difficult task of detecting the user’s intention. Virtual assistants such as Alexa and Google Assistant use NLP, NLU, and machine learning (ML) to acquire new knowledge as they operate. By using predictive intelligence and analytics, the AI can personalize conversations and responses based on user preferences. While virtual assistants reside in people’s homes like a trusted friend, they are currently limited to a basic command language. People have adapted to this by speaking “keyword-ese” to get the best results, but their conversational AI still lags in understanding natural language interactions. When communication with their virtual assistant breaks down, people use repair strategies such as simplifying utterances, varying the amount of information given to the system, making semantic and syntactic adjustments to queries, and repeating commands.

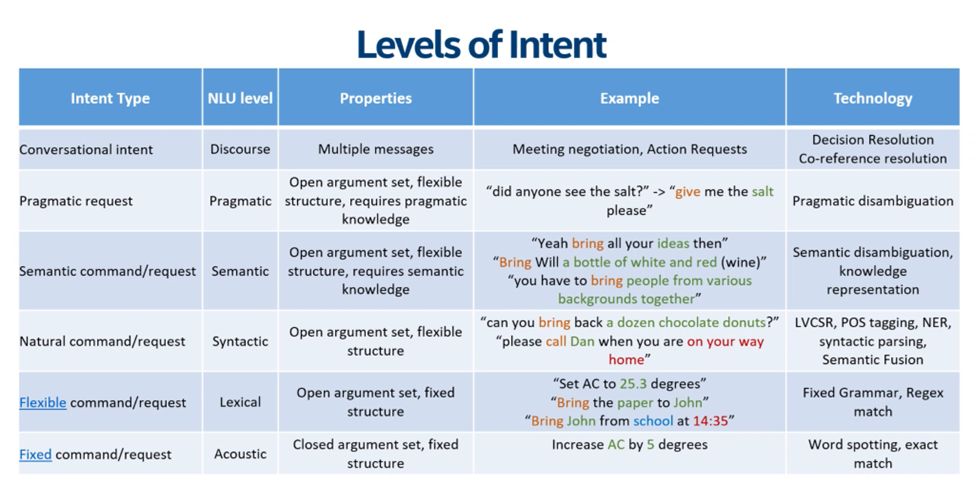

This is where NLU is critical in understanding intent. NLU analyzes text and speech to determine its meaning (see Figure 2). Using a data model of semantic and pragmatic definitions for human speech, NLU focuses on intent and entity recognition. The introduction of the Transformer neural network architecture in 2017 has led to gains in NLP performance in virtual assistants. These networks use self-attention mechanisms to process input data, allowing them to effectively capture dependencies in human language. BERT, introduced in 2018 by researchers at Google AI Language, addresses 11 of the most common NLP tasks in one model, improving on the traditional method of using a separate model for each specific task. BERT pre-trains language representations by training a general-purpose language understanding model on a large text corpus such as Wikipedia, and then applies the model to downstream NLP tasks such as question answering and language inference.
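To make the self-attention idea more concrete, here is a minimal sketch in plain Python. It is an illustration under simplifying assumptions, not how BERT or any production model is implemented: the token embeddings are made-up two-dimensional vectors, and queries, keys, and values are taken to be the embeddings themselves, whereas a real Transformer learns a separate projection for each.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(embeddings):
    """Scaled dot-product self-attention with identity projections.

    Assumption: queries, keys, and values are the embeddings themselves;
    a real Transformer learns a separate projection matrix for each.
    """
    dim = len(embeddings[0])
    outputs, all_weights = [], []
    for query in embeddings:
        # Score the current token against every token in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in embeddings
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors.
        mixed = [
            sum(w * value[i] for w, value in zip(weights, embeddings))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional embeddings for a three-token input.
tokens = ["play", "jazz", "music"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.1, 0.9]]
outputs, weights = self_attention(embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how strongly one token attends to every token in the input. With these invented vectors, "jazz" puts more weight on "music" than on "play", which is the kind of dependency the mechanism is meant to capture.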

Beyond virtual assistants, the advances with ChatGPT build on the performance gains that Transformer models brought to NLP. GPT-3, a Transformer-based model, was introduced in 2020, but its major breakthrough in popularity and use came with ChatGPT and its human-like conversational interface, which was made possible by reinforcement learning from human feedback (RLHF). With RLHF, ChatGPT can process and understand natural language inputs and generate outputs that are as human-like as possible.

Present: Large Language Models Dominate Conversational AI

Since its release by OpenAI in November 2022, ChatGPT has dominated the news with its seemingly well-written essays and test answers and its strong results on medical licensing and MBA exams. ChatGPT performed at or near the passing threshold on all three exams of the U.S. Medical Licensing Examination (USMLE) without any specialized training or reinforcement. This led researchers to conclude that “large language models may have the potential to assist with medical education, and potentially, clinical decision-making.” A Wharton School professor at the University of Pennsylvania tested ChatGPT on an Operations Management MBA final exam, and it received a B to B- grade. ChatGPT performed well on basic operations management and process analysis questions based on case studies, providing correct answers and solid explanations. When ChatGPT failed to match the problem with the right solution method, hints from a human expert helped the model correct its answer. While these results are promising, ChatGPT has limitations in reaching human-level conversation, which we discuss in the next section.

ChatGPT is built on the GPT-3 family of autoregressive language models, the largest of which has 175 billion parameters, and this large model size helps it perform well at understanding user intent. Based on the levels of intent in Figure 2, ChatGPT can process pragmatic requests from users that contain an open argument set and flexible structure by analyzing what the text prompt is trying to achieve. ChatGPT can write highly detailed responses and articulate answers, demonstrating breadth and depth of knowledge across domains such as medicine, business operations, and computer programming. GPT-4 is also showing impressive strengths, such as adding multimodal capabilities and improving scores on advanced human tests. GPT-4 is reported to have scored in the 90th percentile on the Uniform Bar Exam (versus the 10th percentile for ChatGPT) and in the 99th percentile on the USA Biology Olympiad (versus the 31st percentile for ChatGPT).
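The word “autoregressive” can be illustrated with a toy example: the model produces one token at a time, and each token it produces becomes part of the input for the next prediction. The sketch below is a deliberate simplification, assuming a tiny invented corpus and a bigram model that looks only at the previous word; an LLM conditions on the whole preceding context and uses a neural network rather than counts.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count which word follows which in the training sentences."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def generate(following, start, max_words=8):
    """Greedy autoregressive decoding: each chosen word becomes the next input."""
    words = [start]
    while len(words) < max_words:
        candidates = following.get(words[-1])
        if not candidates:
            break  # no continuation was observed for this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical three-sentence training corpus.
corpus = [
    "the cat plays chess",
    "the cat plays piano",
    "the cat plays chess today",
]
model = train_bigram(corpus)
generated = generate(model, "the")
print(generated)  # prints "the cat plays chess today"
```

The generation loop is the part that carries over to large models: predict, append, and predict again from the extended sequence.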

ChatGPT can also do machine programming, albeit to a degree. It can create programs in multiple languages including Python, JavaScript, C++, Java, and more. It can also analyze code for bugs and performance issues. However, so far it seems to be best utilized as part of a joint programmer/AI combination.

While OpenAI’s models are attracting much attention, other models are progressing in a similar direction, such as Google Brain’s open source 1.6 trillion-parameter Switch Transformer, which debuted in 2021, and Google Bard, which uses LaMDA (Language Model for Dialogue Applications) technology to search the web and provide real-time answers. Bard is currently available only to beta testers, so its performance against ChatGPT is unknown.

While LLMs have made great strides toward having natural conversations with humans, key growth areas need to be addressed.

To reach the next level of intelligence and human-level communication, key areas need to undergo a leap in capabilities — restructuring of knowledge, integration of multiple skills, and providing contextual adaptation.

Future: What is Missing from the Human-Machine Conversation?

Four key elements are still missing in moving conversational AI to the next level in carrying on natural conversations. To reach this level of intimacy and shared purpose, the machine needs to understand the meaning of the person’s symbolic communication, and answer with trustworthy custom responses that are meaningful to the person.

1) Producing trustworthy responses. AI systems should not hallucinate! Epistemological problems affect the way AI builds knowledge and differentiates between known and unknown information. The machine can make mistakes, producing biased results or even hallucinating when providing answers about things it doesn’t know. ChatGPT has difficulty capturing source attribution and information provenance. It can generate plausible-sounding but incorrect or nonsensical answers. In addition, it lacks factual correctness and common sense on physical, spatial, and temporal questions, and it struggles with math reasoning. According to OpenAI, it has difficulty with questions such as: “If I put cheese into the fridge, will it melt?” It performs poorly when planning or thinking methodically. When tested on the MBA final exam, it made surprising mistakes in sixth-grade-level math, which could cause massive errors in operations. The research found that “Chat GPT was not capable of handling more advanced process analysis questions, even when they are based on standard templates. This includes process flows with multiple products and problems with stochastic effects such as demand variability.”

2) Deep understanding of human symbology and idiosyncrasies. AI needs to work within the full symbolic world of humans, including the ability to do abstraction, customize, and understand partial references. The machine must be able to interpret people’s speech ambiguities and incomplete sentences in order to have meaningful conversations.

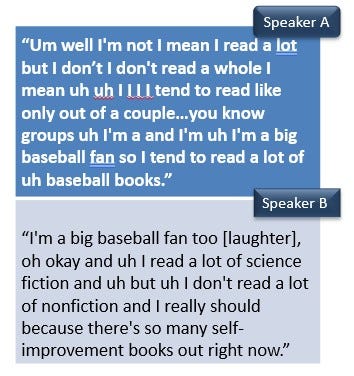

As shown in Figure 3, human speech patterns are often unintelligible. Should AI speak exactly like a human? Realistically, it could be the same as a person talking with a friend in loosely structured language, including a reasonable amount of “hmms,” “likes,” incomplete or ill-formed sentences, ambiguities, semantic abstractions, personal references, and common-sense inferences. But these idiosyncrasies of human speech should not overpower the communication and render it unintelligible.

3) Providing custom responses. AI needs the ability to customize and be familiar with the world of the user. ChatGPT often guesses the user’s intent instead of asking clarifying questions. In addition, as a fully encapsulated information model, ChatGPT does not have the ability to browse or search the internet to provide custom answers for the user. According to OpenAI, ChatGPT is limited in its custom answers because “it weights every token equally and lacks a notion of what is most important to predict and what is less important. With self-supervised objectives, task specification relies on forcing the desired task into a prediction problem, whereas ultimately, useful language systems (like virtual assistants) might be better thought of as taking goal-directed actions rather than just making predictions.” In addition, it would be helpful to have a multi-session context of the human-machine conversations as well as a theory of mind model of the user.

4) Becoming purpose-driven. When people work with a companion, the coordination is based not just on a text exchange, but on a shared purpose. AI needs to move beyond contextualized answers to become purpose-driven. In the evolving human-machine relationship, both parties need to become part of a journey to accomplish a goal, avoid or alleviate a problem, or share information. ChatGPT and other LLMs have not reached this level of interaction yet. As I explored in a previous blog post, intelligent machines need to go beyond the input-to-output replies and conversations of a chatbot.

The Path to the Next Level in Conversational AIs

LLMs like ChatGPT still have gaps in the cognitive skills needed to take conversational AI to the next level. Missing competencies include logical reasoning, temporal reasoning, numeric reasoning, and the overall ability to be goal-driven and define subtasks to achieve a larger task. Knowledge-related limitations in ChatGPT and other LLMs can be addressed by a Thrill-K approach, by adding retrieval and continuous learning. Knowledge resides in three places for AI:

1) Instantaneous knowledge. Commonly used knowledge and continuous functions that can be effectively approximated. It is available in the fastest and most expensive layer: the parametric memory of a neural network, or the working memory of other ML processing. ChatGPT currently relies on this end-to-end deep learning approach, but it needs to expand to include other knowledge sources to be more effective as a human companion.

2) Standby knowledge. Knowledge that is valuable to the AI system but not as commonly used, available in an adjacent structured knowledge base with as-needed extraction. It requires increased representation strength for discrete entities, or needs to be kept generalized and flexible for a variety of novel uses. Actions or outcomes based on standby knowledge require processing and internal resolution, enabling the AI to learn and adapt as a human companion.

3) Retrieved external knowledge. Information from a vast online repository, available outside the AI system for retrieval when needed. This allows the AI to customize answers for the human companion with several modalities of information, provide reasoned analysis, and explain the sources of information and the path to conclusion.
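The three knowledge tiers above can be pictured as a lookup that tries the fastest source first and falls back to slower ones. The following is only a toy sketch of that tiering idea, not an implementation of Thrill-K: the class name `TieredKnowledge`, the dictionaries standing in for each tier, and all of the example data are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class Answer:
    text: str
    source: str  # which knowledge tier produced the answer


class TieredKnowledge:
    """Toy three-tier lookup: instantaneous, then standby, then retrieved."""

    def __init__(
        self,
        instantaneous: Dict[str, str],
        standby: Dict[str, str],
        retrieve: Callable[[str], Optional[str]],
    ) -> None:
        self.instantaneous = instantaneous  # stands in for parametric memory
        self.standby = standby  # stands in for an adjacent knowledge base
        self.retrieve = retrieve  # stands in for external retrieval

    def lookup(self, query: str) -> Optional[Answer]:
        if query in self.instantaneous:
            return Answer(self.instantaneous[query], "instantaneous")
        if query in self.standby:
            return Answer(self.standby[query], "standby")
        text = self.retrieve(query)
        if text is not None:
            return Answer(text, "retrieved")
        return None  # report "unknown" instead of guessing


# Hypothetical contents for each tier.
external_documents = {"opening hours of the city library": "9:00 to 17:00"}
knowledge = TieredKnowledge(
    instantaneous={"capital of France": "Paris"},
    standby={"user's preferred language": "Spanish"},
    retrieve=external_documents.get,
)

for query in [
    "capital of France",
    "user's preferred language",
    "opening hours of the city library",
    "tomorrow's lottery numbers",
]:
    print(query, "->", knowledge.lookup(query))
```

Two design choices mirror the text: every answer carries the tier it came from, which supports explaining sources, and a query that no tier can answer returns `None` rather than a fabricated reply.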

Summary

The journey from machine language to human speak has evolved from humans entering simple binary digits into a computer, to bringing virtual assistants into our homes to perform simple tasks, to asking questions of LLMs such as ChatGPT and receiving articulate answers. Despite the great progress of recent LLM innovations, the path to the next level of conversational AI requires knowledge restructuring, multiple intelligences, and contextual adaptation to build AI systems that are true human companions.

References

- Mavrina, L., Szczuka, J. M., Strathmann, C., Bohnenkamp, L., Krämer, N. C., & Kopp, S. (2022). “Alexa, You’re Really Stupid”: A Longitudinal Field Study on Communication Breakdowns Between Family Members and a Voice Assistant. Frontiers in Computer Science, 4. https://doi.org/10.3389/fcomp.2022.791704

- Devlin, J., Chang, M.-W., Lee, K., & Toutanova, K. (2018, October 11). BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv.org. https://arxiv.org/abs/1810.04805

- Wikipedia contributors. (2023). Reinforcement learning from human feedback. Wikipedia. https://en.wikipedia.org/wiki/Reinforcement_learning_from_human_feedback

- Introducing ChatGPT. (n.d.). https://openai.com/blog/chatgpt

- Kung, T. H., Cheatham, M., Medenilla, A., Sillos, C., De Leon, L., Elepaño, C., Madriaga, M., Aggabao, R., Diaz-Candido, G., Maningo, J., & Tseng, V. (2022). Performance of ChatGPT on USMLE: Potential for AI-Assisted Medical Education Using Large Language Models. medRxiv (Cold Spring Harbor Laboratory). https://doi.org/10.1101/2022.12.19.22283643

- Needleman, E. (2023). Would Chat GPT Get a Wharton MBA? New White Paper By Christian Terwiesch. Mack Institute for Innovation Management. https://mackinstitute.wharton.upenn.edu/2023/would-chat-gpt3-get-a-wharton-mba-new-white-paper-by-christian-terwiesch/

- OpenAI. (2023). GPT-4 Technical Report. arXiv (Cornell University). https://doi.org/10.48550/arxiv.2303.08774

- Gewirtz, D. (2023, April 6). How to use ChatGPT to write code. ZDNET. https://www.zdnet.com/article/how-to-use-chatgpt-to-write-code/

- How Many Languages Does ChatGPT Support? The Complete ChatGPT Language List. (n.d.). https://seo.ai/blog/how-many-languages-does-chatgpt-support

- Tung, L. (2023, February 2). ChatGPT can write code. Now researchers say it’s good at fixing bugs, too. ZDNET. https://www.zdnet.com/article/chatgpt-can-write-code-now-researchers-say-its-good-at-fixing-bugs-too/

- Fedus, W., Zoph, B., & Shazeer, N. (2021). Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity. arXiv (Cornell University). https://doi.org/10.48550/arxiv.2101.03961

- Pichai, S. (2023, February 6). An important next step on our AI journey. Google. https://blog.google/technology/ai/bard-google-ai-search-updates/

- Dickson, B. (2022, July 31). Large language models can’t plan, even if they write fancy essays. TNW | Deep-Tech. https://thenextweb.com/news/large-language-models-cant-plan

- Brown, T., Mann, B. F., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D. M., Wu, J. C., Winter, C., . . . Amodei, D. (2020). Language Models are Few-Shot Learners. arXiv (Cornell University). https://doi.org/10.48550/arxiv.2005.14165

- Singer, G. (2022, August 17). Beyond Input-Output Reasoning: Four Key Properties of Cognitive AI. Medium. https://towardsdatascience.com/beyond-input-output-reasoning-four-key-properties-of-cognitive-ai-3f82cde8cf1e

- Singer, G. (2022, January 6). Thrill-K: A Blueprint for The Next Generation of Machine Intelligence. Medium. https://towardsdatascience.com/thrill-k-a-blueprint-for-the-next-generation-of-machine-intelligence-7ddacddfa0fe

Have Machines Just Made an Evolutionary Leap to Speak in Human Language? was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

from Towards Data Science - Medium

https://towardsdatascience.com/have-machines-just-made-an-evolutionary-leap-to-speak-in-human-language-319237593aa4?source=rss----7f60cf5620c9---4
