
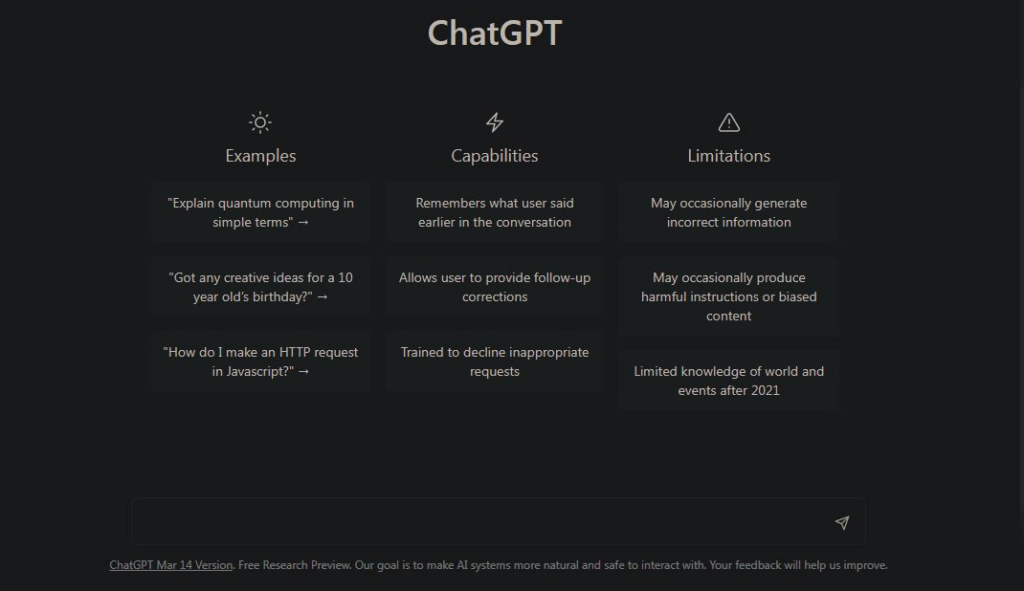

ChatGPT is an artificial intelligence chatbot developed by OpenAI (image via ChatGPT).

You’ve probably heard of ChatGPT at this point. People use it to do their homework, code frontend web apps, and write scientific papers. Using a language model can feel like magic; a computer understands what you want and gives you the right answer. But under the hood, it’s just code and data.

When you prompt ChatGPT with an instruction, like “Write me a poem about cats”, it turns that prompt into tokens. Tokens are fragments of text, like “write” or “poe”. Every language model has its own vocabulary of tokens.
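Here is a minimal sketch of tokenization. It assumes a tiny made-up vocabulary (`VOCAB`) and a simple greedy longest-match rule in a hypothetical `tokenize` function. ChatGPT's real tokenizer uses a much larger learned vocabulary, so treat this as an illustration of the idea rather than the actual method.

```python
# Hypothetical toy vocabulary; real models learn tens of thousands of tokens.
VOCAB = ["write", " me", " a", " poe", "m", " about", " cat", "s"]

def tokenize(text, vocab=VOCAB):
    """Split text into tokens by greedily taking the longest matching vocabulary entry."""
    tokens = []
    position = 0
    while position < len(text):
        # Vocabulary entries that match the text at the current position.
        matches = [tok for tok in vocab if text.startswith(tok, position)]
        if not matches:
            raise ValueError(f"no token matches text at position {position}")
        best = max(matches, key=len)
        tokens.append(best)
        position += len(best)
    return tokens

tokens = tokenize("write me a poem about cats")
# Models work with integer token IDs, here simply the index in the vocabulary.
token_ids = [VOCAB.index(tok) for tok in tokens]
print(tokens)
print(token_ids)
```

Notice that “poem” is split into two fragments because the toy vocabulary has no single entry for it.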

Computers can’t work with text directly, so language models turn each token into an embedding. An embedding is a vector of numbers, similar to a Python list of floats, such as [1.1, -1.2, 2, 0.1, ...]. Semantically similar tokens are turned into similar lists of numbers.
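To see what “similar lists of numbers” can mean, here is a small sketch that compares embeddings with cosine similarity. The three-dimensional vectors in `EMBEDDINGS` are invented for illustration; real embeddings are learned during training and have hundreds or thousands of dimensions.

```python
import math

# Hypothetical embeddings, made up for this example.
EMBEDDINGS = {
    "cat": [1.1, -1.2, 2.0],
    "kitten": [1.0, -1.0, 1.8],
    "car": [-0.9, 1.5, 0.2],
}

def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

cat_kitten = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["kitten"])
cat_car = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["car"])
print(f"cat vs kitten: {cat_kitten:.3f}")
print(f"cat vs car:    {cat_car:.3f}")
```

With these made-up values, “cat” and “kitten” point in almost the same direction, while “cat” and “car” do not.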

ChatGPT is a causal language model. This means it takes all of the previous tokens and tries to predict the next token, one token at a time. In this way, it’s like autocomplete: it takes all of the text so far and tries to predict what comes next.
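The autocomplete idea can be sketched with simple counting. This toy example is a stand-in, not how ChatGPT works: it only looks at the last token and counts which token followed it in a tiny made-up corpus, whereas a real causal language model conditions on all previous tokens. The names `corpus`, `following`, and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict

# Tiny made-up training text, already split into tokens.
corpus = "the cat sat on the mat and the cat slept".split()

# Count which token follows each token in the corpus.
following = defaultdict(Counter)
for current, nxt in zip(corpus, corpus[1:]):
    following[current][nxt] += 1

def predict_next(context):
    """Return the most frequent follower of the last token, or None if unseen."""
    candidates = following[context[-1]]
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

prediction = predict_next(["on", "the"])
print(prediction)
```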

It makes the prediction by taking the list of embeddings and passing it through multiple transformer layers. Transformers are a type of neural network architecture that can find associations between elements in a sequence. They do this using a mechanism called attention. For example, if you’re reading the question “Who is Albert Einstein?” and you want to come up with the answer, you’ll mostly pay attention to the words “Who” and “Einstein”.
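Here is a rough sketch of attention for a single query, using the common scaled dot-product formulation. All of the vectors are invented so that “Who” and “Einstein” score highest. In a real transformer, the query, key, and value vectors come from learned projections of the embeddings, and the function name `attend` is just for this example.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # One score per token: how well its key matches the query.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Made-up 2-dimensional vectors for each token in the question.
tokens = ["Who", "is", "Albert", "Einstein"]
keys = [[2.0, 0.0], [0.1, 0.1], [0.5, 1.0], [0.0, 2.0]]
values = keys  # reuse the keys as values to keep the sketch short
query = [1.5, 1.5]

weights, output = attend(query, keys, values)
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.2f}")
```

The weights say how much each token contributes to the output, which is the sense in which the model “pays attention” to some words more than others.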

Transformers are trained to identify which words in your prompt to pay attention to in order to generate a response. Training can take thousands of GPUs and several months! During this time, transformers are fed very large amounts of text, often hundreds of gigabytes or more, so that they can learn the correct associations.

To make a prediction, transformers turn the input embeddings into the correct output embeddings. So you’ll end up with an output embedding like [1.5, -4, -1.3, 0.1, ...], which you can turn back into a token.
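One common way to turn an output embedding back into a token is to score it against every token in the vocabulary and pick the best match. The sketch below assumes a made-up table `TOKEN_EMBEDDINGS` and a hypothetical helper `to_token`; real models use a learned output layer over the full vocabulary and often sample from the probabilities instead of always taking the top one.

```python
import math

# Hypothetical 4-dimensional token embeddings, invented for this example.
TOKEN_EMBEDDINGS = {
    "cat": [1.4, -3.8, -1.2, 0.2],
    "dog": [-1.0, 2.0, 0.5, -0.3],
    "poem": [0.2, 0.1, 3.0, 1.0],
}

def to_token(output_embedding):
    """Score the output embedding against each token and return the most likely one."""
    tokens = list(TOKEN_EMBEDDINGS)
    # Dot product with each token embedding gives one raw score (logit) per token.
    logits = [
        sum(o * e for o, e in zip(output_embedding, TOKEN_EMBEDDINGS[tok]))
        for tok in tokens
    ]
    # Softmax converts the logits into probabilities.
    highest = max(logits)
    exps = [math.exp(logit - highest) for logit in logits]
    total = sum(exps)
    probabilities = {tok: e / total for tok, e in zip(tokens, exps)}
    best = max(probabilities, key=probabilities.get)
    return best, probabilities

best_token, probabilities = to_token([1.5, -4.0, -1.3, 0.1])
print(best_token)
```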

If ChatGPT is only predicting one token at a time, you might wonder how it can come up with entire essays. The answer is that it’s autoregressive: it predicts a token, adds it to the end of the prompt, and feeds the longer sequence back into the model. So the model actually runs once for every token in the output. This is why you see ChatGPT’s output appear piece by piece instead of all at once.

ChatGPT stops generating the output when the transformer layers output a special token called a stop token. At this point, you hopefully have a good response to your prompt.
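The generation loop, including the stop token, can be sketched in a few lines. The model here is a stand-in: `predict_next` just looks up the last token in a hand-written table, where a real model would run the transformer layers over all of the tokens. The names `STOP_TOKEN`, `NEXT_TOKEN`, and `generate` are made up for this example.

```python
STOP_TOKEN = "<stop>"  # hypothetical name for the stop token

# Stand-in for the model: maps the last token to the next one.
NEXT_TOKEN = {
    "cats": "are",
    "are": "soft",
    "soft": STOP_TOKEN,
}

def predict_next(tokens):
    """Toy model; a real one would use every token, not only the last."""
    return NEXT_TOKEN.get(tokens[-1], STOP_TOKEN)

def generate(prompt_tokens, max_new_tokens=20):
    """Predict one token at a time, feeding each prediction back in."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        next_token = predict_next(tokens)
        if next_token == STOP_TOKEN:
            break  # the model signalled that the response is finished
        tokens.append(next_token)
    return tokens

result = generate(["cats"])
print(" ".join(result))
```

The `max_new_tokens` limit is a safety net in this sketch, so the loop still ends if the stop token never appears.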

The cool part is that all of this can be done using Python code! PyTorch and TensorFlow are the most commonly used tools for creating language models.

Interested in learning more? Our new course “Zero to GPT” will take you from zero deep learning experience to training your own GPT model. You’ll learn everything from the basics of neural networks to cutting-edge techniques for optimizing transformer models. Don’t miss this early opportunity to upskill with GPT!

Sign up for free today!

from Dataquest https://ift.tt/azkiAIs

via RiYo Analytics
