https://ift.tt/1TFrJQc Improving your big-data analysis workflows with an open-source library If you’re a data scientist working with larg...

Improving your big-data analysis workflows with an open-source library

If you’re a data scientist working with large datasets, you must have run out of memory (OOM) when performing analytics or training machine learning models.

That’s not surprising. The memory available on a desktop or laptop computer can easily exceed large datasets, making loading the entire dataset nearly impossible. We are forced to work with only a small subset of data at a time, which can lead to slow and inefficient data analysis.

Worse, performing data analysis on large datasets can take a long time, especially when using complex algorithms and models. Data scientists may have trouble exploring their data quickly and efficiently, resulting in less effective data analysis.

Disclaimer: I am not affiliated with vaex.

Vaex: a data analysis library for large datasets.

Enter vaex. It is a powerful open-source data analysis library for working with large datasets. It helps data scientists speed up their data analysis by allowing them to work with large datasets that would not fit in memory using an out-of-core approach. This means that vaex only loads the data into memory as needed, allowing data scientists to work with datasets that are larger than the memory on their computers.

4 key features of vaex

Some of the key features of vaex that make it useful for speeding up data analysis include:

- Fast and efficient handling of large datasets: vaex uses an optimized in-memory data representation and parallelized algorithms to quickly and efficiently work with large datasets. vaex works with huge tabular data, processes >10 to the power of 9 rows/second.

- Flexible and interactive data exploration: it allows you to interactively explore their data using a variety of built-in visualizations and tools, including scatter plots, histograms, and kernel density estimates.

- Easy-to-use API: vaex has a user-friendly API. The library also integrates well with popular data science tools like pandas, numpy, and matplotlib.

- Scalability: vaex scales to very large datasets and can be used on a single machine or distributed across a cluster of machines.

Getting started with vaex

To use Vaex in your data analysis project, you can simply install it using pip:

pip install vaex

Once Vaex is installed, you can import it into your Python code and use it to perform various data analysis tasks.

Here is a simple example of how to use Vaex to calculate the mean and standard deviation of a dataset.

import vaex

# load an example dataset

df = vaex.example()

# calculate the mean and standard deviation

mean = df.mean()

std = df.std()

# print the results

print("mean:", mean)

print("std:", std)

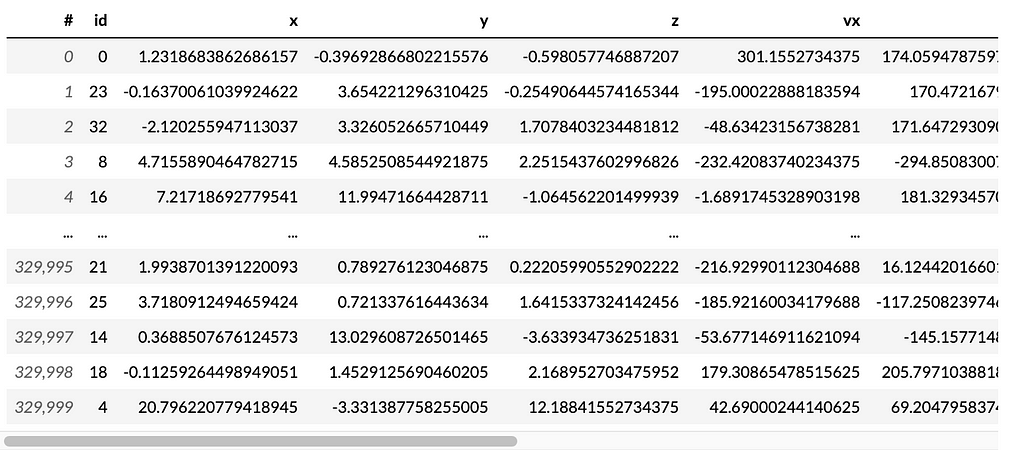

In this example, we use the vaex.open() function to load an example dataframe (screenshot above), and then use the mean() and std() methods to calculate the mean and standard deviation of the dataset.

Vaex syntax is similar to pandas

Filtering with vaex

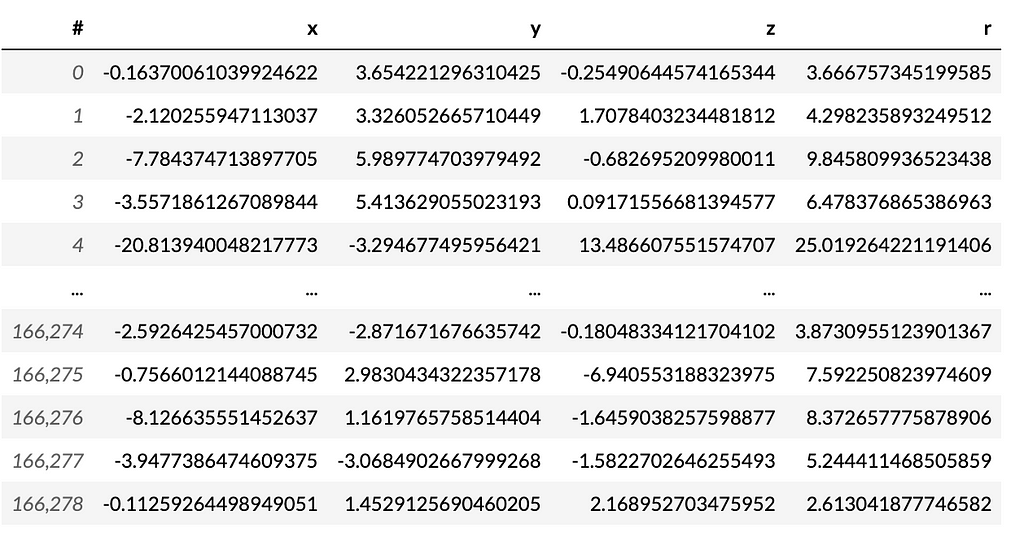

Many functions in vaex are similar to pandas. For example, for filtering data with vaex, you can use the following.

df_negative = df[df.x < 0]

df_negative[['x', 'y', 'z', 'r']]

Grouping by with vaex

Aggregating data is essential for any analytics. We can use vaex to perform the same function as we do for pandas.

# Create a categorical column that determines if x is positive or negative

df['x_sign'] = df['x'] > 0

# Create an aggregation based on x_sign to get y's mean and z's min and max.

df.groupby(by='x_sign').agg({'y': 'mean',

'z': ['min', 'max']})

Other aggregation, including count, first,std, var, nunique are available.

Performing machine learning with vaex

You can also use vaex to perform machine learning. Its API has very similar structure to that of scikit-learn.

To use that we need to perform pip install.

import vaex

We will illustrate how one can use vaex to predict the survivors of Titanic.

First, need to load the titanic dataset into a vaex dataframe. We will do that using the vaex.open() method, as shown below:

import vaex

# Download the titanic dataframe (MIT License) from https://www.kaggle.com/c/titanic

# Load the titanic dataset into a vaex dataframe

df = vaex.open('titanic.csv')

Once the dataset is loaded into the dataframe, we can then use vaex.mlto train and evaluate a machine learning model that predicts whether or not a passenger survived the titanic disaster. For example, the data scientist could use a random forest classifier to train the model, as shown below.

import vaex.ml.model

# Train a random forest classifier on the titanic dataset

model = vaex.ml.model.RandomForestClassifier()

model.fit(df, 'survived')

Of course, other preprocessing steps and machine learning models (including neural networks!) are available.

Once the model is trained, the data scientist can evaluate its performance using the vaex.ml.model.Model.evaluate() method, as shown below:

# Evaluate the model's performance on the test set

accuracy = model.evaluate(df, 'survived')

print(f'Accuracy: {accuracy}')

Using vaex to solve the titanic problem is an absolute overkill, but this serves to illustrate that vaex can solve machine learning problems.

Use vaex to supercharge your data science pipelines

Overall, vaex.ml provides a powerful and efficient way for data scientists to perform machine learning on large datasets. Its out-of-core approach and optimized algorithms make it possible to train and evaluate machine learning models on datasets that would not fit in memory, allowing data scientists to work with even the largest datasets.

We didn’t cover many of the functions available to vaex. To do that, I strongly encourage you to look at the documentation.

Follow me for more content.

I am a data scientist working in tech. I share data science tips like this regularly on Medium and LinkedIn. Follow me for more future content.

How to Speed Up Data Processing in Pandas was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

from Towards Data Science - Medium

https://towardsdatascience.com/how-to-speed-up-data-processing-in-pandas-a272d3485b24?source=rss----7f60cf5620c9---4

via RiYo Analytics

ليست هناك تعليقات