As Computer Vision applications are becoming omnipresent in our lives, understanding the functioning principles of Convolutional Neural Networks is essential for every Data Science practitioner

In my previous article, I built a Deep Neural Network without using popular modern deep learning libraries such as TensorFlow, PyTorch, and Keras. I later used that network to classify handwritten digits. The results were not state-of-the-art, but they were nevertheless satisfactory. Now I want to go a step further: my objective is to develop a Convolutional Neural Network (CNN) using NumPy only.

The motivation behind this task is the same as for the fully connected network: Python deep learning libraries, despite being powerful tools, keep practitioners from understanding what happens at a low level. This is especially true for CNNs, whose inner workings are less intuitive than those of classical deep networks. The only solution is to get our hands dirty and implement these networks ourselves.

I intend this article as a practical, hands-on guide rather than a comprehensive guide to the functioning principles of CNNs. As a consequence, the theoretical part is narrow and mostly serves to support the practical section. For readers who want a better grasp of how convolutional networks work, I recommend a couple of great resources: this video from The Independent Code and this complete guide.

What are Convolutional Neural Networks?

Convolutional Neural Networks use a particular architecture and particular operations that make them well suited for image-related tasks such as image classification, object localization, image segmentation, and many others. They loosely mimic the human visual cortex, where each biological neuron reacts only to a small portion of the visual field, and higher-level neurons react to the outputs of lower-level neurons [1].

As demonstrated in my previous article, even classical neural networks can be used for tasks like image classification. The issue is that they work well only with small images and become extremely inefficient on medium or large ones, because of the huge number of parameters they require. For example, a 200x200 pixel image has 40,000 pixels, and if the first layer of the network has 1,000 units, that layer alone needs 40 million weights. CNNs greatly mitigate this problem because they use partially connected layers and weight sharing.
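A quick sketch of that arithmetic, assuming a single-channel (grayscale) image and counting weights only, without biases. The 32 3x3 filters on the convolutional side are an illustrative choice that happens to match the layer used later in this article; the point is that a convolutional layer's weight count does not depend on the image size at all.

```python
# Hedged sketch: first-layer weight counts, biases ignored, 1 input channel assumed.
image_h, image_w = 200, 200
num_pixels = image_h * image_w                 # 40,000 input values

# Fully connected layer: every unit is connected to every pixel.
dense_units = 1_000
dense_weights = num_pixels * dense_units       # 40,000,000 weights

# Convolutional layer: each filter is shared across all positions of the image
# (weight sharing), so the count depends only on the number and shape of filters.
num_filters, kernel_size = 32, 3               # illustrative assumption
conv_weights = num_filters * kernel_size * kernel_size   # 288 weights

print(f"dense layer: {dense_weights:,} weights")
print(f"conv layer:  {conv_weights:,} weights")
```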

The main components of a Convolutional Neural Network are:

- convolutional layers

- pooling layers

Convolutional Layers

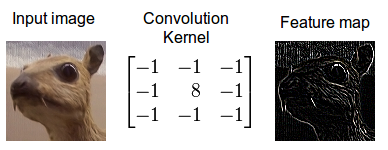

A convolutional layer consists of a set of filters (also called kernels) that, when applied to the layer's input, transform the original image in some way. A filter is a matrix whose element values determine the kind of transformation performed. For example, one 3x3 kernel can highlight the vertical edges in an image, while another accentuates the horizontal edges.

The values of the kernel elements are not chosen manually: they are parameters that the network learns during training.
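Although the network learns its kernels, hand-picked kernels make the effect easy to see. The sketch below assumes Prewitt-style edge kernels (a common textbook choice, not necessarily the exact ones intended here) and slides them over a toy image without padding. Like most deep learning code, it computes a cross-correlation, which the CNN literature conventionally calls a convolution.

```python
import numpy as np

def correlate2d_valid(image, kernel):
    """Slide `kernel` over `image` with stride 1 and no padding."""
    k = kernel.shape[0]
    out_h, out_w = image.shape[0] - k + 1, image.shape[1] - k + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Element-wise product of the kernel with the patch it covers, then sum.
            out[i, j] = np.sum(image[i:i + k, j:j + k] * kernel)
    return out

# Prewitt-style kernels (assumed): respond to left-right or top-bottom intensity changes.
vertical_kernel = np.array([[1, 0, -1],
                            [1, 0, -1],
                            [1, 0, -1]])
horizontal_kernel = vertical_kernel.T

# Toy 6x6 image: dark left half, bright right half, i.e. one vertical edge.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

print(correlate2d_valid(image, vertical_kernel))
print(correlate2d_valid(image, horizontal_kernel))
```

The vertical-edge kernel responds (with magnitude 3) only in the two columns whose windows straddle the boundary between the dark and bright halves, while the horizontal-edge kernel returns all zeros because nothing changes from top to bottom.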

The role of convolutions is to isolate different features present in the image. Dense layers later use these features.

Pooling Layers

Pooling layers are quite simple. Their task is to shrink the input images in order to reduce the computational load and memory consumption of the network. Smaller images also mean fewer parameters in the layers that follow the pooling layer.

A pooling layer uses a kernel (usually 2x2) to aggregate each portion of the input image into a single value. A 2x2 max pooling kernel, for example, takes 4 pixels of the input image and returns only the one with the maximum value.
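A minimal NumPy sketch of 2x2 max pooling with stride 2. For brevity it uses a reshape trick instead of explicit loops, and it assumes the image height and width are even.

```python
import numpy as np

def max_pool_2x2(image):
    """2x2 max pooling with stride 2; assumes even height and width."""
    h, w = image.shape
    # blocks[a, b, c, d] == image[2a + b, 2c + d]: one 2x2 block per (a, c).
    blocks = image.reshape(h // 2, 2, w // 2, 2)
    # Keep only the largest pixel of each block.
    return blocks.max(axis=(1, 3))

image = np.array([[1, 3, 2, 0],
                  [4, 2, 1, 5],
                  [0, 1, 7, 2],
                  [6, 2, 3, 1]])
print(max_pool_2x2(image))
# [[4 5]
#  [6 7]]
```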

Python Implementation

All the code is available in this GitHub repository: andreoniriccardo/CNN-from-scratch (Convolutional Neural Network from scratch).

The idea behind this implementation is to create Python classes representing the convolutional and max pooling layers. Additionally, as this code is later applied to the MNIST classification problem, I create a class for the softmax layer.

Each class contains the methods for implementing forward propagation and backpropagation.

The layers are later concatenated in a list to generate the actual CNN.
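A minimal sketch of how such a list of layers might be driven, assuming every layer exposes forward_prop() and back_prop() methods with a uniform signature. The Scale class and the alpha learning-rate argument are hypothetical stand-ins introduced only to make the example runnable.

```python
import numpy as np

class Scale:
    """Hypothetical stand-in layer: multiplies its input by a fixed factor."""
    def __init__(self, factor):
        self.factor = factor

    def forward_prop(self, x):
        return x * self.factor

    def back_prop(self, grad, alpha):
        # d(factor * x)/dx = factor; this toy layer has no weights to update with alpha.
        return grad * self.factor

def cnn_forward(layers, image):
    """Feed the input through every layer, in list order."""
    output = image
    for layer in layers:
        output = layer.forward_prop(output)
    return output

def cnn_back_prop(layers, gradient, alpha):
    """Propagate the loss gradient through the layers in reverse order."""
    for layer in reversed(layers):
        gradient = layer.back_prop(gradient, alpha)
    return gradient

layers = [Scale(2.0), Scale(3.0)]
out = cnn_forward(layers, np.array([1.0, 2.0]))
grad_in = cnn_back_prop(layers, np.ones(2), alpha=0.01)
```

Keeping the layers in a plain list means the network's depth and order are just data: the forward pass walks the list front to back, and backpropagation walks it back to front.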

Convolutional Layer Implementation

The constructor takes as inputs the number of kernels of the convolutional layer and their size. I only use square kernels of size kernel_size by kernel_size.

In the constructor, I generate random filters of shape (kernel_num, kernel_size, kernel_size) and divide each element by the squared kernel size for normalization.
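A sketch of a constructor matching that description, assuming the random values are drawn from a standard normal distribution with np.random.randn (the text only says the filters are random).

```python
import numpy as np

class ConvolutionLayer:
    def __init__(self, kernel_num, kernel_size):
        self.kernel_num = kernel_num
        self.kernel_size = kernel_size
        # Random filters of shape (kernel_num, kernel_size, kernel_size), drawn from a
        # standard normal distribution (an assumption) and divided by kernel_size**2.
        self.kernels = np.random.randn(kernel_num, kernel_size, kernel_size) / (kernel_size ** 2)

layer = ConvolutionLayer(kernel_num=32, kernel_size=3)
print(layer.kernels.shape)  # (32, 3, 3)
```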

The patches_generator() method is a generator: it yields the portions of the image on which each convolution step is performed.
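A standalone sketch of such a generator, assuming stride 1, no padding (consistent with the 28x28 to 26x26 shrinkage described below), and a single-channel 2D input. It yields each patch together with the row and column of its top-left corner, so the caller knows where to write the result.

```python
import numpy as np

def patches_generator(image, kernel_size):
    """Yield every kernel_size x kernel_size patch (stride 1, no padding) and its position."""
    image_h, image_w = image.shape
    for h in range(image_h - kernel_size + 1):
        for w in range(image_w - kernel_size + 1):
            yield image[h:h + kernel_size, w:w + kernel_size], h, w

image = np.arange(16).reshape(4, 4)
patches = list(patches_generator(image, 3))
print(len(patches))   # 4: a 3x3 kernel fits in 2 x 2 positions of a 4x4 image
print(patches[0][0])  # the top-left 3x3 patch
```

Using a generator avoids materializing all patches at once; the forward and backward passes can iterate over the same sequence of positions.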

The forward_prop() method carries out the convolution for each patch generated by the method above.
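Putting the constructor, the generator, and the forward pass together gives a minimal sketch of the layer. Two details are assumptions: the output is stored with shape (height, width, kernel_num), and NumPy broadcasting applies all kernels to a patch in a single multiplication.

```python
import numpy as np

class ConvolutionLayer:
    def __init__(self, kernel_num, kernel_size):
        self.kernel_num = kernel_num
        self.kernel_size = kernel_size
        self.kernels = np.random.randn(kernel_num, kernel_size, kernel_size) / (kernel_size ** 2)

    def patches_generator(self, image):
        image_h, image_w = image.shape
        self.image = image  # kept so a backward pass can revisit the same patches
        for h in range(image_h - self.kernel_size + 1):
            for w in range(image_w - self.kernel_size + 1):
                yield image[h:h + self.kernel_size, w:w + self.kernel_size], h, w

    def forward_prop(self, image):
        image_h, image_w = image.shape
        k = self.kernel_size
        output = np.zeros((image_h - k + 1, image_w - k + 1, self.kernel_num))
        for patch, h, w in self.patches_generator(image):
            # (k, k) patch * (kernel_num, k, k) kernels broadcasts to (kernel_num, k, k);
            # summing over the last two axes gives one value per kernel.
            output[h, w] = np.sum(patch * self.kernels, axis=(1, 2))
        return output

conv = ConvolutionLayer(kernel_num=32, kernel_size=3)
image = np.random.rand(28, 28)
feature_maps = conv.forward_prop(image)
print(feature_maps.shape)  # (26, 26, 32)
```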

Finally, the back_prop() method computes the gradient of the loss function with respect to each weight of the layer and updates the weights accordingly. Note that the input to this method is not the global loss of the network: it is the gradient of the loss with respect to the convolutional layer's output, which the max pooling layer passes back to the convolutional layer.
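The gradient follows directly from the forward pass: each output value Y[h, w, f] is the sum of patch(h, w) * kernels[f], so the derivative of the loss E with respect to kernels[f] is the sum over all positions of patch(h, w) * dE/dY[h, w, f]. Below is a standalone sketch, assuming plain gradient descent and a hypothetical learning-rate parameter alpha.

```python
import numpy as np

def conv_back_prop(image, kernels, dE_dY, alpha):
    """Gradient-descent step for a stride-1, unpadded convolutional layer (sketch).

    image:   (H, W) input seen during the forward pass
    kernels: (kernel_num, k, k) filters, updated in place
    dE_dY:   (H - k + 1, W - k + 1, kernel_num) gradient passed back by the next layer
    alpha:   learning rate (hypothetical parameter name)
    """
    kernel_num, k, _ = kernels.shape
    dE_dk = np.zeros_like(kernels)
    out_h, out_w, _ = dE_dY.shape
    for h in range(out_h):
        for w in range(out_w):
            patch = image[h:h + k, w:w + k]
            # Y[h, w, f] = sum(patch * kernels[f])  =>  dY[h, w, f]/dkernels[f] = patch
            for f in range(kernel_num):
                dE_dk[f] += patch * dE_dY[h, w, f]
    kernels -= alpha * dE_dk  # update the weights in place
    return dE_dk
```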

To show the actual effect of this class, I instantiate a ConvolutionLayer object with 32 3x3 filters and apply the forward propagation method to an image. The output consists of 32 slightly smaller images.

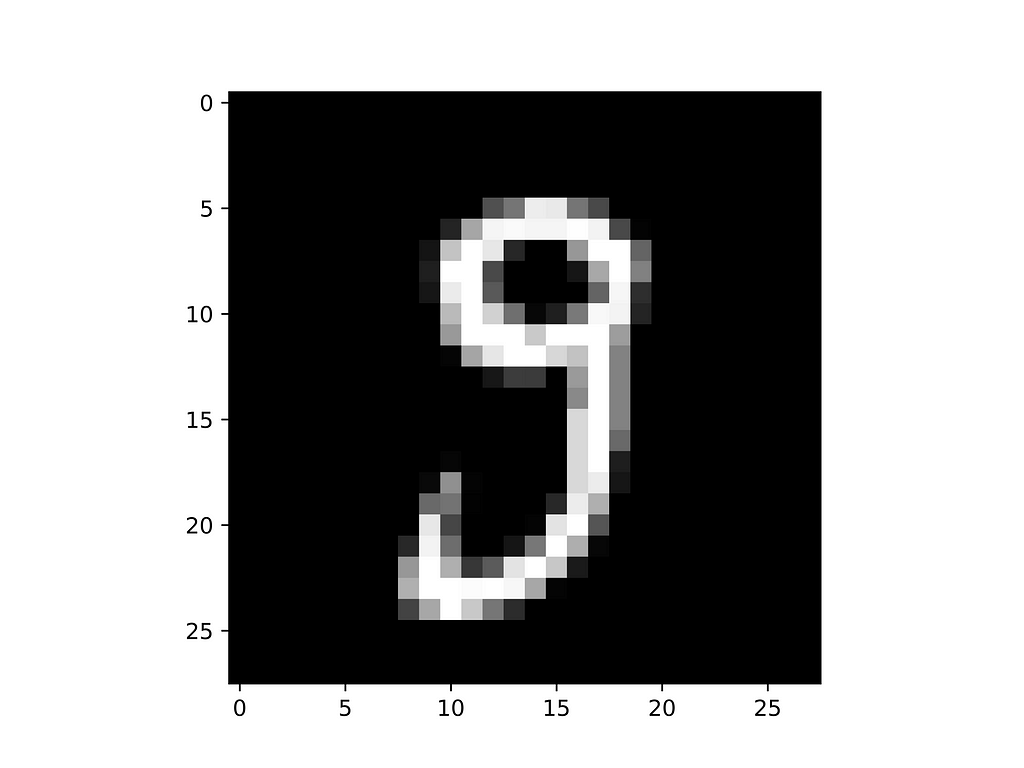

The original input image is 28x28 pixels and looks like this:

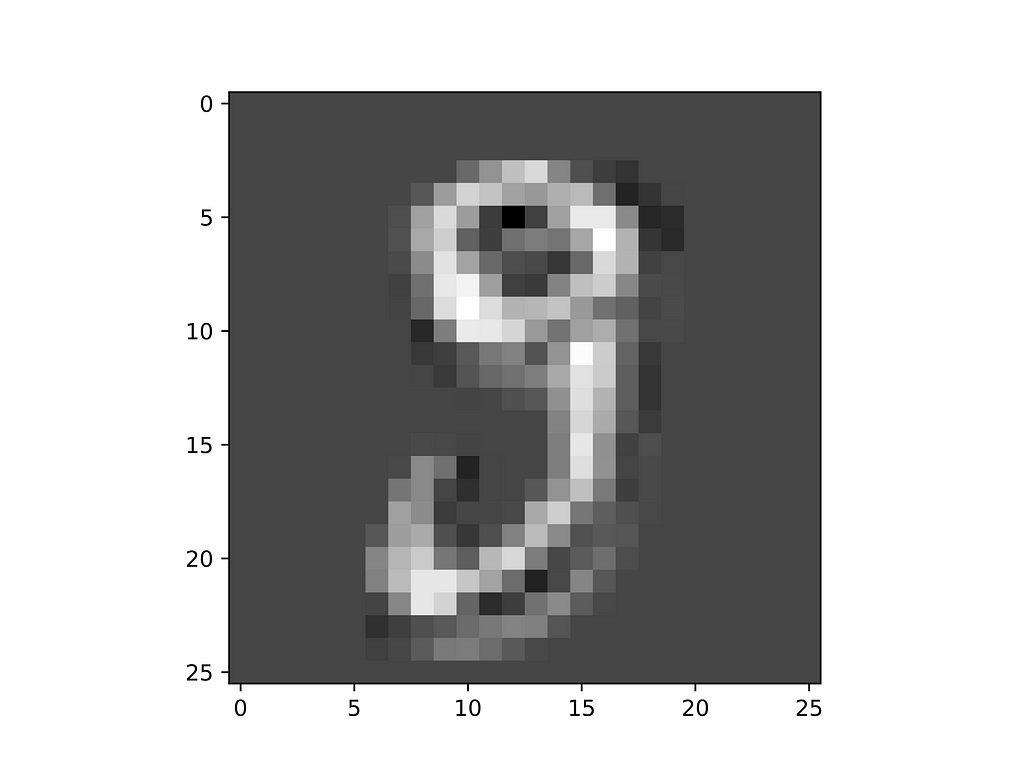

After applying the convolutional layer's forward propagation method, I obtain 32 images of size 26x26. Here I plot one of them:

As you can see, the image is slightly smaller and the handwritten digit is less clear. Keep in mind that this operation was performed by a filter filled with random values, so it doesn't reflect what a trained CNN actually does. Still, it gives an idea of how convolutions produce smaller images in which the object's features are isolated.
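The 26x26 size follows from the standard output-size formula for a convolution without padding, where n is the input side, k the kernel side, and s the stride (assumed to be 1 here):

$$
n_{\text{out}} = \left\lfloor \frac{n - k}{s} \right\rfloor + 1 = \frac{28 - 3}{1} + 1 = 26
$$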

Max Pooling Layer Implementation

The constructor only assigns the kernel size. The other methods work similarly to those of the convolutional layer, the main difference being that the backpropagation method doesn't update any weights. In fact, the pooling layer doesn't rely on weights to perform the aggregation.
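A sketch of a max pooling layer along those lines, assuming non-overlapping windows (stride equal to kernel_size) and a (height, width, channels) input such as the 26x26x32 output of the convolutional layer. In back_prop, the incoming gradient is routed only to the input position that held each window's maximum, since the other positions did not affect the output. If several positions tie for the maximum, this sketch sends the gradient to all of them, which is a simplification.

```python
import numpy as np

class MaxPoolingLayer:
    def __init__(self, kernel_size):
        # The kernel size is the only attribute: pooling has no weights to learn.
        self.kernel_size = kernel_size

    def patches_generator(self, image):
        """Yield non-overlapping kernel_size x kernel_size windows of an (H, W, C) input."""
        k = self.kernel_size
        self.image = image  # stored for back_prop
        for h in range(image.shape[0] // k):
            for w in range(image.shape[1] // k):
                yield image[h * k:(h + 1) * k, w * k:(w + 1) * k], h, w

    def forward_prop(self, image):
        k = self.kernel_size
        output = np.zeros((image.shape[0] // k, image.shape[1] // k, image.shape[2]))
        for patch, h, w in self.patches_generator(image):
            output[h, w] = np.amax(patch, axis=(0, 1))  # one maximum per channel
        return output

    def back_prop(self, dE_dY):
        """Return dE/dX; no weights are updated."""
        k = self.kernel_size
        dE_dX = np.zeros(self.image.shape)
        for patch, h, w in self.patches_generator(self.image):
            # (k, k, C) boolean mask marking each channel's maximum (ties all marked).
            mask = patch == np.amax(patch, axis=(0, 1))
            dE_dX[h * k:(h + 1) * k, w * k:(w + 1) * k] = mask * dE_dY[h, w]
        return dE_dX

pool = MaxPoolingLayer(kernel_size=2)
print(pool.forward_prop(np.random.rand(26, 26, 32)).shape)  # (13, 13, 32)
```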

Softmax Layer Implementation

The softmax layer flattens the output volume produced by the max pooling layer and outputs 10 values. They can be interpreted as the probabilities that the image depicts each of the digits 0–9.
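A minimal sketch of the forward pass of such a layer: flatten, apply a dense layer, then softmax. The 13x13x32 input shape assumes a 2x2 max pooling applied to the 26x26x32 convolution output, and the weight initialization is an assumption as well.

```python
import numpy as np

class SoftmaxLayer:
    def __init__(self, input_units, output_units):
        # Small random weights and zero biases (an assumed initialization).
        self.weight = np.random.randn(input_units, output_units) / input_units
        self.bias = np.zeros(output_units)

    def forward_prop(self, image):
        self.original_shape = image.shape       # kept so gradients could be reshaped back
        self.flattened_input = image.flatten()  # e.g. (13, 13, 32) -> (5408,)
        logits = self.flattened_input @ self.weight + self.bias
        # Subtracting the max leaves the softmax unchanged but avoids overflow in exp.
        exp = np.exp(logits - np.max(logits))
        return exp / np.sum(exp)

softmax = SoftmaxLayer(13 * 13 * 32, 10)
probs = softmax.forward_prop(np.random.rand(13, 13, 32))
print(probs.shape, probs.sum())  # (10,) and a sum very close to 1.0
```

The predicted digit is then simply the index of the largest probability, np.argmax(probs).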

Conclusions

You can clone the GitHub repository containing the code and experiment with the main.py script. The network, of course, doesn't achieve state-of-the-art performance, but it reaches 96% accuracy after a few epochs.

References

[1]: Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow, 2nd Edition, Aurélien Géron

[2]: Deep Learning 54: CNN_6 — Implementation of CNN from Scratch in Python

[3]: Convolutional Neural Networks, DeepLearning.AI

Building a Convolutional Neural Network from Scratch using Numpy was originally published in Towards Data Science on Medium.
