Deploy Ingress Controller on AWS EKS the right way.

Photo by Andrea Zanenga on Unsplash

The feature image highlights the significance of orchestration at scale, which is the promise of Kubernetes and one of the many reasons to adopt it!

Prerequisites

The guidance in this article assumes considerable knowledge of Kubernetes, Helm, and AWS cloud services. Also, keep in mind that the approach presented here is how I set up a Kubernetes cluster for my company. You may deviate from it at any point to suit your use case or preference.

With those out of the way, let’s jump right into it.

So, What Exactly is an Ingress Controller?

Like other “controllers” inside Kubernetes, which are responsible for bringing the current state to the desired state, the Ingress Controller is responsible for creating an entry point into the cluster. That entry point lets traffic be routed to the Services defined in your Ingress rules.
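The reconciliation idea can be made concrete with a minimal Python sketch of a single reconcile pass. For illustration only, it assumes that routing rules can be modeled as `(host, path, service)` tuples. `Rule` and `reconcile` are hypothetical names. A real controller watches the Kubernetes API and applies the diff through the cloud provider's API rather than returning it.

```python
from __future__ import annotations

from typing import NamedTuple


class Rule(NamedTuple):
    """Hypothetical, simplified routing rule: host + path prefix -> Service name."""
    host: str
    path: str
    service: str


def reconcile(desired: set[Rule], current: set[Rule]) -> tuple[list[Rule], list[Rule]]:
    """One reconcile pass: compute what to create and what to delete so that
    the current state converges to the desired state."""
    to_create = sorted(desired - current)
    to_delete = sorted(current - desired)
    return to_create, to_delete


# Hypothetical desired vs. current rules.
desired = {Rule("example.com", "/", "web"), Rule("example.com", "/api", "api")}
current = {Rule("example.com", "/", "web"), Rule("old.example.com", "/", "legacy")}
to_create, to_delete = reconcile(desired, current)
print(to_create)  # [Rule(host='example.com', path='/api', service='api')]
print(to_delete)  # [Rule(host='old.example.com', path='/', service='legacy')]
```

Running the pass again once the diff has been applied yields two empty lists. For a controller, that is what "desired state reached" means.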

Simply put, if you send network traffic to port 80 or 443, the Ingress Controller will determine the destination of that traffic based on the Ingress rules.

You must have an Ingress controller to satisfy an Ingress. Creating an Ingress resource on its own has no effect [source].

To give a practical example, suppose you define an Ingress rule that routes all traffic arriving for the example.com host with a path prefix of /. This rule has no effect unless you have an Ingress Controller that can determine the traffic’s destination and route it according to that rule.
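The matching step can be sketched in a few lines of Python. This is a simplified model with two assumptions:

- Hosts are matched exactly; wildcard hosts are not handled.
- Paths use the Kubernetes `Prefix` path type, which matches element by element on `/`-separated segments and lets the longest matching prefix win.

`route` and its tuple-based rules are hypothetical, not any controller's API.

```python
from __future__ import annotations

from typing import Optional


def path_elements(path: str) -> list[str]:
    """Split a URL path into its '/'-separated elements: '/foo/bar/' -> ['foo', 'bar']."""
    return [part for part in path.split("/") if part]


def prefix_matches(prefix: str, request_path: str) -> bool:
    """'Prefix' semantics: '/api' matches '/api' and '/api/users', but not '/apix'."""
    wanted = path_elements(prefix)
    return path_elements(request_path)[: len(wanted)] == wanted


def route(rules: list[tuple[str, str, str]], host: str, path: str) -> Optional[str]:
    """Return the Service for (host, path), preferring the longest matching prefix."""
    matches = [
        (len(path_elements(prefix)), service)
        for rule_host, prefix, service in rules
        if rule_host == host and prefix_matches(prefix, path)
    ]
    if not matches:
        return None  # no rule matched: a controller would use a default backend or a 404
    return max(matches, key=lambda match: match[0])[1]


# Hypothetical rules: the example.com "/" rule plus a more specific "/api" rule.
rules = [("example.com", "/", "web"), ("example.com", "/api", "api")]
print(route(rules, "example.com", "/api/users"))  # api
print(route(rules, "example.com", "/apix"))       # web
print(route(rules, "other.org", "/"))             # None
```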

Now, let’s see how Ingress Controllers work outside AWS.

How Do Other Ingress Controllers Work?

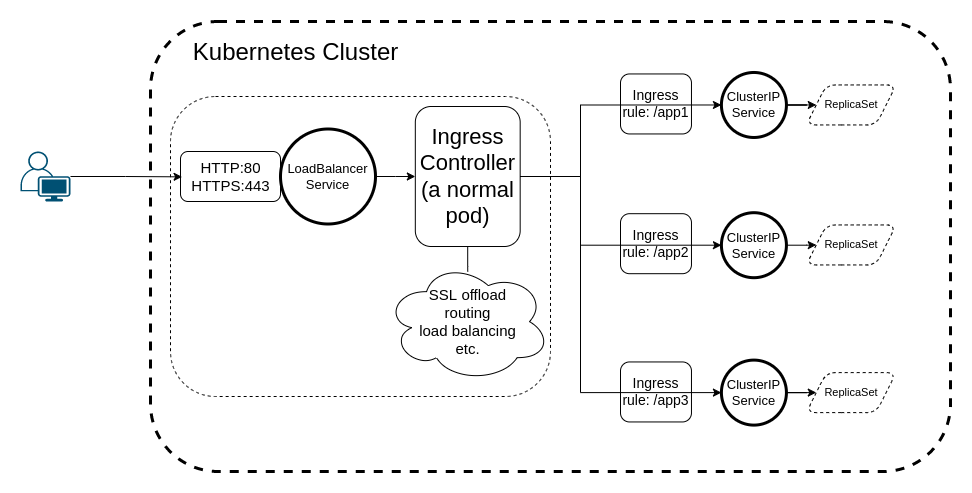

All the other Ingress Controllers I’m aware of work this way: you expose the controller as a LoadBalancer Service, which becomes the frontier responsible for handling traffic (SSL offloading, routing, etc.).

As a result, once the LoadBalancer Service is created, you’ll have the following in your cluster:

Here’s a command to verify that your LoadBalancer Service is in place.
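One way to run such a check, assuming you have `kubectl` access to the cluster, is to dump every Service with `kubectl get svc -A -o json` and filter for type LoadBalancer. The Python helper below is a hypothetical sketch of that filter. It relies only on the standard `spec.type` and `status.loadBalancer.ingress` fields of a Service.

```python
from __future__ import annotations

import json


def load_balancer_services(service_list: dict) -> list[tuple[str, str, list[str]]]:
    """From `kubectl get svc -A -o json` output, return (namespace, name, addresses)
    for every Service of type LoadBalancer."""
    found = []
    for item in service_list.get("items", []):
        if item.get("spec", {}).get("type") != "LoadBalancer":
            continue
        meta = item.get("metadata", {})
        # On AWS the address is usually a DNS name (hostname) rather than an IP.
        entries = item.get("status", {}).get("loadBalancer", {}).get("ingress", [])
        addresses = [entry.get("hostname") or entry.get("ip", "") for entry in entries]
        found.append((meta.get("namespace", "default"), meta.get("name", ""), addresses))
    return found


# Hypothetical, trimmed-down sample of what kubectl returns.
sample = json.loads("""
{"items": [
  {"metadata": {"namespace": "ingress-nginx", "name": "ingress-nginx-controller"},
   "spec": {"type": "LoadBalancer"},
   "status": {"loadBalancer": {"ingress": [{"hostname": "example-123.us-east-1.elb.amazonaws.com"}]}}},
  {"metadata": {"namespace": "default", "name": "kubernetes"},
   "spec": {"type": "ClusterIP"}, "status": {"loadBalancer": {}}}
]}
""")
for namespace, name, addresses in load_balancer_services(sample):
    print(f"{namespace}/{name}: {', '.join(addresses) or '<pending>'}")
```

To use it on a real cluster, save the `kubectl get svc -A -o json` output to a file and pass the parsed JSON to `load_balancer_services`. An empty address list (`<pending>`) means the cloud load balancer has not been provisioned yet.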

What’s Wrong With This Approach?

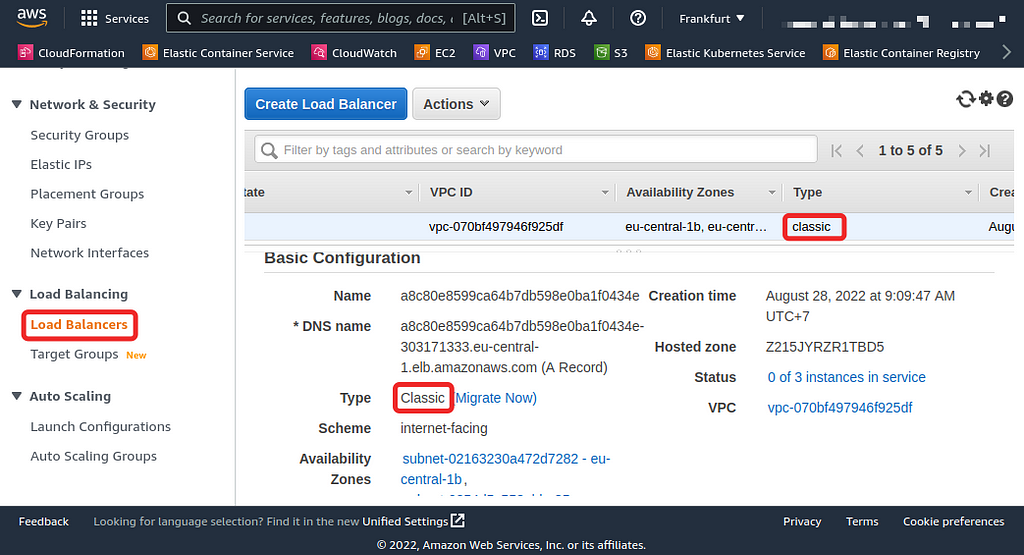

The LoadBalancer Service might be ideal on other cloud providers, but not on AWS, for one key reason. Create that Service and head over to the Load Balancers tab of your EC2 Console: you’ll see that the load balancer is of type “classic” (AWS calls this the “legacy” load balancer). That type calls for immediate migration, for the reason described below.

AWS treats the Classic Load Balancer as a previous-generation product, and the retirement of EC2-Classic networking on August 15, 2022 already forced many users to migrate away from it. So you should not be using it on the AWS platform. Therefore, never expose a Service as a LoadBalancer, because by default any Service of that type results in a “classic” load balancer. AWS recommends one of the newer load balancer types instead (Application, Network, or Gateway).

Avoiding a “classic” load balancer is the main reason we’re going for the alternative solution supported by AWS, which still lets us keep our workloads as regular Kubernetes resources.

So What is the Alternative?

I was shocked when I first noticed that, unlike other Ingress controllers (HAProxy, Nginx, etc.), the AWS Load Balancer Controller is not exposed through a LoadBalancer Service inside the Kubernetes cluster. After all, Ingress controllers are one of the essential topics on the CKA exam [source].

But just because AWS offers poor support for other Ingress controllers doesn’t mean you have to give up Kubernetes’ rich features, jump right into vendor-specific products, and get locked in to a single vendor. At least, I know I don’t want to.

My personal preference is a managed Kubernetes control plane with my own self-managed nodes, but of course, you’re free to choose your own design here!

The alternative here is to use the AWS Load Balancer Controller as your Ingress controller. It lets you keep the same workloads and resources you would have if you ran Kubernetes elsewhere, with only a small configuration difference, explained below.

But How Does the Ingress Controller Work in AWS’s World?

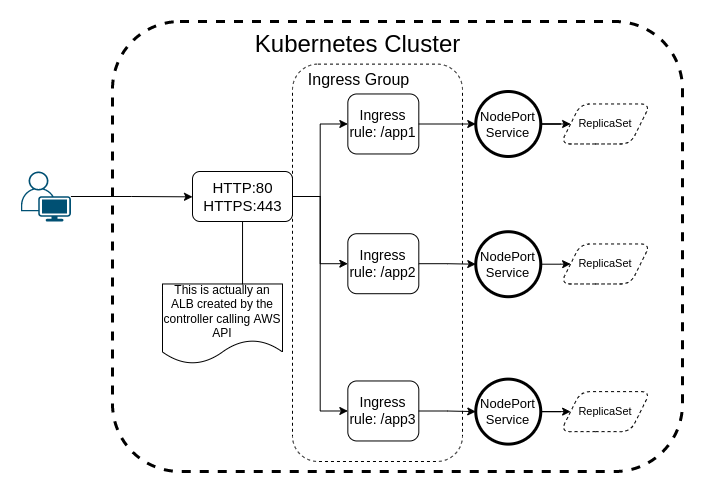

The Ingress controller works inside AWS EKS by “grouping” Ingress resources under a single name, making them accessible and routable through a single AWS Application Load Balancer (ALB). You can specify the “grouping” either through IngressClassParams or through an annotation on each Ingress you create in your cluster. I don’t know about you, but I prefer the former!

The “Ingress Group” is not a tangible object, nor does it have any fancy features. It’s just a set of Ingress resources annotated the same way. As mentioned before, you can skip annotating each Ingress by setting the group name in the IngressClassParams referenced by your IngressClass. These Ingress resources share a group name and can be treated as a unit, sharing the same ALB as the boundary for managing traffic, routing, etc.
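The IngressClass route can be illustrated with a hedged Python sketch. It builds two objects:

- an `IngressClassParams` object (API group `elbv2.k8s.aws`, a CRD shipped with the AWS Load Balancer Controller), whose `spec.group.name` puts every Ingress of that class into one group;
- the `IngressClass` that references it through `spec.parameters`.

The sketch emits JSON, which `kubectl apply -f -` accepts just like YAML. The class name `alb`, the group name `my-team`, and the `internet-facing` scheme are assumptions made for the example.

```python
import json


def alb_ingress_class(class_name: str, group_name: str, scheme: str = "internet-facing") -> list:
    """Build an IngressClassParams plus the IngressClass that references it, so every
    Ingress using `class_name` joins the ALB IngressGroup `group_name`."""
    params = {
        "apiVersion": "elbv2.k8s.aws/v1beta1",
        "kind": "IngressClassParams",  # cluster-scoped CRD installed with the controller
        "metadata": {"name": class_name},
        "spec": {"scheme": scheme, "group": {"name": group_name}},
    }
    ingress_class = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "IngressClass",
        "metadata": {"name": class_name},
        "spec": {
            "controller": "ingress.k8s.aws/alb",
            "parameters": {
                "apiGroup": "elbv2.k8s.aws",
                "kind": "IngressClassParams",
                "name": class_name,
            },
        },
    }
    return [params, ingress_class]


# "alb" and "my-team" are hypothetical names; a v1 List bundles both objects in one document.
manifest = {"apiVersion": "v1", "kind": "List", "items": alb_ingress_class("alb", "my-team")}
print(json.dumps(manifest, indent=2))  # pipe into `kubectl apply -f -`
```

With these objects in place, an Ingress only needs `spec.ingressClassName: alb` to join the `my-team` group. No per-Ingress group annotation is required.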

This grouping is the difference between Ingress resources inside and outside AWS. Elsewhere in the Kubernetes world, you don’t have to deal with groupings at all, but when you’re using AWS EKS, this approach is the way to go!
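The Python sketch below is a simplified model of that bookkeeping, not the controller's actual code. Every distinct group name maps to one ALB. The sketch assumes that a group set on the IngressClass (through IngressClassParams) takes precedence over the `alb.ingress.kubernetes.io/group.name` annotation. An Ingress with neither forms an implicit group of its own and therefore gets a dedicated ALB. The `implicit:` labels and all resource names are made up for readability.

```python
from __future__ import annotations

from collections import defaultdict

GROUP_ANNOTATION = "alb.ingress.kubernetes.io/group.name"


def albs_by_group(ingresses: list[dict], class_groups: dict[str, str]) -> dict[str, list[str]]:
    """Simplified model: map each IngressGroup (one ALB each) to its Ingresses.

    `class_groups` maps an IngressClass name to the group set in its
    IngressClassParams. An Ingress with no group at all ends up alone in an
    implicit group, i.e. on an ALB of its own.
    """
    groups: dict[str, list[str]] = defaultdict(list)
    for ingress in ingresses:
        meta = ingress["metadata"]
        ref = f"{meta.get('namespace', 'default')}/{meta['name']}"
        class_name = ingress.get("spec", {}).get("ingressClassName", "")
        # Assumed precedence: class-level group first, then the per-Ingress annotation.
        group = class_groups.get(class_name) or meta.get("annotations", {}).get(GROUP_ANNOTATION)
        groups[group or f"implicit:{ref}"].append(ref)
    return dict(groups)


# Hypothetical Ingresses: two share a class-level group, one is annotated, one has no group.
ingresses = [
    {"metadata": {"namespace": "shop", "name": "web"}, "spec": {"ingressClassName": "alb"}},
    {"metadata": {"namespace": "shop", "name": "api"}, "spec": {"ingressClassName": "alb"}},
    {"metadata": {"namespace": "cms", "name": "blog", "annotations": {GROUP_ANNOTATION: "content"}},
     "spec": {"ingressClassName": "plain-alb"}},
    {"metadata": {"namespace": "ops", "name": "admin"}, "spec": {"ingressClassName": "plain-alb"}},
]
for group, members in albs_by_group(ingresses, {"alb": "my-team"}).items():
    print(f"ALB for {group}: {members}")
```

In this example, four Ingresses end up on three ALBs: one shared by `shop/web` and `shop/api`, one for the annotated `cms/blog`, and one for the ungrouped `ops/admin`.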

I’m not uncomfortable implementing this and managing the configuration, especially since Nginx, HAProxy, and other Ingress controllers all have their own sets of annotations for tuning and configuration. For example, to rewrite the path in the Nginx Ingress Controller, you’d use the nginx.ingress.kubernetes.io/rewrite-target annotation [source].

One last important note: with the default instance target type, your Services are no longer ClusterIPs but NodePorts. A NodePort Service is published on a port of every worker node in the cluster; if the Pod is not on that node, iptables redirects the traffic [source]. You will be responsible for managing Security Groups to ensure nodes can communicate on the ephemeral port range [source]. But if you use eksctl to create your cluster, it will take care of all this and much more.

Now, let’s deploy the controller so that we can create some Ingress resources.

What Are the Steps to Deploy the AWS Ingress Controller?

You first need to install the controller in the cluster for any of your Ingress resources to work.

This “controller” is the guy responsible for talking to AWS’s API to create your Target Groups.

1. To install the controller, you can use Helm or apply the manifests directly with kubectl.

NOTE: It’s always a best practice to lock your installations to a specific version and upgrade after test & QA.

2. The second step is installing the controller itself, which is also doable with Helm. I’m providing my custom values, so you can see both the installation command and the values file below.
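The Helm side can be sketched in Python, assuming the `eks` chart repository from https://aws.github.io/eks-charts has already been added. The sketch assembles a `helm upgrade --install` command for the `eks/aws-load-balancer-controller` chart. In the spirit of the note about locking versions, it refuses to build the command without an explicit `--version`. `clusterName` is the chart value that tells the controller which cluster it manages. The cluster name, chart version, and values file are placeholders.

```python
from __future__ import annotations

import shlex
from typing import Optional


def helm_install_args(cluster_name: str, chart_version: str,
                      values_file: Optional[str] = None) -> list[str]:
    """Build a pinned `helm upgrade --install` command for the AWS Load Balancer Controller.

    Assumes the chart repo was added with:
        helm repo add eks https://aws.github.io/eks-charts
    """
    if not chart_version:
        # Lock installs to a specific, tested chart version.
        raise ValueError("chart_version must be pinned explicitly")
    args = [
        "helm", "upgrade", "--install", "aws-load-balancer-controller",
        "eks/aws-load-balancer-controller",
        "--namespace", "kube-system",
        "--version", chart_version,
        "--set", f"clusterName={cluster_name}",
    ]
    if values_file:
        args += ["--values", values_file]
    return args


# Placeholders: replace the cluster name, chart version, and values file with your own.
print(shlex.join(helm_install_args("my-cluster", "1.4.1", "values.yaml")))
```

The argument list can be passed straight to `subprocess.run(args, check=True)`. Because `upgrade --install` installs the release when it is missing and upgrades it otherwise, re-running it with a bumped `--version` performs the upgrade once testing is done.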

Now that we have the controller installation out of the way, let’s create an Ingress resource that will receive traffic from the World Wide Web!

NOTE: Installing the controller creates neither a LoadBalancer Service nor an AWS load balancer of any kind. The load balancer is only created when the first Ingress resource is created.

Talk is Cheap, Show me the Code!

Alright, alright! Once all the above steps are done, here is the complete Nginx stack.
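Below is a minimal Python sketch of a comparable stack. It assumes an `IngressClass` named `alb` already exists and uses `example.com` as a placeholder host; the image tag, replica count, and health-check path are arbitrary choices. It builds a Deployment, a NodePort Service, and an Ingress carrying AWS Load Balancer Controller annotations, then prints them as a JSON `List` that `kubectl apply -f -` accepts.

```python
import json


def nginx_stack(host: str, ingress_class: str = "alb", namespace: str = "default") -> dict:
    """Build a hypothetical Nginx Deployment, NodePort Service, and ALB-backed Ingress as a v1 List."""
    labels = {"app": "nginx"}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "nginx", "namespace": namespace},
        "spec": {
            "replicas": 2,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [
                    {"name": "nginx", "image": "nginx:1.23", "ports": [{"containerPort": 80}]},
                ]},
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "nginx", "namespace": namespace},
        # NodePort, because the Ingress below uses target-type "instance".
        "spec": {"type": "NodePort", "selector": labels, "ports": [{"port": 80, "targetPort": 80}]},
    }
    ingress = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": "nginx",
            "namespace": namespace,
            "annotations": {
                # Without a scheme here (or in IngressClassParams), the ALB is internal.
                "alb.ingress.kubernetes.io/scheme": "internet-facing",
                "alb.ingress.kubernetes.io/target-type": "instance",
                "alb.ingress.kubernetes.io/healthcheck-path": "/",
            },
        },
        "spec": {
            "ingressClassName": ingress_class,
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": "nginx", "port": {"number": 80}}},
                }]},
            }],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service, ingress]}


# Placeholder host; pipe the output into `kubectl apply -f -`.
print(json.dumps(nginx_stack("example.com"), indent=2))
```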

A couple of notes are worth mentioning here.

- If your target-type is instance, your Service must be of type NodePort, and the load balancer sends the traffic to the NodePort exposed on each instance. If you’d rather send the traffic directly to your Pods (target-type ip), your VPC CNI must support that [source].

- The AWS Load Balancer Controller uses the annotations you see on the Ingress resource to set up the Target Groups, listener rules, certificates, etc. What you can specify includes, but is not limited to, the health checks (performed by the Target Groups) and the priority of the load balancer listener rule. Read the reference for a complete overview of what is possible.

- The controller looks up certificates in ACM for the hosts listed under the tls section, so all you need to do is ensure those certificates are present and not expired.
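The first and last notes lend themselves to a pre-flight check. Below is a hedged Python sketch, not part of any AWS tooling, with two checks:

- `non_nodeport_backends` flags backend Services that are not NodePort when the target type is `instance` (the controller's default).
- `uncovered_tls_hosts` reports TLS hosts with no unexpired certificate. It uses ACM-style wildcard matching, where `*.example.com` covers exactly one extra label.

The certificate inventory is passed in as plain `(domains, not_after)` pairs; fetching it from ACM is left out.

```python
from __future__ import annotations

from datetime import datetime, timezone

TARGET_TYPE = "alb.ingress.kubernetes.io/target-type"


def backend_services(ingress: dict) -> set[str]:
    """Names of all Services referenced by the Ingress rules."""
    return {
        path["backend"]["service"]["name"]
        for rule in ingress["spec"].get("rules", [])
        for path in rule.get("http", {}).get("paths", [])
        if "service" in path.get("backend", {})
    }


def non_nodeport_backends(ingress: dict, service_types: dict[str, str]) -> list[str]:
    """With target-type 'instance' (the default), every backend Service must be a NodePort."""
    annotations = ingress["metadata"].get("annotations", {})
    if annotations.get(TARGET_TYPE, "instance") != "instance":
        return []  # target-type 'ip' sends traffic straight to Pod IPs
    return sorted(name for name in backend_services(ingress)
                  if service_types.get(name, "ClusterIP") != "NodePort")


def covers(cert_domain: str, host: str) -> bool:
    """ACM-style match: '*.example.com' covers 'a.example.com', not 'example.com' or 'a.b.example.com'."""
    cert_domain, host = cert_domain.lower(), host.lower()
    if cert_domain.startswith("*."):
        suffix = cert_domain[1:]  # e.g. '.example.com'
        label = host[: -len(suffix)] if host.endswith(suffix) else ""
        return bool(label) and "." not in label
    return cert_domain == host


def uncovered_tls_hosts(ingress: dict, certs: list[tuple[list[str], datetime]],
                        now: datetime) -> list[str]:
    """TLS hosts for which no certificate in `certs` is both matching and unexpired."""
    hosts = [host for tls in ingress["spec"].get("tls", []) for host in tls.get("hosts", [])]
    return [host for host in hosts
            if not any(not_after > now and any(covers(d, host) for d in domains)
                       for domains, not_after in certs)]


# Hypothetical Ingress and certificate inventory.
ingress = {
    "metadata": {"name": "web"},
    "spec": {
        "tls": [{"hosts": ["shop.example.com", "example.org"]}],
        "rules": [{"host": "shop.example.com", "http": {"paths": [{
            "path": "/", "pathType": "Prefix",
            "backend": {"service": {"name": "web", "port": {"number": 80}}}}]}}],
    },
}
certs = [
    (["*.example.com"], datetime(2030, 1, 1, tzinfo=timezone.utc)),
    (["example.org"], datetime(2020, 1, 1, tzinfo=timezone.utc)),  # already expired
]
now = datetime(2023, 1, 1, tzinfo=timezone.utc)
print(non_nodeport_backends(ingress, {"web": "ClusterIP"}))  # ['web']
print(uncovered_tls_hosts(ingress, certs, now))             # ['example.org']
```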

Applying the above definition, you’d get the following in your cluster:

Conclusion

Managing Kubernetes on a cloud platform such as AWS has its challenges. I believe they have a good marketing reason for imposing such challenges. They want you to buy as many of their products as possible, and a cloud-agnostic tool such as Kubernetes doesn’t help that cause much!

In this article, I explained how to set up the AWS Ingress Controller so that you can create Ingress resources inside AWS EKS.

As a final suggestion, if you’re going with AWS EKS for your managed Kubernetes cluster, I highly recommend trying it with eksctl since it makes your life a lot easier.

Have an excellent rest of the day, stay tuned and take care!

References

- https://kubernetes.io/docs

- https://helm.sh

- https://aws.amazon.com/eks/

- https://eksctl.io

- https://github.com/cncf/curriculum

- https://kubernetes-sigs.github.io/aws-load-balancer-controller/v2.4/

- https://github.com/aws/eks-charts

- https://artifacthub.io

If you liked this article, check out my other articles, which you might also find helpful.

- What Is iptables and How to Use It?

- Stop Writing Mediocre Docker-Compose Files

- Clean Architecture Simplified

- Tmux: A Beginner’s Guide

How to Set Up Ingress Controller in AWS EKS was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
