When data science meets production engineering

Think back to a time when you were asked to turn your data science analysis into a repeatable, supported report or dashboard that fits in with your company’s production data pipelines and data architecture. Perhaps you previously conducted prototype analysis and the solution was informative and working well, so your boss asked you to put it into production. Perhaps you found yourself constantly re-extracting data for the same type of analysis, training, and testing a new model, and you wanted to automate the whole process to free up precious time.

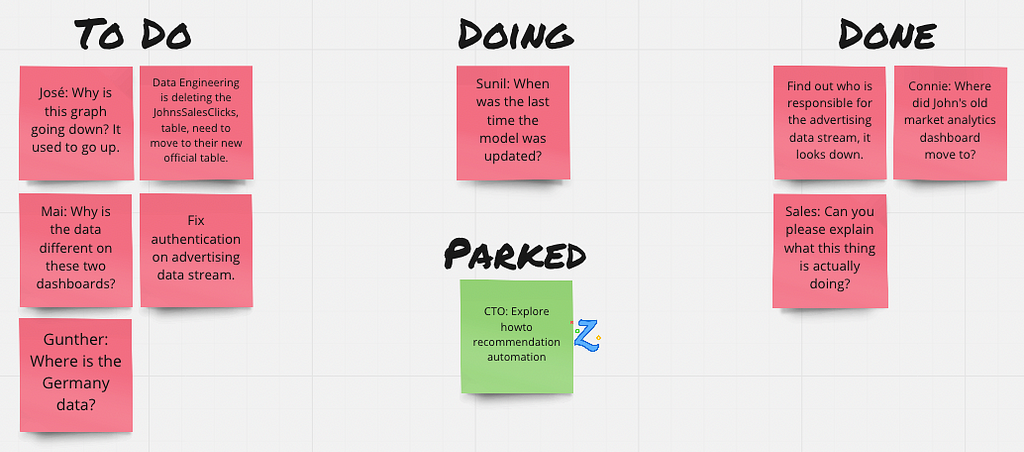

Alternatively, you may be experiencing issues with your production data products. Perhaps you have developed data science-supported dashboards and put them into production, but users are getting frustrated with them and losing trust. Perhaps you find yourself spending so much time supporting your existing production dashboards and reports that you cannot work on newer, more interesting projects.

Moving data products into production is an art in and of itself. Done well, automation can increase the accuracy, credibility, and velocity of the data science team’s work. Done poorly, automation can lead to the compounding of errors, which can spread frustration and distrust.

Many initial data science analyses start from scratch and process all of the data through a hand-crafted pipeline. Because they start with a bespoke data process, the data scientist can later become personally bogged down in managing the pipeline. They spend extra time examining their pipelines to detect emerging problems, fixing them in a timely manner, and doing what is necessary to win back the consumers of the product once trust has been lost.

When putting a dashboard into production, you are looking not only at what the data, reporting, and users are like, but also how the data and user base will change in the future. A report may be clear and accurate to current users now, especially since you are around to answer any questions they may have. However, data drift may cause inaccuracies in the report in the future. A manager you do not normally interact with may come across your report, not clearly understand it, and not know where to go to get help to understand it. Thus, both the quality of the data product and the support for using and interpreting it degrade over time.

Over the years, I have worked both as a lead data architect, designing the pipelines which feed into the data processes, and as a lead data scientist, leveraging the engineering data to develop models used in production processes and visualizations.

This article codifies some of the lessons I have learned along the way. I describe four desirable properties for a production data pipeline: self-explanatory, trustworthy, adaptable, and reliable. For the data pipeline to function well, you need to provide scaffolding at three different data processing phases: the data you are using as input (the first mile), the way you present results to the user (the last mile), and the functioning and execution of the data processing and model development (everything in between). Considered together, these two axes provide 12 different touch points, each of which has one or more important considerations to address. Here are the concerns I address at each of these touch points before I release my production reports, dashboards, and models into the wild.

Three Pipeline Phases

We say that a chain is only as strong as its weakest link. This is also true of data pipelines. Your data product is only as strong as the weakest part of the data, pipeline, and processes that are used to form it. Yet, in the case of a data product, many of these ‘links’ are not under your control.

How do you build the strongest product possible? You pay attention to the most important things to strengthen at each of the three phases of your particular part of the pipeline — input, output and execution.

Your first mile: Are you starting with the right stuff? The concerns in this phase focus on the input data that you are putting into your pipeline or model. Your ability to support your users depends on the quality of the input data you are starting with. Failures and degradation in the input data are often out of your control, but have a serious impact on how well your data product is received and accepted.

Your last mile: Are you delivering understanding, trust and confidence along with your data product? You may have an awesome data product that really meets a need, yet all of this is moot unless the user understands the report and has confidence in the freshness and accuracy of its underlying data. The concerns in the last mile focus on building and maintaining this trust, not only with your current report viewers but also with anyone who may view the report in the future.

The journey between: Are you making things easy for you, future you, and your future replacement? A good data product will live on over a long period of time. Longevity and maintainability considerations focus on your own specific extracts, transformations, and load processes. Data pipelines go down, can be degraded due to scheduling inefficiencies and can develop scale issues. Data inputs change or go away. Over time, you may see data drift, or model drift in the models produced using your data.

Supporting a production process can be time-consuming, and you want to facilitate the maintenance process for yourself, others working with you who may share that load, and anyone who may replace you.

Four Important Criteria

The second axis comprises the different quality criteria — the ones that make your product stronger. I look at four criteria to evaluate how resilient my data product is to future data issues and user challenges. Is the product self-explanatory? Does it inspire trust and confidence? Is it easy to adapt as data inputs change? Is it reliable, accurate, and maintained? Careful attention to these when developing your production pipeline helps reduce the amount of time necessary for future maintenance tasks.

Self-Explanatory: As soon as you put your data product into production, people who you don’t interact with directly may be looking at it. The more self-explanatory the data product is, the easier it will be to support those future users. You want to be out of the loop as much as possible while still providing good support.

Over time, either you or someone else will need to do a certain amount of monitoring and maintenance to ensure that the data product stays updated and accurate. Keeping the mental load low for picking the report back up, whether you are learning how it works or reminding yourself, makes this kind of maintenance easier.

Trustworthy: Trust is hard to gain and easy to lose. Usually, when you first deliver a data product, there is a lot of hope and some trust. For a report or dashboard, trust really develops as the user gains a longer-term sense that the report or dashboard is:

- answering their questions

- using accurate data

- being kept up-to-date

- providing a reliable way to resolve issues or potential problems they have with what they see

If the user starts to doubt any of these things, trust starts to erode and the report no longer is as useful as it was at the beginning. Repeated or overlapping concerns mean that the product eventually drops off their radar, and the user moves to trying to answer their questions using a different method.

Adaptable: Data inputs and shapes change over time. Schemas change. The distribution of key values changes. Places stop reporting data. Data sets can grow and scale beyond what your data processing and queries can effectively handle. All of these impact the ability of an automated process to deliver an accurate and timely product.

As a data scientist, you do not want to spend your time looking through each of your products frequently and in detail to ensure that you notice all glitches in the data and all places where things may not look right. Rather, it is better to delegate the monitoring task to an automated system, and do a detailed deep dive into each report on a slower cadence. Things you can monitor for automatically include:

- relevant changes to the schema for one or more input data streams

- relevant changes to the shapes of the data in key fields that you are using

- significant increases or decreases in data volume

- significant lag between the most recent record used and the current time

- concerning trends in data volume or lag
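
The first four checks in the list above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a definitive implementation: the function name `check_input_batch`, the representation of a schema as a plain `dict` of column name to type name, and the default thresholds (a 50% volume tolerance and a 24-hour lag limit) are all hypothetical choices made for the example.

```python
from datetime import datetime, timedelta, timezone


def check_input_batch(expected_schema, actual_schema, row_count, baseline_rows,
                      newest_record_time, now,
                      volume_tolerance=0.5, max_lag=timedelta(hours=24)):
    """Return a list of warnings for one input data stream (hypothetical helper)."""
    warnings = []

    # Schema: columns that disappeared or changed type.
    for column, dtype in expected_schema.items():
        if column not in actual_schema:
            warnings.append(f"schema: column '{column}' is missing")
        elif actual_schema[column] != dtype:
            warnings.append(
                f"schema: column '{column}' changed type "
                f"{dtype} -> {actual_schema[column]}")

    # Schema: columns that appeared since the expectation was recorded.
    for column in sorted(actual_schema.keys() - expected_schema.keys()):
        warnings.append(f"schema: unexpected new column '{column}'")

    # Volume: relative change against a baseline row count (assumed threshold).
    if baseline_rows > 0:
        change = (row_count - baseline_rows) / baseline_rows
        if abs(change) > volume_tolerance:
            warnings.append(f"volume: row count changed by {change:+.0%}")

    # Lag: time between the most recent record and the current time.
    lag = now - newest_record_time
    if lag > max_lag:
        warnings.append(f"freshness: newest record is {lag} old")

    return warnings


if __name__ == "__main__":
    now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
    for warning in check_input_batch(
            expected_schema={"id": "int", "amount": "float"},
            actual_schema={"id": "int", "amount": "str"},
            row_count=400, baseline_rows=1000,
            newest_record_time=now - timedelta(hours=30), now=now):
        print(warning)
```

Returning a list of warnings rather than raising on the first problem lets a scheduler forward every issue to whatever alerting channel you already use. Tracking trends in volume or lag would need a history of these measurements, which this sketch does not keep.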

In addition to monitoring relevant issues, when someone notices a problem, you need to be set up to react and formulate a solution without undue complications. Knowing exactly where to look is the first step, but setting up your data processes in a way that is easy to adapt and maintain is also key to success.

When you are designing and building your pipeline, build it with your future self (or possibly your replacement) in mind. Automate the process of periodically retraining your models to take into account changes in the data. Have a cadence for looking over your data and models to ensure they still conform to the assumptions you made when you set up the initial process.

Reliable: Reliability is all about how well the execution of your pipelines and models is working. In this context, pipeline reliability relates to process execution and timing in the pipeline. This contrasts with adaptability (above), which is related to the actual data being processed.

To ensure reliability, you need to ensure that the different parts that make up your pipeline are reliable and the processes that orchestrate those parts are robust.

When the data and model-building pipelines are automated, you have no immediate and centralized feedback on what is executing, whether any issues were encountered, or even what the resulting data product looks like. To understand the reliability of the different parts or stages, it helps to have a monitoring system that centralizes this information and alerts you to things you need to pay attention to.

To monitor the entire process, monitor the reliability of each processing stage. Monitoring a stage includes checking that:

- its input data was updated in a timely manner

- the stage started to execute at the expected time

- the stage completed successfully

- the stage completed before any stages downstream needed to read its results
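
The four stage-level checks above map naturally onto one record per stage run. The sketch below assumes your orchestrator can supply the timestamps involved; the `StageRun` fields, the `check_stage` function, and the default tolerances are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class StageRun:
    """Timing facts about one execution of one pipeline stage (assumed shape)."""
    name: str
    input_updated_at: datetime
    scheduled_start: datetime
    started_at: Optional[datetime]
    finished_at: Optional[datetime]
    succeeded: bool
    downstream_reads_at: datetime


def check_stage(run: StageRun,
                max_input_age: timedelta = timedelta(hours=24),
                start_grace: timedelta = timedelta(minutes=15)) -> List[str]:
    """Return the reliability checks that this run failed."""
    problems = []

    # 1. Input data was updated in a timely manner.
    if run.scheduled_start - run.input_updated_at > max_input_age:
        problems.append("input data is stale")

    # 2. The stage started to execute at the expected time.
    if run.started_at is None or run.started_at - run.scheduled_start > start_grace:
        problems.append("stage did not start on time")

    # 3. The stage completed successfully.
    if not run.succeeded or run.finished_at is None:
        problems.append("stage did not complete successfully")
    # 4. It completed before downstream stages needed to read its results.
    elif run.finished_at > run.downstream_reads_at:
        problems.append("stage finished after downstream stages needed its output")

    return problems
```

Running `check_stage` over every stage after each scheduled cycle, and collecting the non-empty results in one place, gives the kind of centralized view described above.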

Putting Things Together

When shaping my initial system for production quality, I ask specific questions at each phase of the pipeline, focused on the aforementioned concerns. Working through these questions systematically, I am able to address quality concerns at all 12 of the touch points.

Self-Explanatory / First Mile:

- Is the data I am using well-documented?

- Am I using the data in line with its intended use?

- Am I reusing as much of the existing engineering pipeline as possible to lower overall maintenance effort and ensure that my use is consistent with other uses of the same data items?

Self-Explanatory / Last Mile:

- Is the report or dashboard presented in a way that is easily accessible and understandable, even to people who view it without any explanation from me?

- Am I using vocabulary and visualizations that the end user understands, and that they understand in exactly the same way I do?

- Am I providing good supporting documentation and information in a way that is intuitive and easily accessible from the data product itself?

Self-Explanatory / In Between:

- Are the requirements, constraints and implementation of the data process documented well enough that someone else who may be taking over maintenance from me can understand it?

Trustworthy / First Mile:

- Am I connected into the input data in a way that is well-supported in the production pipeline?

- Do I have explicit buy-in from those maintaining the data sets I am using?

- Is this input data likely to be maintained for a significant time in the future?

- When might I need to check back to be sure the data is still being maintained?

- How do I report any problems that I see in the input data?

- Who is responsible for notifying me of issues with the data?

- Who is responsible for fixing those issues?

Trustworthy / Last Mile:

- How does the user know when they can trust the report is accurate and up to date?

- Is there an efficient and/or automated way of communicating possible problems to the end user?

- Is there a clear and accessible process in place for the user to report concerns with the data or report, and for the user to be notified of any remediation processes in place?
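
One lightweight way to address the first two questions is to stamp the report with a freshness message generated at render time. The sketch below is only an illustration: the function name `freshness_banner`, the wording of the messages, and the 24-hour expected refresh interval are assumptions, and how the text is displayed depends on your dashboard tool.

```python
from datetime import datetime, timedelta


def freshness_banner(last_refresh, now, expected_interval=timedelta(hours=24)):
    """Build a short status line to show at the top of a report (hypothetical helper)."""
    age = now - last_refresh
    stamp = last_refresh.strftime("%Y-%m-%d %H:%M")

    if age <= expected_interval:
        return f"Data current as of {stamp}."

    # Whole hours past the expected refresh time.
    hours_late = int((age - expected_interval).total_seconds() // 3600)
    return (f"Warning: data last refreshed {stamp}, "
            f"about {hours_late} hour(s) overdue. Figures may be out of date.")


if __name__ == "__main__":
    now = datetime(2024, 1, 3, 12, 0)
    print(freshness_banner(datetime(2024, 1, 3, 6, 0), now))
    print(freshness_banner(datetime(2024, 1, 1, 12, 0), now))
```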

Trustworthy / In Between:

- Have I set up a regular schedule to review the data and report to ensure that the data pipeline is still functioning well and the report is conforming to the requirements?

- What are the conditions under which this report should be marked as deprecated?

- How can I ensure that the user is informed should the report become deprecated?

Adaptable / First Mile:

- What features in the input data am I depending on for my analysis?

- How will I know if those features stop being supported, are affected by a schema change, or change shape in a way that may affect my analysis?

- How will I know if the scale of the data grows to a point where I need to refactor my process in order to keep up with my product’s requirements for freshness?
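
For a categorical feature, one simple way to notice a change in shape is to compare the share of each value in a baseline sample against the current batch. The sketch below uses total variation distance, which is a choice made for this example rather than a method prescribed here; the function names and any alert threshold you apply to the result are assumptions.

```python
from collections import Counter


def category_shares(values):
    """Map each distinct value to its fraction of the sample."""
    if not values:
        raise ValueError("cannot compute shares of an empty sample")
    counts = Counter(values)
    total = sum(counts.values())
    return {key: count / total for key, count in counts.items()}


def total_variation_distance(baseline, current):
    """Return a score in [0, 1]: 0 means identical shares, 1 means no overlap."""
    p = category_shares(baseline)
    q = category_shares(current)
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


if __name__ == "__main__":
    last_month = ["web", "web", "store", "store"]
    this_month = ["web", "web", "web", "web"]  # one source stopped reporting
    print(total_variation_distance(last_month, this_month))
```

A value that disappears entirely, such as a location that stops reporting, shows up as a large distance even when the schema is unchanged.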

Adaptable / Last Mile:

- Is the product or report set up in a way that it is easy to request a change and/or a new feature?

Adaptable / In Between:

- Have I set up a regular schedule for re-examining the requirements to ensure that I am still producing what the user needs and expects?

- What is the process for users to indicate changes in requirements, and for those changes to be addressed?

- What is the process for refactoring and retesting the data pipeline when the inputs change in some relevant way?

Reliable / First Mile:

- Does my process fit well into the data practices and engineering production system in my organization?

- Do I have an automatic notification system in place to monitor the availability, freshness and reliability of my input data?

Reliable / Last Mile:

- Who is responsible for the ongoing monitoring, reviewing, troubleshooting, and maintenance of the dashboard itself?

- Are responsibilities and procedures clearly in place for reporting and resolving issues internally?

Reliable / In Between:

- Is each stage in my pipeline executing and completing in a timely manner?

- Is there drift in the processing time and/or amount of data being processed at any stage that may indicate a degradation in pipeline function?
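
Drift in processing time can be checked with very little machinery if you log how long each run of a stage takes. The sketch below compares the median of the most recent runs with the median of the earlier history; the function name `duration_drift`, the window of five runs, and the 1.5x ratio threshold are assumptions to tune for your own pipeline. The same comparison can be applied to row counts per run.

```python
from statistics import median


def duration_drift(durations_seconds, recent_runs=5, max_ratio=1.5):
    """Compare recent run times with earlier ones.

    Returns None when there is too little history, otherwise a tuple
    (drifted, ratio) where ratio = recent median / baseline median.
    """
    if len(durations_seconds) < 2 * recent_runs:
        return None

    baseline = median(durations_seconds[:-recent_runs])
    recent = median(durations_seconds[-recent_runs:])
    if baseline <= 0:
        return None  # cannot form a meaningful ratio

    ratio = recent / baseline
    return ratio > max_ratio, ratio


if __name__ == "__main__":
    history = [100, 102, 98, 101, 99, 100, 103, 97, 100, 100,
               180, 190, 200, 210, 205]
    print(duration_drift(history))
```

Medians are used instead of means so that a single unusually slow run does not trigger an alert on its own.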

Conclusion

Putting a data product into production presents challenges beyond those of making something perform well as a one-off or as a prototype. Proper attention to production-related concerns from the start accelerates your overall productivity by minimizing the amount of time you spend on routine maintenance tasks for your in-production products.

When you first build a data product, you wrestle with issues of what data is available to use and how to best use that data in your product to satisfy the requirements of the intended user. This is often somewhat of a solo effort for the analyst or data scientist.

Putting something into production is more of a team effort. There are usually others — perhaps a data engineering team — responsible for making sure that the data sources you rely on are kept accurate and up to date. You want to connect with their data in a way that works best for them. You also have your users — now a more open-ended set of people — who will be using your product. You want them to trust your product. You want to make using and understanding your product as straightforward as possible. Finally, you have your own data pipeline. You want to know as soon as possible when something happens that impacts its function.

The 12 touch points in this article and the questions associated with each touch point provide a structure for working through your productizing process. Paying attention to these questions will help prevent you from getting bogged down in support and will give you more time to be creative and build new things.

Marian Nodine is Chief Data Scientist at Mighty Canary.

Mighty Canary is a company focused on building tools to help data and analytics professionals increase trust in their reports and reduce the time they spend supporting old reports.

How to Build a Data Product that Won’t Come Back to Haunt You was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
