https://ift.tt/QDh6Jiw Homo sapiens — the KNN classifier How our decisions are governed by the KNN approach Photo by Sylas Boesten on ...

Homo sapiens — the KNN classifier

How our decisions are governed by the KNN approach

The K-nearest neighbours (KNN) method is probably the most intuitive classifier algorithm, and all of us actually use it on a daily basis. In short, it can be described by well known proverbs such as

Birds of a feather flock together

or

You are who you associate with

This post will briefly outline how the KNN classifier work, and why I feel like it is a good depiction of certain human behaviours?

The technical aspect of the KNN classifier

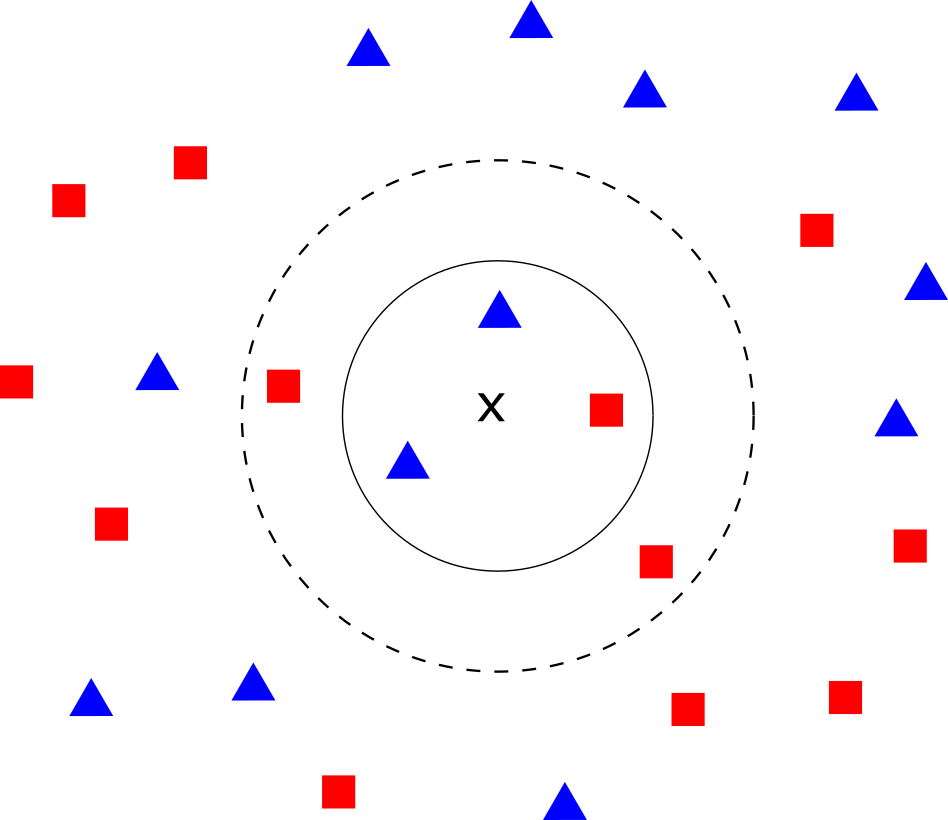

The KNN algorithm assigns classes to data points based on their surroundings (their “nearest neighbours”). The figure below schematically illustrates its working principle using three simple geometrical shapes. If a decision is made based on the three nearest neighbours (the inside of the full circle), the X point is classified as a triangle. However, extending the model to five nearest neighbours (the insides of the dotted circle) yields a square as an estimate.

If you are interested in more technical details and how to implement the algorithm in R, please check out my earlier post. If not, just read on, since this simple explanation is all we need to know in order to understand how the KNN algorithm relates to people.

“Birds of a feather flock together” — the story of the KNN classifier

Train, validate, test, go!

In order to understand the following discussion, I will first briefly go through definitions of the training, validation and test datasets. The training set is used to fit our model, the validation set to tune the model hyperparameters, and the test set to see how well it does on some previously unseen data. In the case of the KNN classifier, no actual training occurs — the model just contains the raw data from the training set which is used later to find the optimal hyperparameter values.

What is a hyperparameter in the case of KNN classifier? It is K, the number of neighbours. We use the validation set in order to find the optimal number of neighbours which yield the best possible classification results. Following that, unknown data from the test set is used to provide a measure of how well our model works in a previously unknown environment. If we are satisfied with the result, our model is ready to go!

How are people similar to KNN classifiers?

Now we can move on to the actual comparison. The training set is provided to us during our upbringing. Each time we meet someone new, we use our senses to collect their features and then assign them to a certain class. Once we are old enough, we use this data in order to set ourselves up in the world. Of course there is a lot of trial and error in this process. This is because, in the language of machine learning, we are tuning our hyperparameters. For example, we might start with a very high or very low K, i.e. we will compare the features of the newly met person to either the features of many similar people from the past or just the most similar (K = 1). There can be no a priori best value since, the best solution will highly depend on the complexity of the social structure. To sum up, we are meeting a lot of new people and trying to classify them based on our previous experience. In this way we are using them as a validation set in order to choose the optimal K for our surroundings.

Once we have confidence in our model, our goal is to go out and try to classify people into certain groups without having the ability to verify whether it is correct or not. If our model is well tuned, it can be a great tool as it allows us to rapidly assess a person and decide on the next course of action. However, this approach will naturally also lead to some errors as well.

Pride and prejudice

We have stated before that the KNN model doesn’t actually do any work during training, since it only stores the data points. This is why it is often referred to as a lazy model. This transfers really well to humans, since we are often equally lazy when judging a person. Instead of giving a person time to get to know them, we often judge them on the spot and, if we don’t like what we see, we simply move on. There is no second guesses with the KNN classifier. You either belong to a certain category or not. While the evolutionary advantage of such a simple and rapid approach is clear, it can lead to misjudgments as well. In my opinion, this similarity to the KNN classifier is at the core of why we humans are so prone to prejudicial reasoning.

Another potential pitfall can arise if the training set stops being representative of the test set. This can occur for any number of reasons, such as moving to another city/country, or changing our job. When this happens, our model’s accuracy will greatly decrease. If we are too proud and refuse to abandon the old model and create a new one, we will come into conflict with the people from our surroundings very quickly.

Final remarks

This short opinion is just that, my opinion. Of course, things are never this simple, and while there are many differences, I think the KNN algorithm describes our primal reflexes quite well. I thought the similarities were tantalizing and decided to write this post as it opened up a different perspective for me. I hope you find it equally interesting!

Homo sapiens — the KNN classifier was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

from Towards Data Science - Medium https://ift.tt/knjXKTS

via RiYo Analytics

No comments