A practical guide to building critical MLOps capabilities that maximize data science ROI

Photo by Ramón Salinero

I am fortunate to work with some of the most sophisticated global companies on their AI/ML initiatives. They include many Fortune 500 household names and span industries as diverse as insurance, pharmaceuticals, and manufacturing. Each employs anywhere from dozens to thousands of data scientists. Despite significant investments in AI and ML, they show a surprisingly wide range of MLOps maturity. In this post, I reflect on what I’ve learned from working with these companies and share the common themes that emerge from their MLOps journeys. My goal is to give executives and leaders a framework for measuring their progress toward AI excellence.

Defining MLOps

In my experience, the definition of MLOps depends on the audience. To a technical practitioner, I would say, “MLOps is the automation of DevOps tasks specific to the data science lifecycle.” To an executive concerned with scale across organizations, I would first use the term Enterprise MLOps, and then I would say, “Enterprise MLOps is a set of technologies and best practices that streamline the management, development, deployment, and maintenance of data science models at scale across a diverse enterprise.”

In this way, MLOps accelerates what some leaders call “model velocity”, which is the speed at which a company can produce models while ensuring the highest standards of model security, safety, and accuracy.

Tying Technical Capabilities to Business Value

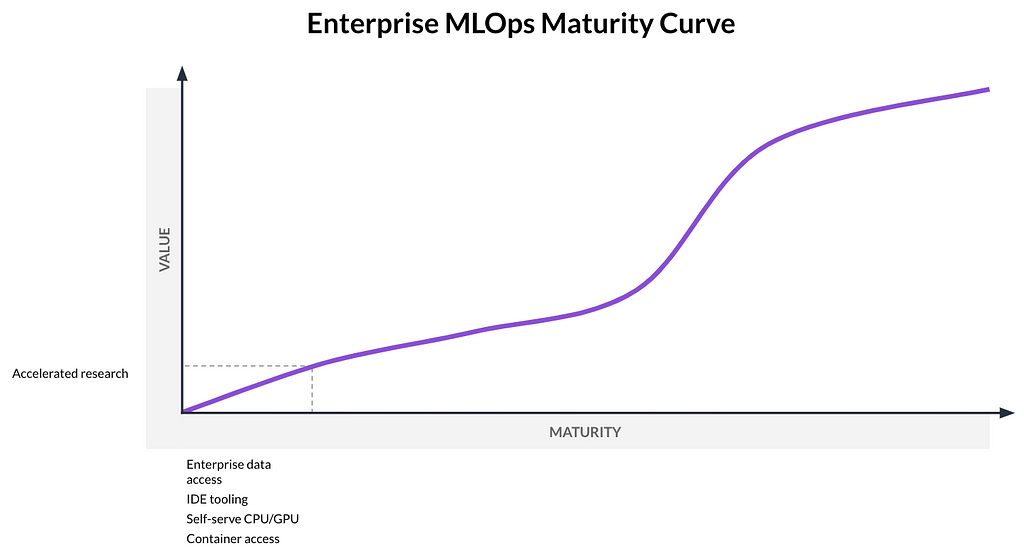

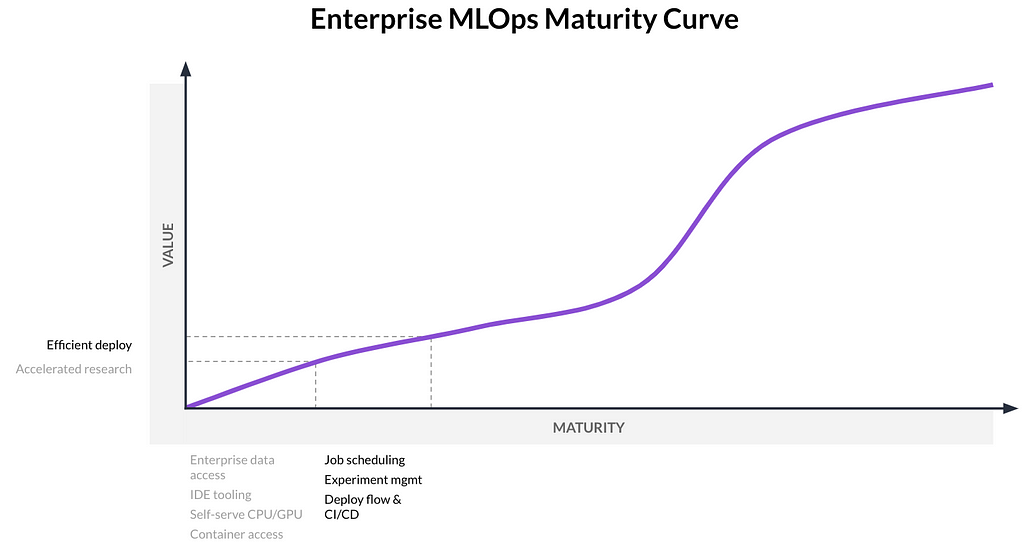

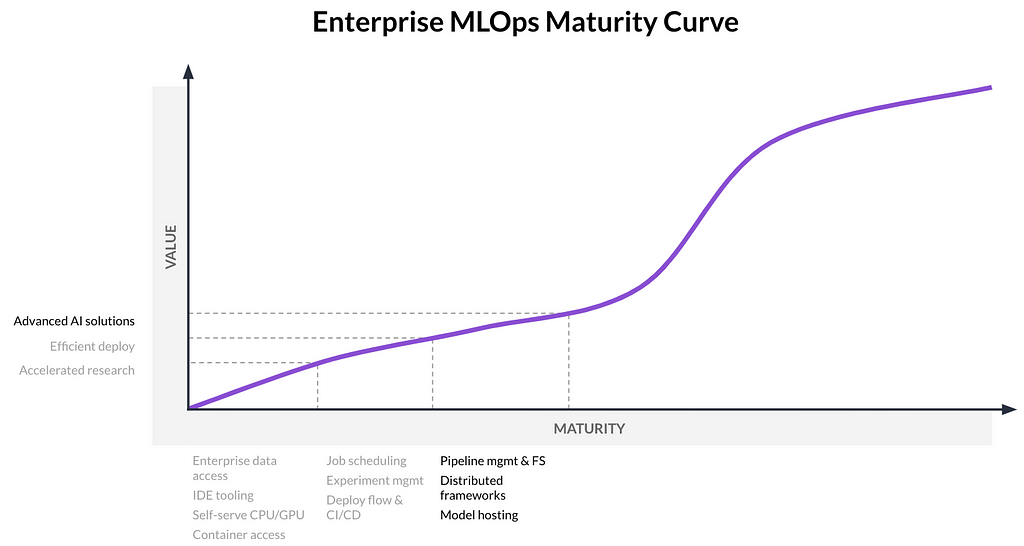

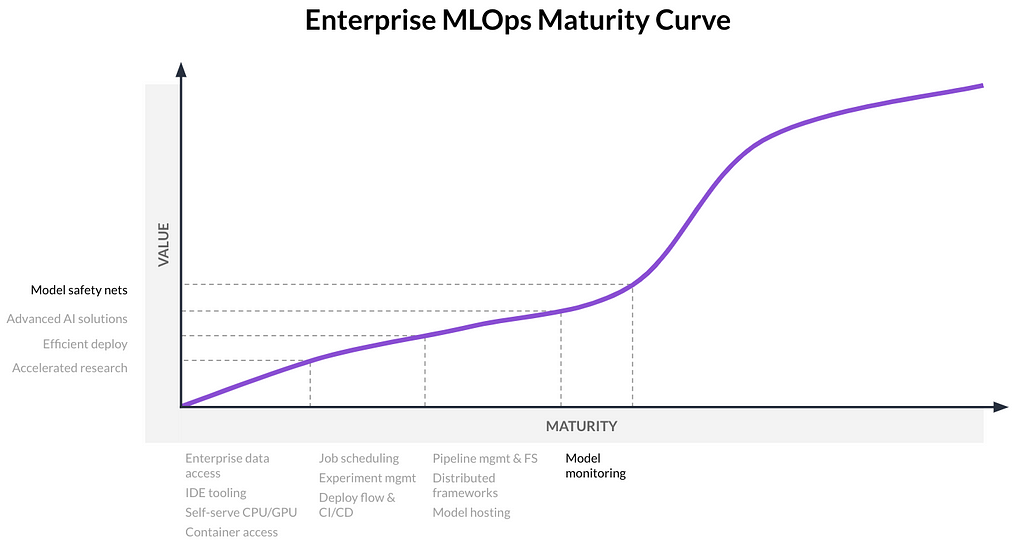

Common themes emerge when looking at the technical MLOps capabilities these companies have adopted. The capabilities fall naturally into groups and show a clear progression toward advanced maturity. I’ll use a maturity curve to guide the discussion: the x-axis shows groupings of MLOps capabilities, and the y-axis shows the business value companies get from each grouping.

The most mature organizations prioritize adding new MLOps capabilities based on a well-founded assessment of business value. Their north star is to optimize the ROI of their entire ML/AI investment. To add texture to each value statement, I’ll share direct quotes from analytical leaders. It’s interesting to hear them put into words the value they see from adopting MLOps capabilities.

Accelerated Research

The first group of capabilities covers access to data, to the tools and IDEs data scientists use daily, and to hardware. To scale data science research, the software environment has to be built on container technologies like Docker. Each of these components must also be self-serve, without heavy IT involvement. If a data scientist has to fill out a ticket, send an email, or play Linux administrator to get access to any of this, we’re off track.

The business value of this combination of capabilities is accelerated research, or faster turnaround from data to insight. After reaching this initial stage of MLOps, one IT leader put it this way:

“Previously, it could take two to three weeks to understand and spin up an infrastructure and then start the work. That went from weeks to just a click of a button.”

— Director of Data Platforms, Pharmaceuticals

Efficient Deploy

The next group of commonly adopted capabilities lets teams schedule jobs, track the details of experiments, and use some form of automated deployment flow, such as a CI/CD pipeline. This enables efficient deployment of reports, apps, models, and other assets. One analytical leader described what this stage of maturity can look like:

“When we build models, we can publish an application now… Anyone on my team can do that in less than a week, and some can do it in a couple of hours.”

— Senior Director of Decision Sciences, Software Services
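To make the idea of an automated deploy flow more concrete, here is a minimal sketch of a promotion gate that a CI/CD pipeline step might run before deploying a model. It assumes, hypothetically, that the training job writes a `metrics.json` file with `candidate_auc` and `production_auc` keys, and that a drop of more than 0.01 AUC should block deployment; the file name, keys, and threshold are illustrative choices, not a standard.

```python
import json
import sys

# Hypothetical policy: block deployment if the candidate is worse than the
# current production model by more than this much AUC.
MAX_REGRESSION = 0.01


def should_promote(candidate_auc: float, production_auc: float,
                   max_regression: float = MAX_REGRESSION) -> bool:
    """Return True if the candidate model is good enough to deploy."""
    return candidate_auc >= production_auc - max_regression


def main(metrics_path: str) -> int:
    # metrics.json is a hypothetical file written by the training job, e.g.
    # {"candidate_auc": 0.87, "production_auc": 0.86}
    with open(metrics_path) as f:
        metrics = json.load(f)
    ok = should_promote(metrics["candidate_auc"], metrics["production_auc"])
    print("promote" if ok else "block")
    # Most CI systems treat a non-zero exit code as a failed step,
    # which prevents the deploy stage that follows from running.
    return 0 if ok else 1


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical script name): python promote_gate.py metrics.json
    sys.exit(main(sys.argv[1]))
```

Keeping the decision in a small, pure function like `should_promote` makes the policy easy to review and test separately from the file handling and CI plumbing.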

Advanced AI Solutions

At the next stage of maturity, companies usually seek to build a stack that supports modern, complex analytical solutions. The added complexity comes from much larger data sizes (distributed frameworks), interrelations in the data (pipelines and feature serving), and sophisticated AI solutions (deep neural networks). Companies also go beyond basic model hosting to consider hosting at scale and hosting models with more complicated inference mechanics.

Reaching this level of MLOps maturity is a milestone in two important ways. First, organizations that build to this level can scale advanced AI solutions much faster than their competitors. These organizations are the disruptors pushing their industries to challenge old norms and create new revenue streams. For example, insurance companies are rethinking how AI can change the claims process for customers, and pharmaceutical companies are linking AI to biological markers to personalize treatment for patients. Second, companies at this stage of MLOps maturity can attract and retain top analytical talent, an important advantage in today’s competitive talent market. The following quotes capture these two benefits.

“We certainly are implementing more accurate models or even models that we couldn’t have done before with more complicated workflows.”

— Principal Consultant, FinServ

“If we hadn’t invested in [MLOps] first, I wouldn’t have been able to set up a team at all, because you can’t hire a high-skilled data scientist without providing them with the state-of-the-art working environment.”

— CAO, Insurance

Model Safety Nets

There is one more capability to add before the value of MLOps investment reaches an inflection point. Most companies today understand the importance of monitoring their production models as a safety net against model risk. As one leader put it:

“Data drift can have a critical impact on predictions and ultimately, our business.”

— Head of Machine Learning, Insurance

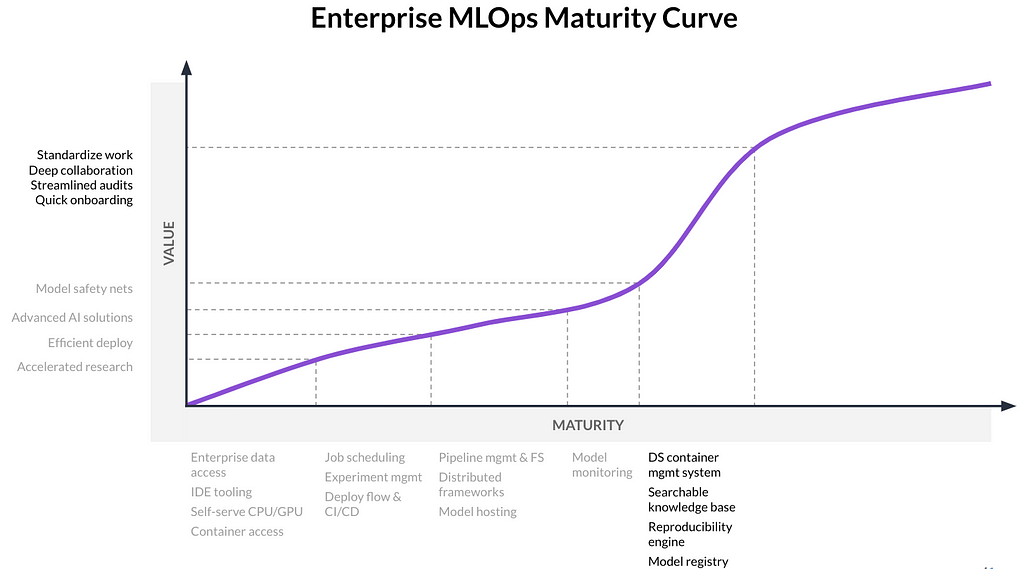

The Inflection Point

Companies that are hitting their AI strategic objectives don’t implement these four groups of capabilities in isolation. They consider them part of a unified IT framework. For these companies, their MLOps features follow a coherent strategy resulting in something IT can manage without the usual heroics.

Further, they give heavy consideration to the data scientist persona, which may include statistical analysts, quants, actuaries, clinical programmers, and similar roles, and they view the data scientist as their customer. Instead of assembling bits and pieces from different open source MLOps technologies, they put everything under one umbrella, or platform, that stitches these capabilities together according to data science-first principles. This data science-first thinking shows up in several subtle but important ways, from how metadata is tracked to how model retraining is automated.

Companies to the right of this inflection point are the ones successfully scaling AI and ML across their enterprise.

Standardize, Collaborate, Streamline, Onboard

The first group of capabilities beyond the inflection point evolves the idea of containers into a data science container management system purpose-built for the way data scientists work and collaborate. This includes managing, sharing, and versioning containers, and it makes it easy for data scientists to modify and build them. The group also includes a searchable knowledge base where all the metadata of the work can be tagged, stored, and indexed for discovery and collaboration, resulting in less wasted time and quicker project onboarding. It further includes a reproducibility engine, which keeps the breadcrumb trail of your work in front of you, makes it easy to validate model lineage for auditors and regulators, and lets you recreate past work with the click of a button. I also include a model registry in this group. A central repository where all models are captured and managed in one place is the foundation of model risk management and model governance.
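As a rough illustration of what a model registry with lineage tracking might record, the sketch below stores, for each model version, the code commit, a SHA-256 fingerprint of the training data, and the container image used for training. It is a toy, in-memory Python example under those assumptions; `ModelRegistry`, `ModelVersion`, their methods, and the field names are hypothetical and do not correspond to any particular platform’s API.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    code_commit: str      # e.g. a git commit SHA (hypothetical field)
    data_sha256: str      # fingerprint of the training data snapshot
    container_image: str  # environment the model was trained in
    registered_at: str    # UTC timestamp, ISO 8601


@dataclass
class ModelRegistry:
    """A toy central registry kept in memory; a real platform would persist it."""
    _models: dict = field(default_factory=dict)

    def register(self, name: str, code_commit: str,
                 training_data: bytes, container_image: str) -> ModelVersion:
        versions = self._models.setdefault(name, [])
        mv = ModelVersion(
            name=name,
            version=len(versions) + 1,  # versions are numbered 1, 2, 3, ...
            code_commit=code_commit,
            data_sha256=hashlib.sha256(training_data).hexdigest(),
            container_image=container_image,
            registered_at=datetime.now(timezone.utc).isoformat(),
        )
        versions.append(mv)
        return mv

    def lineage(self, name: str, version: int) -> dict:
        """Return the breadcrumb trail needed to recreate or audit a version."""
        versions = self._models[name]
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name} has no version {version}")
        mv = versions[version - 1]
        return {
            "code_commit": mv.code_commit,
            "data_sha256": mv.data_sha256,
            "container_image": mv.container_image,
        }
```

For example, registering a made-up model name such as `"claims-triage"` twice yields versions 1 and 2, and `lineage("claims-triage", 1)` returns the commit, data fingerprint, and image needed to recreate version 1.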

Most of the larger enterprises I work with have data science teams in their lines of business, IT departments, operations organizations, research teams, and centralized centers of excellence. Standardizing on MLOps best practices across such diverse teams fosters the strong collaboration that enables scale. As one leader noted:

“[Mature MLOps enables] reproducibility and discovery. The true knowledge acceleration, however, occurs by the discovery of others’ research on the platform. With a simple keyword search, a scientist can find other relevant research or subject matter experts.”

— Sr Director, Engineering & Data Science, Life Sciences

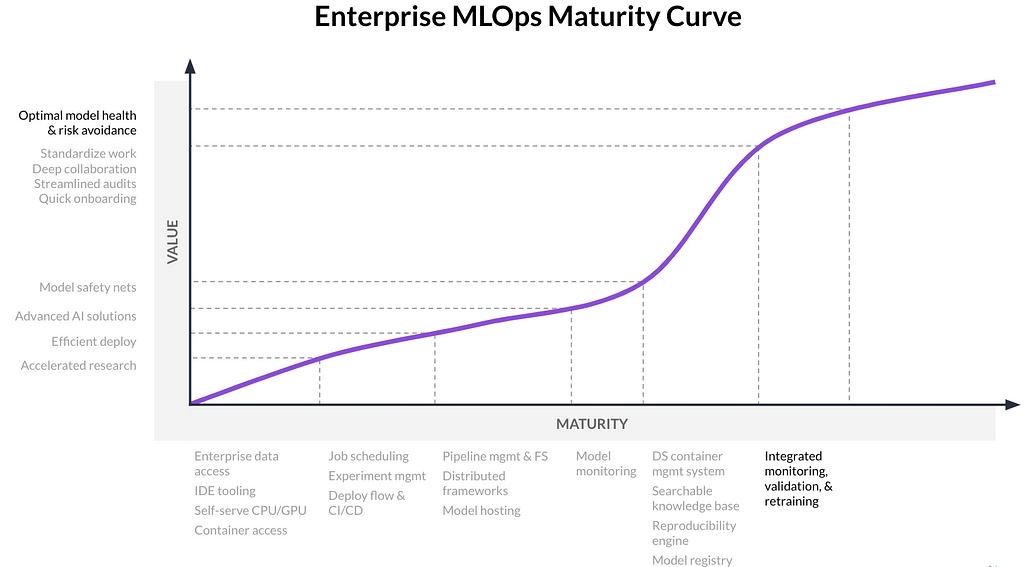

Optimal Model Health and Risk Avoidance

After taking this leap in value by focusing on a unified, best-practices, data science-centric approach to MLOps, the more advanced organizations close the loop on model risk and model health. They do this with monitoring that is integrated with the data and research capabilities adopted previously.

When a model goes wrong or data drifts, automatic alerting triggers remediation work. Model validation is integrated as well, covering the internal checks that a company or regulatory framework mandates. These might include bias checks, peer code reviews, model card creation, or explainability analyses. The key is that anyone looking at a model can see how it was created and how its risk was mitigated. This optimizes model health and avoids risk.

“[Integrated] model monitoring saves us significant time previously spent on maintenance and investigation, and enables us to monitor model performance in real-time and compare it to our expectations.”

— Head of Machine Learning, Insurance
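One common way to turn data drift into an automatic alert is the Population Stability Index (PSI), which compares the binned distribution of a feature or model score at training time with what the model sees in production, using PSI = Σ (aᵢ − eᵢ) · ln(aᵢ / eᵢ) over bins i. The sketch below is one possible approach, not necessarily what any of the companies above use; the 0.1 and 0.25 thresholds are widely quoted rules of thumb that should be treated as assumptions and tuned per model.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions.

    Both inputs are bin proportions over the same bins (each summing to ~1).
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # clamp empty bins to avoid log(0)
        total += (a - e) * math.log(a / e)
    return total


# Rule-of-thumb thresholds (assumptions; tune for each model).
WARN, ALERT = 0.1, 0.25


def check_drift(expected: Sequence[float], actual: Sequence[float]) -> str:
    """Map a PSI score to a status that an alerting system could act on."""
    score = psi(expected, actual)
    if score >= ALERT:
        return "alert"  # e.g. notify the model owner and start remediation
    if score >= WARN:
        return "warn"
    return "ok"
```

With four equal expected bins and an observed distribution of 0.1, 0.2, 0.3, 0.4, the PSI is roughly 0.23, which this sketch classifies as `"warn"`.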

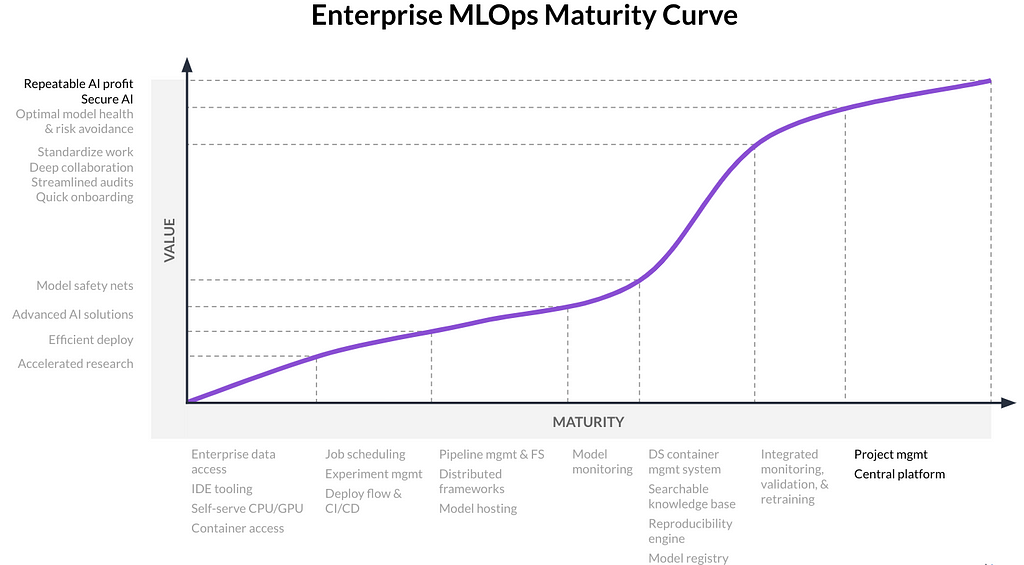

Repeatable AI Profit and Secure AI

This last group of capabilities puts a bow on the idea of centralizing MLOps features. It is here that leaders finally have an AI program that acts like a machine, pumping out a reliable stream of profitable, ROI-generating models while keeping all data and IP secure.

The first of these concepts is project management. The flow of work has to be built for the way data scientists do their work so their research can happen in a fluid collaborative manner. Projects flow through familiar stages and have logical checkpoints. The project becomes the system of record for data science work.

All this needs to occur on a centralized platform so IT can ensure security, manage users, and monitor costs. Collaborators, leaders, subject matter experts, validators, data engineers, cloud developers, and analysts can all join in project work while letting the platform manage security concerns.

With these structures in place, your data science teams become revenue-generating machines. I’ve even seen companies that have revenue targets for their data science teams.

“[Our] platform is at the core of our modern data science environment which has helped maximize the efficiency, productivity, and output of our data science teams, helping us drive innovation in support of our customers’ mission.”

— Director & Chief Data & Analytics Officer, Manufacturing

Self-Assessment

Consider your own MLOps journey and evaluate where you are on the maturity curve. Make plans to fill the gaps in your strategy. Keep in mind that the key to moving beyond the inflection point in value is to tightly integrate all the capabilities in a data science-centric manner. This requires foresight and planning; otherwise, you’ll end up with a hodgepodge of features and capabilities that inhibit scale rather than accelerate it. The companies that get this right will see a strong return on their AI/ML investment.

The Seven Stages of MLOps Maturity was originally published in Towards Data Science on Medium.
