https://ift.tt/IXbukEg CAUSAL DATA SCIENCE What makes an observation “unusual”? Cover image, generated by Author using NightCafé In da...

CAUSAL DATA SCIENCE

What makes an observation “unusual”?

In data science, a common task is anomaly detection, i.e. understanding whether an observation is “unusual”. First of all, what does it mean to be unusual? In this article we are going to inspect three different ways in which an observation can be unusual: it can have unusual characteristics, it might not fit the model well or it might be particularly influential in training the model. We will see that in linear regression the latter characteristic is a byproduct of the first two.

Importantly, being unusual is not necessarily bad. Observations that have different characteristics from all others often carry more information. We also expect some observations not to fit the model well, otherwise, the model is likely biased (we are overfitting). However, “unusual” observations are also more likely to be generated by a different data-generating process. Extreme cases include measurement error or fraud, but other cases can be more nuanced. Domain knowledge is always king and dropping observations only for statistical reasons is never wise.

That said, let’s have a look at some different ways in which observations can be “unusual”.

A Simple Example

Suppose we were a peer-to-peer online platform and we are interested in understanding if there is anything suspicious going on with our business. We have information about how much time our users spend on the platform and the total value of their transactions. Are some users suspicious?

First, let’s have a look at the data. I import the data generating process dgp_p2p() from src.dgp and some plotting functions and libraries from src.utils. I include code snippets from Deepnote, a Jupyter-like web-based collaborative notebook environment. For our purpose, Deepnote is very handy because it allows me not only to include code but also output, like data and tables.

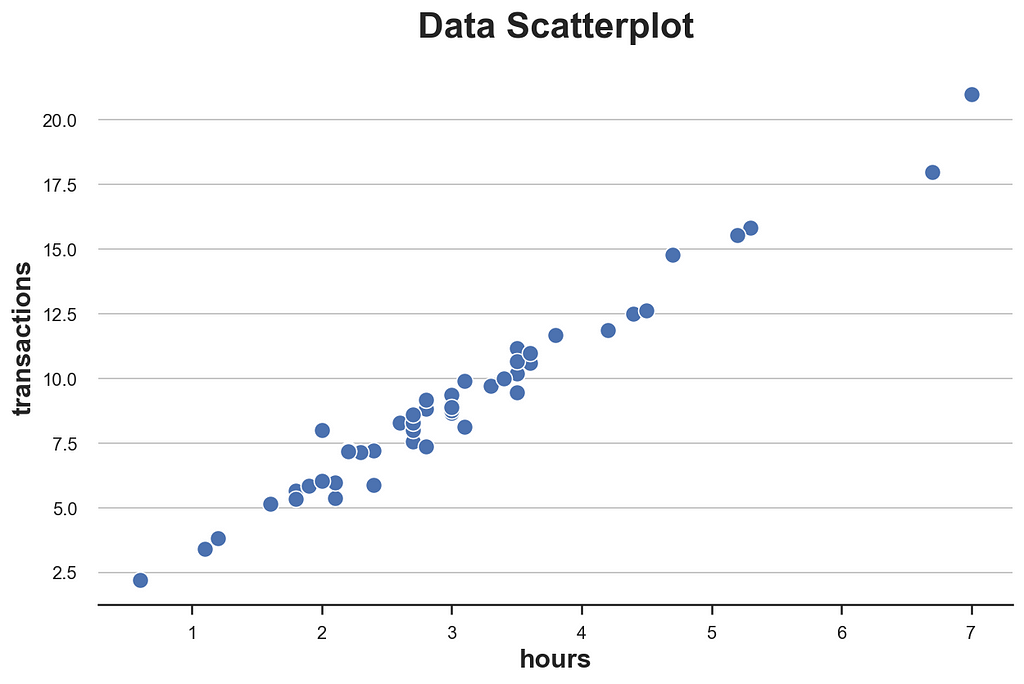

We have information on 50 users for which we observe hours spent on the platform and total transactions amount. Since we only have two variables we can easily inspect them using a scatterplot.

The relationship between hours and transactions seems to follow a clear linear relationship. If we fit a linear model, we observe a particularly tight fit.

Does any data point look suspiciously different from the others? How?

Leverage

The first metric that we are going to use to evaluate “unusual” observations is the leverage, which was first introduced by Cook (1980). The objective of the leverage is to capture how much a single point is different with respect to other data points. These data points are often called outliers and there exist a nearly infinite amount of algorithms and rules of thumb to flag them. However, the idea is the same: flagging observations that are unusual in terms of features.

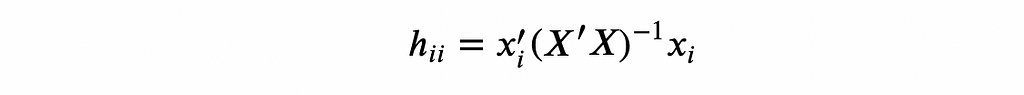

The leverage of an observation i is defined as

One interpretation of the leverage is as a measure of distance where individual observations are compared against the average of all observations.

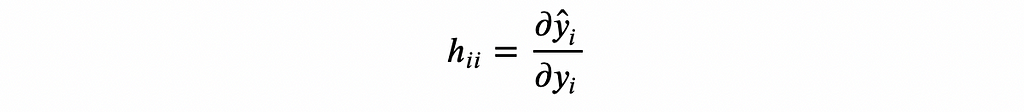

Another interpretation of the leverage is as the influence of the outcome of observation i, yᵢ, on the corresponding fitted value ŷᵢ.

Algebraically, the leverage of observation i is the iₜₕ element of the design matrix X’(X’X)⁻¹X. Among the many properties of the leverages, is the fact that they are non-negative and their values sum to 1.

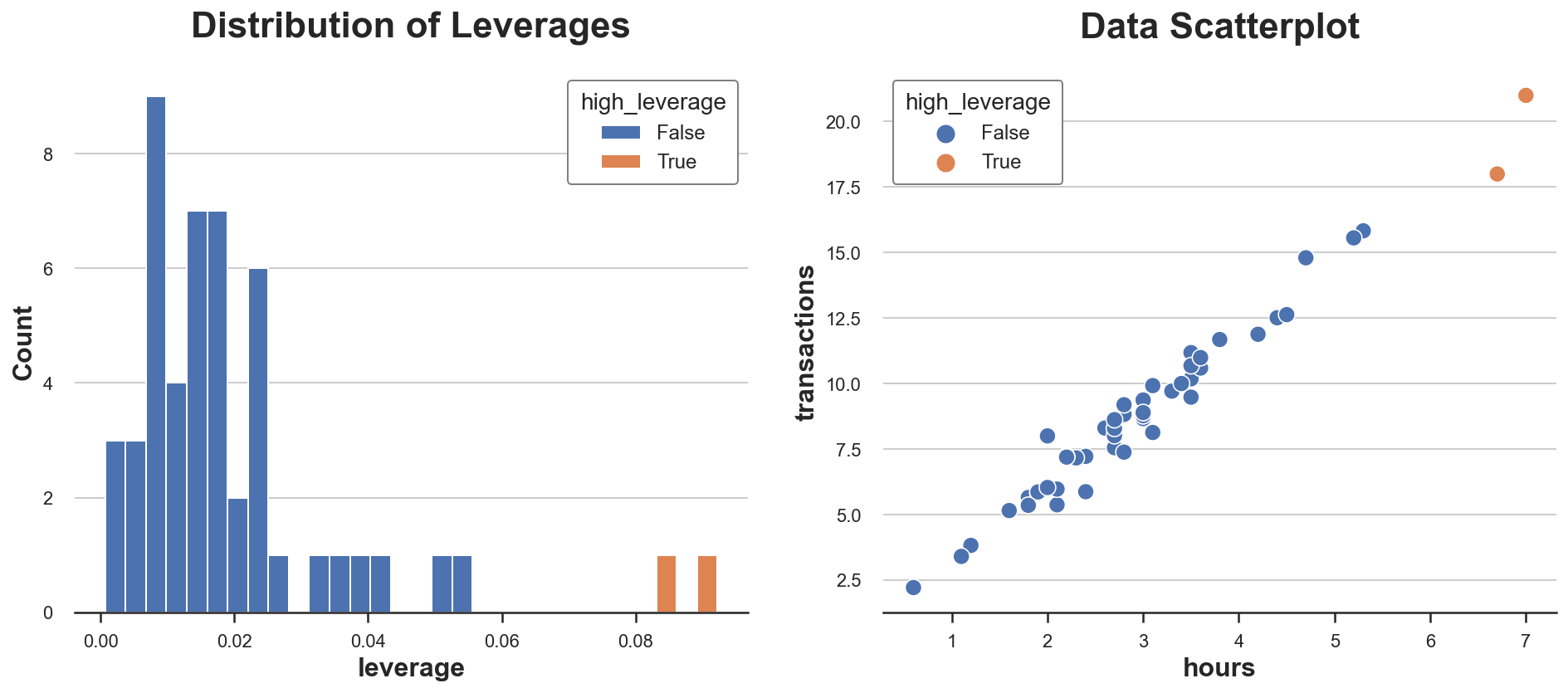

Let’s compute the leverage of the observations in our dataset. We also flag observations that have unusual leverages (which we arbitrarily define as more than two standard deviations away from the average leverage).

Let’s plot the distribution of leverage values in our data.

As we can see, the distribution is skewed with two observations having unusually high leverage. Indeed, in the scatterplot, these two observations are slightly separated from the rest of the distribution.

Is this bad news? It depends. Outliers are not a problem per se. Actually, if they are genuine observations, they might carry much more information than other observations. On the other hand, they are also more likely not to be genuine observations (e.g. fraud, measurement error, …) or to be inherently different from the other ones (e.g. professional users vs amateurs). In any case, we might want to investigate further and use as much context-specific information as we can.

Importantly, the fact that an observation has a high leverage tells us information about the features of the model but nothing about the model itself. Are these users just different observations or do they also behave differently?

Residuals

So far we have only talked about unusual features, but what about unusual behavior? This is what regression residuals measure.

Regression residuals are the difference between the predicted outcome values and the observed outcome values. In a sense, they capture what the model cannot explain: the higher the residual of one observation the more it is unusual in the sense that the model cannot explain it.

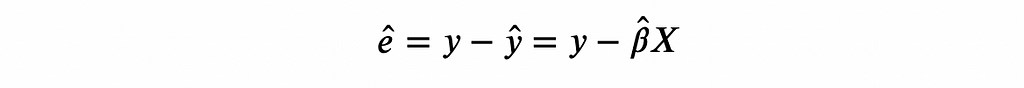

In the case of linear regression, residuals can be written as

In our case, since X is one dimensional (hours), we can easily visualize them.

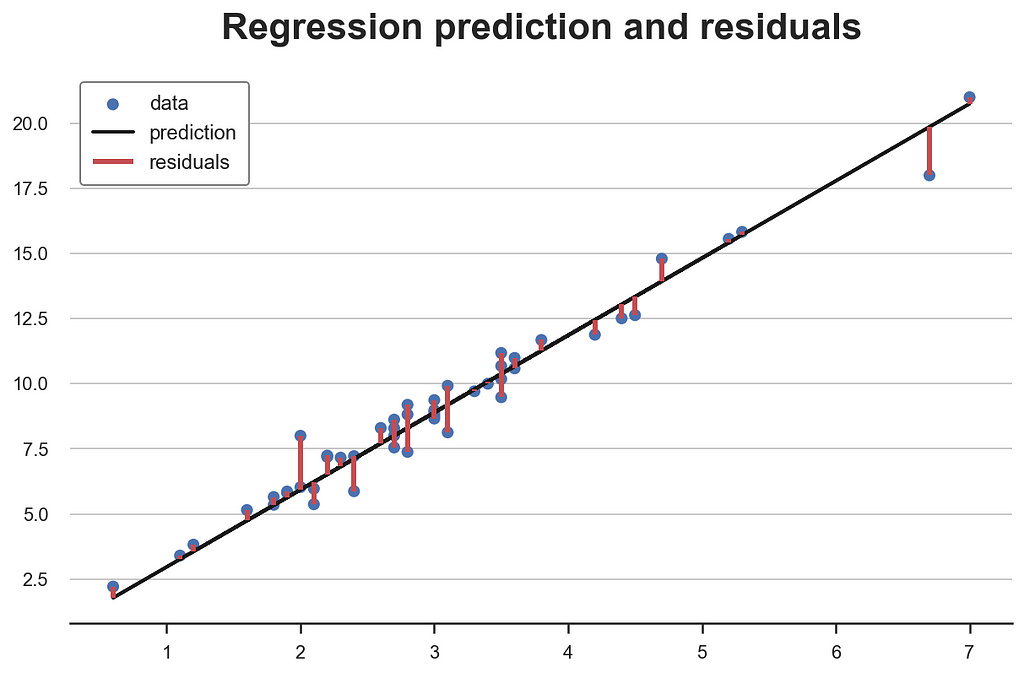

Do some observations have unusually high residuals? Let’s plot their distribution.

Two observations have particularly high residuals. This means that for these observations, the model is not good at predicting the observed outcomes.

Is this bad news? Not necessarily. A model that fits the observations too well is likely to be biased. However, it might still be important to understand why some users have a different relationship between hours spent and total transactions. As usual, information on the specific context is key.

So far we have looked at observations with “unusual” characteristics and “unusual” model fit, but what if the observation itself is distorting the model? How much our model is driven by a handful of observations?

Influence

The concept of influence and influence functions was developed precisely to answer this question: what are influential observations? This question was very popular in the 80s and lost appeal for a long time until the recent need of explaining complex machine learning and AI models.

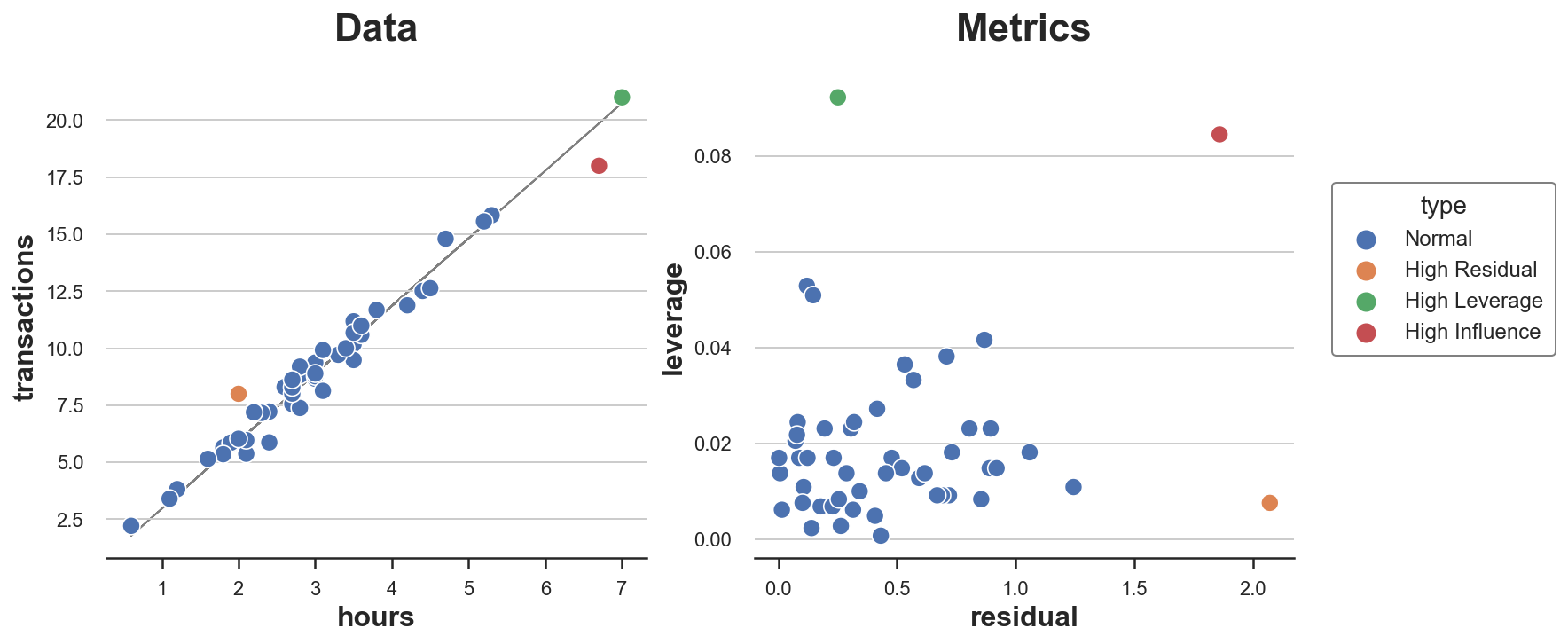

The general idea is to define an observation as influential if removing it significantly changes the estimated model. In linear regression, we define the influence of observation $i$ as:

Where β̂-i is the OLS coefficient estimated omitting observation i.

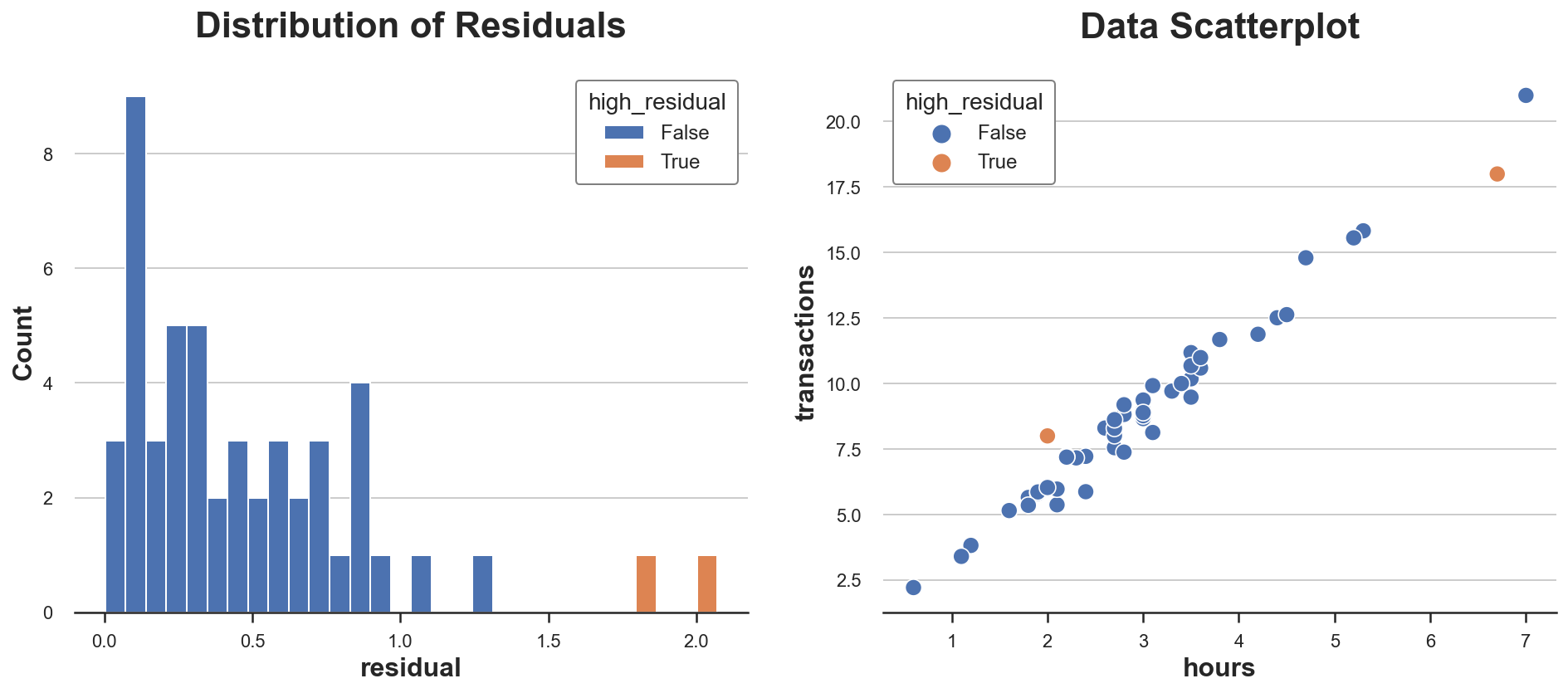

As you can see, there is a tight connection to both the leverage hᵢᵢ and residuals eᵢ: influence is almost the product of the two. Indeed, in linear regression, observations with high leverage are observations that are both outliers and have high residuals. None of the two conditions alone is sufficient for an observation to have an influence on the model.

We can see it best in the data.

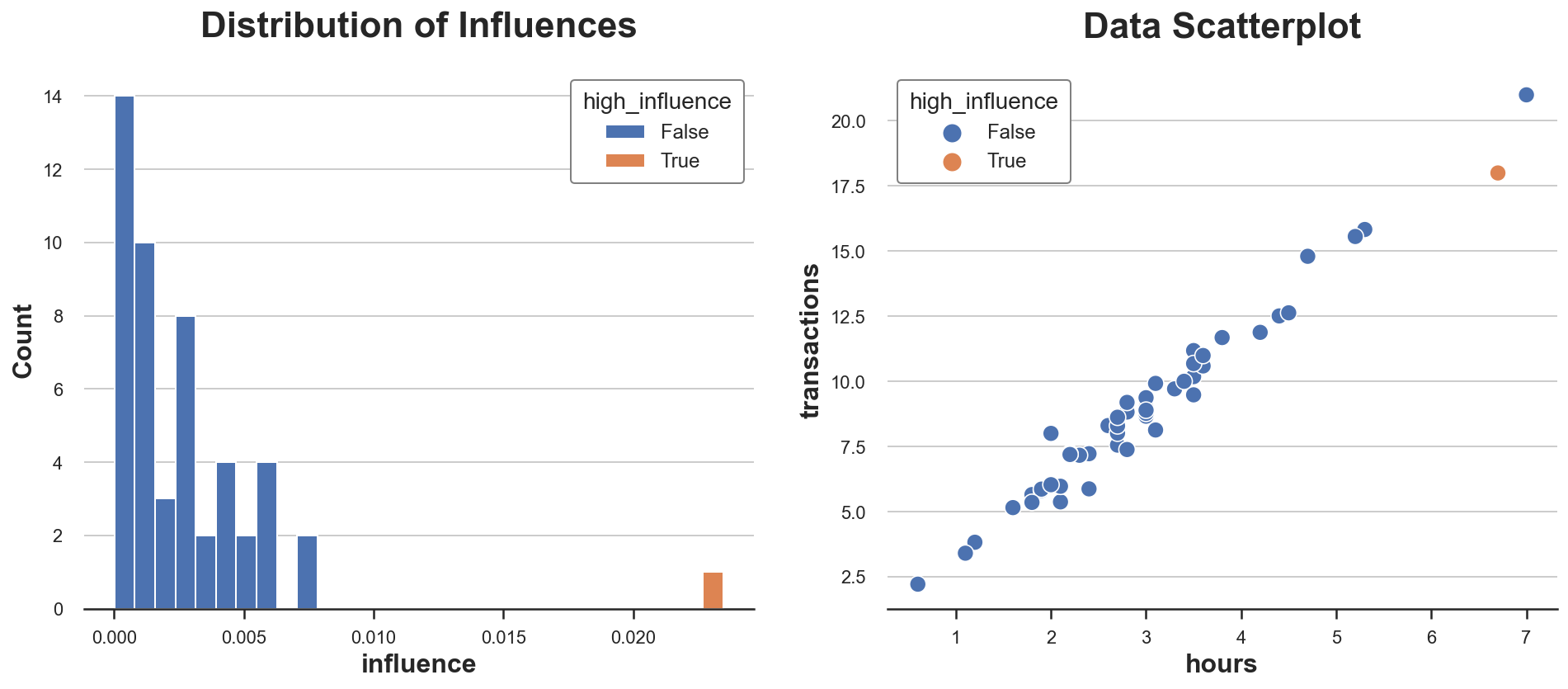

In our dataset, there is only one observation with high influence, and it is disproportionally larger than the influence of all other observations.

We can now plot all “unusual” points in the same plot. I also report residuals and leverage of each point in a separate plot.

As we can see, we have one point with high residual and low leverage, one with high leverage and low residual, and only one point with both high leverage and high residual: the only influential point.

From the plot, it is also clear why none of the two conditions alone is sufficient for an observation to rive the model. The orange point has a high residual but it lies right in the middle of the distribution and therefore cannot tilt the line of best fit. The green point instead has high leverage and lies far from the center of the distribution but it's perfectly aligned with the line of fit. Removing it would not change anything. The red dot instead is different from the others in terms of both characteristics and behavior and therefore tilts the fit line towards itself.

Conclusion

In this post, we have seen some different ways in which observations can be “unusual”: they can have either unusual characteristics or unusual behavior. In linear regression, when an observation has both it is also influential: it tilts the model towards itself.

In the example of the article, we concentrated on a univariate linear regression. However, research on influence functions has recently become a hot topic because of the need to make black-box machine learning algorithms understandable. With models with millions of parameters, billions of observations, and wild non-linearities, it can be very hard to establish whether a single observation is influential and how.

References

[1] D. Cook, Detection of Influential Observation in Linear Regression (1980), Technometrics.

[2] D. Cook, S. Weisberg, Characterizations of an Empirical Influence Function for Detecting Influential Cases in Regression (1980), Technometrics.

[2] P. W. Koh, P. Liang, Understanding Black-box Predictions via Influence Functions (2017), ICML Proceedings.

Code

You can find the original Jupyter Notebook here:

Blog-Posts/outliers.ipynb at main · matteocourthoud/Blog-Posts

Thank you for reading!

I really appreciate it! 🤗 If you liked the post and would like to see more, consider following me. I post once a week on topics related to causal inference and data analysis. I try to keep my posts simple but precise, always providing code, examples, and simulations.

Also, a small disclaimer: I write to learn so mistakes are the norm, even though I try my best. Please, when you spot them, let me know. I also appreciate suggestions on new topics!

Outliers, Leverage, Residuals, and Influential Observations was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

from Towards Data Science - Medium https://ift.tt/Ne4Qdth

via RiYo Analytics

ليست هناك تعليقات