
An in-depth guide to the state-of-the-art variance reduction technique for A/B tests

During my Ph.D., I spent a lot of time learning and applying causal inference methods to experimental and observational data. However, I was completely clueless when I first heard of CUPED (Controlled-Experiment using Pre-Experiment Data), a technique to increase the power of randomized controlled trials in A/B tests.

What really amazed me was the popularity of the algorithm in the industry. CUPED was first introduced by Microsoft researchers Deng, Xu, Kohavi, and Walker (2013) and has been widely used in companies such as Netflix, Booking, Meta, Airbnb, TripAdvisor, DoorDash, Faire, and many others. While digging into it, I noticed a similarity with some causal inference methods I was familiar with, such as Difference-in-Differences, so I decided to investigate further.

In this post, I will present CUPED and try to compare it against other causal inference methods.

Example

Let’s assume we are a firm testing an ad campaign, and we want to understand whether it increases revenue. We randomly split a set of users into a treatment and a control group and show the ad campaign to the treatment group. Unlike the standard A/B test setting, assume we also observe users before the test.

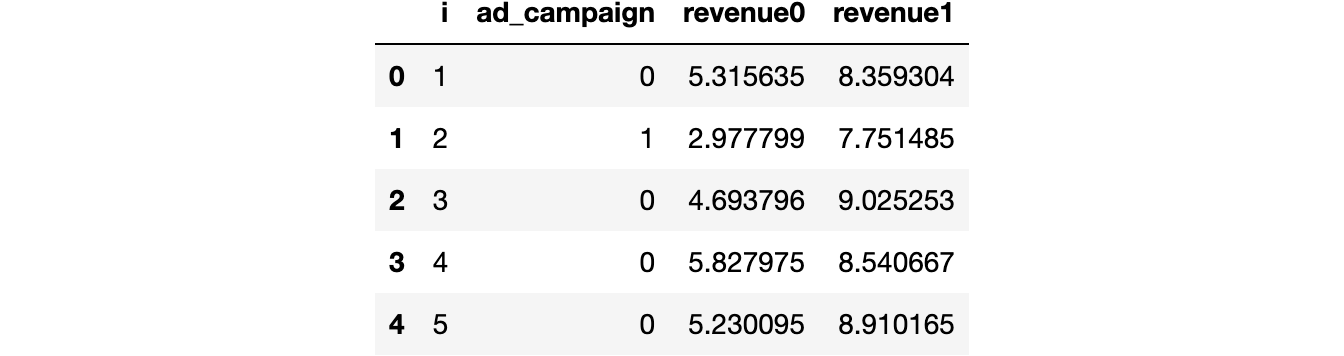

We can now generate the simulated data, using the data generating process dgp_cuped() from src.dgp. I also import some plotting functions and libraries from src.utils.

```python
from src.utils import *  # author's helpers; assumed to also provide np, pd, smf, sns and plt used below
from src.dgp import dgp_cuped

df = dgp_cuped().generate_data()
```

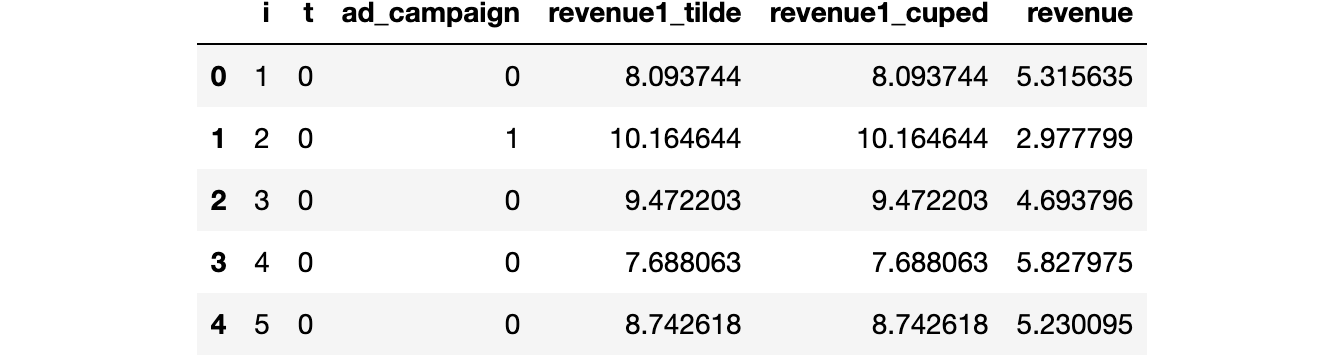

```python
df.head()
```

We have information on 1000 individuals, indexed by i, for whom we observe the revenue generated pre- and post-treatment (revenue0 and revenue1, respectively) and whether they have been exposed to the ad_campaign.

Difference in Means

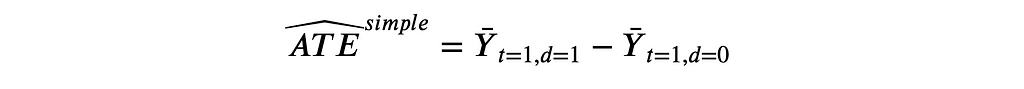

In randomized experiments or A/B tests, randomization allows us to estimate the average treatment effect using a simple difference in means. We can just compare the average post-treatment outcome Y₁ (revenue1) across treated and control units: randomization guarantees that, in expectation, this difference is due to the treatment alone.

$$\hat{\tau}^{diff} = \bar{Y}_1^{(D=1)} - \bar{Y}_1^{(D=0)}$$

Here the bar indicates the average over individuals and D indicates treatment (ad_campaign). In our case, we compute the average revenue after the ad campaign in the treatment group, minus the average revenue after the ad campaign in the control group.

```python
np.mean(df.loc[df.ad_campaign==True, 'revenue1']) - np.mean(df.loc[df.ad_campaign==False, 'revenue1'])
```

```
1.7914301325347406
```

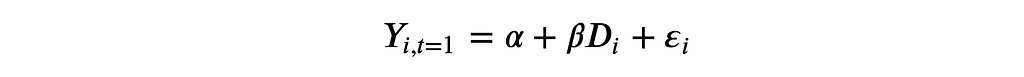

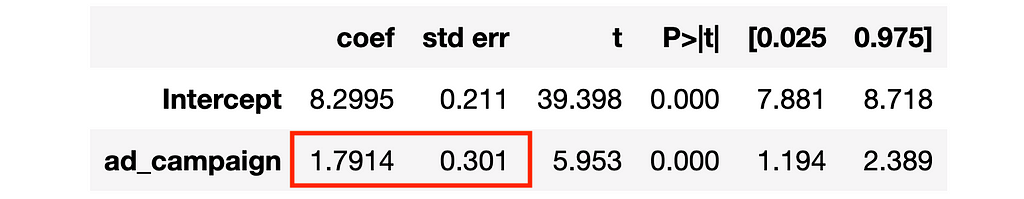

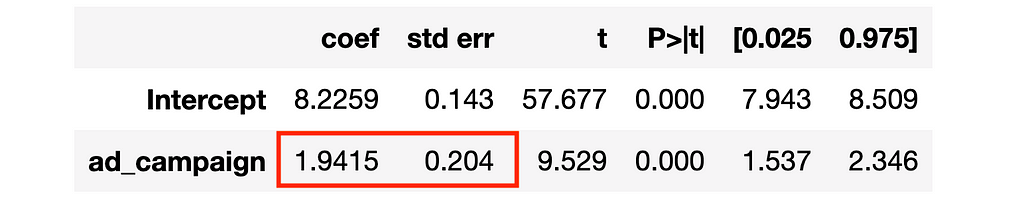

The estimated treatment effect is 1.79, close to the true value of 2. We can obtain the same estimate by regressing the post-treatment outcome revenue1 on the treatment indicator D for ad_campaign:

$$Y_{i,1} = \alpha + \beta D_i + \varepsilon_i$$

where β is the coefficient of interest.

```python
smf.ols('revenue1 ~ ad_campaign', data=df).fit().summary().tables[1]
```
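Why do the two numbers coincide? With a constant and a single binary regressor, the OLS slope is exactly the difference in group means. Below is a minimal NumPy sketch on hypothetical simulated data (a stand-in, not the author's `dgp_cuped()`, with an assumed true effect of 2).

```python
import numpy as np

# Hypothetical data (a stand-in for dgp_cuped()): true treatment effect of 2
rng = np.random.default_rng(0)
n = 1000
d = rng.integers(0, 2, size=n)                 # treatment dummy D (0/1)
y1 = 10 + 2.0 * d + rng.normal(0, 2, size=n)   # post-treatment outcome Y1

# Difference in means: treated average minus control average
diff_means = y1[d == 1].mean() - y1[d == 0].mean()

# OLS of Y1 on a constant and D; the second coefficient is beta
X = np.column_stack([np.ones(n), d])
alpha_hat, beta_hat = np.linalg.lstsq(X, y1, rcond=None)[0]

print(f"difference in means: {diff_means:.4f}, OLS beta: {beta_hat:.4f}")
```

The intercept `alpha_hat` is, likewise, the control-group mean.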

This estimator is unbiased, which means it delivers the correct estimate, on average. However, it can still be improved: we could decrease its variance. Decreasing the variance of an estimator is extremely important since it allows us to

- detect smaller effects

- detect the same effect, but with a smaller sample size

In general, an estimator with a smaller variance allows us to run tests with higher power, i.e., a greater ability to detect small effects.
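One back-of-the-envelope way to connect variance and power is the minimum detectable effect (MDE) of a two-sided z-test: MDE = (z at 1 − α/2 + z at the target power) × SE. The sketch below assumes a normal approximation, α = 0.05 and 80% power. It plugs in the two standard errors reported later in this post (about 0.3 for the simple difference and 0.2 for CUPED). `minimum_detectable_effect` is a hypothetical helper, not part of the post's code.

```python
from statistics import NormalDist

def minimum_detectable_effect(se, alpha=0.05, power=0.8):
    """Smallest true effect a two-sided z-test detects with the given power (normal approximation)."""
    z = NormalDist()  # standard normal
    return (z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)) * se

mde_simple = minimum_detectable_effect(0.3)  # SE of the simple difference in means
mde_cuped = minimum_detectable_effect(0.2)   # SE of the CUPED estimator

# SE scales with 1/sqrt(n), so CUPED reaches the simple estimator's SE (0.3)
# with only (0.2 / 0.3)^2 of the sample size
sample_ratio = (0.2 / 0.3) ** 2

print(f"MDE simple: {mde_simple:.3f}, MDE CUPED: {mde_cuped:.3f}, sample ratio: {sample_ratio:.2f}")
# prints: MDE simple: 0.840, MDE CUPED: 0.560, sample ratio: 0.44
```

Both bullet points above show up here: a smaller detectable effect at the same sample size, or the same detectable effect with fewer users.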

Can we improve the power of our A/B test? Yes, with CUPED (among other methods).

CUPED

The idea of CUPED is the following. Suppose you are running an A/B test, Y is the outcome of interest (revenue in our example), and the binary variable D indicates whether an individual has been treated (ad_campaign in our example).

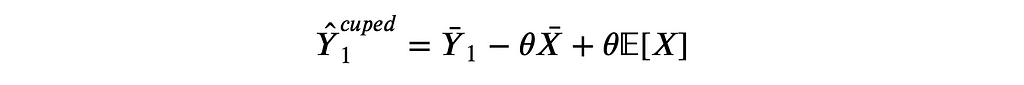

Suppose you have access to another random variable X that is not affected by the treatment and has known expectation 𝔼[X]. Then define

$$\hat{Y}^{cuped}_1 = \bar{Y} - \theta\big(\bar{X} - \mathbb{E}[X]\big)$$

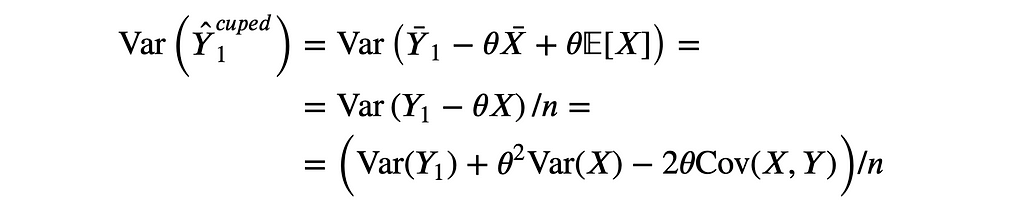

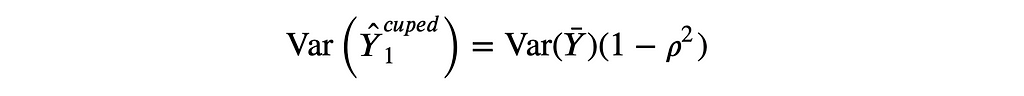

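where θ is a scalar. This is an unbiased estimator of 𝔼[Y], since in expectation the last two terms cancel out. Its variance, however, depends on θ:

$$\text{Var}\big(\hat{Y}^{cuped}_1\big) = \text{Var}\big(\bar{Y} - \theta\bar{X}\big) = \frac{1}{n}\Big(\text{Var}(Y) + \theta^2\,\text{Var}(X) - 2\theta\,\text{Cov}(Y, X)\Big)$$

where n is the number of observations.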

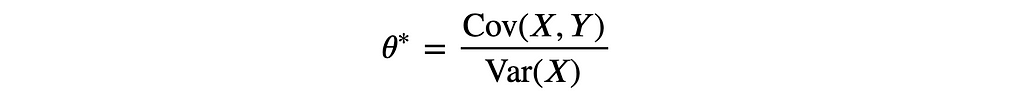

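Note that the variance of Ŷ₁ᶜᵘᵖᵉᵈ is minimized for

$$\theta^* = \frac{\text{Cov}(Y, X)}{\text{Var}(X)}$$

which is the OLS slope coefficient of a linear regression of Y on X. Substituting θ* into the formula for the variance of Ŷ₁ᶜᵘᵖᵉᵈ, we obtain

$$\text{Var}\big(\hat{Y}^{cuped}_1\big) = \frac{1}{n}\Big(\text{Var}(Y) - \frac{\text{Cov}(Y, X)^2}{\text{Var}(X)}\Big) = \text{Var}(\bar{Y})\,\big(1 - \rho^2\big)$$

where ρ is the correlation between Y and X. Therefore, the higher the correlation between Y and X, the larger the variance reduction achieved by CUPED.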
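The 1 − ρ² factor can be checked with a quick Monte Carlo. The sketch assumes a hypothetical setup in which (X, Y) are bivariate normal with unit variances, correlation ρ = 0.8 and known 𝔼[X] = 0, so that θ* = ρ. It compares the sampling variance of the plain mean Ȳ with that of the CUPED-adjusted mean across many simulated samples.

```python
import numpy as np

# Hypothetical setup: (X, Y) bivariate normal, unit variances, correlation rho, known E[X] = 0
rho, n, reps = 0.8, 200, 4000
rng = np.random.default_rng(1)
cov = np.array([[1.0, rho], [rho, 1.0]])
theta_star = rho  # Cov(Y, X) / Var(X) = rho when both variances are 1

# reps independent samples of size n; the last axis holds (X, Y)
draws = rng.multivariate_normal([0.0, 0.0], cov, size=(reps, n))
x_bar = draws[..., 0].mean(axis=1)
y_bar = draws[..., 1].mean(axis=1)

# CUPED-adjusted mean using the known expectation E[X] = 0
y_cuped = y_bar - theta_star * (x_bar - 0.0)

ratio = y_cuped.var() / y_bar.var()
print(f"simulated variance ratio: {ratio:.3f}, theory 1 - rho^2: {1 - rho**2:.3f}")
```

With ρ = 0.8 the theoretical ratio is 0.36, i.e., roughly a 64% variance reduction, while the adjusted mean stays centered on 𝔼[Y] = 0.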

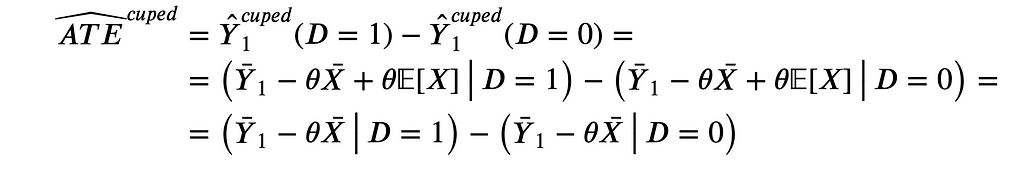

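We can then estimate the average treatment effect as the difference in the transformed outcome between the treatment and control groups:

$$\hat{\tau}^{cuped} = \Big(\bar{Y}^{(D=1)} - \theta\big(\bar{X}^{(D=1)} - \mathbb{E}[X]\big)\Big) - \Big(\bar{Y}^{(D=0)} - \theta\big(\bar{X}^{(D=0)} - \mathbb{E}[X]\big)\Big)$$

Note that 𝔼[X] cancels out when taking the difference. Therefore, it is sufficient to compute, in each group,

$$\hat{Y}^{cuped}_1 = \bar{Y} - \theta\,\bar{X}$$

This is not an unbiased estimator of 𝔼[Y], but it still delivers an unbiased estimator of the average treatment effect.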
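A small numerical illustration of why 𝔼[X] can be dropped, on hypothetical data where θ = 0.9 and 𝔼[X] = 5 are assumed known. Dropping 𝔼[X] shifts the transformed outcome by the same constant θ𝔼[X] in both groups, so its level is off while the treatment-control difference is unchanged.

```python
import numpy as np

# Hypothetical data; E_X plays the role of the known expectation E[X]
rng = np.random.default_rng(2)
n = 500
d = rng.integers(0, 2, size=n)                  # treatment dummy
x = rng.normal(5.0, 1.0, size=n)                # covariate unaffected by treatment
y = 3 + 0.9 * x + 2.0 * d + rng.normal(size=n)  # outcome with a true effect of 2
theta, E_X = 0.9, 5.0

def diff_in_means(outcome, d):
    """Treated mean minus control mean."""
    return outcome[d == 1].mean() - outcome[d == 0].mean()

ate_with_ex = diff_in_means(y - theta * (x - E_X), d)  # uses E[X]
ate_without_ex = diff_in_means(y - theta * x, d)      # drops E[X]

# Dropping E[X] shifts the level of the transformed outcome by theta * E[X] ...
level_gap = (y - theta * (x - E_X)).mean() - (y - theta * x).mean()
# ... but the shift is common to both groups, so the ATE estimate is the same
print(np.isclose(ate_with_ex, ate_without_ex), round(level_gap, 6))
```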

Optimal X

What is the optimal choice for the control variable X?

We know that X should have the following properties:

- not affected by the treatment

- as correlated with Y₁ as possible

The authors of the paper suggest using the pre-treatment outcome Y₀, since in practice it delivers the largest variance reduction.

Therefore, in practice, we can compute the CUPED estimate of the average treatment effect as follows:

- Regress Y₁ on Y₀ and estimate θ̂

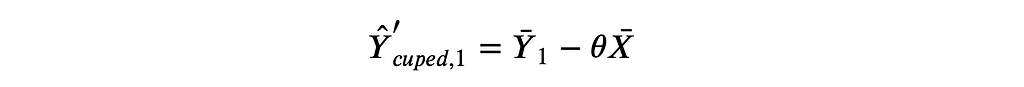

- Compute Ŷ₁ᶜᵘᵖᵉᵈ = Y̅₁ − θ̂ Y̅₀

- Compute the difference in Ŷ₁ᶜᵘᵖᵉᵈ between the treatment and control groups

Equivalently, we can compute Ŷ₁ᶜᵘᵖᵉᵈ at the individual level and then regress it on the treatment dummy variable D.
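The three steps can be sketched in NumPy as follows, on hypothetical data rather than the post's `dgp_cuped()`. `cuped_ate` is an illustrative helper, not part of any library. θ̂ is computed as Cov(Y₁, Y₀)/Var(Y₀), which equals the OLS slope of Y₁ on Y₀ with an intercept. As in the post's code, Y₀ is centered at the individual level, which does not change the treatment-control difference.

```python
import numpy as np

def cuped_ate(y1, y0, d):
    """Sketch of the three CUPED steps with the pre-treatment outcome y0 as X."""
    # Step 1: theta_hat = OLS slope of y1 on y0 (with intercept) = Cov(y1, y0) / Var(y0)
    theta_hat = np.cov(y1, y0)[0, 1] / np.var(y0, ddof=1)
    # Step 2: individual-level transformed outcome (centering y0 keeps the mean of y1)
    y1_cuped = y1 - theta_hat * (y0 - y0.mean())
    # Step 3: difference in means of the transformed outcome between groups
    ate = y1_cuped[d == 1].mean() - y1_cuped[d == 0].mean()
    return ate, theta_hat, y1_cuped

# Hypothetical data: a persistent individual effect u makes y0 predictive of y1
rng = np.random.default_rng(3)
n = 1000
d = rng.integers(0, 2, size=n)
u = rng.normal(0, 2, size=n)
y0 = 10 + u + rng.normal(size=n)
y1 = 10 + u + 2.0 * d + rng.normal(size=n)

ate, theta_hat, y1_cuped = cuped_ate(y1, y0, d)

# Equivalent route: regress the transformed outcome on a constant and D
X = np.column_stack([np.ones(n), d])
ate_ols = np.linalg.lstsq(X, y1_cuped, rcond=None)[0][1]
print(f"theta_hat={theta_hat:.3f}, CUPED ATE={ate:.3f}, via OLS={ate_ols:.3f}")
```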

Back To The Data

Let’s compute the CUPED estimate for the treatment effect, one step at a time. First, let’s estimate θ.

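```python
# theta_hat: slope of the OLS regression of post-treatment on pre-treatment revenue
# (select by label; positional params[1] is deprecated in recent pandas)
theta = smf.ols('revenue1 ~ revenue0', data=df).fit().params['revenue0']
```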

Now we can compute the transformed outcome Ŷ₁ᶜᵘᵖᵉᵈ.

```python
df['revenue1_cuped'] = df['revenue1'] - theta * (df['revenue0'] - np.mean(df['revenue0']))
```

Lastly, we estimate the treatment effect as a difference in means, with the transformed outcome Ŷ₁ᶜᵘᵖᵉᵈ.

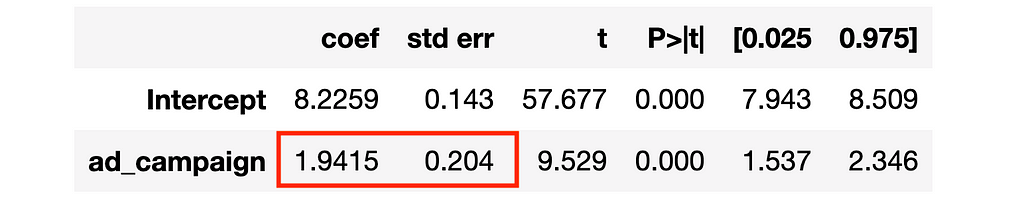

```python
smf.ols('revenue1_cuped ~ ad_campaign', data=df).fit().summary().tables[1]
```

The standard error is 33% smaller (0.2 vs 0.3)!

Equivalent Formulation

An alternative but algebraically equivalent way of obtaining the CUPED estimate is the following:

- Regress Y₁ on Y₀ and compute the residuals Ỹ₁

- Compute Ŷ₁ᶜᵘᵖᵉᵈ = Ỹ₁ + Y̅₁

- Compute the difference in Ŷ₁ᶜᵘᵖᵉᵈ between the treatment and control groups

The last step is the same as before, but the first two are different. This procedure is called partialling out, and the algebraic equivalence is guaranteed by the Frisch-Waugh-Lovell theorem.
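A quick numerical check of the equivalence on hypothetical data, using NumPy instead of statsmodels. In this two-variable case it also follows directly from the OLS intercept identity α̂ = Y̅₁ − θ̂Y̅₀: the residual plus Y̅₁ equals Y₁ − θ̂(Y₀ − Y̅₀), which is exactly the first formulation.

```python
import numpy as np

# Hypothetical data (not dgp_cuped())
rng = np.random.default_rng(4)
n = 1000
d = rng.integers(0, 2, size=n)
u = rng.normal(0, 2, size=n)
y0 = 10 + u + rng.normal(size=n)
y1 = 10 + u + 2.0 * d + rng.normal(size=n)

# OLS of y1 on a constant and y0
X = np.column_stack([np.ones(n), y0])
coef = np.linalg.lstsq(X, y1, rcond=None)[0]   # [intercept, theta_hat]
theta_hat = coef[1]

# First formulation: subtract theta_hat times the demeaned pre-treatment outcome
y1_cuped = y1 - theta_hat * (y0 - y0.mean())
# Partialling out: residuals of y1 on y0, plus the mean of y1
y1_tilde = (y1 - X @ coef) + y1.mean()

print(np.allclose(y1_cuped, y1_tilde))  # True
```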

Let’s check if we indeed obtain the same result.

```python
df['revenue1_tilde'] = smf.ols('revenue1 ~ revenue0', data=df).fit().resid + np.mean(df['revenue1'])
smf.ols('revenue1_tilde ~ ad_campaign', data=df).fit().summary().tables[1]
```

Yes! The regression tables are identical.

CUPED vs Other Methods

CUPED seems to be a very powerful procedure, but it is reminiscent of at least a couple of other methods:

- Autoregression or regression with control variables

- Difference-in-Differences

Are these methods the same or is there a difference? Let’s check.

Autoregression

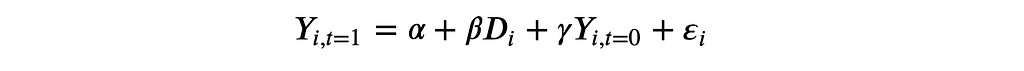

The first question that came to my mind when I first saw CUPED was: “is CUPED just the simple difference with an additional control variable?” Or, equivalently, is CUPED equivalent to running the following regression

$$Y_{i,1} = \alpha + \beta D_i + \gamma Y_{i,0} + \varepsilon_i$$

and estimating γ via least squares?

```python
smf.ols('revenue1 ~ revenue0 + ad_campaign', data=df).fit().summary().tables[1]
```

The estimated coefficient is very similar to the one we obtained with CUPED, and so is the standard error. However, they are not exactly the same.
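One way to see where the small difference comes from: both estimators can be written as (treated − control gap in Y₁) − c × (treated − control gap in Y₀). CUPED uses c = θ̂ from the regression of Y₁ on Y₀ alone, while the autoregression uses c = γ̂, the coefficient on Y₀ estimated jointly with the treatment dummy. The sketch below checks this identity on hypothetical data; `ols` is a small illustrative helper built on `numpy.linalg.lstsq`.

```python
import numpy as np

def ols(y, *cols):
    """OLS coefficients of y on a constant and the given columns."""
    X = np.column_stack([np.ones(len(y)), *cols])
    return np.linalg.lstsq(X, y, rcond=None)[0]

# Hypothetical data with perfect randomization and a true effect of 2
rng = np.random.default_rng(5)
n = 1000
d = rng.integers(0, 2, size=n)
u = rng.normal(0, 2, size=n)
y0 = 10 + u + rng.normal(size=n)
y1 = 10 + u + 2.0 * d + rng.normal(size=n)

# Treated minus control gaps in post- and pre-treatment means
dy1 = y1[d == 1].mean() - y1[d == 0].mean()
dy0 = y0[d == 1].mean() - y0[d == 0].mean()

# CUPED: theta from y1 ~ y0 alone, then a difference in means
theta_hat = ols(y1, y0)[1]
tau_cuped = ols(y1 - theta_hat * (y0 - y0.mean()), d)[1]

# Autoregression: y1 ~ d + y0, gamma estimated jointly with the treatment effect
_, tau_ar, gamma_hat = ols(y1, d, y0)

print(f"theta_hat={theta_hat:.4f}  gamma_hat={gamma_hat:.4f}")
print(f"CUPED={tau_cuped:.4f}  autoregression={tau_ar:.4f}")
```

Because θ̂ and γ̂ differ slightly in any finite sample, so do the two treatment-effect estimates.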

Diff-in-Diffs

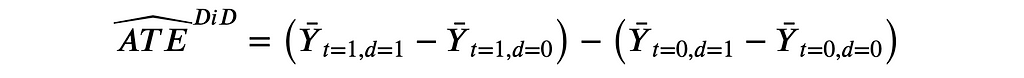

The second question that came to my mind was “is CUPED just difference-in-differences?”. Difference-in-Differences (or diff-in-diffs, or DiD) is an estimator that computes the treatment effect as a double-difference instead of a single one: pre-post and treatment-control instead of just treatment-control.

This method was introduced in the 19th century to study the causes of a cholera epidemic in London. The main advantage of diff-in-diffs is that it allows us to estimate the average treatment effect even when randomization is not perfect and the treatment and control groups are not comparable. The key assumption is that, in the absence of treatment, the difference between the treatment and control groups would be constant over time. By taking a double difference, we difference it out.
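A minimal sketch of the double difference with group means, under a hypothetical DGP (an assumption, not the post's) in which treated units start out 1.0 higher than control units. The simple post-treatment difference picks up both the effect and the pre-existing gap. The double difference removes the gap because it is constant over time.

```python
import numpy as np

# Hypothetical DGP with imperfect randomization: treated units start `gap` higher
rng = np.random.default_rng(6)
n, gap, effect = 4000, 1.0, 2.0
d = rng.integers(0, 2, size=n)
u = rng.normal(0, 2, size=n)                               # persistent heterogeneity
y0 = 10 + gap * d + u + rng.normal(size=n)                 # before the campaign
y1 = 10 + gap * d + effect * d + u + rng.normal(size=n)    # after the campaign

treated, control = d == 1, d == 0
simple_diff = y1[treated].mean() - y1[control].mean()      # effect + gap
did = (y1[treated].mean() - y0[treated].mean()) - (y1[control].mean() - y0[control].mean())

print(f"simple difference: {simple_diff:.2f}, diff-in-diffs: {did:.2f}")
```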

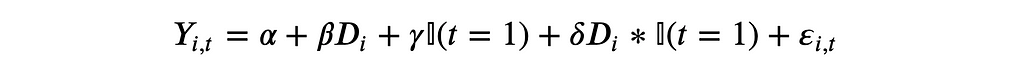

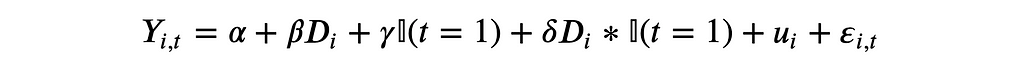

Let’s check how diff-in-diffs works empirically. The most common way to compute the diff-in-diffs estimator is to first reshape the data into a long (panel) format, where one observation is an individual i at time period t, and then to regress the outcome Y on the full interaction between the post-treatment dummy t and the treatment dummy D:

$$Y_{i,t} = \alpha + \beta D_i + \gamma\, t + \delta\,(D_i \times t) + \varepsilon_{i,t}$$

The estimator of the average treatment effect is δ, the coefficient on the interaction term.
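Why the interaction coefficient? With a constant, D, t and D × t, the regression is saturated: it has one parameter per (group, period) cell, so its fitted values are the four cell means and δ̂ is exactly the double difference of means. A NumPy sketch on hypothetical data, building the long format by hand instead of with pandas:

```python
import numpy as np

# Hypothetical data (not dgp_cuped())
rng = np.random.default_rng(7)
n = 1000
d = rng.integers(0, 2, size=n)
u = rng.normal(0, 2, size=n)
y0 = 10 + u + rng.normal(size=n)
y1 = 10 + u + 2.0 * d + rng.normal(size=n)

# Long format: each individual appears twice, once per period t in {0, 1}
y = np.concatenate([y0, y1])
t = np.repeat([0, 1], n)
D = np.tile(d, 2)

# Regress y on a constant, D, t and the interaction D * t
X = np.column_stack([np.ones(2 * n), D, t, D * t])
alpha, beta, gamma, delta = np.linalg.lstsq(X, y, rcond=None)[0]

double_diff = (y1[d == 1].mean() - y0[d == 1].mean()) - (y1[d == 0].mean() - y0[d == 0].mean())
print(np.isclose(delta, double_diff))
```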

```python
df_long = pd.wide_to_long(df, stubnames='revenue', i='i', j='t').reset_index()
df_long.head()
```

The long dataset is now indexed by individuals i and time t. We can now run the diff-in-diffs regression.

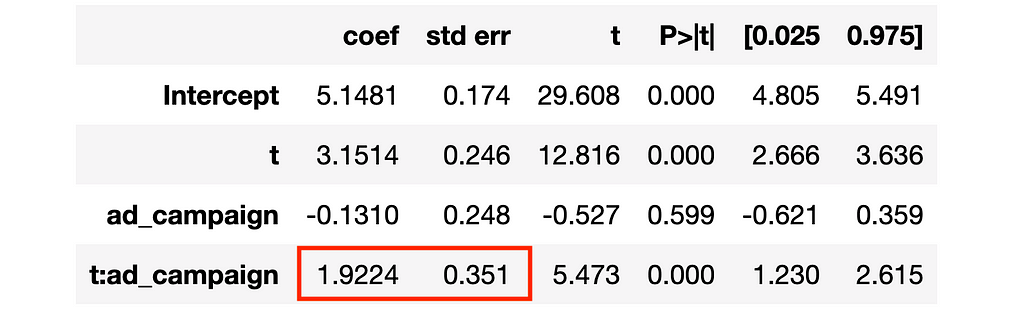

```python
smf.ols('revenue ~ t * ad_campaign', data=df_long).fit().summary().tables[1]
```

The estimated coefficient is close to the true value, 2, but the standard errors are larger than those obtained with all other methods (0.35 vs 0.2). What did we miss? We didn’t cluster the standard errors!

I won’t go into detail here on what standard error clustering means, but the intuition is the following. By default, the statsmodels package computes standard errors assuming that outcomes are independent across observations. This assumption is unlikely to hold in this setting, where we observe the same individuals over time and are trying to exploit this information. Clustering allows the outcome variable to be correlated within clusters. In our case, it makes sense (even without knowing the data generating process) to cluster the standard errors at the individual level, allowing the outcome of individual i to be correlated over time.

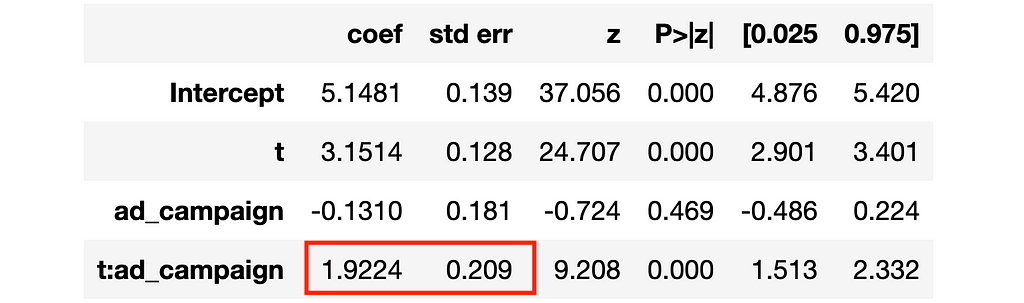

```python
# Same regression, with standard errors clustered by individual i
(smf.ols('revenue ~ t * ad_campaign', data=df_long)
    .fit(cov_type='cluster', cov_kwds={'groups': df_long['i']})
    .summary().tables[1])
```

Clustering the standard errors at the individual level, we obtain standard errors comparable to the previous estimates (∼0.2).
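To make the clustering intuition concrete, here is a hand-rolled sketch of classical versus cluster-robust (sandwich) standard errors for the diff-in-diffs regression on a hypothetical panel. The cluster version sums the scores Xᵢeᵢ within each individual before squaring them, so the correlation between an individual's two observations is taken into account. `ols_with_naive_and_cluster_se` is an illustrative helper. It applies no small-sample correction, whereas statsmodels applies one by default, so the numbers will not match statsmodels exactly.

```python
import numpy as np

def ols_with_naive_and_cluster_se(X, y, groups):
    """OLS coefficients with classical and cluster-robust (no small-sample correction) SEs."""
    n, k = X.shape
    bread = np.linalg.inv(X.T @ X)
    coef = bread @ (X.T @ y)
    resid = y - X @ coef
    # Classical SEs: errors assumed independent and homoskedastic across all rows
    sigma2 = resid @ resid / (n - k)
    se_naive = np.sqrt(np.diag(sigma2 * bread))
    # Cluster-robust SEs: sum the scores X_i * e_i within each cluster before squaring
    _, idx = np.unique(groups, return_inverse=True)
    cluster_scores = np.zeros((idx.max() + 1, k))
    np.add.at(cluster_scores, idx, X * resid[:, None])
    meat = cluster_scores.T @ cluster_scores
    se_cluster = np.sqrt(np.diag(bread @ meat @ bread))
    return coef, se_naive, se_cluster

# Hypothetical panel: n individuals observed at t = 0 and t = 1
rng = np.random.default_rng(8)
n = 1000
d = rng.integers(0, 2, size=n)
u = rng.normal(0, 2, size=n)   # persistent shock -> within-individual correlation
y = np.concatenate([10 + u + rng.normal(size=n),
                    10 + u + 2.0 * d + rng.normal(size=n)])
t = np.repeat([0, 1], n)
D = np.tile(d, 2)
ids = np.tile(np.arange(n), 2)  # individual identifier = cluster

X = np.column_stack([np.ones(2 * n), D, t, D * t])
coef, se_naive, se_cluster = ols_with_naive_and_cluster_se(X, y, ids)
print(f"delta={coef[3]:.3f}, naive SE={se_naive[3]:.3f}, clustered SE={se_cluster[3]:.3f}")
```

In this sketch, as in the post, clustering shrinks the standard error of δ̂. The persistent shock u cancels in each individual's change over time, but the naive formula treats it as independent noise in every row.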

Comparison

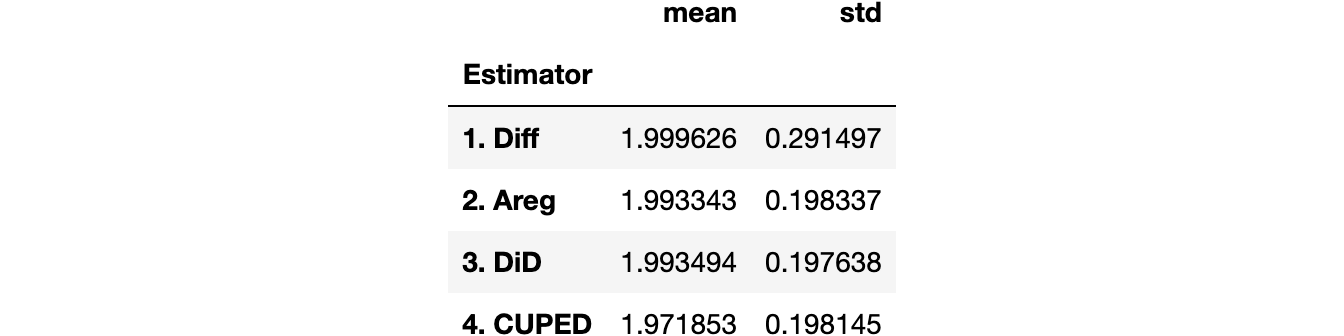

Which method is better? From what we have seen so far, all methods seem to deliver an accurate estimate, but the simple difference has a larger standard error.

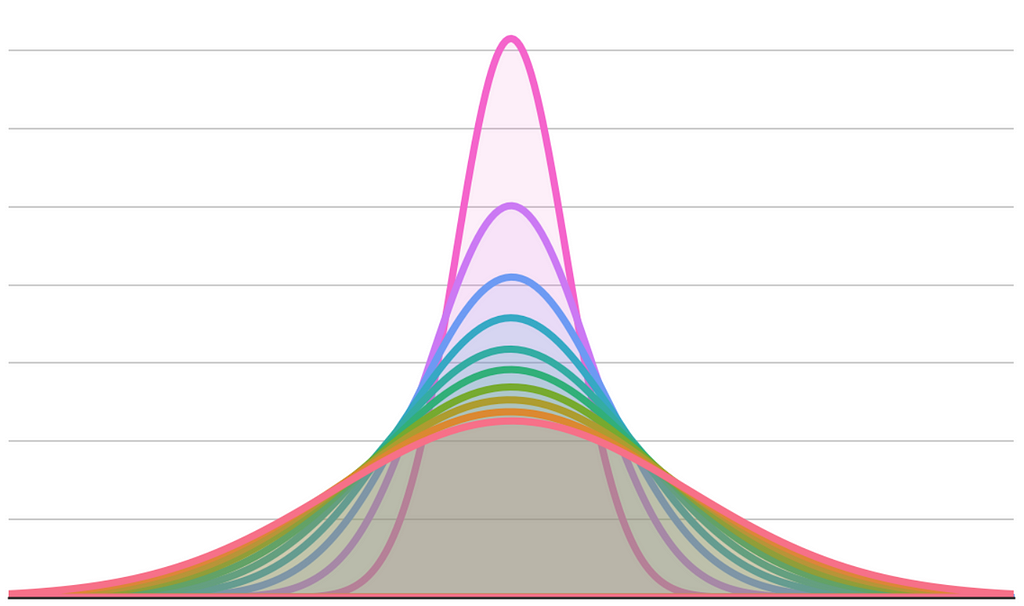

Let’s now compare all the methods we have seen so far via simulation. We simulate the data generating process dgp_cuped() 1000 times and save the estimated coefficient of each of the following methods:

- Simple difference

- Autoregression

- Diff-in-diffs

- CUPED

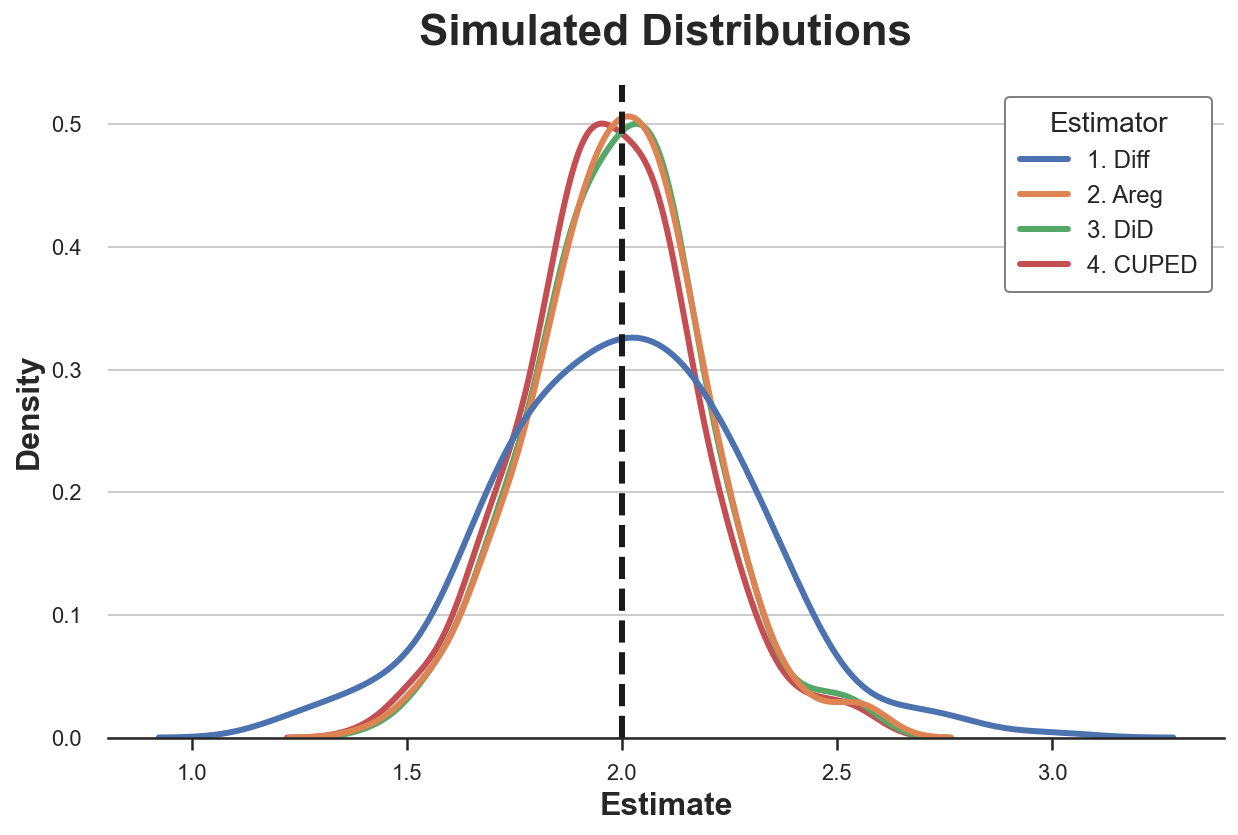

Let’s plot the distribution of the estimated parameters.

```python
sns.kdeplot(data=results, x="Estimate", hue="Estimator");
plt.axvline(x=2, c='k', ls='--');
plt.title('Simulated Distributions');
```

We can also tabulate the simulated mean and standard deviation of each estimator.

```python
results.groupby('Estimator').agg(mean=("Estimate", "mean"), std=("Estimate", "std"))
```

All estimators seem unbiased: their average values are all close to the true value of 2. Moreover, all estimators have a very similar standard deviation, apart from the simple difference estimator, whose standard deviation is larger!
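The author's `simulate()` helper is not shown in the post, so here is a hypothetical stand-in in NumPy. `simulate_estimators` and its DGP are assumptions, not the author's code. The DGP includes a persistent individual effect u, so the pre-treatment outcome predicts the post-treatment one. The function records the four estimates in each of 500 simulated experiments. CUPED is computed as (post-period gap) − θ̂ × (pre-period gap), which equals the difference in means of the transformed outcome. Diff-in-diffs is the same expression with θ = 1.

```python
import numpy as np

def simulate_estimators(reps=500, n=1000, effect=2.0, seed=0):
    """Mean and std of four ATE estimators across simulated experiments (hypothetical DGP)."""
    rng = np.random.default_rng(seed)
    est = {"diff": [], "autoregression": [], "did": [], "cuped": []}
    for _ in range(reps):
        d = rng.integers(0, 2, size=n)                      # randomized treatment dummy
        u = rng.normal(0, 2, size=n)                        # persistent individual effect
        y0 = 10 + u + rng.normal(size=n)                    # pre-treatment outcome
        y1 = 10 + u + effect * d + rng.normal(size=n)       # post-treatment outcome
        treated, control = d == 1, d == 0
        dy1 = y1[treated].mean() - y1[control].mean()       # post-period gap
        dy0 = y0[treated].mean() - y0[control].mean()       # pre-period gap
        # Simple difference in post-treatment means
        est["diff"].append(dy1)
        # Autoregression: coefficient on d in y1 ~ 1 + d + y0
        X = np.column_stack([np.ones(n), d, y0])
        est["autoregression"].append(np.linalg.lstsq(X, y1, rcond=None)[0][1])
        # Diff-in-diffs: change over time, treated minus control
        est["did"].append(dy1 - dy0)
        # CUPED: pooled theta from y1 ~ y0, then difference in means of the transformed outcome
        theta = np.cov(y1, y0)[0, 1] / np.var(y0, ddof=1)
        est["cuped"].append(dy1 - theta * dy0)
    return {name: (np.mean(v), np.std(v)) for name, v in est.items()}

summary = simulate_estimators()
for name, (mean, std) in summary.items():
    print(f"{name:>15}: mean={mean:.3f}, std={std:.3f}")
```

Under this DGP, the qualitative pattern matches the post's table: all four means sit near 2, and only the simple difference has a noticeably wider spread.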

Always Identical?

Are the estimators always identical, or is there some difference among them?

We could check many different departures from the original data generating process. For simplicity, I will consider only one here: imperfect randomization. Other tweaks to the data generating process that I considered are:

- pre-treatment missing values

- additional covariates / control variables

- multiple pre-treatment periods

- heterogeneous treatment effects

and combinations of them. However, I found imperfect randomization to be the most informative example.

Suppose now that randomization was not perfect and the two groups are not identical. In particular, if the data generating process is

assume that β≠0. Also, note that we have persistent individual-level heterogeneity because the unobservable uᵢ does not change over time (it is not indexed by t).

```python
results_beta1 = simulate(dgp=dgp_cuped(beta=1))
```

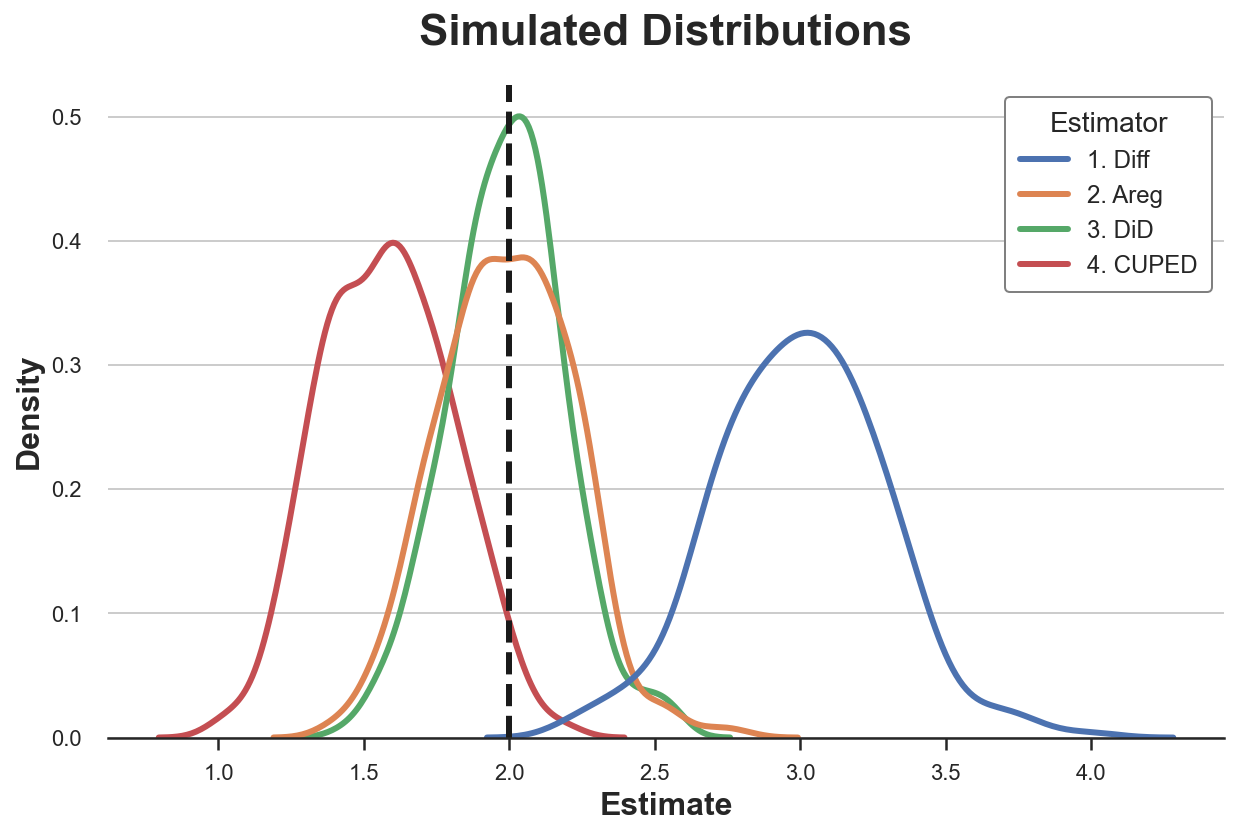

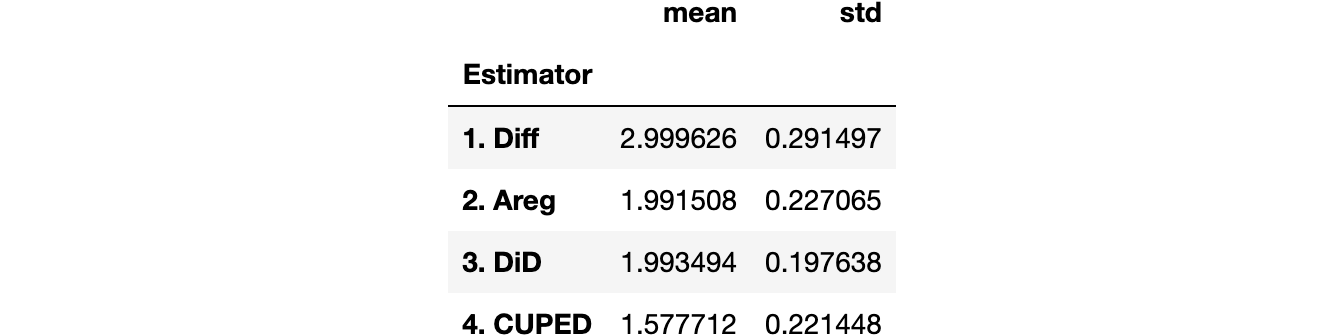

Let’s plot the distribution of the estimated parameters.

```python
sns.kdeplot(data=results_beta1, x="Estimate", hue="Estimator");
plt.axvline(x=2, c='k', ls='--');
plt.title('Simulated Distributions');
```

```python
results_beta1.groupby('Estimator').agg(mean=("Estimate", "mean"), std=("Estimate", "std"))
```

With imperfect treatment assignment, both difference-in-differences and autoregression are unbiased for the true treatment effect; however, diff-in-diffs is more efficient. CUPED and the simple difference, instead, are both biased. Why?

Diff-in-diffs explicitly controls for systematic differences between the treatment and control groups that are constant over time. This is exactly what this estimator was built for. Autoregression performs some sort of matching on the additional covariate, Y₀, effectively controlling for these systematic differences, but less efficiently (if you want to know more, I have written related posts on control variables). CUPED controls for persistent heterogeneity at the individual level, but not at the treatment-assignment level. Lastly, the simple difference estimator does not control for anything.
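To make the CUPED part of this explanation concrete, here is a sketch under a hypothetical DGP. It is an assumption, not the author's `dgp_cuped(beta=1)`: treated units start β = 1 higher, and the individual heterogeneity u is persistent. Only CUPED and diff-in-diffs are compared. Both can be written as (post-period gap) − θ × (pre-period gap). Diff-in-diffs sets θ = 1 and removes the whole baseline gap. CUPED's pooled θ̂ is below 1 in this setup, so roughly (1 − θ̂)β of the gap leaks into its estimate.

```python
import numpy as np

def cuped_vs_did(reps=500, n=1000, beta=1.0, effect=2.0, seed=0):
    """Average CUPED and diff-in-diffs estimates when treated units start beta higher (hypothetical DGP)."""
    rng = np.random.default_rng(seed)
    cuped, did, thetas = [], [], []
    for _ in range(reps):
        d = rng.integers(0, 2, size=n)
        u = rng.normal(0, 2, size=n)                              # persistent heterogeneity
        y0 = 10 + beta * d + u + rng.normal(size=n)               # baseline gap of beta
        y1 = 10 + beta * d + effect * d + u + rng.normal(size=n)  # same gap plus the effect
        dy1 = y1[d == 1].mean() - y1[d == 0].mean()   # post-period gap
        dy0 = y0[d == 1].mean() - y0[d == 0].mean()   # pre-period gap
        theta = np.cov(y1, y0)[0, 1] / np.var(y0, ddof=1)  # pooled CUPED theta
        cuped.append(dy1 - theta * dy0)   # removes only theta times the baseline gap
        did.append(dy1 - dy0)             # same formula with theta = 1
        thetas.append(theta)
    return np.mean(cuped), np.mean(did), np.mean(thetas)

cuped_mean, did_mean, theta_mean = cuped_vs_did()
print(f"CUPED: {cuped_mean:.3f}  DiD: {did_mean:.3f}  mean theta: {theta_mean:.3f}")
print(f"approximate CUPED bias (1 - theta) * beta: {(1 - theta_mean) * 1.0:.3f}")
```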

Conclusion

In this post, I have analyzed CUPED, an estimator of average treatment effects in A/B testing that is very popular in the industry. The key idea is that, by exploiting pre-treatment data, CUPED can achieve a lower variance by controlling for individual-level variation that is persistent over time. We have also seen that CUPED is closely related, but not equivalent, to autoregression and difference-in-differences. The differences among the methods clearly emerge when randomization is imperfect.

An interesting avenue for future research is what happens when we have a lot of pre-treatment information, either in terms of time periods or observable characteristics. Scientists at Meta (Guo, Coey, Konutgan, Li, Schoener, and Goldman, 2021) have analyzed this problem in a recent paper that uses machine learning techniques to exploit this extra information efficiently. This approach is closely related to the Double/Debiased Machine Learning literature. If you are interested, I wrote two articles on the topic (part 1 and part 2), and I might write more in the future.

References

[1] A. Deng, Y. Xu, R. Kohavi, T. Walker, Improving the Sensitivity of Online Controlled Experiments by Utilizing Pre-Experiment Data (2013), WSDM.

[2] H. Xie, J. Aurisset, Improving the Sensitivity of Online Controlled Experiments: Case Studies at Netflix (2016), ACM SIGKDD.

[3] Y. Guo, D. Coey, M. Konutgan, W. Li, C. Schoener, M. Goldman, Machine Learning for Variance Reduction in Online Experiments (2021), NeurIPS.

[4] V. Chernozhukov, D. Chetverikov, M. Demirer, E. Duflo, C. Hansen, W. Newey, J. Robins, Double/debiased machine learning for treatment and structural parameters (2018), The Econometrics Journal.

[5] M. Bertrand, E. Duflo, S. Mullainathan, How Much Should We Trust Differences-In-Differences Estimates? (2004), The Quarterly Journal of Economics.

Related Articles

- Double Debiased Machine Learning (part 1)

- Double Debiased Machine Learning (part 2)

- Understanding The Frisch-Waugh-Lovell Theorem

- Understanding Contamination Bias

- DAGs and Control Variables

Code

You can find the original Jupyter Notebook here:

Blog-Posts/cuped.ipynb at main · matteocourthoud/Blog-Posts

Thank you for reading!

I really appreciate it! 🤗 If you liked the post and would like to see more, consider following me. I post once a week on topics related to causal inference and data analysis. I try to keep my posts simple but precise, always providing code, examples, and simulations.

Also, a small disclaimer: I write to learn so mistakes are the norm, even though I try my best. Please, when you spot them, let me know. I also appreciate suggestions on new topics!

Understanding CUPED was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
