https://ift.tt/Bt5bF3i Many data scientists and ML engineers today use MLflow to manage their models. MLflow is an open-source platform tha...

Many data scientists and ML engineers today use MLflow to manage their models. MLflow is an open-source platform that enables users to govern all aspects of the ML lifecycle, including but not limited to experimentation, reproducibility, deployment, and model registry. A critical step during the development of ML models is the evaluation of their performance on novel datasets.

Motivation

Why Do We Evaluate Models?

Model evaluation is an integral part of the ML lifecycle. It enables data scientists to measure, interpret, and explain the performance of their models. It accelerates the model development timeframe by providing insights into how and why models are performing the way that they are performing. Especially as the complexity of ML models increases, being able to swiftly observe and understand the performance of ML models is essential in a successful ML development journey.

State of Model Evaluation in MLflow

Until now, users could evaluate the performance of their MLflow model of the python_function (pyfunc) model flavor through the mlflow.evaluate API, which supports the evaluation of both classification and regression models. It computes and logs a set of built-in task-specific performance metrics, model performance plots, and model explanations to the MLflow Tracking server.

To evaluate MLflow models against custom metrics not included in the built-in evaluation metric set, users would have to define a custom model evaluator plugin. This would involve creating a custom evaluator class that implements the ModelEvaluator interface, then registering an evaluator entry point as part of an MLflow plugin. This rigidity and complexity could be prohibitive for users.

According to an internal customer survey, 75% of respondents say they frequently or always use specialized, business-focused metrics in addition to basic ones like accuracy and loss. Data scientists often utilize these custom metrics as they are more descriptive of business objectives (e.g. conversion rate), and contain additional heuristics not captured by the model prediction itself.

In this blog, we introduce an easy and convenient way of evaluating MLflow models on user-defined custom metrics. With this functionality, a data scientist can easily incorporate this logic at the model evaluation stage and quickly determine the best-performing model without further downstream analysis

Usage

Built-in Metrics

MLflow bakes in a set of commonly used performance and model explainability metrics for both classifier and regressor models. Evaluating models on these metrics is straightforward. All we need is to create an evaluation dataset containing the test data and targets and make a call to mlflow.evaluate.

Depending on the type of model, different metrics are computed. Refer to the Default Evaluator behavior section under the API documentation of mlflow.evaluate for the most up-to-date information regarding built-in metrics.

Example

Below is a simple example of how a classifier MLflow model is evaluated with built-in metrics.

First, import the necessary libraries

import xgboost import shap import mlflow from sklearn.model_selection import train_test_split

Then, we split the dataset, fit the model, and create our evaluation dataset

# load UCI Adult Data Set; segment it into training and test sets X, y = shap.datasets.adult() X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=42) # train XGBoost model model = xgboost.XGBClassifier().fit(X_train, y_train) # construct an evaluation dataset from the test set eval_data = X_test eval_data["target"] = y_test

Finally, we start an MLflow run and call mlflow.evaluate

with mlflow.start_run() as run:

model_info = mlflow.sklearn.log_model(model, "model")

result = mlflow.evaluate(

model_info.model_uri,

eval_data,

targets="target",

model_type="classifier",

dataset_name="adult",

evaluators=["default"],

)

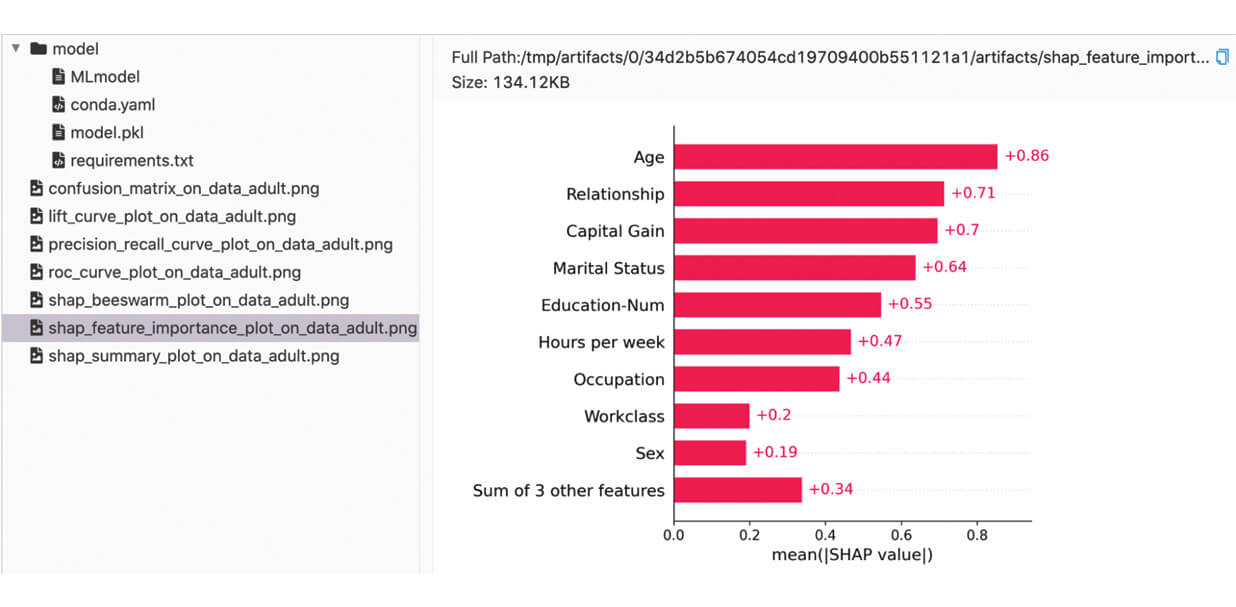

We can find the logged metrics and artifacts in the MLflow UI:

Custom Metrics

To evaluate a model against custom metrics, we simply pass a list of custom metric functions to the mlflow.evaluate API.

Function Definition Requirements

Custom metric functions should accept two required parameters and one optional parameter in the following order:

eval_df: a Pandas or Spark DataFrame containing apredictionand atargetcolumn.E.g. If the output of the model is a vector of three numbers, then the

eval_dfDataFrame would look something like:builtin_metrics: a dictionary containing the built-in metricsE.g. For a regressor model,

builtin_metricswould look something like:{ "example_count": 4128, "max_error": 3.815, "mean_absolute_error": 0.526, "mean_absolute_percentage_error": 0.311, "mean": 2.064, "mean_squared_error": 0.518, "r2_score": 0.61, "root_mean_squared_error": 0.72, "sum_on_label": 8520.4 }- (Optional)

artifacts_dir: path to a temporary directory that can be used by the custom metric function to temporarily store produced artifacts before logging to MLflow.E.g. Note that this will look different depending on the specific environment setup. For example, on MacOS it look something like this:

/var/folders/5d/lcq9fgm918l8mg8vlbcq4d0c0000gp/T/tmpizijtnvo

If file artifacts are stored elsewhere than

artifacts_dir, ensure that they persist until after the complete execution ofmlflow.evaluate.

Return Value Requirements

The function should return a dictionary representing the produced metrics and can optionally return a second dictionary representing the produced artifacts. For both dictionaries, the key for each entry represents the name of the corresponding metric or artifact.

While each metric must be a scalar, there are various ways to define artifacts:

- The path to an artifact file

- The string representation of a JSON object

- A pandas DataFrame

- A numpy array

- A matplotlib figure

- Other objects will be attempted to be pickled with the default protocol

Refer to the documentation of mlflow.evaluate for more in-depth definition details.

Example

Let’s walk through a concrete example that uses custom metrics. For this, we’ll create a toy model from the California Housing dataset.

from sklearn.linear_model import LinearRegression from sklearn.datasets import fetch_california_housing from sklearn.model_selection import train_test_split import matplotlib.pyplot as plt import numpy as np import mlflow import os

Then, setup our dataset and model

# loading the California housing dataset cali_housing = fetch_california_housing(as_frame=True) # split the dataset into train and test partitions X_train, X_test, y_train, y_test = train_test_split( cali_housing.data, cali_housing.target, test_size=0.2, random_state=123 ) # train the model lin_reg = LinearRegression().fit(X_train, y_train) # creating the evaluation dataframe eval_data = X_test.copy() eval_data["target"] = y_test

Here comes the exciting part: defining our custom metrics function!

def example_custom_metric_fn(eval_df, builtin_metrics, artifacts_dir):

"""

This example custom metric function creates a metric based on the ``prediction`` and

``target`` columns in ``eval_df`` and a metric derived from existing metrics in

``builtin_metrics``. It also generates and saves a scatter plot to ``artifacts_dir`` that

visualizes the relationship between the predictions and targets for the given model to a

file as an image artifact.

"""

metrics = {

"squared_diff_plus_one": np.sum(np.abs(eval_df["prediction"] - eval_df["target"] + 1) ** 2),

"sum_on_label_divided_by_two": builtin_metrics["sum_on_label"] / 2,

}

plt.scatter(eval_df["prediction"], eval_df["target"])

plt.xlabel("Targets")

plt.ylabel("Predictions")

plt.title("Targets vs. Predictions")

plot_path = os.path.join(artifacts_dir, "example_scatter_plot.png")

plt.savefig(plot_path)

artifacts = {"example_scatter_plot_artifact": plot_path}

return metrics, artifacts

Finally, to tie all of these together, we’ll start an MLflow run and call mlflow.evaluate:

with mlflow.start_run() as run:

mlflow.sklearn.log_model(lin_reg, "model")

model_uri = mlflow.get_artifact_uri("model")

result = mlflow.evaluate(

model=model_uri,

data=eval_data,

targets="target",

model_type="regressor",

dataset_name="cali_housing",

custom_metrics=[example_custom_metric_fn],

)

Logged custom metrics and artifacts can be found alongside the default metrics and artifacts. The red boxed regions show the logged custom metrics and artifacts on the run page.

Accessing Evaluation Results Programmatically

So far, we have explored evaluation results for both built-in and custom metrics in the MLflow UI. However, we can also access them programmatically through the EvaluationResult object returned by mlflow.evaluate. Let’s continue our custom metrics example above and see how we can access its evaluation results programmatically. (Assuming result is our EvaluationResult instance from here on).

We can access the set of computed metrics through the result.metrics dictionary containing both the name and scalar values of the metrics. The content of result.metrics should look something like this:

{

'example_count': 4128,

'max_error': 3.8147801844098375,

'mean_absolute_error': 0.5255457157103748,

'mean_absolute_percentage_error': 0.3109520331276797,

'mean_on_label': 2.064041664244185,

'mean_squared_error': 0.5180228655178677,

'r2_score': 0.6104546894797874,

'root_mean_squared_error': 0.7197380534040615,

'squared_diff_plus_one': 6291.3320597821585,

'sum_on_label': 8520.363989999996,

'sum_on_label_divided_by_two': 4260.181994999998

}

Similarly, the set of artifacts is accessible through the result.artifacts dictionary. The values of each entry is an EvaluationArtifact object. result.artifacts should look something like this:

{

'example_scatter_plot_artifact': ImageEvaluationArtifact(uri='some_uri/example_scatter_plot_artifact_on_data_cali_housing.png'),

'shap_beeswarm_plot': ImageEvaluationArtifact(uri='some_uri/shap_beeswarm_plot_on_data_cali_housing.png'),

'shap_feature_importance_plot': ImageEvaluationArtifact(uri='some_uri/shap_feature_importance_plot_on_data_cali_housing.png'),

'shap_summary_plot': ImageEvaluationArtifact(uri='some_uri/shap_summary_plot_on_data_cali_housing.png')

}

Example Notebooks

Underneath the Hood

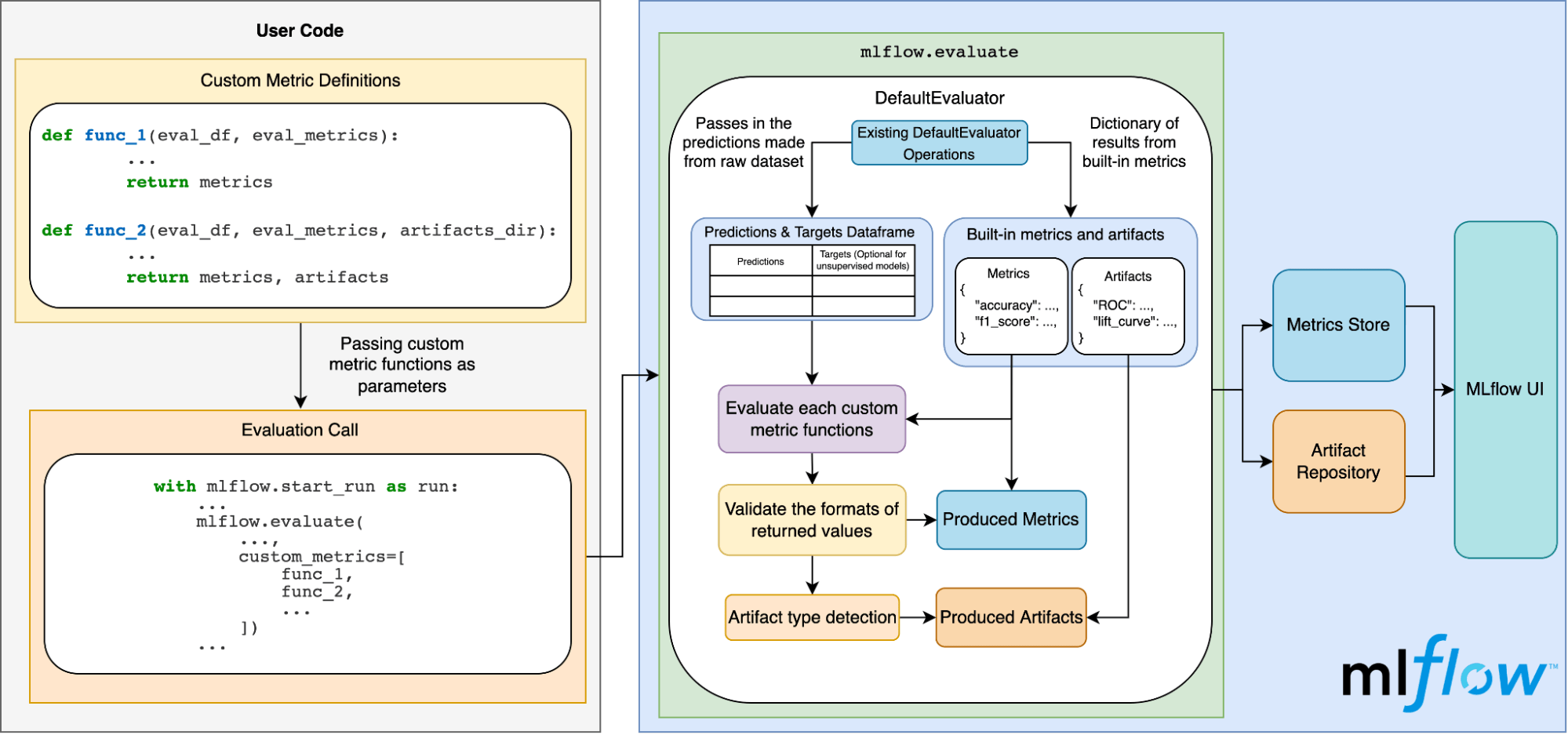

The diagram below illustrates how this all works under the hood:

Conclusion

In this blog post, we covered:

- The significance of model evaluation and what’s currently supported in MLflow.

- Why having an easy way for MLflow users to incorporate custom metrics into their MLflow models is important.

- How to evaluate models with default metrics.

- How to evaluate models with custom metrics.

- How MLflow handles model evaluation behind the scenes.

--

Try Databricks for free. Get started today.

The post Model Evaluation in MLflow appeared first on Databricks.

from Databricks https://ift.tt/a5Ee9bv

via RiYo Analytics

No comments