
PyTorch Lightning vs DeepSpeed vs FSDP vs FFCV vs …

Scale up PyTorch model training by mixing these techniques to compound their benefits using PyTorch Lightning

PyTorch Lightning has become one of the most widely used deep learning frameworks in the world by allowing users to focus on the research and not the engineering. Lightning users benefit from massive speed-ups to the training of their PyTorch models, resulting in huge cost savings.

PyTorch Lightning is more than a deep learning framework; it’s a platform that allows the latest and greatest tricks to play nicely together.

Lightning is a ̶f̶r̶a̶m̶e̶w̶o̶r̶k̶ platform

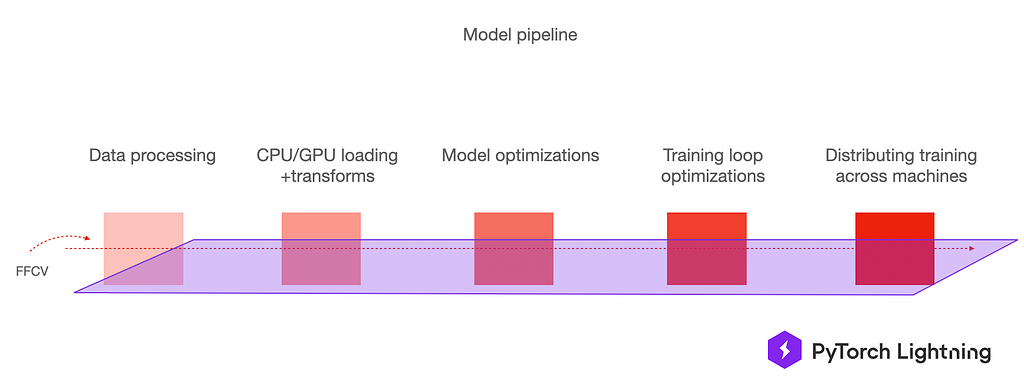

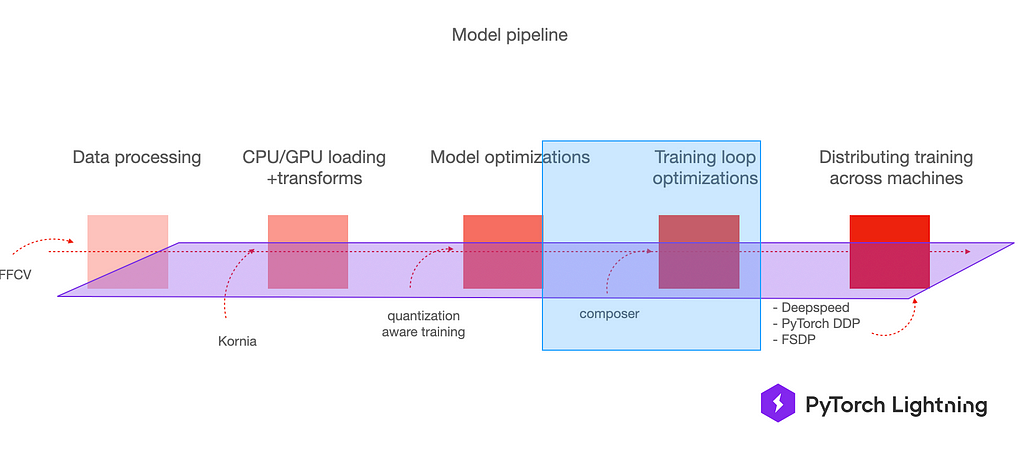

In the last few years, numerous libraries have launched to optimize individual stages of the training pipeline, often delivering 2–10x speedups on their own. With Lightning, these libraries can be used together to compound the efficiency gains for PyTorch models.

In this post, we’ll give a conceptual understanding of each of these techniques and show how they can be enabled in PyTorch Lightning.

Benchmarking PyTorch Lightning

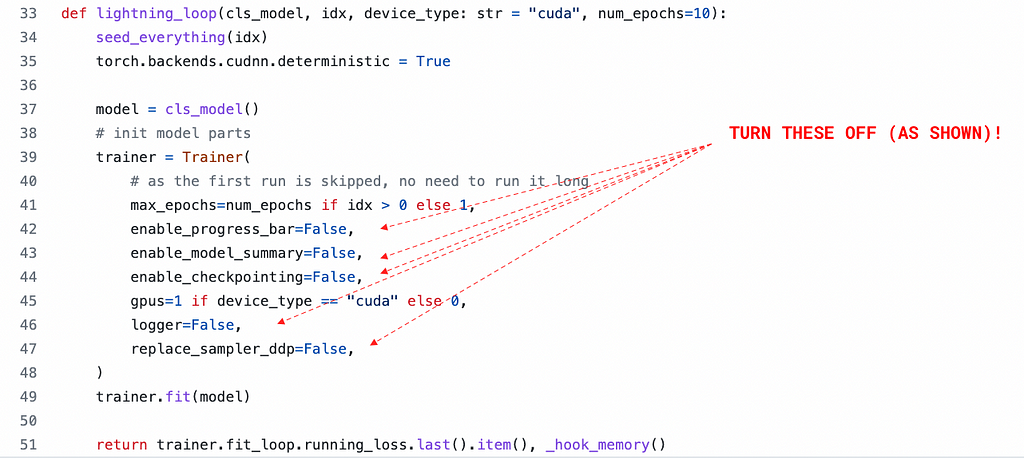

PyTorch Lightning gives users a ton of useful features by default, such as checkpointing, TensorBoard logging, and a progress bar. When benchmarking against PyTorch, these must be turned off (yes, PyTorch + TensorBoard is MUCH slower than PyTorch without it).

To compare PyTorch Lightning to PyTorch, disable the “extras”.
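A minimal sketch of those switches, assuming the Trainer flag names used from Lightning 1.5 onward (older releases used different names, so check the docs for your version). The dictionary collects the flags, and the hypothetical helper `bare_trainer_kwargs` merges them with any other settings you pass.

```python
from typing import Any, Dict

# Trainer flags that switch off Lightning's default "extras" so a timing run
# measures the training loop itself. Flag names assume Lightning 1.5 or later.
BARE_TRAINER_FLAGS: Dict[str, Any] = {
    "logger": False,                # no TensorBoard (or other) logger
    "enable_checkpointing": False,  # no automatic ModelCheckpoint callback
    "enable_progress_bar": False,   # no progress bar updates
    "enable_model_summary": False,  # no model summary printed at start
}


def bare_trainer_kwargs(**overrides: Any) -> Dict[str, Any]:
    """Hypothetical helper: extras disabled, caller's settings win on conflicts."""
    # Build a new dict so the shared BARE_TRAINER_FLAGS is never mutated.
    return {**BARE_TRAINER_FLAGS, **overrides}
```

With Lightning installed you would then build the trainer as `Trainer(**bare_trainer_kwargs(max_epochs=1))`; keeping the flags in one place makes it easy to apply identical settings to every benchmark run.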

Remember that PyTorch Lightning IS organized PyTorch, so it doesn’t really make sense to compare it against PyTorch. Every 👏 single 👏 pull request to PyTorch Lightning benchmarks against PyTorch to make sure the two don’t diverge in their solutions or speed (check out the code!)

Here’s an example of one of these benchmarks:
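A minimal sketch of the idea behind such a parity benchmark, assuming two hypothetical zero-argument callables that train the same model once with Lightning and once with plain PyTorch: time both several times and fail if Lightning’s average exceeds PyTorch’s by more than a tolerance. The helper names and the tolerance are illustrative and are not Lightning’s actual benchmark code.

```python
import statistics
import time
from typing import Callable


def mean_runtime(run: Callable[[], None], repeats: int = 3) -> float:
    """Average wall-clock seconds of `run` over `repeats` calls."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        run()
        timings.append(time.perf_counter() - start)
    return statistics.mean(timings)


def check_parity(lightning_run: Callable[[], None],
                 pytorch_run: Callable[[], None],
                 max_diff_s: float,
                 repeats: int = 3) -> float:
    """Raise if Lightning is slower than plain PyTorch by more than max_diff_s.

    Returns the measured difference in seconds (negative means Lightning was faster).
    """
    diff = mean_runtime(lightning_run, repeats) - mean_runtime(pytorch_run, repeats)
    if diff > max_diff_s:
        raise AssertionError(f"Lightning is {diff:.3f}s slower per run than PyTorch")
    return diff
```

In a real check, each callable would train for an epoch with the extras switched off, and `max_diff_s` would be chosen from the timing noise you observe on your hardware.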

DeepSpeed

DeepSpeed is a library created by Microsoft to train massive billion-parameter models at scale. If you have 800 GPUs lying around, you can even train trillion-parameter models 😉 .

The crux of how DeepSpeed enables scale is the Zero Redundancy Optimizer (ZeRO). ZeRO has 3 stages, each building on the previous one:

- Stage 1: optimizer states are partitioned across processes.

- Stage 2: gradients are also partitioned across processes.

- Stage 3: model parameters are also partitioned across processes.
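To make the savings concrete, here is a rough sketch of the per-GPU memory accounting from the ZeRO paper, assuming mixed-precision Adam (2 bytes per parameter for fp16 weights, 2 for fp16 gradients, and 12 for fp32 optimizer state) and ignoring activations and temporary buffers. The function name is our own; only the byte counts follow the paper.

```python
def zero_model_state_gb(num_params: float, num_gpus: int, stage: int, k: int = 12) -> float:
    """Per-GPU memory (GB) for weights, gradients and optimizer states under ZeRO.

    Assumes mixed-precision Adam: 2 bytes/param of fp16 weights, 2 bytes/param
    of fp16 gradients and k = 12 bytes/param of fp32 optimizer state (master
    weights, momentum, variance). Stage 0 means plain data parallelism.
    """
    if stage not in (0, 1, 2, 3):
        raise ValueError("stage must be 0, 1, 2 or 3")
    weights = 2 * num_params
    grads = 2 * num_params
    optim = k * num_params
    if stage >= 1:
        optim /= num_gpus    # stage 1: shard optimizer states
    if stage >= 2:
        grads /= num_gpus    # stage 2: also shard gradients
    if stage >= 3:
        weights /= num_gpus  # stage 3: also shard the parameters
    return (weights + grads + optim) / 1e9


if __name__ == "__main__":
    # 7.5B-parameter model on 64 GPUs, the example used in the ZeRO paper
    for s in range(4):
        print(f"stage {s}: {zero_model_state_gb(7.5e9, 64, s):.1f} GB per GPU")
```

For a 7.5B-parameter model on 64 GPUs this works out to about 120, 31.4, 16.6 and 1.9 GB per GPU for stages 0 to 3, which shows why stage 3 is what makes very large models fit.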

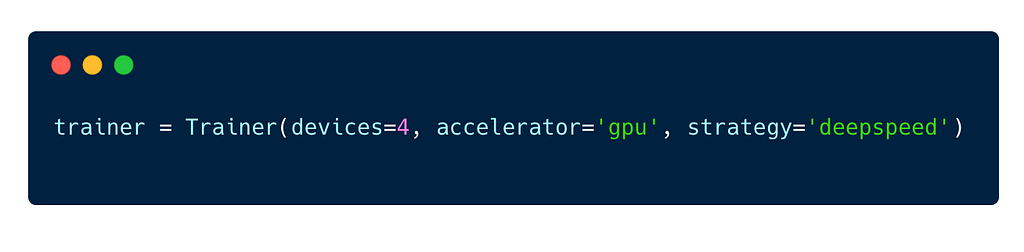

To enable DeepSpeed in Lightning, simply pass strategy='deepspeed' to your Lightning Trainer (docs).
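The plain `'deepspeed'` string is the simplest option. As far as we know, Lightning also registers stage-specific names such as `'deepspeed_stage_2'` and `'deepspeed_stage_3_offload'` (verify them against the DeepSpeed page of the Lightning docs for your version). A sketch of a hypothetical helper that maps the ZeRO stages listed above onto those names, together with the usual GPU and mixed-precision arguments:

```python
from typing import Any, Dict


def deepspeed_trainer_kwargs(stage: int = 2, offload: bool = False,
                             devices: int = 4) -> Dict[str, Any]:
    """Hypothetical helper: Trainer kwargs selecting a DeepSpeed ZeRO stage.

    Assumes Lightning's registered strategy names "deepspeed_stage_1/2/3"
    and the CPU-offload variants "deepspeed_stage_2_offload" and
    "deepspeed_stage_3_offload".
    """
    if stage not in (1, 2, 3):
        raise ValueError("ZeRO stage must be 1, 2 or 3")
    if offload and stage == 1:
        raise ValueError("the offload shortcuts cover ZeRO stages 2 and 3 only")
    strategy = f"deepspeed_stage_{stage}" + ("_offload" if offload else "")
    return {
        "accelerator": "gpu",
        "devices": devices,    # number of GPUs to partition across
        "strategy": strategy,
        "precision": 16,       # DeepSpeed is usually run in mixed precision
    }
```

With Lightning installed you would pass the result straight through, e.g. `Trainer(**deepspeed_trainer_kwargs(stage=3, offload=True))`. Higher stages save more memory at the cost of extra communication, so starting at stage 2 and moving up only when the model does not fit is a reasonable approach.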

FSDP

Fully Sharded Data Parallel (FSDP) is Meta’s version of sharding, inspired by DeepSpeed (ZeRO stage 3) and optimized for PyTorch compatibility (read about their latest 1-trillion-parameter attempt).

FSDP was developed by the FairScale team at Facebook (now Meta). Try both and see if you can notice a difference!

To use FSDP in PyTorch Lightning (docs), enable it by passing strategy='fsdp' to your Lightning Trainer.
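To try both FSDP and DeepSpeed, a sketch that keeps every Trainer argument identical except the sharding strategy, so that speed and memory use can be compared fairly. `'fsdp'` is Lightning’s strategy name for FSDP and `'deepspeed_stage_3'` is assumed to be the comparable ZeRO stage; the helper itself is hypothetical.

```python
from typing import Any, Dict

# Sharding back-ends to compare; both shard parameters, gradients and optimizer state.
SHARDING_STRATEGIES = ("fsdp", "deepspeed_stage_3")


def sharded_trainer_kwargs(strategy: str = "fsdp", devices: int = 4) -> Dict[str, Any]:
    """Hypothetical helper: identical Trainer kwargs except for the sharding strategy."""
    if strategy not in SHARDING_STRATEGIES:
        raise ValueError(f"expected one of {SHARDING_STRATEGIES}, got {strategy!r}")
    return {
        "accelerator": "gpu",
        "devices": devices,
        "strategy": strategy,  # "fsdp" selects Fully Sharded Data Parallel
        "precision": 16,       # mixed precision for both back-ends
        "max_epochs": 1,       # short run, enough to compare speed and memory
    }
```

With Lightning installed, the comparison would be a loop such as `for name in SHARDING_STRATEGIES: Trainer(**sharded_trainer_kwargs(name)).fit(model)`, where `model` is your own LightningModule.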

FFCV

DeepSpeed and FSDP optimize the part of the pipeline responsible for distributing models across machines.

FFCV optimizes the data loading and processing part of the pipeline for image datasets by exploiting the shared structure found in the dataset.

FFCV is of course complementary to DeepSpeed and FSDP and thus can be used within PyTorch Lightning as well.

Note: the benchmarks reported by the FFCV team are not exactly fair, because they were run without disabling TensorBoard, logging, etc. in PyTorch Lightning, and without enabling DeepSpeed or FSDP to compound the effects of using FFCV.

An FFCV integration is currently in progress and will be available in PyTorch Lightning within the next few weeks.

enable ffcv with PL · Issue #11538 · PyTorchLightning/pytorch-lightning

Composer

Composer, from MosaicML, is another library that tackles a different part of the training pipeline. Composer adds techniques such as BlurPool, ChannelsLast, CutMix, and label smoothing.

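Some of these techniques, such as BlurPool and ChannelsLast, modify the model itself and can be applied BEFORE optimization begins, so to use them with PyTorch Lightning you can simply run them on the model before starting your training loop. Others, such as CutMix and label smoothing, act on each batch or on the loss, so they belong in your training step instead.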

The Lightning team is investigating the best way to integrate MosaicML’s optimizations with PyTorch Lightning.

Support mosaic optimizations as plugins · Issue #12360 · PyTorchLightning/pytorch-lightning

Closing words

PyTorch Lightning is more than a deep learning framework; it’s a platform!

Rather than trying to reinvent the wheel, PyTorch Lightning lets you integrate the latest techniques so they work together nicely and keep your code efficient and organized.

Newly launched optimizations that tackle different parts of the pipeline will be integrated within weeks. If you’re creating a new one, please reach out to the Lightning team so they can work with you on making your integration a smooth experience for the community!

PyTorch Lightning vs DeepSpeed vs FSDP vs FFCV vs … was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
