
Add structure to your machine learning projects to accelerate and sustain innovation

In recent years, momentum has been building toward MLOps, an effort to instill an operational mindset in machine learning. For me, MLOps is all about creating sustainability in machine learning. We want to build a culture of structure and reproducibility in this fast-paced, innovative field. Take this familiar scenario as motivation:

Here’s a use case for you to complete. Can you do it before Friday?

Our intrepid hero cobbles something together, and it works. Everyone’s happy. Then, a few days later, we put it into production. A few weeks later, new data comes in. A few months later, someone new takes over the project.

Can your code hold up to the pressure?

Developing machine learning projects is like fixing server cables. We create a dozen experiments, engineering pipelines, outbound integrations, and monitoring code. We try our best to make our work reproducible, robust, and ready for shipping. Add some team collaboration and you’ve got a big ball of spaghetti. As much as we try, something will give, especially under tight timelines. We take small shortcuts that accrue technical debt, and that debt only grows more painful over time. In our metaphor, messy wires are a fire hazard, and we want them fixed right away. The data must flow!

Someone must have figured this out before, right? Fortunately, yes. MLOps is taking off, and there are dozens of tools and frameworks out there to support it. In the past, I’ve used a combination of in-house, self-maintained frameworks and a personal set of engineering standards. If you don’t have the time to create your own framework, it helps to have robust scaffolding like Kedro to take care of the foundations for you.

Firing up Kedro

Kedro is an open-source Python project that aims to help ML practitioners create modular, maintainable, and reproducible pipelines. As a rapidly evolving project, it has many integrations with other useful tools like MLFlow, Airflow, and Docker, to name a few. Think of Kedro as the glue to tie your projects together into a common structure, a very important step towards sustainable machine learning. Kedro has amazing documentation, so be sure to check it out.

Starting a Kedro project is easy. The steps are:

- Virtual environments are almost always necessary. Create one, and install Kedro.

- Create a Kedro project. In this example, I use an official starter project, but there are others. A powerful feature of Kedro is that you can create your own template through Cookiecutter. Consider doing this to encode your organization’s standards.

- Initialize a new git repository, another must for MLOps!

- Install dependencies.

- Run the pipeline.

You’ll see that some directories have been generated for you. This structure is the backbone of any Kedro project. A couple of great operational features are already baked in, like testing, logging, configuration management, and even a directory for Jupyter notebooks.

The Anatomy of a Kedro Project

Overview of the Project

For the rest of the post, I’ll be using a Kaggle dataset for a cross-sell use case in health insurance. If you want my full code, you can also check it out on GitHub.

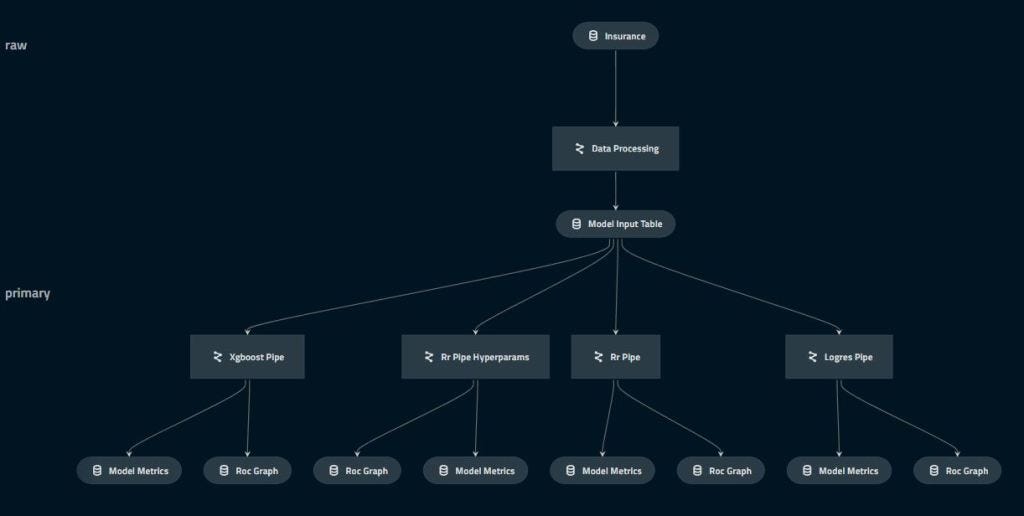

You’ll see later that the data engineering and machine learning work is fairly standard. Where Kedro really shines is in making your pipelines maintainable and, frankly, beautiful. Check my pipeline here:

A Kedro pipeline is made up of data sources and nodes. Starting from the top, you see the data sources. You have the option of defining them in Python code, but I prefer a configuration file, which is key when locations, credentials, and similar details change. If you have dozens of datasets, you want their definitions kept away from the code for a more manageable headspace. You can also manage intermediate tables and outputs in the configuration file, so their formats are abstracted away from the code.
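The core idea behind a configuration-driven catalog is that code asks for a dataset by name, and a separate config maps that name to a type and a file path. The sketch below illustrates this with the standard library only. It is a toy and not Kedro’s DataCatalog. The `CATALOG_CONFIG` dict stands in for a parsed `conf/base/catalog.yml`, and the dataset names, type labels, and paths are hypothetical.

```python
import csv
import json
from pathlib import Path
from typing import Any, Dict, List, Tuple

# Hypothetical config; in Kedro this would live in conf/base/catalog.yml.
# File paths are relative to a data root chosen at runtime.
CATALOG_CONFIG: Dict[str, Dict[str, str]] = {
    "raw_train": {"type": "csv", "filepath": "01_raw/train.csv"},
    "model_metrics": {"type": "json", "filepath": "08_reporting/metrics.json"},
}


class ToyCatalog:
    """Maps dataset names to files so node code never sees paths or formats."""

    def __init__(self, config: Dict[str, Dict[str, str]], root: Path) -> None:
        self._config = config
        self._root = Path(root)

    def _entry(self, name: str) -> Tuple[str, Path]:
        entry = self._config[name]
        return entry["type"], self._root / entry["filepath"]

    def load(self, name: str) -> Any:
        kind, path = self._entry(name)
        if kind == "csv":
            with path.open(newline="") as f:
                return list(csv.DictReader(f))  # values come back as strings
        if kind == "json":
            return json.loads(path.read_text())
        raise ValueError(f"unsupported dataset type: {kind}")

    def save(self, name: str, data: Any) -> None:
        kind, path = self._entry(name)
        path.parent.mkdir(parents=True, exist_ok=True)
        if kind == "csv":
            rows: List[Dict[str, Any]] = data
            with path.open("w", newline="") as f:
                writer = csv.DictWriter(f, fieldnames=list(rows[0]))
                writer.writeheader()
                writer.writerows(rows)
        elif kind == "json":
            path.write_text(json.dumps(data))
        else:
            raise ValueError(f"unsupported dataset type: {kind}")
```

With this design, moving `train.csv` means editing one config entry, and the code that asks for `raw_train` stays untouched.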

By the way, there’s a whole section of the documentation dedicated to credential management and referencing cloud resources. I’ll keep things local for simplicity.

Creating your Kedro Nodes

The nodes are where the action is, and Kedro allows for a lot of flexibility. Here, I’m using scikit-learn and XGBoost for the actual modeling bits, but you can also use PySpark, PyTorch, TensorFlow, Hugging Face, anything. My code is split into data engineering code and modeling code (data_science). It’s up to you what your project’s stages look like. For simplicity’s sake, I only have these two.

First, my data engineering code has a single node, where all the preprocessing is done. For more complex datasets, you might include the preprocessing of specific data sources, data validation, and merging.
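A single preprocessing node is just a pure function from input data to output data. The sketch below is a rough illustration in that spirit. It makes a few assumptions that should be checked against the actual file. The column names (`Gender`, `Vehicle_Age`, `Vehicle_Damage`) and their category values are assumed for the Kaggle dataset. Plain rows-as-dicts stand in for the pandas DataFrame a real project would use.

```python
from typing import Dict, List

# Assumed category values for the cross-sell data; verify against the dataset.
GENDER = {"Male": 0.0, "Female": 1.0}
VEHICLE_DAMAGE = {"No": 0.0, "Yes": 1.0}
VEHICLE_AGE = {"< 1 Year": 0.0, "1-2 Year": 1.0, "> 2 Years": 2.0}  # ordinal
CATEGORICAL = {
    "Gender": GENDER,
    "Vehicle_Damage": VEHICLE_DAMAGE,
    "Vehicle_Age": VEHICLE_AGE,
}


def preprocess(rows: List[Dict[str, str]]) -> List[Dict[str, float]]:
    """Encode the categorical columns and cast every other column to float.

    Returns new rows; the input rows are left unchanged.
    """
    processed = []
    for row in rows:
        clean = {}
        for column, value in row.items():
            mapping = CATEGORICAL.get(column)
            # Unknown category values raise KeyError instead of passing silently.
            clean[column] = mapping[value] if mapping is not None else float(value)
        processed.append(clean)
    return processed
```

Because the function has no side effects, it is easy to unit test in the project’s tests directory.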

Second, the data_science code trains the models. The outputs of the data_engineering section become the inputs to these nodes. How the inputs and outputs are stitched together is defined in the pipelines section.
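Since nodes are plain functions, the contract between stages is simply their arguments and return values. Below is a hedged sketch of a split node feeding a training node. The training node returns two outputs, a model and a metrics dict. To keep the example runnable with the standard library, a trivial majority-class "model" stands in for XGBoost. The function names and the `test_size`, `random_state`, and `target` parameter keys are hypothetical.

```python
import random
from collections import Counter
from typing import Any, Dict, List, Tuple

Row = Dict[str, float]


def split_data(rows: List[Row], parameters: Dict[str, Any]) -> Tuple[List[Row], List[Row]]:
    """Shuffle with a seeded RNG (so reruns are reproducible), then split."""
    shuffled = list(rows)
    random.Random(parameters["random_state"]).shuffle(shuffled)
    n_test = int(len(shuffled) * parameters["test_size"])
    return shuffled[n_test:], shuffled[:n_test]


def train_model(train: List[Row], test: List[Row],
                parameters: Dict[str, Any]) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Fit a majority-class baseline and return two outputs: (model, metrics)."""
    target = parameters["target"]
    majority = Counter(row[target] for row in train).most_common(1)[0][0]
    model = {"prediction": majority}
    accuracy = sum(row[target] == majority for row in test) / len(test)
    return model, {"accuracy": accuracy}
```

Swapping the baseline for a real classifier changes the body of `train_model`, not its signature, so the pipeline wiring stays the same.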

My training code uses Optuna, a framework for hyperparameter optimization. You can also use kedro-mlflow to connect Kedro and MLFlow.
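Optuna’s central pattern is an objective function that samples hyperparameters and returns a score, which the library optimizes over many trials. In Optuna itself, the objective receives a trial object and samples through calls such as `trial.suggest_float` and `trial.suggest_int`, and `study.optimize` runs the trials with smarter samplers by default. The stand-in below is not Optuna. It is a plain random search from the standard library that mirrors the same objective-and-trials shape. The search space and the toy objective are hypothetical.

```python
import random
from typing import Callable, Dict, Tuple, Union

Number = Union[int, float]
Params = Dict[str, Number]


def random_search(objective: Callable[[Params], float],
                  space: Dict[str, Tuple[Number, Number]],
                  n_trials: int, seed: int = 0) -> Tuple[Params, float]:
    """Maximize `objective` by sampling `n_trials` points from `space`.

    Integer bounds are sampled with randint, float bounds with uniform.
    """
    rng = random.Random(seed)
    best_params: Params = {}
    best_score = float("-inf")
    for _ in range(n_trials):
        params: Params = {
            name: (rng.randint(lo, hi) if isinstance(lo, int) and isinstance(hi, int)
                   else rng.uniform(lo, hi))
            for name, (lo, hi) in space.items()
        }
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def objective(params: Params) -> float:
    """Hypothetical score that peaks at learning_rate=0.1, max_depth=6."""
    return -(params["learning_rate"] - 0.1) ** 2 - (params["max_depth"] - 6) ** 2 / 100
```

In a real node, the objective would train and evaluate a model on each sampled configuration. The fixed seed keeps the search reproducible from run to run.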

If you’re the type to be focused on notebooks, then you might be shocked by the number of .py files. Don’t fret! Kedro can turn your Jupyter notebooks into nodes.

Defining your Kedro Pipelines

To tie it all together, Kedro uses pipelines. A pipeline sets the inputs and outputs of each node, and Kedro works out the node execution order from those dependencies. It looks very similar to a Directed Acyclic Graph (DAG) in Spark and Airflow. You can also create hooks to extend Kedro’s functionality, such as adding data validation after node execution or logging model artifacts through MLFlow.
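The following toy runner shows how execution order can be derived from node inputs and outputs. Each node declares named inputs and outputs, and the runner sorts nodes topologically by those names, regardless of the order in which they are listed. This is not Kedro’s API; Kedro has its own `node` and `Pipeline` objects. The `Node` class, `run_pipeline`, and dataset names here are hypothetical, and `graphlib` requires Python 3.9 or later.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, List


@dataclass
class Node:
    func: Callable[..., Any]
    inputs: List[str]   # names of datasets the function receives, in order
    outputs: List[str]  # names given to the function's return value(s)


def run_pipeline(nodes: List[Node], data: Dict[str, Any]) -> Dict[str, Any]:
    """Run nodes in dependency order; `data` holds the initial datasets."""
    producers = {out: i for i, n in enumerate(nodes) for out in n.outputs}
    # Each node depends on the nodes that produce its inputs.
    graph = {i: {producers[name] for name in n.inputs if name in producers}
             for i, n in enumerate(nodes)}
    data = dict(data)
    for i in TopologicalSorter(graph).static_order():  # CycleError if not a DAG
        node = nodes[i]
        result = node.func(*(data[name] for name in node.inputs))
        if len(node.outputs) == 1:
            result = (result,)
        data.update(zip(node.outputs, result))
    return data
```

A cycle between nodes raises `graphlib.CycleError`, which is the "acyclic" part of a DAG.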

- In my pipeline, one node takes all the outputs of the split node as inputs, plus a special parameters dictionary. Kedro automatically passes in your parameters from parameters.yml, with the defaults in your conf/base directory.

- That node returns multiple outputs. The first is a classifier, and the second is the model metrics, which are saved in MLFlow. Both are specified in catalog.yml. Note that Kedro has its own experiment tracking functionality as well, but I want to show you that it plays nicely with other tools.

- Another node receives a set of parameters from parameters.yml. You can reuse the same pipeline and feed it several sets of parameters for different runs!
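The parameter handling in the list above relies on special input names. In Kedro, `parameters` refers to the whole dictionary, and `params:<key>` refers to a single entry. The toy resolver below mimics that lookup so you can see how one node definition runs unchanged against different parameter sets. The names `resolve_inputs` and `count_test_rows` and the `test_size` key are hypothetical, and this toy handles only top-level keys.

```python
from typing import Any, Dict, List


def resolve_inputs(names: List[str], datasets: Dict[str, Any],
                   parameters: Dict[str, Any]) -> List[Any]:
    """Resolve node input names, giving the parameter names special treatment."""
    values = []
    for name in names:
        if name == "parameters":  # the whole parameters dictionary
            values.append(parameters)
        elif name.startswith("params:"):  # a single top-level key
            values.append(parameters[name[len("params:"):]])
        else:  # an ordinary dataset
            values.append(datasets[name])
    return values


def count_test_rows(rows: List[int], test_size: float) -> int:
    """Hypothetical node: how many rows would go into the test split."""
    return int(len(rows) * test_size)


datasets = {"rows": list(range(10))}
# Same node and input names, two different parameter sets.
for run_params in ({"test_size": 0.2}, {"test_size": 0.5}):
    args = resolve_inputs(["rows", "params:test_size"], datasets, run_params)
    print(run_params, "->", count_test_rows(*args))
```

The loop prints 2 for the first parameter set and 5 for the second, with no change to the node itself.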

Finally, at the top level of a Kedro project is the pipeline registry, which contains the available pipelines. Here I define multiple pipelines and their aliases. The default, __default__, calls all the pipelines. You can run a particular pipeline with kedro run --pipeline=<name>.
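At its core, a pipeline registry is a mapping from names to pipelines, with `__default__` covering everything. In recent Kedro versions, this is a `register_pipelines()` function in `pipeline_registry.py` that returns Kedro `Pipeline` objects, which can be combined with `+`. The toy below uses plain lists of node names instead, and the pipeline names `de` and `ds` are hypothetical.

```python
from typing import Dict, List

Pipeline = List[str]  # toy stand-in: a pipeline is an ordered list of node names


def register_pipelines() -> Dict[str, Pipeline]:
    """Name each pipeline; __default__ chains all of them."""
    data_engineering = ["preprocess"]
    data_science = ["split_data", "train_model"]
    return {
        "de": data_engineering,
        "ds": data_science,
        "__default__": data_engineering + data_science,
    }


def select_pipeline(name: str = "__default__") -> Pipeline:
    """Mimic choosing a pipeline by name, as `kedro run --pipeline=<name>` does."""
    registry = register_pipelines()
    if name not in registry:
        raise KeyError(f"unknown pipeline {name!r}; choose from {sorted(registry)}")
    return registry[name]
```

Because `__default__` is built from the named pipelines, adding a stage means registering it once and extending the sum.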

Afterward, it’s time for deployment. From here, you can do several things:

- To package your project and create wheel files, run kedro package. The files can then go to your own Nexus or a self-hosted PyPI. Even better, make packaging part of the build routine on your CI server. Nothing says job done like a teammate simply running pip install on your project!

- You can also generate your project documentation using kedro build-docs. It will create the HTML documentation using Sphinx, so it includes your docstrings. Time to spice up your docs game!

- Export your pipelines as Docker containers. These can then be part of your organization’s larger orchestration framework. It also helps that the community already has plugins for Kubeflow and Kubernetes.

- Integrate with Airflow and convert your project into an Airflow DAG.

- And a dozen other specific deployments!

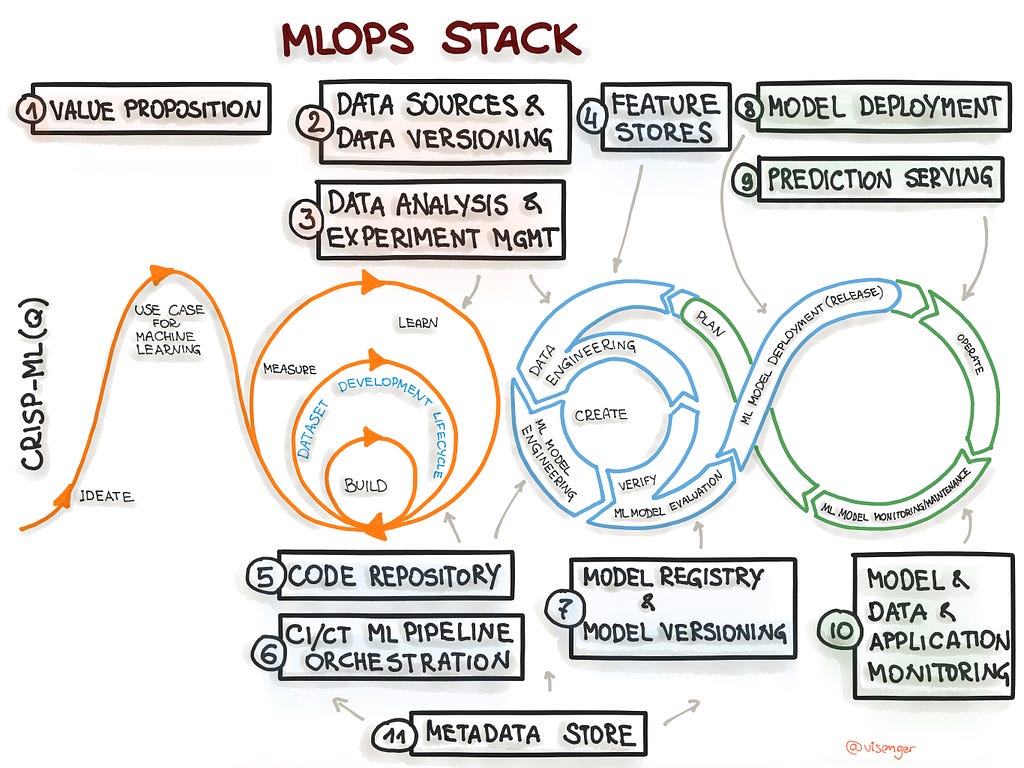

MLOps, Kedro, and more

Recall that we set out to do MLOps, which is simply a sustainable way to rein in the massive complexity of machine learning. We have achieved a common structure and a way to reproduce runs, track experiments, and deploy to different environments. This is just the beginning, however. Other best practices are converging and quickly evolving. So be sure to practice the fundamentals, and always remember to innovate sustainably.

Originally published at http://itstherealdyl.com on March 11, 2022.

Level Up Your MLOps Journey with Kedro was originally published in Towards Data Science on Medium.
