https://ift.tt/3dZ5XtD A Python library for quickly calculating and displaying machine learning model performance metrics with confidence i...

A Python library for quickly calculating and displaying machine learning model performance metrics with confidence intervals

Data scientists spend a lot of time evaluating the performance of their machine learning models. A common means of doing so is classification report for classification problems, such as the one built-into the scikit-learn library (referenced over 440,000 times on Github). Similarly for regression problems one uses r2 score, MSE or MAE.

The problem with depending on these is that when we have imbalanced and/or small datasets in our test sample, the volume of data that makes up each point estimate can be very small. As a result, whatever estimates one has for the evaluation metrics will not likely be a true representative of what happens in the real world. Instead, it would be far better to present to the reader a range of values from confidence intervals.

We can easily create confidence intervals for any metric of interest by using the bootstrap technique to create hundreds of samples from the original dataset (with replacement), and then throw away the most extreme 5% of values to get a 95% confidence interval, for example.

However, when the datasets are large, running the bootstrap as described above becomes a computationally expensive calculation. Even a relatively small sample of a million rows, when resampled 1,000 times, turns into a billion-row dataset and causes the calculations to take close to a minute. This isn’t a problem when doing these calculations offline, but engineers are often working interactively in a Jupyter notebook and want answers quickly. For this reason Meta (aka Facebook) decided to create a few fast implementations of these calculations for common machine learning metrics, such as precision & recall using the numba library, which provides a speedup of approximately 23X over regular Python parallel-processing code.

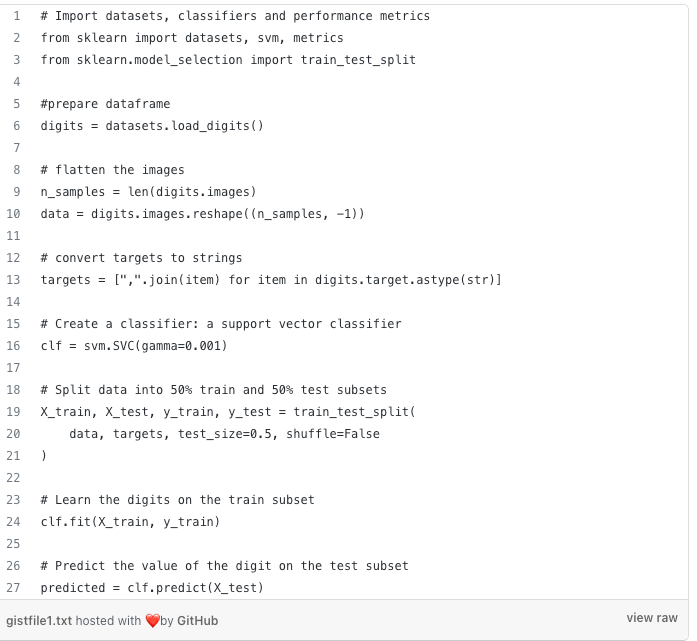

Let’s demonstrate this with examples for classification problems. Consider the standard hand-written digits dataset where we are building a ML model to recognize the digits.

So far, it is standard stuff. Now we can use FRONNI to get the precision, recall, f1-scores and the associated confidence intervals. The library is available in PyPi and to install FRONNI just run pip install.

pip install fronni

Now run the FRONNI classification report to get the precision, recall and f1 scores of each class along with their confidence intervals. You can control the number of bootstrap samples using the parameter n.

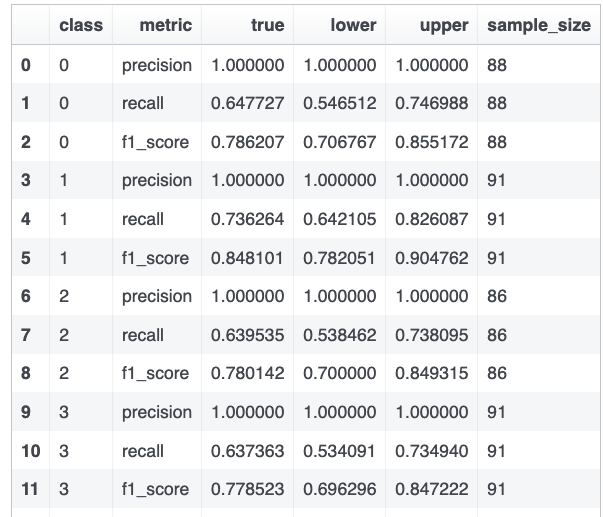

Voila!, it gives you the upper and lower bounds of precision, recall, and f1 scores of every class.

Sample output of the FRONNI classification report for the digit classifier.

Similarly the FRONNI regression module gives the confidence intervals of the RMSE, r2 score and MAE. For full documentation refer to the GitHub repository.

Calculating Machine Learning Model Performance Metrics With Confidence Intervals using FRONNI was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

from Towards Data Science - Medium https://ift.tt/33FV3ab

via RiYo Analytics

ليست هناك تعليقات