
How to use the new Azure Machine Learning feature for object detection

Initial algorithm selection and hyperparameter optimization are activities I personally don’t enjoy. If you’re like me, you might like Automated Machine Learning (AutoML), a technique that lets scripts handle these time-consuming ML tasks for us.

Azure Machine Learning (AML) is a cloud service with features that make it easier to prepare and create datasets, train models, and deploy them as web services. The AML team recently released the AutoML for Images feature as a Public Preview. Today we’ll use this feature to train an object detection model that identifies potholes in roads.

Throughout the article I’ll give a brief review of some AML and object detection concepts, so you don’t need to be fully familiar with them to follow along. This tutorial is heavily based on this example from Azure, and you can check the Jupyter notebook I coded here.

Cool, let’s get started!

What are we going to do?

Object detection datasets are interesting because they are made of tabular data (the bounding box annotations) and image data (.png, .jpeg, etc.). COCO is a popular format for object detection datasets, and we’ll download the pothole dataset (Pothole Dataset, shared by Atikur Rahman Chitholian, November 2020, license ODbL v1.0) in this format. Azure Machine Learning uses the TabularDataset format, so the first thing we need to do is convert from COCO to TabularDataset.

After the conversion, we’ll choose an object detection algorithm and finally train the model.

1 — Preparing the datasets

I got the dataset from Roboflow. It has 665 images of roads with the potholes labeled, and it was created and shared by Atikur Rahman Chitholian as part of his undergraduate thesis. The Roboflow team re-shuffled the images into a 70/20/10 train/valid/test split.

Each split has two main components:

- _annotations.coco.json, a JSON file with images, categories and annotations metadata

- The images themselves (.jpg files)

This is what the keys of the COCO annotations file look like:

- images: has information about the images of the dataset (id, filename, size, etc.)

- categories: the name and id of the categories of the bounding boxes

- annotations: information about each object, containing its bounding box coordinates (COCO stores them as [x, y, width, height]; in this dataset they’re absolute pixel coordinates), the image_id and the category_id of the object
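Before converting anything, it can help to peek at a split you downloaded. A minimal sketch that loads one `_annotations.coco.json` with the standard library and counts images, annotations and boxes per category; `summarize_coco` is a hypothetical helper, not part of any Azure package.

```python
import json
from collections import Counter


def summarize_coco(path: str) -> dict:
    """Count images, annotations and boxes per category in a COCO annotation file."""
    with open(path, encoding="utf-8") as f:
        coco = json.load(f)
    # Map category ids to names so the per-category counts are readable.
    names = {c["id"]: c["name"] for c in coco["categories"]}
    per_category = Counter(names[a["category_id"]] for a in coco["annotations"])
    return {
        "images": len(coco["images"]),
        "annotations": len(coco["annotations"]),
        "per_category": dict(per_category),
    }
```

For example, `summarize_coco("./potholeObjects/train/_annotations.coco.json")` gives a quick sanity check on the training split before anything is uploaded.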

Now it’s time to start working with AML. The first thing you need to do is create an Azure Machine Learning Workspace. You can do it from the web interface at https://portal.azure.com.

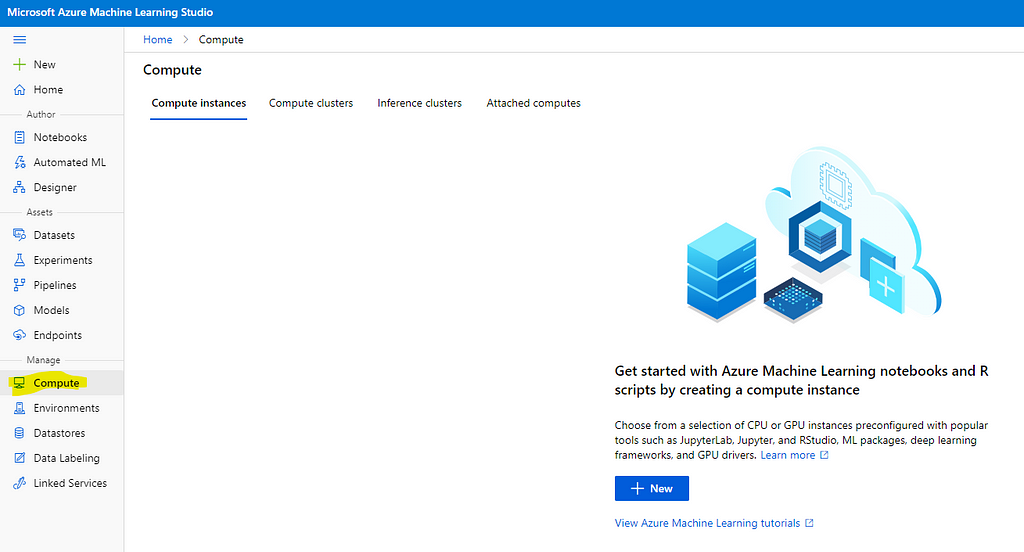

We need a compute instance to run the notebooks and, later, the training experiment, so go ahead and create one inside your workspace. AutoML models for image tasks require GPU compute instances. You can create a compute instance using the web interface as well.

I’ve downloaded and extracted the dataset into the ./potholeObjects folder. Each split has its own folder containing the images and the JSON file.

You need to upload the images and the JSON files to a Datastore so that AML can access them. Datastores are abstractions over cloud storage services. When you create an AML workspace, an AzureBlobDatastore is created and set as the default. We’ll use this default Datastore and upload the images there.
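In code, with the v1 Python SDK (`azureml-core`) used by the AutoML for Images preview notebooks, uploading the split folders could look like the sketch below. The `train`/`valid`/`test` folder names assume Roboflow's COCO export layout, and `upload_images`/`datastore_target` are hypothetical helpers; the datastore object is passed in so the function can be read and tested without an Azure connection.

```python
import os
from typing import Iterable

# Assumption: Roboflow's COCO export uses these split folder names.
SPLITS = ("train", "valid", "test")


def datastore_target(split: str, root: str = "potholeObjects") -> str:
    """Folder inside the datastore where a split's files end up."""
    return f"{root}/{split}"


def upload_images(datastore, local_root: str = "./potholeObjects",
                  splits: Iterable[str] = SPLITS) -> None:
    """Upload each local split folder to the given blob datastore.

    `datastore` is expected to be an azureml-core AzureBlobDatastore,
    e.g. Workspace.from_config().get_default_datastore().
    """
    for split in splits:
        datastore.upload(
            src_dir=os.path.join(local_root, split),
            target_path=datastore_target(split),  # matches the base_url used later
            overwrite=True,
            show_progress=True,
        )
```

On the compute instance you would call it as `upload_images(Workspace.from_config().get_default_datastore())` after `from azureml.core import Workspace`. Keeping the datastore folder identical to the local one makes the `base_url` passed to the converter easy to get right.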

The annotations are in COCO format (JSON), but the TabularDataset has to be created from JSON Lines, with one JSON object per image. It holds the same metadata, just organized under different keys. This is what one line looks like for object detection (pretty-printed here for readability):

```json
{
  "image_url": "AmlDatastore://data_directory/../Image_name.image_format",
  "image_details": {
    "format": "image_format",
    "width": "image_width",
    "height": "image_height"
  },
  "label": [
    {
      "label": "class_name_1",
      "topX": "xmin/width",
      "topY": "ymin/height",
      "bottomX": "xmax/width",
      "bottomY": "ymax/height",
      "isCrowd": "isCrowd"
    },
    {
      "label": "class_name_2",
      "topX": "xmin/width",
      "topY": "ymin/height",
      "bottomX": "xmax/width",
      "bottomY": "ymax/height",
      "isCrowd": "isCrowd"
    },
    "..."
  ]
}
```
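To make the mapping concrete, here is a minimal sketch of the conversion for one split. It is an illustration of the idea, not Microsoft's coco2jsonl.py: it groups annotations by image and turns COCO's absolute `[x, y, width, height]` boxes into corner coordinates normalized by the image size. `coco_to_jsonl_records` and `write_jsonl` are hypothetical names.

```python
import json
import os
from typing import Dict, List


def coco_to_jsonl_records(coco: Dict, base_url: str) -> List[Dict]:
    """Turn a parsed COCO dict into one object-detection record per image (sketch)."""
    categories = {c["id"]: c["name"] for c in coco["categories"]}
    records = {}
    for img in coco["images"]:
        file_name = img["file_name"]
        records[img["id"]] = {
            "image_url": base_url + file_name,
            "image_details": {
                "format": os.path.splitext(file_name)[1].lstrip(".").lower(),
                "width": img["width"],
                "height": img["height"],
            },
            "label": [],
        }
    for ann in coco["annotations"]:
        rec = records[ann["image_id"]]
        w = rec["image_details"]["width"]
        h = rec["image_details"]["height"]
        x, y, bw, bh = ann["bbox"]  # absolute pixels: top-left corner + box size
        rec["label"].append({
            "label": categories[ann["category_id"]],
            "topX": x / w,
            "topY": y / h,
            "bottomX": (x + bw) / w,
            "bottomY": (y + bh) / h,
            "isCrowd": ann.get("iscrowd", 0),
        })
    return list(records.values())


def write_jsonl(records: List[Dict], path: str) -> None:
    """JSON Lines: one JSON object per line, no enclosing array."""
    with open(path, "w", encoding="utf-8") as f:
        for rec in records:
            f.write(json.dumps(rec) + "\n")
```

For a 640x480 image with a COCO box `[64, 48, 128, 96]`, the record gets `topX=0.1`, `topY=0.1`, `bottomX=0.3` and `bottomY=0.3`, which is the normalization the schema above describes.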

Fortunately, Microsoft engineers wrote a script that converts from COCO to JSON Lines: https://github.com/Azure/azureml-examples/blob/1a41978d7ddc1d1f831236ff0c5c970b86727b44/python-sdk/tutorials/automl-with-azureml/image-object-detection/coco2jsonl.py

The image_url key of the generated file needs to point to the image files in the Datastore we’re using (the default one). We set that with the base_url parameter of the coco2jsonl.py script.

```
# Generate the training JSONL file from the COCO file.
# IPython expands {datastore_name} from a notebook variable, e.g.
# datastore_name = ws.get_default_datastore().name
!python coco2jsonl.py \
--input_coco_file_path "./potholeObjects/train/_annotations.coco.json" \
--output_dir "./potholeObjects/train" --output_file_name "train_pothole_from_coco.jsonl" \
--task_type "ObjectDetection" \
--base_url "AmlDatastore://{datastore_name}/potholeObjects/train/"
```

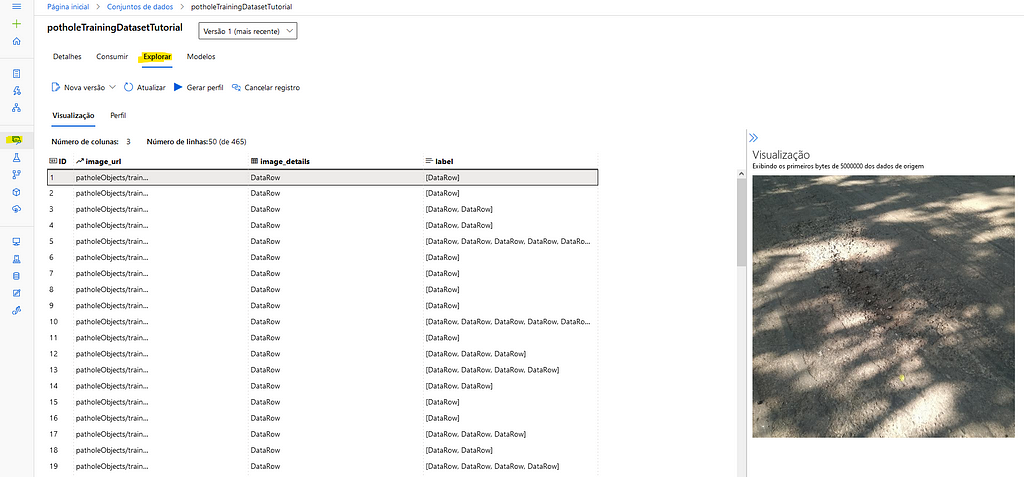
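If you prefer to drive the conversion for every split from plain Python instead of a `!` shell escape, a minimal sketch with `subprocess` is below. It builds the same arguments as the notebook cell above; the `valid` folder name and the `valid_pothole_from_coco.jsonl` output name are assumptions that simply follow the training split's pattern, and `coco2jsonl_cmd`/`convert_splits` are hypothetical helpers.

```python
import subprocess
import sys
from typing import Iterable, List


def coco2jsonl_cmd(split: str, datastore_name: str,
                   root: str = "./potholeObjects") -> List[str]:
    """Argument list for coco2jsonl.py, following the notebook cell's pattern."""
    return [
        sys.executable, "coco2jsonl.py",
        "--input_coco_file_path", f"{root}/{split}/_annotations.coco.json",
        "--output_dir", f"{root}/{split}",
        "--output_file_name", f"{split}_pothole_from_coco.jsonl",
        "--task_type", "ObjectDetection",
        "--base_url", f"AmlDatastore://{datastore_name}/potholeObjects/{split}/",
    ]


def convert_splits(datastore_name: str, splits: Iterable[str] = ("train", "valid")) -> None:
    """Run the converter once per split; check=True stops on the first failure."""
    for split in splits:
        subprocess.run(coco2jsonl_cmd(split, datastore_name), check=True)
```

Passing a list (rather than one shell string) avoids quoting problems with the paths.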

We’ll run the same command for the validation set. The next step is to upload the files to the Datastore and create the Datasets inside AML. Don’t confuse Datasets with Datastores: Datasets are versioned, packaged data objects, usually created from files in a Datastore. We’ll create the Datasets from the JSON Lines files.

You’ll do that for both the training and validation splits. If everything went well, you can preview the images inside AML.
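For the Datasets themselves, here is a sketch with the v1 SDK, following the pattern of Microsoft's AutoML for Images notebooks: upload the JSONL next to the images, then register a TabularDataset whose `image_url` column is typed as a stream so AML resolves it to the image files. The JSONL file names follow the `{split}_pothole_from_coco.jsonl` pattern (an assumption for the validation split), and `register_jsonl_dataset` and the dataset names are hypothetical.

```python
def register_jsonl_dataset(ws, datastore, split: str, dataset_name: str,
                           root: str = "./potholeObjects"):
    """Upload a split's JSONL file and register it as a TabularDataset (SDK v1)."""
    # Imported lazily so the helper can be defined without the Azure ML SDK installed.
    from azureml.core import Dataset
    from azureml.data import DataType

    jsonl_name = f"{split}_pothole_from_coco.jsonl"
    # relative_root strips the local prefix, so the file lands next to the images.
    datastore.upload_files(
        files=[f"{root}/{split}/{jsonl_name}"],
        relative_root=f"{root}/{split}",
        target_path=f"potholeObjects/{split}",
        overwrite=True,
    )
    dataset = Dataset.Tabular.from_json_lines_files(
        path=datastore.path(f"potholeObjects/{split}/{jsonl_name}"),
        # Typing image_url as a stream lets AML read the referenced image files.
        set_column_types={"image_url": DataType.to_stream(datastore.workspace)},
    )
    return dataset.register(workspace=ws, name=dataset_name, create_new_version=True)
```

Usage would be along the lines of `training_dataset = register_jsonl_dataset(ws, ws.get_default_datastore(), "train", "potholes-train")`, and the same call with `"valid"` for the validation split.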

2 — Running the experiment

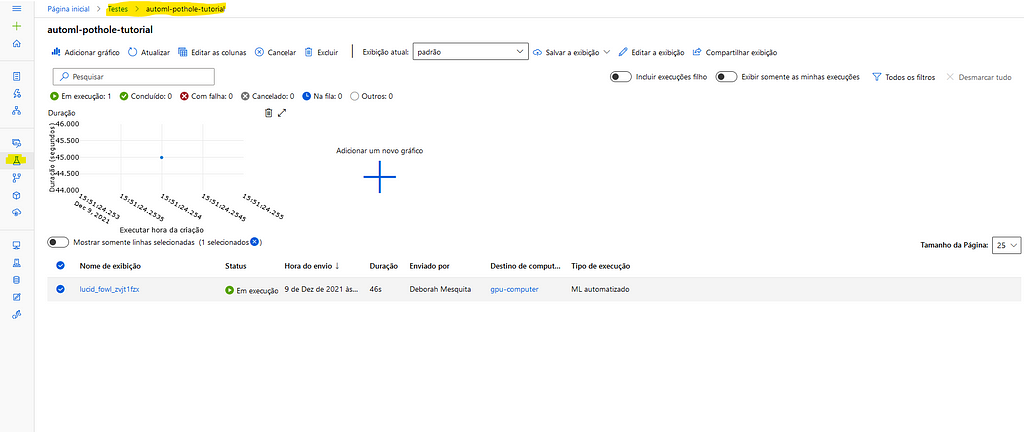

Inside AML, every run belongs to an Experiment. To train the model with AutoML, you’ll create an experiment, point it to the compute target it’s supposed to run on and provide the configuration for the AutoML parameters.

Let’s first create the experiment and get the compute instance from the workspace.
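A minimal sketch of that step with the v1 SDK. `Experiment(workspace=..., name=...)` creates the experiment or reuses an existing one with the same name, and `ws.compute_targets` maps names to the compute already attached to the workspace. The experiment name and `"gpu-compute"` are placeholders; use the name you gave your GPU compute.

```python
def get_experiment_and_compute(ws, experiment_name: str = "automl-pothole-detection",
                               compute_name: str = "gpu-compute"):
    """Create (or reuse) an Experiment and look up an existing GPU compute target."""
    from azureml.core import Experiment  # lazy import: Azure ML SDK v1

    experiment = Experiment(workspace=ws, name=experiment_name)
    # Raises KeyError if no compute with this name exists in the workspace.
    compute_target = ws.compute_targets[compute_name]
    return experiment, compute_target
```

In the notebook: `experiment, compute_target = get_experiment_and_compute(Workspace.from_config())`.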

Here I’ll run the experiment using YOLOv5 with its default parameters. You need to provide the hyperparameters, the compute target, the training data and the validation data (as the example notes, the validation dataset is optional).
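A sketch of such a configuration, modeled on the public-preview SDK v1 used by Microsoft's AutoML for Images notebooks. The import paths and argument names reflect that preview and may have moved in later SDK versions, so treat them as assumptions to check against the current reference. A grid over a single `model_name` value with `iterations=1` means exactly one YOLOv5 run with default hyperparameters.

```python
def make_yolov5_config(compute_target, training_dataset, validation_dataset=None):
    """AutoML for Images config that trains a single YOLOv5 model with defaults."""
    # Lazy imports; paths as in the SDK v1 public preview.
    from azureml.automl.core.shared.constants import ImageTask
    from azureml.train.automl import AutoMLImageConfig
    from azureml.train.hyperdrive import GridParameterSampling, choice

    return AutoMLImageConfig(
        task=ImageTask.IMAGE_OBJECT_DETECTION,
        compute_target=compute_target,
        training_data=training_dataset,
        validation_data=validation_dataset,  # optional
        # One-point grid: only the model architecture is fixed, everything else default.
        hyperparameter_sampling=GridParameterSampling({"model_name": choice("yolov5")}),
        iterations=1,
    )
```

With the datasets registered earlier, `automl_config_yolov5 = make_yolov5_config(compute_target, training_dataset, validation_dataset)` produces the object submitted in the next step.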

Now we can finally submit the experiment:

```python
automl_image_run = experiment.submit(automl_config_yolov5)
```

You can monitor the experiments using the Workspace web interface.
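From the notebook you can also grab the studio link and block until training ends. A small sketch using two v1 `Run` methods, `get_portal_url()` and `wait_for_completion(...)`; `follow_run` is a hypothetical helper.

```python
def follow_run(run) -> str:
    """Print the studio link for a submitted run, then block until it finishes."""
    url = run.get_portal_url()
    print("Monitor the run at:", url)
    # wait_post_processing=True also waits for post-processing after the run completes.
    run.wait_for_completion(show_output=True, wait_post_processing=True)
    return url
```

Calling `follow_run(automl_image_run)` right after `experiment.submit(...)` keeps the cell busy until the model is ready, which is convenient before the registration step.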

Here I’m using a dict with a single model and its default parameters, but you can explore other parameters and tuning settings. The Microsoft tutorial includes an example of such a sweep.
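A sketch of what a broader sweep could look like, following the structure used in Microsoft's AutoML for Images examples: random sampling over two model families, each with its own search space, plus a Bandit early-termination policy. The hyperparameter names (`learning_rate`, `img_size`, `optimizer`, `min_size`) and ranges are assumptions worth checking against the current AutoML for Images documentation.

```python
def make_sweep_config(compute_target, training_dataset, validation_dataset=None):
    """Random search over YOLOv5 and Faster R-CNN with early termination (sketch)."""
    from azureml.automl.core.shared.constants import ImageTask
    from azureml.train.automl import AutoMLImageConfig
    from azureml.train.hyperdrive import BanditPolicy, RandomParameterSampling, choice, uniform

    # Each dict inside the outer choice() is one model family with its own search space.
    parameter_space = {
        "model": choice(
            {
                "model_name": choice("yolov5"),
                "learning_rate": uniform(0.0001, 0.01),
                "img_size": choice(640, 704, 768),
            },
            {
                "model_name": choice("fasterrcnn_resnet50_fpn"),
                "learning_rate": uniform(0.0001, 0.001),
                "optimizer": choice("sgd", "adam", "adamw"),
                "min_size": choice(600, 800),
            },
        )
    }
    return AutoMLImageConfig(
        task=ImageTask.IMAGE_OBJECT_DETECTION,
        compute_target=compute_target,
        training_data=training_dataset,
        validation_data=validation_dataset,
        hyperparameter_sampling=RandomParameterSampling(parameter_space),
        iterations=20,                # total number of trials
        max_concurrent_iterations=4,  # upper bound on trials running at once
        # Cancel trials whose primary metric falls too far behind the best one.
        early_termination_policy=BanditPolicy(
            evaluation_interval=2, slack_factor=0.2, delay_evaluation=6
        ),
    )
```

How many trials actually run in parallel depends on how many nodes the compute target has, so on a single GPU machine the sweep runs one trial at a time and 20 iterations take correspondingly longer.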

3 — Visualize the predictions

This YOLOv5 model is trained with PyTorch, so we can download the model and check its predictions in the Jupyter notebook. Mine took 56 minutes to train. To get the model, first register the model from the best run in the workspace so you can access it through the workspace.
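A sketch of the registration step with the v1 SDK, in the spirit of Microsoft's notebooks: `get_best_child()` on the AutoML run returns the best trial, and `register_model` stores its `outputs/model.pt` as a workspace model. Reading the model name from the run's `properties["model_name"]` mirrors the tutorial and is an assumption here; any name you choose works for `register_model`.

```python
def register_best_model(automl_run, model_path: str = "outputs/model.pt"):
    """Register the weights of the best child run as a workspace model."""
    best_child_run = automl_run.get_best_child()
    # Assumption (per Microsoft's example): AutoML stores a model name in the run properties.
    model_name = best_child_run.properties["model_name"]
    model = best_child_run.register_model(model_name=model_name, model_path=model_path)
    return best_child_run, model
```

Typical use: `best_child_run, model = register_best_model(automl_image_run)`.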

Now we can download the model.pt file and run inference. To do that, we’ll use the code from the azureml-contrib-automl-dnn-vision package.
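Fetching the weights only needs `Run.download_file` from the v1 SDK; the inference call itself depends on the azureml-contrib-automl-dnn-vision package and is not reproduced here. A minimal sketch, where `download_model` and the `./model` folder are hypothetical and the run is the best child run (for example `automl_image_run.get_best_child()`):

```python
import os


def download_model(best_child_run, dest_dir: str = "./model") -> str:
    """Fetch the PyTorch weights produced by the best run to a local folder."""
    os.makedirs(dest_dir, exist_ok=True)
    local_path = os.path.join(dest_dir, "model.pt")
    # "outputs/model.pt" is the path inside the run's stored outputs.
    best_child_run.download_file(name="outputs/model.pt", output_file_path=local_path)
    return local_path
```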

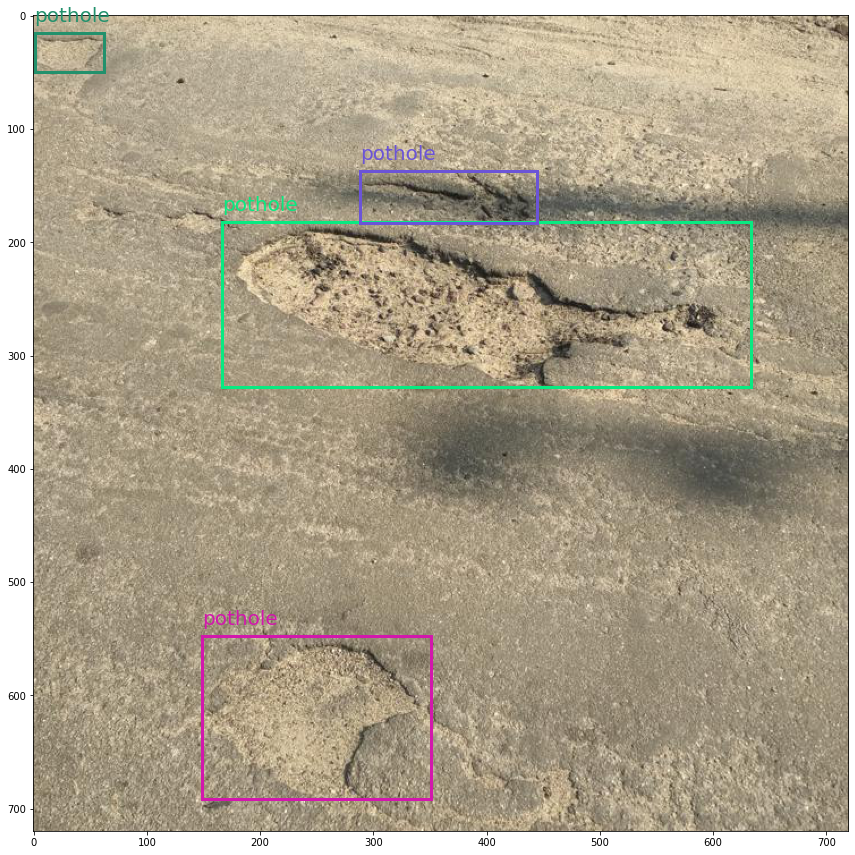

I used the code from the Microsoft tutorial to visualize the bounding boxes. Here is the result for a test image:
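The drawing step itself is simple once the boxes are in pixels. The sketch below assumes each prediction looks like `{"boxes": [{"box": {"topX", "topY", "bottomX", "bottomY"}, "label", "score"}]}` with the same normalized corner convention as the training JSONL; check that against the output you actually get. `to_pixel_boxes`, `draw_detections` and the 0.5 score threshold are hypothetical choices, not the tutorial's code.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height) in pixels


def to_pixel_boxes(prediction: Dict, img_width: int, img_height: int,
                   min_score: float = 0.5) -> List[Tuple[str, float, Box]]:
    """Keep confident detections and convert normalized corners to pixel rectangles."""
    detections = []
    for det in prediction["boxes"]:
        if det["score"] < min_score:
            continue
        b = det["box"]
        x0, y0 = b["topX"] * img_width, b["topY"] * img_height
        x1, y1 = b["bottomX"] * img_width, b["bottomY"] * img_height
        detections.append((det["label"], det["score"], (x0, y0, x1 - x0, y1 - y0)))
    return detections


def draw_detections(image_path: str, detections) -> None:
    """Overlay boxes and confidence scores on the image with matplotlib."""
    import matplotlib.patches as patches  # imported lazily; only needed for plotting
    import matplotlib.pyplot as plt

    img = plt.imread(image_path)
    fig, ax = plt.subplots(figsize=(8, 6))
    ax.imshow(img)
    for label, score, (x, y, w, h) in detections:
        ax.add_patch(patches.Rectangle((x, y), w, h, fill=False, edgecolor="red", linewidth=2))
        ax.text(x, y, f"{label} {score:.2f}", color="white", backgroundcolor="red")
    ax.axis("off")
    plt.show()
```

Separating the coordinate conversion from the plotting keeps the part most likely to contain mistakes (the normalization) easy to test on its own.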

Cool, right?

Final thoughts

Azure Machine Learning is a good tool to get started with machine learning (well, deep learning in our case) because it hides away a lot of complexity. With the AutoML feature, you don’t even have to train different models one at a time, because the tuning settings can sweep over them for you.

The next step in the pipeline would be to deploy the model as a web service. If you’re curious, the Microsoft tutorial shows how to do that as well.

Thanks for reading! :D

References

- Pothole Dataset. Shared by Atikur Rahman Chitholian, November 2020. License: ODbL v1.0.

AutoML for Object Detection: How to Train a Model to Identify Potholes was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
