
Opinion

3 mistakes that statistically-ignorant data nerds make

In the work-from-home era, many fresh recruits won’t get the opportunity to enjoy cynical grumblings from a nearby cubicle, which means that much of the labor of rearing our data science young goes undone these days. Let me help out by dispensing some of that character-building goodness as it was once handed to me. I’ll do my best to emulate the grouchiest mentor I’ve ever had. Enjoy!

Newcomers to data, here are three mistakes you’ll make if you’ve never had your faith in data crushed by real-world projects:

- Thinking you’re here to get the right answer.

- Working hardest on garbage data.

- Forgetting the weakest link in your AI system.

If you love math, it’ll hurt more

If you love math for its purity, if you love finding the right answers… welcome to hell.

Two people can use the same data to come to different — and entirely valid — statistical conclusions. Yes, you heard me: same data, two different answers, both correct.

That’s because any work you do with real-world data will always be based on unsupported assumptions. (Here’s why.) As long as you take stock of the assumptions you’re making and appreciate that your conclusions are only valid in light of them, you’re doing everything correctly.


In other words, technically, we’re allowed to assume anything we like and our answers are correct in light of it.

Except, *you’re* not. You aren’t allowed to make any assumptions at all unless you’re the decision-maker, the person in charge of the data-driven decision you’re supporting. Alas, if you’re new around here, the person who’s authorized to make the assumptions is probably your boss or your boss’s boss. Unless they’re skilled at expressing their assumptions mathematically, you have the choice of either learning some extremely subtle interrogation skills or living with the fact that whatever assumptions you pick are technically wrong for the decision.

The first mistake is thinking you’re here to get the right answer. At best, you’ll get *a* right answer.
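To make "same data, two different answers, both correct" concrete, here is a minimal, hypothetical sketch (not from the original article). Two analysts estimate a success rate from the same 7-out-of-10 result using a standard Beta-Binomial update, but each assumes a different prior. The data, the priors, and the 0.6 launch threshold are all invented for illustration.

```python
# Two analysts, same data, different (explicitly stated) prior assumptions.
# Hypothetical data: 7 successes in 10 trials.
successes, trials = 7, 10


def posterior_mean(alpha_prior, beta_prior, k, n):
    """Posterior mean of a Beta(alpha, beta) prior updated with k successes in n Bernoulli trials."""
    return (alpha_prior + k) / (alpha_prior + beta_prior + n)


# Analyst A assumes a flat Beta(1, 1) prior: every rate equally plausible.
mean_a = posterior_mean(1, 1, successes, trials)    # (1 + 7) / (1 + 1 + 10) = 8/12
# Analyst B assumes a skeptical Beta(20, 20) prior concentrated around 0.5.
mean_b = posterior_mean(20, 20, successes, trials)  # (20 + 7) / (20 + 20 + 10) = 27/50

threshold = 0.6  # hypothetical decision rule: "launch if the estimated rate exceeds 0.6"
for name, m in [("A (flat prior)", mean_a), ("B (skeptical prior)", mean_b)]:
    decision = "launch" if m > threshold else "don't launch"
    print(f"{name}: posterior mean = {m:.3f} -> {decision}")
```

Analyst A gets about 0.667 and launches; Analyst B gets 0.540 and doesn't. Neither made an arithmetic error: the conclusions differ only because the assumptions differ, which is why the person entitled to choose those assumptions matters.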

I could go on and on about delegation in data science, but let’s keep the pain to a bearable level. For the rest of this discussion, let’s assume that you’re in charge and you’re authorized to make assumptions. (A huge assumption indeed!)

When your analysis is bad and you should feel bad

When the sheer scale of the complex, untestable assumptions completely swamps the amount of information you have access to, the wrong response is to work harder (and for much longer) on a super-fancy analysis, finish it, and be terribly proud of what you’ve done.

Frankly, you reacted desperately to a desperate situation and you should know better than to pretend your conclusions aren’t flimsy. If you intend to share them with others, wipe that grin off your face and apologize profusely.

“What we know is a drop, what we don’t know is an ocean.” ― Isaac Newton

For example, you might be working with an inherited dataset without any documentation about what the variable names actually mean. If so, what are you doing with that intensely (and self-righteously) complicated analysis? Your approach has more holes than Swiss cheese, so why would you claim that the output of your toil is something rigorous? You’re just making stuff up. No matter how much mathematics you apply to this situation, no one should take your results too seriously.

The second mistake is working harder when assumptions eclipse evidence.

Instead, approach it as a quest for inspiration and don’t invest too much time in the mathy bits. Your efforts would be better spent in acquiring / creating more information.

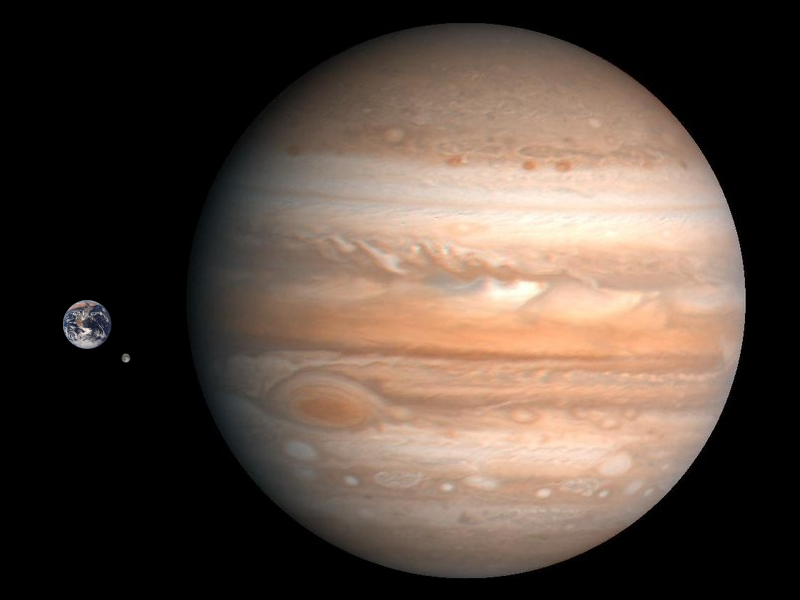

When your data are the size of the moon while your assumptions are the size of Jupiter, good manners demand that you paste an apology to your readers on Every. Single. Line. (I’m only half-kidding.)

If you’re making assumptions and you’re not a domain expert, they’re probably extra pungent garbage assumptions. Apologize not once but several times on every line of your report. If your report is mostly apology, so much the better.

One of my favorite pioneers of statistics, W. Edwards Deming, famously said that “without data, you’re just another person with an opinion.” That is true, but unfortunately so is this: “With data, you’re still just another person with an opinion.” Especially if your assumptions eclipse reality.


In other words, if you’re about to publish a “data” report where you try to hide the Godzilla-sized assumptions that stomp all over your measly data’s ability to play any kind of role in your conclusion, I hope you feel ashamed of yourself. I’d prefer for you to tell the truth: “My uninformed opinion is…”

With that kind of disparity between the size of your assumptions and your evidence, your appeals to data are in bad taste. If you were honest about how little you know, your report would be so full of apology that no one would read it. Good.

If you’re not keen to be honest about how little you actually know, then work diligently to know more before you waste your stakeholders’ time.


I’m not advocating for apologetic groveling. I’m asking you to be quiet until you’ve done the homework that makes you worth listening to. If you insist on sharing your opinion anyway, don’t hide behind a pittance of relevant data (or, worse, a mountain of irrelevant data). Have the guts to be honest about the assumptions you’re making.

Mind the gap!

Some related behavior that makes me cringe is the ML/AI nerd’s reaction to having two respectable, nutritious ML models chained around a trainwreck of a we-have-no-idea-what’s-happening-here model in the middle.

No matter how fancy and correct the bookends are, you’ve got an extremely unstable tightrope over a chasm. Why would you think the overall result is a good, rigorous model? It’s about as reliable as taking a joyride from one side to the other… expect to enjoy a massive crash in the middle.


There are many ways this happens in practice. The often-cited one is dubious data combined with meticulous records, but my favorite example is when you buy models from other people and deploy them without testing them thoroughly in context. Your solution is only as good as the weakest link. Please take responsibility for the whole thing.
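A rough way to see why polished bookends can't rescue the middle: under the simplifying, hypothetical assumption that stage errors are independent and that any single error spoils the final output, end-to-end accuracy is the product of the stage accuracies, which can never exceed the weakest stage. The stage names and accuracy figures below are invented for illustration.

```python
import math

# Hypothetical per-stage accuracies for a three-stage pipeline:
# two well-tested models bookending an untested one bought off the shelf.
stages = {
    "upstream model": 0.99,
    "untested middle model": 0.55,
    "downstream model": 0.99,
}

# Simplifying assumption: errors are independent and any stage error ruins
# the final output, so end-to-end accuracy is the product of stage accuracies.
end_to_end = math.prod(stages.values())
weakest = min(stages, key=stages.get)

print(f"weakest link: {weakest} ({stages[weakest]:.2f})")
print(f"end-to-end accuracy: {end_to_end:.3f}")
```

Real pipelines can behave better (a later stage may catch some earlier errors) or worse (errors can compound in messier ways), which is exactly why the whole chain needs testing in context rather than one stage at a time.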

The third mistake is forgetting the weakest link.

Toughen up

It’s fine to make assumptions, but don’t lie to yourself (or others) about the rigor behind your conclusions if your assumptions overshadow your evidence.

When I first posted about this on LinkedIn, some people were very upset. “Sometimes this is all we have,” they cried.

I get it. You’re sad that your data aren’t good enough for a respectable analysis. But that’s life. Get over it. Just because you have a spreadsheet full of numbers doesn’t guarantee that you’ll be able to get anything useful out of it.

The universe doesn’t owe you solid conclusions just because you got hold of some numbers.

To those brave souls who do the hard work to replace their assumptions with evidence until the data they have eclipse their unsupported assumptions, you make us all proud. Please keep doing what you’re doing! There’s nothing more beautiful than a situation where evidence crowds assumptions into a little corner and then makes a compelling argument that’s mostly fact instead of fiction.


But sometimes, you don’t have the information you need for making solid conclusions and it’s better to be quiet until you do. Opinions dressed up as evidence can be worse than nothing, so please don’t tell me that you think there’s honor in presenting data no matter what. If you’re evaluating the quality of your data without thinking about the quality of your assumptions, you’re doing it wrong.

What to do instead

My suggestion is to be more humble when you’re facing one of these situations (or their cousins). If you’re drowning in things that it’s impossible to gain knowledge about, keep your methods simple. This is tough when you feel like you were hired to be the clever one with the fancy toolbox. But you’ll be glad you did it.

The benefits include:

- honesty (lying to others is unethical and lying to yourself is stupid)

- transparency (it takes less time to figure out how you got your conclusions and to understand their limitations)

- conservation of effort (simple approaches are, ahem, simpler)

In March 2020, I saw a Twitter conversation where an expert advocated against making predictions about COVID-19 and a parent responded with anger plus something like, “But what do we tell our kids when they ask when schools will reopen?”

Parents, my suggestion is to tell your kids what my parents told me during uncertain times: the truth. Let’s try it together now, “I. Don’t. Know.”

And, in hindsight, we didn’t know.


Have the grace to admit what you don’t know. Once they’re weaned off expecting answers to every question, kids can handle an answer like that. And, perhaps more surprisingly, adults can too. My guess is that both groups will be better — wiser and more resilient — for it.

Thanks for reading! How about a YouTube course?

If you had fun here and you’re looking for an applied AI course designed to be fun for beginners and experts alike, here’s one I made for your amusement:

Tough Love for Naïve Data Newbies was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
