How to handle data-driven projects effectively and maximize the value of the project outcomes

In recent years, data scientists have risen to prominence in a variety of enterprises. They are in high demand for both their technical and non-technical skills, so data practitioners must keep improving those skills to stay relevant. Many firms now recognize the value that data scientists bring to the table. Most businesses, on the other hand, appear to hold incorrect preconceptions about data science and how to support it. Some argue that because data scientists use the R or Python programming languages, the same approach that works for software development will work for model construction. That is not the case: building models differs from building software, and applying the wrong methodology can cause major problems.

Managing problems requires decision-making. Machine learning and data mining techniques can support this process by analyzing and selecting project data to make the right decisions and solve critical project problems. Today, a data scientist must be adaptable and ready to tackle challenges in novel ways. While project management and communication skills are crucial for a data scientist, it’s also important to understand how project management and data science work together. Software engineering has the Capability Maturity Model (CMM), which is used to create and improve an organization’s software development process. The model comprises a five-level evolutionary path. CMM resembles the ISO 9001 standards defined by the International Organization for Standardization.

The Five Maturity Levels of Software Processes for CMM

· Initial Level: The processes at this stage are disorganized and chaotic. Success depends on individual efforts and is not repeatable.

· Repeatable Level: Basic project management techniques are defined at this stage, so earlier successes can be repeated.

· Defined Level: At this stage, the organization has defined its standard software process via greater attention to standardization, documentation, and integration.

· Managed Level: The organization controls its processes using data analysis and collection.

· Optimizing Level: This is the stage where processes are frequently improved through feedback tracking from existing processes to address the needs of the organization.

Ad Hoc Processes in Data Science Management

When there is no standard methodology for managing data science projects, teams usually settle on ad hoc practices that are not organized, repeatable, or sustainable. As a result, these teams often show low project maturity: they lack continuous improvement, frequent feedback, and defined processes.

So, does Ad Hoc Work for Data Science?

Well, ad hoc processes have both advantages and drawbacks. First, they give users the freedom to decide how to handle each problem as it arises. Ad hoc practices can work well for one-off projects handled by individuals or small teams. In addition, concentrating on a given task without worrying about its impact on other projects or areas of the organization lets you work with little administrative effort.

That aside, data science is expanding daily, and teams and organizations must evolve to keep up. For this reason, depending too heavily on ad hoc practices can cause numerous problems for data science teams and projects. Adopting mature project management methodologies will not solve every project management challenge, but it will reduce the number of problems and increase the odds of success. Apart from simple, small projects implemented by small teams, ad hoc is not the best choice for data science projects.

That said, let’s review mature software project management approaches that can be adopted in the data science sector.

CRISP-DM Methodology

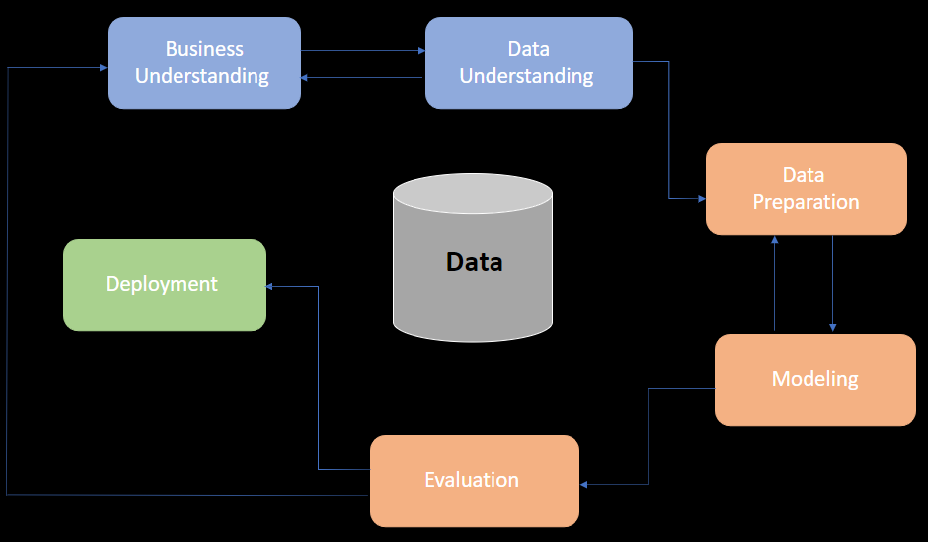

CRISP-DM stands for the Cross-Industry Standard Process for Data Mining. It is a strong, well-proven methodology that gives a data mining project a structured approach. It was created in response to the need for a standard methodology and procedure for data analysis and mining. CRISP-DM comprises six phases: Business Understanding, Data Understanding, Data Preparation, Modelling, Evaluation, and Deployment. Nominally, the phases are carried out in sequence. In practice, however, the process is iterative, which means that models are designed to be improved by subsequent knowledge. The accompanying diagram shows the process. Let’s review it from the angle of data analysis.
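The iterative nature of CRISP-DM can be sketched in a few lines of Python. This is only an illustration, not part of the CRISP-DM specification: the `run_crisp_dm` function and its `evaluate` callback are hypothetical names, and the sketch assumes the standard phase order (business understanding first, deployment last) with a simple rule that a failed evaluation sends the team back to the first phase.

```python
from enum import Enum


class Phase(Enum):
    BUSINESS_UNDERSTANDING = 1
    DATA_UNDERSTANDING = 2
    DATA_PREPARATION = 3
    MODELING = 4
    EVALUATION = 5
    DEPLOYMENT = 6


# Every phase except deployment is repeated on each pass through the cycle.
PRE_DEPLOYMENT = [phase for phase in Phase if phase is not Phase.DEPLOYMENT]


def run_crisp_dm(evaluate, max_iterations=5):
    """Return the list of phases visited.

    `evaluate` is a hypothetical callback: it receives the iteration number
    and returns True once the model meets the business goals.
    """
    visited = []
    for iteration in range(1, max_iterations + 1):
        visited.extend(PRE_DEPLOYMENT)
        if evaluate(iteration):
            # Evaluation succeeded, so the project moves on to deployment.
            visited.append(Phase.DEPLOYMENT)
            return visited
    # Iteration budget exhausted: nothing was deployed.
    return visited


# Example: the model only satisfies the business goals on the second pass.
history = run_crisp_dm(lambda iteration: iteration >= 2)
print([phase.name for phase in history])
```

In a real project the loop would not always restart from business understanding; a team might only revisit data preparation or modelling. The sketch uses the simplest possible rule to show that the sequence is a cycle rather than a straight line.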

CRISP-DM in Business

Most businesses that want to understand their customers, clients, and target markets already have a set of data. For instance, they may have a contact list of people who made a purchase or completed a form, including online. As such, the first step of CRISP-DM is to understand the business and highlight the specific goals of the organization. Understanding a business entails identifying the challenges the business wishes to address. Once the goals are understood, the process of identifying what is in the data begins. Depending on the information source, there could be information about the customers’ interests. All this data can be useful for future campaigns. Thereafter, the data is prepared and analyzed to make it useful. Data preparation is a massive task and can take 70-80% of the project time. Part of it entails identifying and creating new data points that can be computed from the existing entries. The information extracted in the data preparation phase is then used to build different behaviour models. With the help of machine learning tools, numerous tests are executed using this data.
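As a small illustration of creating new data points from existing entries, the sketch below derives three fields from a contact list. The records, field names, and the `add_derived_fields` helper are all hypothetical and chosen only for this example; a real project would more likely use a data frame library, but the standard library is enough to show the idea.

```python
from datetime import date

# Hypothetical contact-list entries; the field names are illustrative only.
customers = [
    {"name": "Ana", "orders": [40.0, 60.0], "last_purchase": date(2021, 10, 1)},
    {"name": "Ben", "orders": [], "last_purchase": None},
]


def add_derived_fields(record, today):
    """Return a copy of `record` with data points computed from existing entries."""
    orders = record["orders"]
    enriched = dict(record)
    enriched["order_count"] = len(orders)
    # Guard against division by zero for contacts who never purchased.
    enriched["avg_order_value"] = sum(orders) / len(orders) if orders else 0.0
    last = record["last_purchase"]
    enriched["days_since_purchase"] = (today - last).days if last else None
    return enriched


prepared = [add_derived_fields(c, today=date(2021, 11, 1)) for c in customers]
for row in prepared:
    print(row["name"], row["order_count"], row["avg_order_value"], row["days_since_purchase"])
```

Derived fields like these are what the later modelling phase typically consumes, which is one reason data preparation takes up so much of the project.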

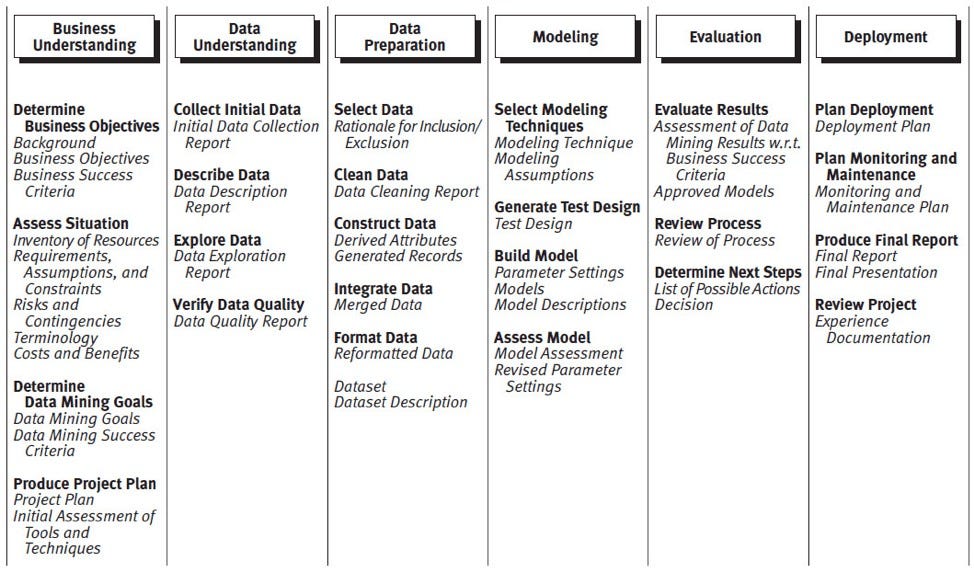

CRISP-DM in Detail

CRISP-DM tasks in bold and the results in italics

CRISP-DM and Data Science Project Management

Data science teams that combine a loose implementation of CRISP-DM with agile-based practices are likely to achieve the best results. Even teams that do not strictly apply every part of CRISP-DM will see better results. CRISP-DM provides a standard framework for experience documentation and guidelines. In addition:

- CRISP-DM supports best practices and project replication.

- CRISP-DM can be used in any data science project, whatever the domain.

- CRISP-DM is a de facto industry-standard process model for data mining. Therefore, it is critical that data scientists understand the various steps of the model.

Waterfall Methodology

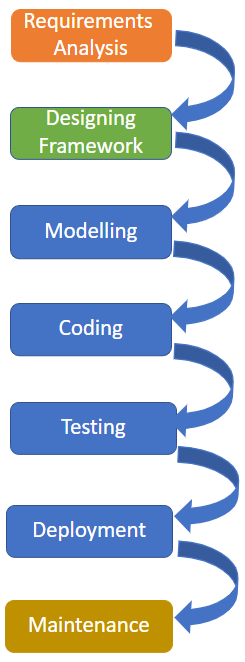

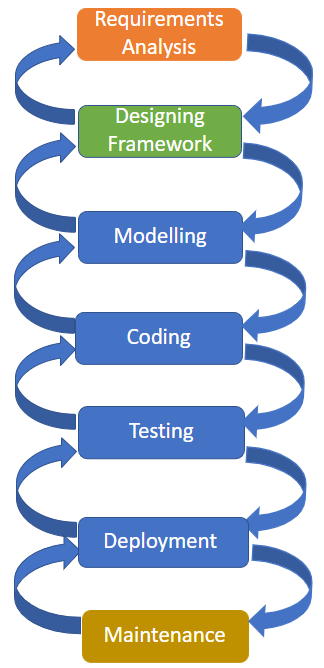

Winston Royce is credited with first describing the Waterfall model in 1970, which makes it one of the oldest methodologies in software engineering. The waterfall model is a sequential model that divides the whole software development process into pre-defined phases. It takes its name from the way every stage produces a well-defined output that is passed to the next stage as input, so the process behaves like a sequence of small waterfalls. Once the product moves to the next stage, it cannot go back. The stages are executed one after another, and each stage continues until its end product is complete before the next stage begins.

When should you use the waterfall model?

So when is the right time to use the waterfall model? The waterfall methodology is appropriate when the client can provide all the requirements at once and those requirements are stable. In addition, the development team should be able to interpret all the client requirements at the start of software development. In practice, however, it is difficult for anyone to outline all the requirements at once. Since the strict waterfall model does not allow going back once a stage is complete, a variation of the model was introduced that accommodates feedback. This is known as ‘feedback between the adjoining stages’.

This variation allows problems to be corrected between adjoining stages by passing feedback from the current stage to the previous one. For example, if the testing stage uncovers an error introduced during coding, it can be fixed by going back to the coding stage.
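The difference between the strict model and the feedback variation can be sketched as a small state machine. The stage names, the `run_waterfall` function, and the `stage_passes` callback below are assumptions made for illustration; the article itself names only the coding and testing stages.

```python
# Assumed stage names for illustration; real projects may define others.
STAGES = ["requirements", "design", "coding", "testing", "maintenance"]


def run_waterfall(stage_passes, allow_feedback=True, max_steps=20):
    """Run the stages in order and return the sequence of stages executed.

    `stage_passes(stage, attempt)` is a hypothetical check that returns True
    when the stage's output is accepted; `attempt` counts how many times
    that stage has been run so far.
    """
    trace, attempts, index = [], {}, 0
    # `max_steps` caps the trace so a stage that never passes cannot loop forever.
    while index < len(STAGES) and len(trace) < max_steps:
        stage = STAGES[index]
        attempts[stage] = attempts.get(stage, 0) + 1
        trace.append(stage)
        if stage_passes(stage, attempts[stage]):
            index += 1  # output accepted: flow down to the next stage
        elif allow_feedback and index > 0:
            index -= 1  # feedback: return to the adjoining earlier stage
        else:
            raise RuntimeError(f"{stage} failed and no feedback path is available")
    return trace


def first_test_run_fails(stage, attempt):
    # Testing rejects the first build; every other check passes.
    return not (stage == "testing" and attempt == 1)


print(run_waterfall(first_test_run_fails))
```

With `allow_feedback=False` the same failure raises an error instead, which mirrors the strict model: a problem found late has no route back to the stage that caused it.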

Is the Waterfall Methodology suitable for Data Science Project Management?

According to Pressman and Maxim (2015), the Waterfall methodology is not suitable for data science project management. The methodology works best when all the requirements are stated up front and are unlikely to change. That is not the case in data science projects, which involve a great deal of experimentation, changing requirements, and technology that is still novel. The waterfall model was designed for the manufacturing and construction sectors, where a project progresses sequentially. That explains why the work phases of the model do not overlap: you need to complete the frame of a car before you can begin to bolt on the body. Nevertheless, the well-structured nature of the waterfall model suits certain stages of a data science project, such as planning, resource management, scoping, and validation.

Agile Methodology

Agile methodology is based on the Agile Manifesto, which comprises 4 foundational values and 12 key principles. The focus of the manifesto is to uncover better approaches to building software by establishing a structure that promotes iterative development, responsiveness to change, and team collaboration. The main value of Agile methodology is that it allows teams to deliver value quickly, with higher quality and predictability. Agile processes offer a disciplined project management practice that supports frequent inspection and adaptation, self-organization, and a set of engineering practices that enable rapid delivery of high-quality software.

The 4 values of the Agile Manifesto

1. Customer collaboration over contract negotiation.

According to the Agile Manifesto, development should be continuous. A feedback loop with customers is needed so that the team can be sure the product works for them.

2. Individuals and interactions over processes and tools.

Having the right group of individuals in a software team is critical. Further, the interactions between these team members play a big role in solving the problem at hand.

3. Responding to change over following a plan.

The Agile Manifesto recommends that a software team be able to respond to change whenever it is required.

4. Working software over comprehensive documentation.

The agile manifesto prioritizes working software over documentation.

The 12 primary principles in Agile Methodology

- Satisfy customers through early and continuous delivery of valuable software.

- Welcome changing requirements, including those that come in late during development.

- Deliver working software frequently, at intervals from a couple of weeks to a couple of months.

- Developers and business people must collaborate daily throughout the project.

- Build projects around motivated individuals. Provide them with the support and environment they need.

- Face-to-face conversation is the most effective and efficient means of relaying information within a development team.

- Working software is the primary measure of progress.

- Agile processes promote sustainable development. The developers, users, and sponsors should be able to maintain a constant pace.

- Constant attention to technical excellence and good design improves agility.

- Apply simplicity, the art of maximizing the amount of work not done.

- The best requirements, designs, and architectures emerge from self-organizing teams.

- At regular intervals, the team should reflect on how to become more effective and adjust its behavior accordingly.

When should you use Agile methodologies?

Features of Agile methodology such as adaptability, continuous delivery, iteration, and short time frames make it a good project management method for ongoing projects and for those whose requirements are not all known from the start. In other words, projects without clearly defined timelines, resources, and constraints are the best candidates for Agile methodology. Many software products nowadays are built using agile. It has become a standard approach and has expanded into marketing, design, and business.

Is it possible for Data Science to profit from Agile methodology?

Agile fits data science project management well. Data science processes entail a high degree of uncertainty. For this reason, agile methodologies work hand in hand with data science: they allow non-linear processes to succeed rather than forcing them into a sequential order.

Benefits of Agile Data Science

· Fast delivery of customer value: By delivering incremental product features such as data ports, minimal viable products, and models, the team lets users obtain value before the end of the project.

· Relevant deliverables: Because requirements are defined shortly before each increment is developed, the deliverables are more likely to satisfy current needs.

· Better communication: Agile emphasizes collaboration and clear communication and focuses on individuals. Therefore, as data science teams scale and become more diverse, the value of effective communication increases, both within the team and with stakeholders.

· Experimentation: Agile enables data scientists to experiment and learn what works and what doesn’t. Experimentation starts with creating a hypothesis and determining the variables. Data collection and analysis come next.

While agile was created as a methodology for software development, it has continued to evolve to meet the needs of many types of team. As agile techniques evolve and new agile applications are released, the agile frameworks continue to evolve too.

Scrum

Scrum is a popular agile framework for teams. According to the Scrum Guide, Scrum is a framework within which people can handle adaptive challenges while creatively delivering products of the greatest value. In some instances, Scrum can be confusing because teams build hybrids that borrow aspects of other frameworks such as Kanban. The main objective of Scrum is to fulfill customer needs through transparent communication, continuous progress, and collective responsibility.

Scrum is implemented in short, periodic time blocks known as sprints. A sprint typically lasts 2 to 4 weeks. Each sprint is a self-contained unit that delivers a complete result. The process starts from an initial set of requirements that makes up the project plan.

Is it possible for Data Science to profit from Scrum’s methodology?

Scrum allows a team to collaborate and deliver incremental value. However, this process is not easy. The biggest challenge is the fixed-length sprint, which is hard to apply in a data science environment. For example, it is not easy to estimate how long a task will take.

Data science teams often prefer sprints of varying duration, which Scrum does not allow. Because of these issues, some teams prefer to apply Data-Driven Scrum (DDS). DDS keeps some of the major concepts of Scrum while addressing these drawbacks. The main advantages of Scrum are that it is customer- and product-focused, maintains consistency, gathers information through experimentation, and gives team members clear direction. On the other hand, Scrum is hard to master, it carries substantial meeting overhead for team members, and fulfilling requirements within the given time can be challenging for the team.

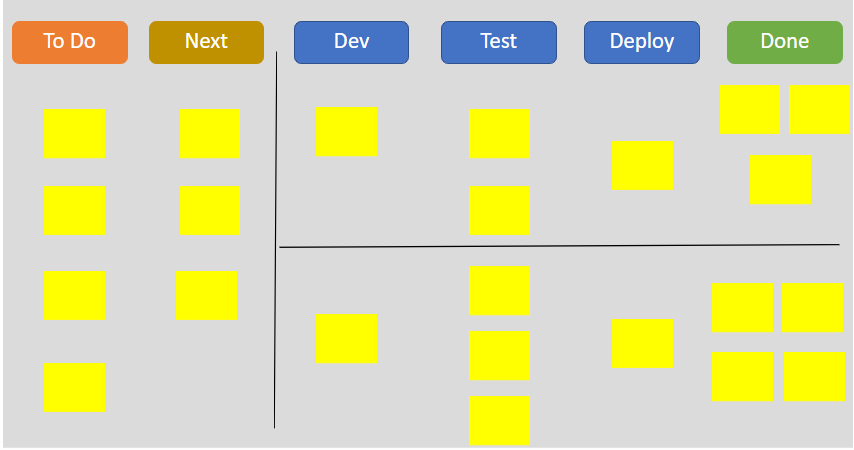

Kanban

Kanban is a project management approach that suits companies of any size. It gives you a visual overview of the tasks that need to be completed or are already done. It is made up of a digital or physical board with three columns (To Do, In Progress, Done). The tasks are written up as story cards, and every card is moved from left to right until it is completed. The Kanban system was first applied in Toyota factories to balance material supplies with actual production.
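A three-column board is simple enough to model directly. The sketch below is a minimal illustration rather than a reference implementation: the `KanbanBoard` class and its methods are hypothetical, and the work-in-progress (WIP) limit is an assumption added here because limiting work in progress is a common Kanban practice.

```python
class KanbanBoard:
    # Columns in left-to-right order, as on a physical board.
    COLUMNS = ["To Do", "In Progress", "Done"]

    def __init__(self, wip_limit=2):
        self.wip_limit = wip_limit  # assumed cap on "In Progress" cards
        self.cards = {column: [] for column in self.COLUMNS}

    def add(self, card):
        """Place a new story card in the leftmost column."""
        self.cards["To Do"].append(card)

    def advance(self, card):
        """Move a card one column to the right and return its new column."""
        for index, column in enumerate(self.COLUMNS[:-1]):
            if card in self.cards[column]:
                target = self.COLUMNS[index + 1]
                if target == "In Progress" and len(self.cards[target]) >= self.wip_limit:
                    raise ValueError("WIP limit reached; finish a card first")
                self.cards[column].remove(card)
                self.cards[target].append(card)
                return target
        raise ValueError(f"{card!r} is not on the board or is already done")


board = KanbanBoard(wip_limit=1)
board.add("clean data")
board.add("train model")
board.advance("clean data")   # To Do -> In Progress
board.advance("clean data")   # In Progress -> Done
board.advance("train model")  # allowed now that the slot is free
print(board.cards)
```

Because a card can only be pulled into "In Progress" when there is room, a full column makes a bottleneck visible immediately instead of letting unfinished work pile up.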

Is it fine to use Kanban for Data Science Project Management?

Kanban has proven to be a great methodology for many types of projects, from large projects with multiple layers of complexity to small ones that can be quickly completed by one or two people. Kanban provides the flexibility demanded by data scientists who want to execute their tasks without facing constant deadlines. According to Saltz, Shamshurin, and Crowston (2017), Kanban provides more structure than data science teams typically have.

Kanban best practices

Using Kanban in a project makes the workflow visible. As a result, bottlenecks, overloaded steps, and other obstacles are easy to identify. The team should also come together to identify ways to deliver the most critical jobs in the best way possible.

Advantages of Kanban

· It is very visual, so it is effective at communicating the work in progress to stakeholders and team members.

· It is very flexible. Team members pull work items one at a time, unlike Scrum’s batch cycle.

· Offers better coordination. The flexible structure, simplicity, visual nature, and lightweight features make it conducive to teamwork.

· Reduces the Work in Progress.

· Provides clear rules for every step of the process.

Disadvantages of Kanban

· Lack of deadlines. Without deadlines, teams may spend too long on specific tasks.

· Kanban column definition. It is challenging to define the columns for a data science Kanban board.

· The customer interaction is undefined. As such, customers may not feel committed to the process without the structured cadence of sprint reviews.

Research and Development for Data-Driven Project Management

R&D refers to the activities that businesses engage in to develop and introduce new products and services, as well as to improve their current offers. R&D is distinct from the majority of a company’s operating activity. Typically, research and development are not carried out with the hope of instant profit. Rather, it is intended to add to a company’s long-term profitability. As discoveries and products are developed, R&D may result in patents, copyrights, and trademarks.

Is it effective to use R&D approaches for Data-Driven Project Management?

Yes!

Data science comprises several steps, from exploratory data analysis (EDA) to model creation, and each phase necessitates its own set of experiments. The phases are interconnected and iterative. R&D is well suited to data-driven initiatives because it allows a lot of flexibility for creativity and for producing something new. R&D has gotten us to where we are now in the data science space. Organizations face a variety of data-driven issues that aren’t easily solved using normal procedures and practices.

Conclusion

As we come to the end of this article, it is important to familiarize ourselves with the different project management approaches and the ways they can be integrated into data science. By doing that, we will be able to discover and create a technique that works efficiently. There are many project management tools for maintaining and reporting on project progress. We can use those tools not only to keep senior management informed but also to help stakeholders record assumptions and project dependencies. Project success depends on numerous factors. Therefore, it is important to define and analyze key performance indicators at all stages of the project life cycle.

Here are additional resources to help you learn more about data-driven project management.

Reference list

Waterfall Model

https://binaryterms.com/waterfall-process-model.html

https://www.lucidchart.com/blog/pros-and-cons-of-waterfall-methodology

https://corporatefinanceinstitute.com/resources/knowledge/other/capability-maturity-model-cmm/

Ad Hoc

https://www.datascience-pm.com/tag/ad-hoc/

Agile

https://zenkit.com/en/blog/agile-methodology-an-overview/

https://projectmanagementacademy.net/agile-methodology-training

https://www.marketing91.com/agile-methodology/

https://www.digite.com/agile/scrum-methodology/

Kanban

https://www.paymoapp.com/blog/what-is-kanban/

https://bigdata-madesimple.com/why-apply-kanban-principles-big-data-projects/

Other Sources

DATA SCIENCE PROJECT MANAGEMENT METHODOLOGIES

https://www.dominodatalab.com/resources/field-guide/managing-data-science-projects/

https://www.datascience-pm.com/

https://xperra.com/blog/crispy.html

https://www.datascience-pm.com/crisp-dm-2/

https://www.agilealliance.org/agile101/12-principles-behind-the-agile-manifesto/

https://www.productboard.com/glossary/agile-values/

https://www.mygreatlearning.com/blog/why-using-crisp-dm-will-make-you-a-better-data-scientist/

Adapting Project Management Methodologies to Data Science was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
