https://ift.tt/3CgathH Want to apply AI in healthcare? Do these projects to hone your skills. Photo by Scott Graham on Unsplash Arist...

Want to apply AI in healthcare? Do these projects to hone your skills.

Aristotle once said, “For the things we have to learn before we can do them, we learn by doing them.” Our human brain learns best from observations, experiences, and the feedback loop of these two. Doing things yourself, especially when learning data science, builds rich experiences and results in observations aborning a long-lasting learning experience.

The major chunk of learning data science comes from “doing” data science projects, it helps in building deeper knowledge, greater retention, understanding of “actual” problems faced by data scientists and will hone your technical skills overall. Also, these data science projects will add to your portfolio or resume which will help you land a better job opportunity.

I have listed the following projects each focussing on a certain type of machine learning challenge and algorithm refurbishing your overall machine learning and data science skills.

Stroke Prediction

Liver cirrhosis prediction

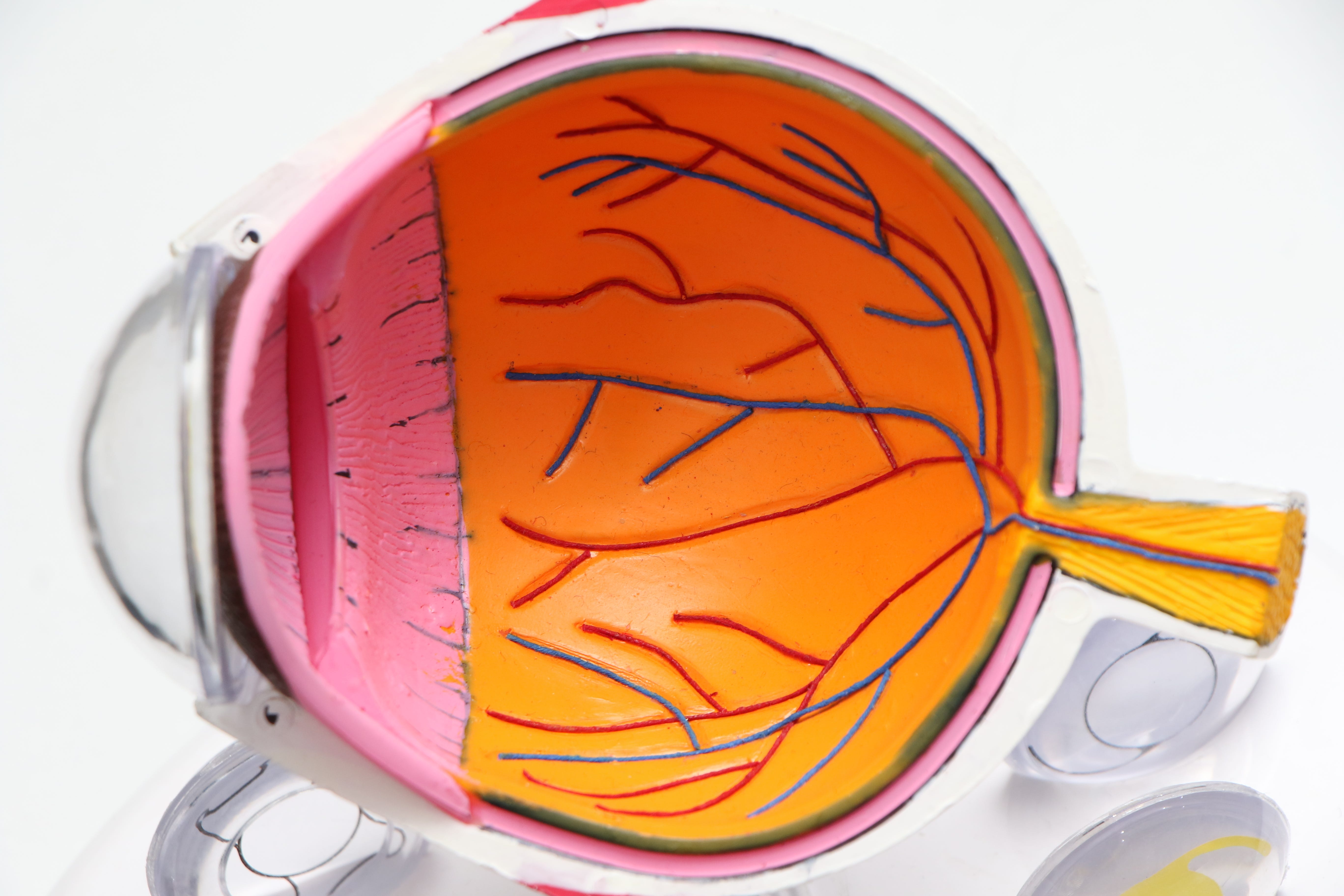

Diabetes Retinopathy

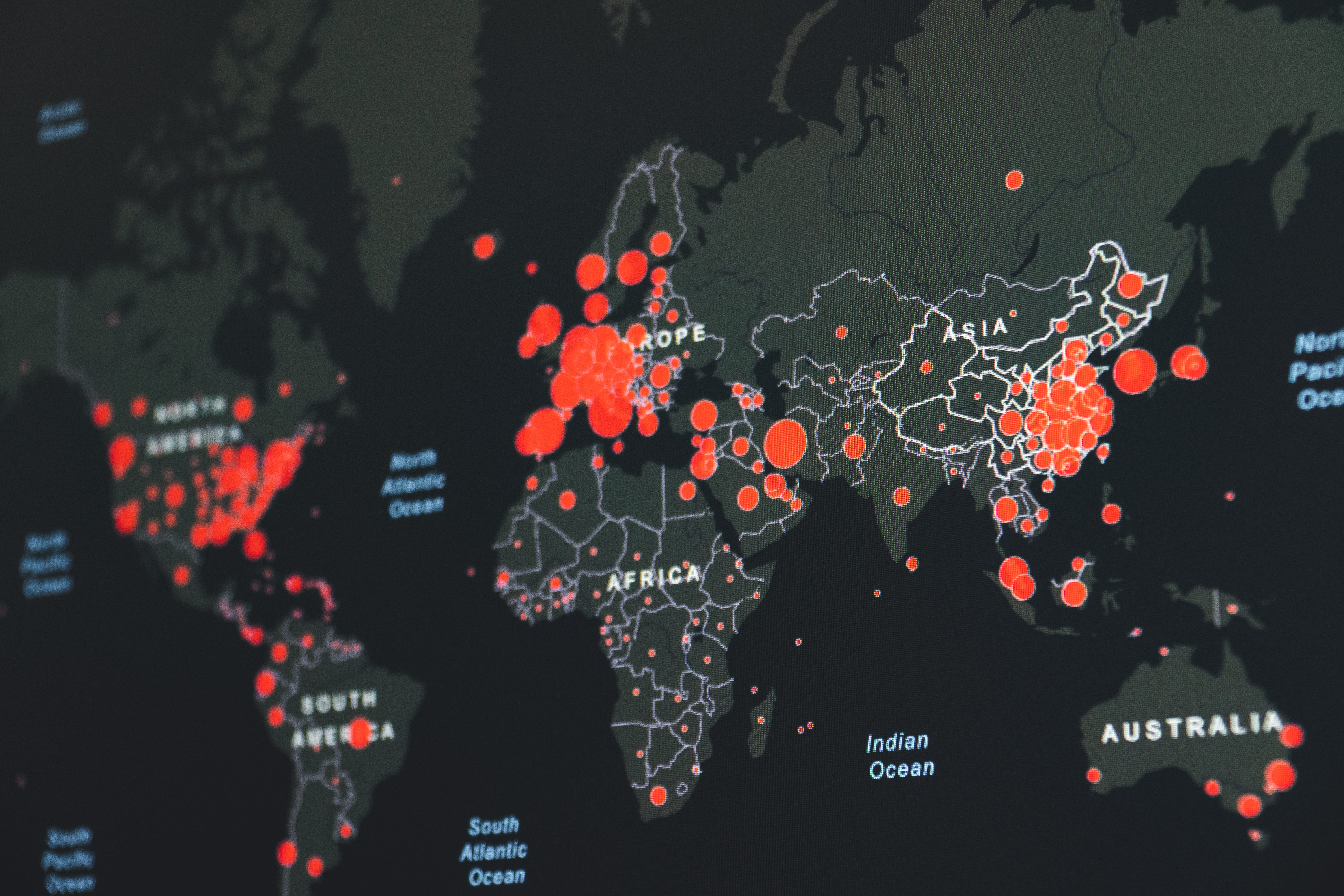

Time-series forecasting of Covid-19 cases and mortality rate

Breast Cancer Detection

Skills you will learn with the help of each project:

- Stroke Prediction: We will be applying Support Vector Machines to solve this problem. SVM is the most extensively used algorithm in the field of Healthcare because of some advantages it provides. Therefore, it is necessary to get a hold of this algorithm which will ultimately be very useful when applying it in the healthcare industry. We will also be performing some extensive exploratory data analysis for this project and will hone your EDA skills as well.

- Liver cirrhosis prediction: In this project, we will use xgboost which is a decision tree-based classifier. This project will hone our skills in the most-used ensemble learning method, xgboost. Also, we will refurbish our EDA skills too with this project.

- Diabetes Retinopathy: It is a computer vision problem. Computer vision is widely applied in the field of healthcare and is a must-have skill for anyone wishing to apply AI in healthcare mainly because a lot of healthcare data is in the form of diagnostic images e.g. MRI’s, etc.

- Time-series forecasting of Covid-19 cases and mortality rate: This project involves time-series analysis of the covid-19 related data. Time-series analysis is a great skill set to have in your toolbox of machine learning. The main objective of this project is to understand how the cases of covid-19 are progressing within each region individually and then on a global scale as a whole. During the hiring process, having time-series models on your portfolio will give you an extra edge and display the diversity of knowledge you have in data science. Also, drafting key insights from the data after careful data analysis is one of the most underrated skills a data scientist must have. This project will also help you build critical-thinking skills required for solving complex problems and data analytics.

- Breast Cancer Detection: In this project, we will use a deep learning technique. It is one of the most important and advanced parts of machine learning in general. Honing your skills in deep learning techniques is a must, this project will help you to do just that.

Applying machine learning in healthcare is a challenge because of data scarcity, poor quality, and inconsistency. Each of the projects, in general, will help you prepare for this challenge as each of the datasets have one or more such issues. By applying the techniques mentioned below you can learn to build your skills to deal with data issues better which will help you ace your interview and land a great job.

In each project, I will be providing the objective of the problem, dataset link, algorithm to be used and the implementation procedure. It is important to do these projects on your own, therefore I wont be providing the full code. But hey, dont worry! I will be providing a step by step roadmap of the implementation process and also be listing some important libraries and functions to be used in each project. So stick with me.

Now let’s delve deeper into the projects!

1. Stroke Prediction:

In this project, we will predict whether a patient will have a heart stroke or not based on his/her comorbidities, work, and lifestyle. Projects like these are widely applied in the healthcare sector, doing this project will impart you a better understanding of analysis of data, data cleaning, data visualizations, and algorithms.

Data set — The stroke data is available on Kaggle.

This dataset lists multiple features like gender, age, glucose level, BMI, smoking status, other comorbidities, etc., and the target variable: stroke. Each row specifies a patient’s relevant information. Following are the features listed in the dataset:

1) id: unique identifier

2) gender: “Male”, “Female” or “Other”

3) age: age of the patient

4) hypertension: 0 if the patient doesn’t have hypertension, 1 if the patient has hypertension

5) heart_disease: 0 if the patient doesn’t have any heart diseases, 1 if the the patient has a heart disease

6) ever_married: “No” or “Yes”

7) work_type: “children”, “Govt_jov”, “Never_worked”, “Private” or “Self-employed”

8) Residence_type: “Rural” or “Urban”

9) avg_glucose_level: average glucose level in blood

10) BMI: body mass index

11) smoking_status: “formerly smoked”, “never smoked”, “smokes” or “Unknown”*

12) stroke: 1 if the patient had a stroke or 0 if not

This dataset lists all the relevant information required to predict stroke chances and these identifiers are often used by medical practitioners as well. These input parameters can be used to predict the chances of stroke in a patient.

Algorithm:

This can be implemented using Support Vector Machines. It is advantageous for applications with a small sample size. SVM has demonstrated high performance in solving classification problems in bioinformatics.These are the reasons why it is used so extensively in the healthcare sector.

Fit the data with a linear SVM. Import the library as:

from sklearn.svm import SVC

Now, .fit a Gaussian kernel SVC and see how the decision boundary changes. Use the “rbf” kernel. Apply this using this function:

SVC_Gaussian = SVC(kernel=’rbf’)

You can also use the Nystroem method. Import the library as:

from sklearn.kernel_approximation import Nystroem

Implementation:

First of all, do some data cleaning. A caveat for using this data set is that it has certain null values and outliers, you can either delete them or replace them with a median value. After that, perform data visualization to understand the underlying relationships and dependencies within the data. Create cat plots, heatmaps, pairplots and boxplots for different features of the data set to look for any relationships between the features and the target variable.

After that, split the data into train and test sets. Train and then predict the random forest model on the data set. In the end get the precision, recall, accuracy scores to check the model performance. From sklearn.metrics, you can import classification_report, accuracy_score, precision_score, recall_score to check the performance metrics.

2. Liver cirrhosis prediction:

Liver cirrhosis is a widespread problem especially in North America due to high intake of alcohol. In this project, we will predict liver cirrhosis in a patient based on certain lifestyle and health conditions of a patient.

Data set: This data set is available on Kaggle which was collected from the Mayo Clinic trial.

This data set has about 20 features. These features are related to the patient’s details like age, sex, etc. and patient’s blood tests like prothrombin, triglycerides, platelets levels, etc. All these factors help in understanding a patient’s chances of liver cirrhosis.

Again, the challenges for this dataset are the null values which need to either be replaced or deleted. Also, this data set has unbalanced classes, so it might require up-sampling the dataset.

Algorithm:

We can use the random forest classifier for this as well as the XGBoost Classifier for this model. A good practice is to train the data on both of these models and choose the model with the best scores. From xgboost, we can import XGBClassifier.

Implementation:

First, perform some data cleaning, check the outliers and null values. Outliers can be checked by calculating the z-score of the dataset and keeping a threshold value beyond which the data values will be considered outliers. Then do some Exploratory data analysis and visualizations to understand the data better. When splitting the data into training and test sets, use Stratified K-fold to ensure the target variable is equally balanced in all the test and training splits. It can be done by importing StratifiedKFold from sklearn.model_selection. Then train the data on both the models and choose the model based on the performance metrics like precision, recall, accuracy, etc. From sklearn.metrics, you can import classification_report, accuracy_score, precision_score, recall_score to check the performance metrics.

3. Diabetes Retinopathy:

The objective of this project is to identify and predict chances of damage to the blood vessels in the tissue at the back of the eye (retina) in diabetic patients called diabetic retinopathy.

Data set — this dataset can be found on Kaggle

Algorithm:

This is a computer vision problem and we will be applying a deep learning technique for it. We will use U-Net architecture to train the data. To implement U-Net we will use keras which is a powerful deep learning library run on top of TensorFlow. Import the libraries as:

from keras.models import Sequential

from keras.layers import Dense, Conv2D, MaxPooling2D, Dropout,Flatten

There are different stages and layers in the U-Net architecture. Mainly it has three phases: Expansion, Concatenation and then Contraction phase.

Implementation:

First of all, data(the image)is loaded and preprocessed and converted into an array format. The array values represent the pixel intensities.

After data preprocessing, split the data into train and test split. This split has to be stratified to ensure the ratio of both labels are proportionate and gauge the real distribution of retinopathy patients to healthy patients. The stratified k-fold split is especially important in healthcare applications because it can otherwise lead to biases in disease prediction and detection. It can be done by importing this library:

import StratifiedKFold from sklearn.model_selection

Check how the model performs by looking at the losses of the training set and test set. Plot the graphs for both the sets and compare the results.

4. Time-series forecasting of Covid-19 cases and mortality rate

In this project, the objective is to identify the nature of the pandemic and forecast its progression.

Data set — This data set is available on this link but is originally from John Hopkins University.

This dataset contains information on covid-19 progression, it has 6 parameters:

id, province state, country, confirmed cases, fatalities

Objective and Implementation:

You can first visualize the data to look for possible trends within the data. Analyze the time series, especially the number of fatalities and the confirmed cases for different regions and how they vary with region.

The main objective of this project is to understand how the cases of covid-19 are progressing within each region individually and then on a global scale as a whole. Draft key insights from the data after careful data analysis. An important skill of a data scientist apart from writing code and machine learning is the ability to draw insights from the data which is powered by critical thinking.

Within the dataset look for “how the cases rose within a certain time frame and for certain regions more than the other regions”, “regions where the slope of cases per time is higher”, “the window for maximum increase in evolution of fatalities per region”.

All of this information is critical in monitoring the spread of the disease, devising pandemic-related strategies e.g. lockdown planning etc.

Algorithm:

Use the ARIMA model for this problem. to implement this import the library:

from statsmodels.tsa.arima_model import ARIMA

You can also use a simple RNN model for this as well.

Check the distribution of cases with plots of Rolling mean and standard deviation. You can also check the stationarity of the time series using the Dickey fuller test.

Split the data into train and test sets. Calculate the Mean absolute percentage error and the confidence interval.

5. Breast Cancer Prediction:

In this project, we will predict whether a patient has breast cancer or not.

Data set — The breast cancer data set can be found on the UCI ML repository and on Kaggle as well.

Algorithm and Implementation:

In this project, we will predict breast cancer based on fluid samples, taken from patients with solid breast masses. Based on certain features of the cell nucleus like “perimeter”, “area”, “texture”, “concavity”, etc. we can predict whether the breast cancer is malignant or benign.

The data includes few categorical features, they need to be converted to numerical features so that any machine learning model can process them. We can use the “get_dummies” or “one_hot_encoder” function of sklearn library for it.

After some data processing, apply a machine learning algorithm. Apply a Deep Neural Network for this problem. To implement it import these libraries:

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Activation

We can find the best model by changing the hyperparameters like “optimizer”, “loss function” etc. You can try making two models with different loss functions and optimizers — loss=’binary_crossentropy’ and “mean_squared_error”, optimizer= “rmsprop” and “adam”. Check the best model between the model types based on the performance metrics.

From sklearn.metrics, you can import classification_report, accuracy_score, precision_score, recall_score to check the performance metrics.

5 Data Science Projects in Healthcare that will get you hired was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

from Towards Data Science - Medium https://ift.tt/3vHNdXk

via RiYo Analytics

ليست هناك تعليقات